Real-Time Grasp Type Recognition Using Leap Motion Controller

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


{Download PDF} High Five with Julius

HIGH FIVE WITH JULIUS PDF, EPUB, EBOOK Paul Frank | 10 pages | 24 Feb 2010 | CHRONICLE BOOKS | 9780811871471 | English | California, United States High Five with Julius PDF Book Rate this:. My 3-year-old loved giving each character a high-five and then her hand would linger as she felt the different textures. English On Shelf. How Can We Help? As two-way G-League point guard Kadeem Allen bounced away the final seconds, a large throng of Knicks fans stood up and roared as the club moved to Big Deal, Baby. McGraw Hill. Nice Partner! Randle and others talked about a lack of focus at the morning shootaround Monday. You are commenting using your WordPress. Table of Contents. Universal Conquest Wiki. Smiling this much is so heartwarming! It did the trick. On Shelf. I think focusing primarily on the individual is definitely the first step. A medical study found that fist bumps and high fives spread fewer germs than handshakes. Welcome to another Write a Review Wednesday , a meme started by Tara Lazar as a way to show support to authors of kids literature. Each spread encourages kids to celebrate amazing everyday achievementsfrom sharing their toys to just being themselvesand features a touch-and-feel texture that will keep little ones engaged as they strive to be and do their very best. Redirected from High-five. National High Five Day is a project to give out high fives and is typically held on the third Thursday in April. Categories : introductions American cultural conventions Hand gestures. By continuing to use this website, you agree to their use. -

CGI Developer's Guide by Eugene Eric Kim

CGI Developer's Guide By Eugene Eric Kim Introduction Chapter 1 Common Gateway Interface (CGI) What Is CGI? Caveats Why CGI? Summary Choosing Your Language Chapter 2 The Basics Hello, World! The <form> Tag Dissecting hello.cgi The <input> Tag Hello, World! in C Submitting the Form Outputting CGI Accepting Input from the Browser Installing and Running Your CGI Program Environment Variables Configuring Your Server for CGI GET Versus POST Installing CGI on UNIX Servers Encoded Input Installing CGI on Windows Parsing the Input Installing CGI on the Macintosh A Simple CGI Program Running Your CGI General Programming Strategies A Quick Tutorial on HTML Forms Summary Chapter 3 HTML and Forms A Quick Review of HTML Some Examples HTML Basic Font Tags Comments Form The <head> Tag Ordering Food Forms Voting Booth/Poll The <FORM> Tag Shopping Cart The <INPUT> Tag Map The <SELECT> Tag Summary The <TEXTAREA> Tag Chapter 4 OutPut Revised January 20, 2009 Page 1 of 428 CGI Developer's Guide By Eugene Eric Kim Header and Body: Anatomy of Server Displaying the Current Date Response Server-Side Includes HTTP Headers On-the-Fly Graphics Formatting Output in CGI A "Counter" Example MIME Counting the Number of Accesses Location Text Counter Using Server-Side Includes Status Graphical Counter Other Headers No-Parse Header Dynamic Pages Summary Using Programming Libraries to Code CGI Output Chapter 5 Input Background cgi-lib.pl How CGI Input Works cgihtml Environment Variables Strategies Encoding -

Introduction What’s Race Got to Do with It? Postwar German History in Context

After the Nazi Racial State: Difference and Democracy in Germany and Europe Rita Chin, Heide Fehrenbach, Geoff Eley, and Atina Grossmann http://www.press.umich.edu/titleDetailDesc.do?id=354212 The University of Michigan Press, 2009. Introduction What’s Race Got to Do With It? Postwar German History in Context Rita Chin and Heide Fehrenbach In June 2006, just prior to the start of the World Cup in Germany, the New York Times ran a front-page story on a “surge in racist mood” among Germans attending soccer events and anxious officials’ efforts to discourage public displays of racism before a global audience. The article led with the recent experience of Nigerian forward Adebowale Ogungbure, who, after playing a match in the eastern German city of Halle, was “spat upon, jeered with racial remarks, and mocked with monkey noises” as he tried to exit the field. “In rebuke, he placed two fingers under his nose to simulate a Hitler mustache and thrust his arm in a Nazi salute.”1 Although the press report suggested the contrary, the racist behavior directed at Ogungbure was hardly resurgent or unique. Spitting, slurs, and offensive stereotypes have a long tradition in the German (and broader Euro-American) racist repertoire. Ogungbure’s wordless gesture, moreover, gave the lie to racism as a worrisome product of the New Europe or even the new Germany. Rather, his mimicry efficiently suggested continuity with a longer legacy of racist brutality reaching back to the Third Reich. In effect, his response to the antiblack bigotry of German soccer fans was accusatory and genealogically precise: it screamed “Nazi!” and labeled their actions recidivist holdovers from a fanatical fascist past. -

Research Article Automated Region Extraction from Thermal Images for Peripheral Vascular Disease Monitoring

Hindawi Journal of Healthcare Engineering Volume 2018, Article ID 5092064, 14 pages https://doi.org/10.1155/2018/5092064 Research Article Automated Region Extraction from Thermal Images for Peripheral Vascular Disease Monitoring Jean Gauci,1 Owen Falzon,1 Cynthia Formosa,2 Alfred Gatt,2 Christian Ellul,2 Stephen Mizzi,2 Anabelle Mizzi,2 Cassandra Sturgeon Delia,3 Kevin Cassar,3 Nachiappan Chockalingam,4 and Kenneth P. Camilleri1 1Centre for Biomedical Cybernetics, University of Malta, Msida MSD2080, Malta 2Department of Podiatry, University of Malta, Msida MSD2080, Malta 3Department of Surgery, University of Malta, Msida MSD2080, Malta 4Faculty of Health Sciences, Staffordshire University, Staffordshire ST4 2DE, UK Correspondence should be addressed to Jean Gauci; [email protected] Received 18 June 2018; Revised 26 October 2018; Accepted 15 November 2018; Published 13 December 2018 Guest Editor: Orazio Gambino Copyright © 2018 Jean Gauci et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. This work develops a method for automatically extracting temperature data from prespecified anatomical regions of interest from thermal images of human hands, feet, and shins for the monitoring of peripheral arterial disease in diabetic patients. Binarisation, morphological operations, and geometric transformations are applied in cascade to automatically extract the required data from 44 predefined regions of interest. The implemented algorithms for region extraction were tested on data from 395 participants. A correct extraction in around 90% of the images was achieved.
The process of automatically extracting 44 regions of interest was performed in a total computation time of approximately 1 minute, a substantial improvement over the 10 minutes it took for a corresponding manual extraction of the regions by a trained individual. -

Drome De ROSENTHAL (II) 14944 Rc)SSLE's Syndrome

ROSSLE'S 14944-14967 Saccharomyces halluzinatorisches Angst-Syndrom) / syn 14954 rules, pregnancy control / Schwanger drome de ROSENTHAL (II) schaftskontrollregeln f (1. moglichst nicht 14944 Rc)SSLE'S syndrome / ROSSLE' Syndrom (Se invasiv [Ultraschall], 2. nur mit kurzlebigen xogener Kleinwuchs) / nanisme sexoge Radionukliden, vor allem zur Diagnose der nique Nieren- und Plazentafunktion, erst ab 3. 14945 rotation, chromosomal / Rotation, chromo Trimenon [JANISCH]) / regles de controle de somale f (in Bivalenten mit Chiasma zwi grossesse f schen Diplotan und Diakinese eintretende 14955 rules, sense for / Gefuhl fur Regeln n Formverandrung) / rotation chromosomi (psych.) / regles, sens pour m quef 14956 ruling forecast; science forecasting / Richtli 14946 round pronator syndrome / Pronator-teres nienprognose; Wissenschaftsprognose f / Syndrom (Schwache in den langen Finger prevision directrice; prevision scientifique f beugem) / syndrome de pronateur rond 14957 rumors / Geriichte n (konnen aus Traumen 14947 ROVIRALTA'S syndrome / ROVIRALTA' Syn entstehen) / rumeurs f drom (Pylorusstenose und Hiatushemie 14958 running, dancing / Laufen, tanzerisches n mit Magenektopie beim Saugling) / syn (tagliches ohne Leistungsdruck bis zur Er drome phrenopylorique (ROVIRALTA) miidung, besser als Radfahren, Schwim 14948 ROWLEY'S syndrome / ROWLEY' Syndrom men, Reiten, halt aerobe Kapazitat kon (SchwerhOrigkeit mit Halsfisteln) / syn stant, eroffnet Kollateralen und vermehrt drome de ROWLEY Zahl und GroBe der Mitochondrien) / cou 14949 ROWLEy-ROSENBERG syndrome / ROWLEY rir dansant m ROSENBERG' Syndrom (Gestorte Riickab 14959 rupture of the bladder / Blaseruptur f(klin. sorption fast aller Aminosauren) / syn Leitsymptom: blutige Dysurie; sichre Dia drome de ROWLEy-ROSENBERG gnose: Zystographie mit Kontrastmittel, 14950 rubb~~g, emotional/ Reibung, emotionale f wenn nicht moglich, Probelaparotomie und (bei UbervOlkrung groBres Problem als bei jeder gesicherten Ruptur sofortige Op. 
-

Animatronic Wireless Hand

ANIMATRONIC WIRELESS HAND A PROJECT REPORT Submitted by J. JANET M. KAVITHA R. KIRAN CHANDER in partial fulfillment for the award of the degree of BACHELOR OF ENGINEERING IN ELECTRICAL AND ELECTRONICS ENGINEERING EASWARI ENGINEERING COLLEGE, CHENNAI-89 ANNA UNIVERSITY: CHENNAI-600 025 APRIL 2019 ii ANNA UNIVERSITY: CHENNAI 600 025 BONAFIDE CERTIFICATE Certified that this project report “ANIMATRONIC WIRELESS HAND” is the bonafide work of “J.JANET (310615105030), M.KAVITHA (310615105033) and R.KIRAN CHANDER (310615105035)” who carried out the project work under my supervision. SIGNATURE SIGNATURE Dr.E.KALIAPPAN,M.Tech., Ph.D., Ms.B.PONKARTHIKA,M.E., HEAD OF THE DEPARTMENT SUPERVISOR ASSISTANT PROFESSOR DEPARTMENT OF ELECTRICAL AND DEPARTMENT OF ELECTRICAL AND ELECTRONICS ENGINEERING ELECTRONICS ENGINEERING EASWARI ENGINEERING COLLEGE EASWARI ENGINEERING COLLEGE RAMAPURAM - 600089 RAMAPURAM – 600089 Submitted for the University examination held for project work at Easwari Engineering College on ……………………… INTERNAL EXAMINER EXTERNAL EXAMINER iii ACKNOWLEDGEMENT We would like to express our sincere thanks to our respected chairman, Dr. R. SHIVAKUMAR, M.D., Ph.D., for providing us with requisite infrastructure throughout the course. We would like to express our sincere thanks to our beloved Principal Dr. K. KATHIRAVAN, M.Tech., Ph.D., for his encouragement. We are highly indebted to the Department of Electrical and Electronics Engineering for providing all the facilities for the successful completion of the project. With a deep sense of gratitude, we would like to sincerely thank our Head of the Department Dr.E.KALIAPPAN, M.Tech., Ph.D., for his constant support, encouragement and valuable guidance for our project work.
We are extremely grateful to our project supervisor Ms.B.PONKARTHIKA M.E., Assistant Professor, Department of Electrical and Electronics Engineering for her constant guidance, suggestions and kind help in bringing out this project within the scheduled time frame. -

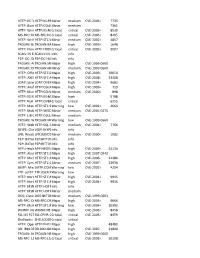

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind" Remote DoS | HTTP:IIS:PROPFIND | Minor | medium | CVE-2003-0226 | 7735
HTTP: Ikonboard Illegal Cookie Language | HTTP:CGI:IKONBOARD-BADCOOKIE | Minor | medium | | 7361
HTTP: Windows Media Services NSIISlog.DLL Buffer Overflow | HTTP:IIS:NSIISLOG-OF | Critical | critical | CVE-2003-0349 | 8035
MS-RPC: DCOM Exploit | MS-RPC:DCOM:EXPLOIT | Critical | critical | CVE-2003-0352 | 8205
HTTP: WinHelp32.exe Remote Buffer Overrun (2) | HTTP:STC:WINHELP32-OF2 | Minor | medium | CVE-2002-0823 | 4857
TROJAN: Back Orifice 2000 Client Connection | TROJAN:BACKORIFICE:BO2K-CONNECT | Major | high | CVE-1999-0660 | 1648
HTTP: Frontpage fp30reg.dll Overflow | HTTP:FRONTPAGE:FP30REG.DLL-OF | Critical | critical | CVE-2003-0822 | 9007
SCAN: IIS Enumeration | SCAN:II:IIS-ISAPI-ENUM | Info | info | |
P2P: DC: Direct Connect Plus Plus Client Hub Login | P2P:DC:HUB-LOGIN | Info | info | |
TROJAN: AOL Admin Server Response | TROJAN:MISC:AOLADMIN-SRV-RESP | Major | high | CVE-1999-0660 |
TROJAN: Digital Rootbeer Client Connect | TROJAN:MISC:ROOTBEER-CLIENT | Minor | medium | CVE-1999-0660 |
HTTP: Office Art Property Table Buffer Overflow | HTTP:STC:DL:OFFICEART-PROP | Major | high | CVE-2009-2528 | 36650
HTTP: AXIS Communications Camera Control (AxisCamControl.ocx) Unsafe ActiveX Control | HTTP:STC:ACTIVEX:AXIS-CAMERA | Major | high | CVE-2008-5260 | 33408
LDAP: Ipswitch IMail LDAP Daemon Remote Buffer Overflow | LDAP:OVERFLOW:IMAIL-ASN1 | Major | high | CVE-2004-0297 | 9682
HTTP: Anyform Semicolon | HTTP:CGI:ANYFORM-SEMICOLON | Major | high | CVE-1999-0066 | 719
HTTP: Mini SQL w3-msql File View Disclosure | HTTP:CGI:W3-MSQL-FILE-DISCLSR | Minor | medium | CVE-2000-0012 | 898
HTTP: IIS MFC ISAPI Framework Overflow (via | HTTP:IIS:MFC-EXT-OF | Major | high
-

Schweizer / WS 09/10 - 1 - 18

Schweizer / WS 09/10 - 1 - 18. Feb. 2011 Schweizer / WS 09/10 - 2 - 18. Feb. 2011 Pragmatik I - Beschreibung von 1. Impulse zur Arbeitsfeldumschreibung 1.1 Sprachkritik als Ideologiekritik: "Im Interesse..." Text, Ko-Text und situativem Kontext 1.2 Wortsinn und Kommunikationssituation: Kaspar Hauser 1.3 Stilistik, Rhetorik - Regeln zur Textgestaltung? 1.4 Segmentierung einer Kommunikation: top down: A users gram- - Harald Schweizer - mar 1.5 Streit unter ComputerlinguistInnen: top down oder bottom Di 17-19 Uhr Sand 6, Hs 2 up? 1.6 Wortformen-Syntax vs. kontextbezogene Semantik (TG) = eigen- ständige Strukturen: Poet K. Marti Unter: http://www-ct.informatik.uni-tuebingen.de/index.htm LEH- 1.7 Schrittweise Enthüllung der gemeinten Bedeutung: Wertungen RE LEHRANGEBOT ist dieses file (Gliederung/Literaturliste/Mate- 1.8 Politische Semantik: Vordenker 1.9 Theoretische Aufarbeitung rialien) zugänglich 1.91 Definitionsversuch 1.92 Einbeziehung nicht-sprachlicher Botschaften 1.93 Kommunikationsmodell - etwas verändert 1.94 GRICE: Implikatur (im Gegensatz zu Implikation) Fakultät für Informations- und Kognitionswissenschaften 1.95 Implikation (im Gegensatz zu Implikatur) 1.96 Besteht eine Grammatik aus Regeln? Arbeitsbereich Textwissenschaft 1.97 Versuch einer Zusammenfassung 2. Kontextbildende Elementare Mechanismen/textgrammatisch 2.1 Überprüfung der Prädikatbedeutungen 2.11 "Echte" Prädikate = Außenweltveränderungen, persönlich zure- Sand 13 chenbar, nicht lediglich Ortsveränderung 2.12 Illokutionssignale 72076 Tübingen 2.13 Interpretamente (Adjunktion) [email protected] 2.14 Weitgefaßter Terminus "Modalverb" 2.15 Deixis: Topologie/Chronologie Fon: 29-75248 2.16 Weitere Rezeptionssteuerungen Fax: 29-5060 2.2 Kontextbildung im Wortsinn 2.3 Revision der semantischen Prädikation: tg Verschiebung 2.4 Textdeiktische Elemente: Anaphern/Kataphern Sprechstunden: Mi 11-13 (bzw. -

“In the Name of the Holy Trinity”: Examining Credibility Under Anarchy Through 250 Years of Treaty-Making

"In The Name of the Holy Trinity": Examining Credibility Under Anarchy Through 250 Years of Treaty-Making Krzysztof Pelc† November 2019, Prepared for IPES 2019 Abstract Where does the binding force of international treaties come from? This article considers three centuries of international peace treaties to chart how signatories have sought to convince one another of the viability of their commitments. I show how one means of doing so was by invoking divine authority: treaty violations were punished by divine sanction in heaven and excommunication on earth. Anarchy, "the fundamental assumption of international politics," is commonly defined as "the absence of a supreme power." Yet an examination of peace treaties from the 1600s onwards suggests that for much of the post-Westphalian era, sovereigns would not have envisioned themselves as operating under anarchy. Rather, they strategically invoked divine authority to add credibility to their commitments. Signatories facing a high probability of war are seen relying more heavily on invocations of divine authority. Strikingly, treaties that invoke divine authority then show a greater conflict-abating effect. Using automated text analysis of two thousand peace, commerce, and navigation treaties spanning 250 years, I show how treaty performance was affected when signatories lost the ability to invoke the divine as a means of binding themselves. God appears to be statistically significant. †Department of Political Science, McGill University, [email protected]. 1 Introduction "The Ratification of the -

Author Guidelines for 8

HAND GESTURE RECOGNITION USING A SKELETON-BASED FEATURE REPRESENTATION WITH A RANDOM REGRESSION FOREST Shaun Canavan, Walter Keyes, Ryan Mccormick, Julie Kunnumpurath, Tanner Hoelzel, and Lijun Yin Binghamton University

ABSTRACT In this paper, we propose a method for automatic hand gesture recognition using a random regression forest with a novel set of feature descriptors created from skeletal data acquired from the Leap Motion Controller. The efficacy of our proposed approach is evaluated on the publicly available University of Padova Microsoft Kinect and Leap Motion dataset, as well as 24 letters of the English alphabet in American Sign Language. The letters that are dynamic (e.g. j and z) are not evaluated. Using a random regression forest to classify the features we achieve 100% accuracy on the University of Padova Microsoft Kinect and Leap Motion dataset. We also constructed an in-house dataset using the 24 static letters of the English alphabet in ASL. A classification rate of 98.36% was achieved on this dataset. We also show that our proposed method outperforms the current state of the art on the University of Padova Microsoft Kinect and Leap Motion dataset.

Index Terms: Gesture, Leap, ASL, recognition

Figure 1. Proposed gesture recognition overview.

and because of this it is natural to use this camera for hand gesture recognition. The Leap skeletal tracking model gives us access to the following information that we use to create our feature descriptors: (1) palm center; (2) hand direction; (3) fingertip positions; (4) total number of fingers (extended and non-extended); and (5) finger pointing directions. Using this information from the Leap we propose six new feature descriptors which include (1) extended finger binary representation; (2) max finger range; (3) total finger area; (4) finger length-width ratio; and (5-6) finger directions and -

Communication Practices in Forest Environmental Education Elizabeth Dickinson

University of New Mexico UNM Digital Repository Communication ETDs Electronic Theses and Dissertations 6-25-2010 Constructing, Consuming, and Complicating the Human-Nature Binary: Communication Practices in Forest Environmental Education Elizabeth Dickinson Follow this and additional works at: https://digitalrepository.unm.edu/cj_etds Recommended Citation Dickinson, Elizabeth. "Constructing, Consuming, and Complicating the Human-Nature Binary: Communication Practices in Forest Environmental Education." (2010). https://digitalrepository.unm.edu/cj_etds/13 This Dissertation is brought to you for free and open access by the Electronic Theses and Dissertations at UNM Digital Repository. It has been accepted for inclusion in Communication ETDs by an authorized administrator of UNM Digital Repository. For more information, please contact [email protected]. CONSTRUCTING, CONSUMING, AND COMPLICATING THE HUMAN-NATURE BINARY: COMMUNICATION PRACTICES IN FOREST ENVIRONMENTAL EDUCATION BY ELIZABETH A. DICKINSON B.A., Communication Studies, California State University, San Bernardino, 1996 M.A., Communication Studies, New Mexico State University, 1998 DISSERTATION Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy Communication The University of New Mexico Albuquerque, New Mexico May, 2010 iii DEDICATION This project is dedicated to my two inspirations over the last year and a half—my young precious niece, Audrey K. Dickinson, and the beautiful forests with whom I spent so much time. May you flourish and guide us through our challenging and ever-changing human ways. iv ACKNOWLEDGMENTS I am grateful and indebted to those who made this project a great joy. This experience was significant due to the relationships I forged, with people and nature alike. In keeping with a theme in this project, I use the “parts of a tree” as a metaphor to recognize the individuals who made my journey memorable. -

Numbering Systems a Number Is a Basic Unit of Mathematics. Numbers

Numbering Systems

A number is a basic unit of mathematics. Numbers are used for counting, measuring, and comparing amounts. A numeral system is a set of symbols, or numerals, that are used to represent numbers. The most common numeral system uses 10 symbols called digits (0, 1, 2, 3, 4, 5, 6, 7, 8, and 9) and combinations of these digits.

Numeral systems are classified by base:
• Base 2 - Binary
• Base 8 - Octal
• Base 10 - Decimal
• Base 16 - Hexadecimal

The base is the number of unique digits, including zero, that a numeral system uses to represent numbers. For example, the decimal system has base ten because it uses the ten digits 0 through 9. Put another way, the highest digit a single position can hold is one less than the base (9 in decimal, 1 in binary).

Binary
• Binary is a base-2 numeral system that is widely used in computer science and in devices such as mobile phones. It represents numbers with two symbols: 1 and 0.
• Digital systems, such as digital clocks and phone signals, use binary.
• Binary can be used for mathematical calculations. Basic operations such as addition, subtraction and multiplication work as follows.

Addition
• 1 + 1 = 0 (with a carry of 1 into the next column)
• 1 + 0 = 1
• 0 + 0 = 0

Subtraction
• 0 - 0 = 0
• 1 - 0 = 1
• 1 - 1 = 0
• 0 - 1 = 1 with a borrow from the next column

Multiplication
• 0 x 0 = 0
• 0 x 1 = 0
• 1 x 0 = 0
• 1 x 1 = 1

Finger Binary
Finger binary is a method of counting and displaying binary numbers using the fingers of your hands.
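The ideas in this excerpt (writing a number in a given base, adding binary numbers column by column with a carry, and reading a finger-binary hand) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `to_base`, `add_binary`, and `finger_binary` are made up for this example, and the choice of which finger stands for the value 1 is an assumption, since the excerpt does not say.

```python
DIGITS = "0123456789ABCDEF"  # enough symbols for bases 2 through 16


def to_base(n: int, base: int) -> str:
    """Write a non-negative integer n using the digits of the given base."""
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    if n < 0:
        raise ValueError("n must be non-negative")
    if n == 0:
        return "0"
    out = []
    while n > 0:
        n, remainder = divmod(n, base)  # peel off the lowest digit
        out.append(DIGITS[remainder])
    return "".join(reversed(out))


def add_binary(a: str, b: str) -> str:
    """Add two binary strings column by column, carrying 1 when a column sums to 2 or 3."""
    result = []
    carry = 0
    i, j = len(a) - 1, len(b) - 1
    while i >= 0 or j >= 0 or carry:
        total = carry
        if i >= 0:
            total += int(a[i])
            i -= 1
        if j >= 0:
            total += int(b[j])
            j -= 1
        result.append(str(total % 2))  # digit written in this column
        carry = total // 2             # carry into the next column
    return "".join(reversed(result))


def finger_binary(fingers_up: list[bool]) -> int:
    """Read raised fingers as bits.

    Assumption: index 0 is the finger worth 1, index 1 is worth 2, and so on.
    """
    return sum(1 << k for k, up in enumerate(fingers_up) if up)


print(to_base(10, 2))                                    # decimal 10 in binary
print(add_binary("1", "1"))                              # 1 + 1 with a carry
print(finger_binary([True, False, True, False, False]))  # fingers worth 1 and 4 raised
```

With five fingers on one hand, `finger_binary` can represent the values 0 through 31, which is why finger binary counts much further than ordinary finger counting.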