Advances in Distributed Operations and Mission Activity Planning for Mars Surface Exploration

Total Pages: 16

File Type: PDF, Size: 1020 Kb

Recommended publications

-

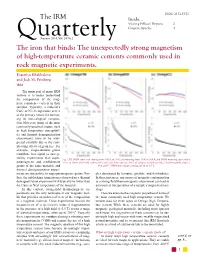

The Iron That Binds: The Unexpectedly Strong Magnetism of High-Temperature Ceramic Cements Commonly Used in Rock Magnetic Experiments

ISSN: 2152-1972. The IRM Quarterly, Summer 2014, Vol. 24 No. 2. Inside: Visiting Fellows' Reports; Current Articles. The iron that binds: The unexpectedly strong magnetism of high-temperature ceramic cements commonly used in rock magnetic experiments. Evgeniya Khakhalova and Josh M. Feinberg, IRM. The main goal of many IRM visitors is to better understand the composition of the magnetic remanence carriers in their samples. Typically, a material’s Curie or Néel temperature serves as the primary means for estimating its mineralogical composition. However, many of the most commonly used techniques, such as high temperature susceptibility and thermal demagnetization experiments, have to be interpreted carefully due to the complicating effects of grain size. For example, single-domain grains contribute less signal to susceptibility experiments than superparamagnetic and multidomain grains of the same material, and thermal demagnetization experiments are insensitive to superparamagnetic grains. Further, the unblocking temperatures observed in a thermal demagnetization experiment will typically be lower than the Curie or Néel temperature of the material. In this context, strong-field thermomagnetic ex[…] […]ples dominated by hematite, goethite, and ferrihydrite). In these instances, any source of magnetic contamination in a strong-field thermomagnetic experiment can lead to an incorrect interpretation of a sample’s magnetic mineralogy. Fig 1. RT SIRM curves on cooling from 300 K to 10 K and warming from 10 K to 300 K and SIRM warming curves from 10 K to 300 K after field-cooling (FC) and zero-field-cooling (ZFC) of samples (a) Omega700_3, (b) Omega600, and (c) OmegaCC. SIRM was imparted using a field of 2.5 T.

A Retrospective Snapshot of the Planning Processes in MER Operations After 5 Years

A Retrospective Snapshot of the Planning Processes in MER Operations After 5 Years. Anthony Barrett, Deborah Bass, Sharon Laubach, and Andrew Mishkin. Jet Propulsion Laboratory, California Institute of Technology, 4800 Oak Grove Drive, M/S 301-260. {firstname.lastname}@jpl.nasa.gov. Abstract: Over the past five years on Mars, the Mars Exploration Rovers have traveled over 20 kilometers to climb tall hills and descend into craters. Over that period the operations process has continued to evolve as deep introspection by the MER uplink team suggests streamlining improvements and lessons learned adds complexity to handle new problems. As such, the operations process and its supporting tools automate enough of the drudgery to circumvent staff burnout issues while being nimble and safe enough to let an operations staff respond to the problems that Mars throws up on a daily basis. This paper describes the currently used integrated set of planning processes that support rover operations on a daily basis after five years of evolution. Introduction: On January 3 & 24, 2004 Spirit and Opportunity, the twin Mars Exploration Rovers (MER), landed on opposite sides of Mars at Gusev Crater and Meridiani Planum respectively. Each rover was originally expected to last 90 Sols (Martian days) due to dust accumulation on the solar […] For communications, each rover has a high gain antenna for receiving instructions from Earth, and a low gain antenna for transmitting data to the Odyssey or Mars Reconnaissance orbiters with subsequent relay to Earth. Given that it takes between 6 and 44 minutes for a signal to travel to and from the rovers, simple joystick operations are not feasible. Figure 1. Mars Exploration Rover.

2020 Crew Health & Performance EVA Roadmap

NASA/TP-20205007604. Crew Health and Performance Extravehicular Activity Roadmap: 2020. Andrew F. J. Abercromby1, Omar Bekdash4, J. Scott Cupples1, Jocelyn T. Dunn4, E. Lichar Dillon2, Alejandro Garbino3, Yaritza Hernandez4, Alexandros D. Kanelakos1, Christine Kovich5, Emily Matula6, Matthew J. Miller7, James Montalvo6, Jason Norcross4, Cameron W. Pittman7, Sudhakar Rajulu1, Richard A. Rhodes1, Linh Vu8. 1NASA Johnson Space Center, Houston, Texas; 2University of Texas Medical Branch, Galveston, Texas; 3GeoControl Systems Inc., Houston, Texas; 4KBR, Houston, Texas; 5The Aerospace Corporation, Houston, Texas; 6Stinger Ghaffarian Technologies (SGT) Inc., Houston, Texas; 7Jacobs Technology, Inc., Houston, Texas; 8MEI Technologies, Inc., Houston, Texas. National Aeronautics and Space Administration, Johnson Space Center, Houston, Texas 77058. October 2020. The NASA STI Program Office ... in Profile. Since its founding, NASA has been dedicated to the advancement of aeronautics and space science. The NASA scientific and technical information (STI) program plays a key part in helping NASA maintain this important role. The NASA STI program operates under the auspices of the Agency Chief Information Officer. It collects, organizes, provides for archiving, and disseminates NASA’s STI. The NASA STI program provides access to the NTRS Registered and its public interface, the NASA Technical Report Server, thus providing one of the largest collections of aeronautical and space science STI in the world. Results are published in both non-NASA channels and by NASA in the NASA STI Report […] • CONFERENCE PUBLICATION. Collected papers from scientific and technical conferences, symposia, seminars, or other meetings sponsored or co-sponsored by NASA. • SPECIAL PUBLICATION. Scientific, technical, or historical information from NASA programs, projects, and missions, often concerned with subjects having substantial public interest. • TECHNICAL TRANSLATION. English-language translations of foreign scientific and technical material pertinent to NASA’s mission.

Localization and ‘Contextualization’ of Curiosity in Gale Crater, and Other Landed Mars Missions

44th Lunar and Planetary Science Conference (2013), 2534.pdf. LOCALIZATION AND ‘CONTEXTUALIZATION’ OF CURIOSITY IN GALE CRATER, AND OTHER LANDED MARS MISSIONS. T. J. Parker1, M. C. Malin2, F. J. Calef1, R. G. Deen1, H. E. Gengl1, M. P. Golombek1, J. R. Hall1, O. Pariser1, M. Powell1, R. S. Sletten3, and the MSL Science Team. 1Jet Propulsion Laboratory, California Inst of Technology ([email protected]), 2Malin Space Science Systems, San Diego, CA ([email protected]), 3University of Washington, Seattle. Introduction: Localization is a process by which tactical updates are made to a mobile lander’s position on a planetary surface, and is used to aid in traverse and science investigation planning and very high-resolution map compilation. “Contextualization” is hereby defined as placement of localization information into a local, regional, and global context, by accurately localizing a landed vehicle, then placing the data acquired by that lander into context with orbiter data so that its geologic context can be better characterized and understood. Curiosity Landing Site Localization: The Curiosity landing was the first Mars mission to benefit from the selection of a science-driven descent camera (both MER rovers employed engineering descent imagers). Initial data downlinked after the landing focused on rover health and Entry-Descent-Landing (EDL) performance. Front and rear Hazcam images were also downloaded, along with a number of MARDI thumbnail images. The Hazcam images were used primarily to determine the rover’s orientation by triangulation to the horizon. […] When is localization done? After each drive for which Navcam stereo data has been acquired post-drive and terrain meshes […] Fig 1: Portion of mosaic of MARDI EDL images. MARDI imaged the landing site and science target regions in color.

2005 Astronomy Magazine Index

2005 Astronomy Magazine Index. Subject index. Numbers: 3C 58 (star remnant), pulsar in, 3:24; 3-inch telescopes, 12:84–89; 87 Sylvia (asteroid), two moons of, 12:33; 2003 UB313, 10:27, 11:68–69; 2004, review of, 1:31–40; 25143 (asteroid), 11:27. A: Aeolis (on Mars), 3:28; Alba Patera (Martian volcano), 2:28; Aldrin, Buzz, 5:40–45; Altair (star), 9:20; Amalthea (Jupiter's moon), 9:28; amateur telescopes, See telescopes, amateur; Andromeda Galaxy (M31), 10:84–89 (disk of stars surrounding, 7:28). […] flyby of Titan, 2:72–77; Cassiopeia (constellation), 11:20; Cassiopeia A (supernova), stellar remains inside, 9:22; Cat's Eye Nebula, dying star in, 1:24; Celestron's ExploraScope telescope, 2:92–94; Cepheid luminosities, 1:72; Chasma Boreale (on Mars), 7:28; chondrites, and gamma-ray bursts, 5:30; coins, celestial images on, 3:72–73; color filters, 6:67; Comet Hale-Bopp, 7:76–79; comets (from beyond solar system, 12:82; dust trails of, 12:72–73; evolution of, 9:46–51; extrasolar, 9:30); Conselice, Christopher, 1:20; constellations, See also names of specific constellations. […] Einstein, Albert, 2:30–53, See also relativity, theory of (handwritten manuscript found, 12:26); Einstein rings, 11:27; Elysium Planitia (on Mars), 5:30; Enceladus (Saturn's moon), 11:32 (atmosphere of water vapor, 6:22; Cassini flyby, 7:62–65, 10:32); Eros (asteroid), 11:28; Eos Chasma (on Mars), 7:28; Espenak, Fred, 2:86–89; extrasolar comets, 9:30; extrasolar planets (first image of, 4:20, 8:26; first light from, who captured, 7:30; newly discovered low-mass planets, 1:68–71; smallest, 9:26; whether have diamond layers, 5:26); extraterrestrial life, 4:28–34; eyepieces, telescope. […]

Mars Exploration Rover Mobility and IDD Downlink Analysis Tools

To appear in i-SAIRAS Conference Proceedings, Feb 2008, Los Angeles, CA, USA. Mars Exploration Rover Mobility and IDD Downlink Analysis Tools. Jeffrey J. Biesiadecki and Robert Liebersbach and Mark W. Maimone. Jet Propulsion Laboratory, California Institute of Technology, Pasadena, CA USA. [email protected] Abstract: Driving and manipulation activities on the Mars Exploration Rovers often must be planned from scratch each day, making this a fast-paced activity by typical space mission standards. Such a schedule requires rapid turnaround in understanding what happened during activities on the previous Martian Solar Day (Sol). In addition to obvious faults, subtle changes in expected behavior must be detected and understood rapidly, to prevent the next day's plan from exacerbating any imminent problem. We developed an advanced set of tools to help speed up the understanding of previous Sols' activities. Plots of detailed engineering data are generated automatically and incorporated into HTML pages, allowing quick visual inspection of each activity. Data from different sources are grouped together […] […] various flight software (FSW) subsystems [13] (e.g., the Mobility software module [2]), and are typically stored in Flash memory prior to transmission [15]. Data are usually relayed through UHF frequencies to an orbiter at Mars (e.g., Mars Odyssey) or can be transmitted directly to Earth at lower bandwidth using X-band frequencies. The Deep Space Network receives the raw data and forwards it to the Jet Propulsion Laboratory (JPL), where processes controlled by the Ground Data Systems [19] and Mission Data Operations Teams automatically archive the raw data, generate preliminary reports [18], and populate an archived filesystem with individual Data Product files.

Geological Analysis of Martian Rover‐Derived Digital Outcrop Models

Earth and Space Science. RESEARCH ARTICLE. 10.1002/2018EA000374. Special Section: Planetary Mapping: Methods, Tools for Scientific Analysis and Exploration. Geological Analysis of Martian Rover-Derived Digital Outcrop Models Using the 3-D Visualization Tool, Planetary Robotics 3-D Viewer—PRo3D. Robert Barnes1, Sanjeev Gupta1, Christoph Traxler2, Thomas Ortner2, Arnold Bauer3, Gerd Hesina2, Gerhard Paar3, Ben Huber3,4, Kathrin Juhart3, Laura Fritz2, Bernhard Nauschnegg3, Jan-Peter Muller5, and Yu Tao5. 1Department of Earth Science and Engineering, Imperial College London, London, UK; 2VRVis Zentrum für Virtual Reality und Visualisierung Forschungs-GmbH, Vienna, Austria; 3Joanneum Research, Graz, Austria; 4Now at ETH Zürich, Zürich, Switzerland; 5Mullard Space Science Laboratory, University College London, London, UK. Key Points: • Processing of images from stereo-cameras on Mars rovers produces 3-D Digital Outcrop Models (DOMs) which are rendered and analyzed in PRo3D • PRo3D enables efficient, real-time rendering and geological analysis of the DOMs, allowing extraction of large amounts of quantitative data • Methodologies for sedimentological and structural DOM analyses in PRo3D are presented at four localities along the MSL and MER traverses. Supporting Information: • Supporting Information S1 • Data Set S1. Abstract: Panoramic camera systems on robots exploring the surface of Mars are used to collect images of the terrain and rock outcrops which they encounter along their traverse. Image mosaics from these cameras are essential in mapping the surface geology and selecting locations for analysis by other instruments on the rover’s payload. 2-D images do not truly portray the depth of field of features within an image, nor their 3-D geometry. This paper describes a new 3-D visualization software tool for geological analysis of Martian rover-derived Digital Outcrop Models created using photogrammetric processing of stereo-images using the Planetary Robotics Vision Processing tool developed for 3-D vision processing of ExoMars PanCam and Mars 2020 Mastcam-Z data.

Keynote Address

National Aeronautics and Space Administration, Jet Propulsion Laboratory, California Institute of Technology, Pasadena, California. Ground systems in support of space exploration, for Ground Systems Architectures Workshop, April 3, 2008. Charles Elachi, Director, NASA Jet Propulsion Laboratory, California Institute of Technology. Ground systems have supported space exploration for a half century • 1958: Explorer 1 news conference at National Academy with William Pickering, James van Allen, and Werner von Braun. Where are we now? About 58 NASA robotic explorers, plus a number of international missions (some examples): ESA Venus Express orbiting Venus; ESA Rosetta to study comet in 2014; Spitzer studying stars and galaxies in the infrared; Ulysses studying the sun; Aqua studying Earth’s oceans; CALIPSO studying Earth’s climate; Hubble studying the universe; Rovers “Spirit” and “Opportunity” studying Mars; Two Voyagers on an interstellar mission; Aura studying Earth’s atmosphere; MESSENGER on its way to Mercury; QuikScat, Jason 1, CloudSat, and GRACE (plus ASTER, MISR, AIRS, MLS and TES instruments) monitoring Earth; Chandra studying the x-ray universe; ESA Mars Express orbiting Mars; Japanese SELENE studying Earth’s moon; New Horizons on its way to Pluto. Now: A continuous robotic presence on and in orbit around Mars: 2001 Mars Odyssey, Spirit, Mars Reconnaissance Orbiter, Opportunity. “Do not go where the path may lead, go instead where there is no path and leave a trail.” Emerson.

Martian Rock Types Analysis of Surface Composition

Martian rock types Analysis of surface composition • Most of the knowledge of the surface composition of Mars comes from: • Orbital spacecraft’s spectroscopic data • Analysis by rovers on the surface • Analysis of meteorites Southern Highlands • Mainly Basalts – Consists primarily of Olivine, Feldspars and Pyroxenes Northern Lowlands • Mainly Andesite – More evolved forms of magma – Highly volatile – Constitutes the majority of the crust Intermediate Felsic Types • High Silica Rocks • Exposed on the surface near Syrtis Major • Uncommon but include: • Dacites and Granitoids • Suggest diverse crustal composition Sedimentary Rocks • Widespread on the surface • Makes up the majority of the Northern lowlands deposits – May have formed from sea/lake deposits – Some show cross‐bedding in their layers – Hold the best chance to find fossilized life Carbonate Rocks • Formed through hydrothermal precipitation Tracks from rover unveil different soil types • Most soil consists of finely ground basaltic rock fragments • Contains iron oxide which gives Mars its red color • Also contains large amounts of sulfur and chlorine Tracks from rover unveil different soil types • Light colored soil is silica rich • High concentrations suggest water must have been involved to help concentrate the silica Blueberries • Iron rich spherules Meteorites on Mars What is a Martian meteorite? • Martian meteorites are achondritic meteorites with strong linear correlations of gases in the Martian atmosphere. Therefore, the gas trapped in each meteorite matches those that the Viking Lander found in Mars’s atmosphere. The graph below explains this correlation. • Image: http://www.imca.cc/mars/martian‐meteorites.htm Types of Martian Meteorites • 34 meteorites have been found that are Martian and they can be separated into 4 major categories with sub‐categories.

The Planets (2014)

The Planets: 2014 Youth Concert Teacher Resource Materials. Ann Arbor Symphony Orchestra, Music in the Key of A2®. Acknowledgments: The Ann Arbor Symphony Orchestra is grateful to the area music and classroom teachers, school administrators, and teaching artists who have collaborated with the Symphony on this Youth Concert and the accompanying resource materials. We recognize the following major donors for their support of the 2014 Youth Concert, The Planets: Mardi Gras Fund, Anonymous. Contributors: Sasha Gale, Zac Moore, Sarah Ruddy. Cover image courtesy of NASA. Ann Arbor Symphony Orchestra, 220 E. Huron, Suite 470, Ann Arbor, MI 48104. 734-9994-4801. [email protected] a2so.com © 2013 Ann Arbor Symphony Orchestra. All rights reserved. Table of Contents: Introduction; How to Use These Materials; Concert Program; Unit: The Orchestra.

Marsette Vona, Ph.D

MARSETTE VONA, PH.D. http://www.ccs.neu.edu/research/gpc [email protected] Norwich, VT (U.S. Citizen) (626) 658-0972. PROFESSIONAL INTERESTS AND EXPERTISE: I architect, implement, and manage software and hardware projects that push the frontiers of human experience, capability, and knowledge. I have particular expertise in robot design and kinematics, 3D sensing and perception, algorithms for geometry processing and graphics, CAD, and user interfaces including augmented and virtual reality. I am detail-oriented and enjoy both working as an individual contributor, as well as building project strategy, interacting with customers, and managing development. EXPERIENCE AND EDUCATION: 2015–present, NASA Jet Propulsion Laboratory, Pasadena, CA. User Interface Software Developer at JPL Ops Lab. Landform: reconstructing Mars terrain - project lead, developer. Tomo: volumetric rendering for CT scan data in virtual reality - technical lead, developer. ProtoSpace: collaborative augmented reality for spacecraft CAD - project lead, developer. 1st Place for Best Augmented Reality Experience at Unity Vision Summit, 2017. 2010–2015, Northeastern University College of Computer and Information Science, Boston, MA. Assistant Professor of Computer Science. Founder and PI of the Geometric and Physical Computing research group. NSF CAREER Award, 2012. 2003–2009, Massachusetts Institute of Technology, Cambridge, MA. Ph.D. in Electrical Engineering and Computer Science. Advisor: Daniela Rus. Thesis: Virtual Articulation and Kinematic Abstraction in Robotics. 2001–2003, NASA Jet Propulsion Laboratory, Pasadena, CA. User Interface and 3D Software Developer. Science Operations Software for the Mars Exploration Rover. NASA Software of the Year Award Recipient, 2004. 1999–2001, Massachusetts Institute of Technology, Cambridge, MA. M.S. in Electrical Engineering and Computer Science. Advisor: David Trumper. Thesis: Metrology Techniques for Compound Rotary-Linear Motion. 1995–1999, Dartmouth College, Hanover, NH. B.A.

MER Contact Science Targets: Archived Database and Inclusion in the Analyst’s Notebook

51st Lunar and Planetary Science Conference (2020), 1942.pdf. MER CONTACT SCIENCE TARGETS: ARCHIVED DATABASE AND INCLUSION IN THE ANALYST’S NOTEBOOK. T. C. Stein1, R. E. Arvidson1, S. J. Van Bommel1, K. L. Wagstaff2, E. Riloff3, F. Zhou1, E. A. Guinness1. 1McDonnell Center for the Space Sciences, Department of Earth and Planetary Sciences, Washington University in St. Louis, 1 Brookings Drive, CB 1169, St. Louis, MO 63130, [email protected], [email protected], vanbom- [email protected], [email protected], 2Jet Propulsion Laboratory, California Institute of Technology, kiri.l.wag- [email protected], 3University of Utah, [email protected]. Introduction: The Mars Exploration Rovers (MER) mission surface operations began in January 2004 and ended in May 2011 (Spirit) and February 2019 (Opportunity) [1]. Throughout the mission, the science team made numerous measurements of targets using the Alpha-Particle X-ray Spectrometer (APXS), Mössbauer (MB), Microscopic Imager (MI), and Rock Abrasion Tool (RAT) arm-based instruments, assigning an informal name to each target. During the MER mission closeout phase, a database of contact science targets was […] […]sion managers. From these, we compiled a list of observation targets, using the Basilisk information extraction system [3] to scan these reports and highlight possible target names for human review. Basilisk was trained using a seed target list generated from the planning tools and from instrument lists and plan summaries in the Analyst's Notebook. It learned frequently occurring English patterns in which targets are mentioned, such as “images of X”. Applying these patterns yielded many additional candidate targets.