A Privacy Analysis of Google and Yandex Safe Browsing Thomas Gerbet, Amrit Kumar, Cédric Lauradoux

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Cross-Platform Analysis of Indirect File Leaks in Android and iOS Applications

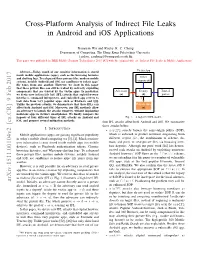

Cross-Platform Analysis of Indirect File Leaks in Android and iOS Applications
Daoyuan Wu and Rocky K. C. Chang, Department of Computing, The Hong Kong Polytechnic University, fcsdwu, [email protected]
This paper was published in IEEE Mobile Security Technologies 2015 [47] with the original title of “Indirect File Leaks in Mobile Applications”.

Abstract: Today, much of our sensitive information is stored inside mobile applications (apps), such as the browsing histories and chatting logs. To safeguard these privacy files, modern mobile systems, notably Android and iOS, use sandboxes to isolate apps’ file zones from one another. However, we show in this paper that these private files can still be leaked by indirectly exploiting components that are trusted by the victim apps. In particular, we devise new indirect file leak (IFL) attacks that exploit browser interfaces, command interpreters, and embedded app servers to leak data from very popular apps, such as Evernote and QQ. Unlike the previous attacks, we demonstrate that these IFLs can affect both Android and iOS. Moreover, our IFL methods allow an adversary to launch the attacks remotely, without implanting malicious apps in victim’s smartphones. We finally compare the impacts of four different types of IFL attacks on Android and iOS, and propose several mitigation methods.

[Fig. 1. A high-level IFL model. Labels: Adversary (a), Deputy (d), Victim App, Other components, Trusted parties, Private files (s).]

I. INTRODUCTION
Mobile applications (apps) are gaining significant popularity in today’s mobile cloud computing era [3], [4].

four IFL attacks affect both Android and iOS. We summarize these attacks below.
• sopIFL attacks bypass the same-origin policy (SOP), which is enforced to protect resources originating from -

1 Questions for the Record from the Honorable David N. Cicilline, Chairman, Subcommittee on Antitrust, Commercial and Administra

Questions for the Record from the Honorable David N. Cicilline, Chairman, Subcommittee on Antitrust, Commercial and Administrative Law of the Committee on the Judiciary Questions for Mr. Kyle Andeer, Vice President, Corporate Law, Apple, Inc. 1. Does Apple permit iPhone users to uninstall Safari? If yes, please describe the steps a user would need to take in order to do so. If no, please explain why not. Users cannot uninstall Safari, which is an essential part of iPhone functionality; however, users have many alternative third-party browsers they can download from the App Store. Users expect that their Apple devices will provide a great experience out of the box, so our products include certain functionality like a browser, email, phone and a music player as a baseline. Most pre-installed apps can be deleted by the user. A small number, including Safari, are “operating system apps”—integrated into the core operating system—that are part of the combined experience of iOS and iPhone. Removing or replacing any of these operating system apps would destroy or severely degrade the functionality of the device. The App Store provides Apple’s users with access to third party apps, including web browsers. Browsers such as Chrome, Firefox, Microsoft Edge and others are available for users to download. 2. Does Apple permit iPhone users to set a browser other than Safari as the default browser? If yes, please describe the steps a user would need to take in order to do so. If no, please explain why not. iPhone users cannot set another browser as the default browser. -

Website Nash County, NC

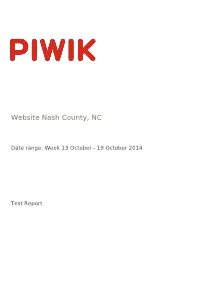

Website Nash County, NC
Date range: Week 13 October - 19 October 2014
Test Report

Visits Summary

| Name | Value |
| --- | --- |
| Unique visitors | 5857 |
| Visits | 7054 |
| Actions | 21397 |
| Maximum actions in one visit | 215 |
| Bounce Rate | 46% |
| Actions per Visit | 3 |
| Avg. Visit Duration (in seconds) | 00:03:33 |

Visitor Browser

| Browser | Visits | Actions | Actions per Visit | Avg. Time on Website | Bounce Rate | Conversion Rate |
| --- | --- | --- | --- | --- | --- | --- |
| Internet Explorer | 2689 | 8164 | 3.04 | 00:04:42 | 46% | 0% |
| Chrome | 1399 | 4237 | 3.03 | 00:02:31 | 39.24% | 0% |
| Firefox | 736 | 1784 | 2.42 | 00:02:37 | 50% | 0% |
| Unknown | 357 | 1064 | 2.98 | 00:08:03 | 63.87% | 0% |
| Mobile Safari | 654 | 1800 | 2.75 | 00:01:59 | 53.36% | 0% |
| Android Browser | 330 | 1300 | 3.94 | 00:02:59 | 41.21% | 0% |
| Chrome Mobile | 528 | 2088 | 3.95 | 00:02:04 | 39.02% | 0% |
| Mobile Safari | 134 | 416 | 3.1 | 00:01:39 | 52.24% | 0% |
| Safari | 132 | 337 | 2.55 | 00:02:10 | 49.24% | 0% |
| Chrome Frame | 40 | 88 | 2.2 | 00:03:36 | 52.5% | 0% |
| Chrome Mobile iOS | 20 | 46 | 2.3 | 00:01:54 | 60% | 0% |
| IE Mobile | 8 | 22 | 2.75 | 00:00:45 | 37.5% | 0% |
| Opera | 8 | 13 | 1.63 | 00:00:26 | 62.5% | 0% |
| Pale Moon | 4 | 7 | 1.75 | 00:00:20 | 75% | 0% |
| BlackBerry | 3 | 8 | 2.67 | 00:01:30 | 33.33% | 0% |
| Yandex Browser | 2 | 2 | 1 | 00:00:00 | 100% | 0% |
| Chromium | 1 | 3 | 3 | 00:00:35 | 0% | 0% |
| Mobile Silk | 1 | 8 | 8 | 00:04:51 | 0% | 0% |
| Maxthon | 1 | 1 | 1 | 00:00:00 | 100% | 0% |
| Obigo Q03C | 1 | 1 | 1 | 00:00:00 | 100% | 0% |
| Opera Mini | 2 | 2 | 1 | 00:00:00 | 100% | 0% |
| Puffin | 1 | 1 | 1 | 00:00:00 | 100% | 0% |
| Sogou Explorer | 1 | 1 | 1 | 00:00:00 | 100% | 0% |
| Others | 0 | 0 | 0 | 00:00:00 | 0% | 0% |

Mobile vs Desktop Avg. -

XCSSET Update: Abuse of Browser Debug Modes, Findings from the C2 Server, and an Inactive Ransomware Module Appendix

XCSSET Update: Abuse of Browser Debug Modes, Findings from the C2 Server, and an Inactive Ransomware Module
Appendix

Introduction
In our first blog post and technical brief for XCSSET, we discussed the depths of its dangers for Xcode developers and the way it cleverly took advantage of two macOS vulnerabilities to maximize what it can take from an infected machine. This update covers the third exploit found that takes advantage of other popular browsers on macOS to implant UXSS injection. It also details what we’ve discovered from investigating the command-and-control server’s source directory, notably a ransomware feature that has yet to be deployed.

Recap: Malware Capability List
Aside from its initial entry behavior (which has been discussed previously), here is a summarized list of capabilities based on the source files found in the server:
• Repackages payload modules to masquerade as well-known mac apps
• Infects local Xcode and CocoaPods projects and injects malware to execute when infected project builds
• Uses two zero-day exploits and trojanizes the Safari app to exfiltrate data
• Uses a Data Vault zero-day vulnerability to dump and steal Safari cookie data
• Abuses the Safari development version (SafariWebkitForDevelopment) to inject UXSS backdoor JS payload
• Injects malicious JS payload code to popular browsers via UXSS
• Exploits the browser debugging mode for affected Chrome-based and similar browsers
• Collects QQ, WeChat, Telegram, and Skype user data in the infected machine (also forces the user to allow Skype and -

HTTP Cookie - Wikipedia, the Free Encyclopedia 14/05/2014

HTTP cookie - Wikipedia, the free encyclopedia 14/05/2014

A cookie, also known as an HTTP cookie, web cookie, or browser cookie, is a small piece of data sent from a website and stored in a user's web browser while the user is browsing that website. Every time the user loads the website, the browser sends the cookie back to the server to notify the website of the user's previous activity.[1] Cookies were designed to be a reliable mechanism for websites to remember stateful information (such as items in a shopping cart) or to record the user's browsing activity (including clicking particular buttons, logging in, or recording which pages were visited by the user as far back as months or years ago).

Although cookies cannot carry viruses, and cannot install malware on the host computer,[2] tracking cookies and especially third-party tracking cookies are commonly used as ways to compile long-term records of individuals' browsing histories, a potential privacy concern that prompted European[3] and U.S. -
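The round trip described in the HTTP cookie excerpt (the site sends a small piece of data, the browser stores it and sends it back on later requests) can be sketched with Python's standard `http.cookies` module. This is a minimal illustration, not how any particular browser or site works: the cookie name `cart`, its value, and the helper names `make_set_cookie_header` and `parse_cookie_header` are assumptions made up for the example.

```python
from http.cookies import SimpleCookie


def make_set_cookie_header(name, value, max_age):
    """Server side: build the value of a Set-Cookie response header.

    The name, value and lifetime are hypothetical example inputs.
    """
    cookie = SimpleCookie()
    cookie[name] = value
    cookie[name]["max-age"] = max_age  # lifetime in seconds
    cookie[name]["path"] = "/"         # send back for every path on the site
    return cookie[name].OutputString()


def parse_cookie_header(header):
    """Server side: read the Cookie request header the browser sends back."""
    cookie = SimpleCookie()
    cookie.load(header)
    return {key: morsel.value for key, morsel in cookie.items()}


if __name__ == "__main__":
    # 1. The website sets a cookie in its response.
    set_cookie = make_set_cookie_header("cart", "abc123", 3600)
    print("Set-Cookie:", set_cookie)

    # 2. The browser stores the name=value pair (attributes stay client-side).
    stored_pair = set_cookie.split(";", 1)[0]

    # 3. On the next request the browser sends the pair back to the server.
    print("Cookie:", stored_pair)
    print("Server sees:", parse_cookie_header(stored_pair))
```

Because the same pair comes back on every later request, the server can link those requests to one browser, which is what makes long-term tracking possible.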

The Financialisation of Big Tech

Engineering digital monopolies: The financialisation of Big Tech
Rodrigo Fernandez & Ilke Adriaans & Tobias J. Klinge & Reijer Hendrikse
December 2020

Colophon
Authors: Rodrigo Fernandez (SOMO), Ilke Adriaans (SOMO), Tobias J. Klinge (KU Leuven) and Reijer Hendrikse (VUB)
With contributions from: Manuel Aalbers and The Real Estate/Financial Complex research group at KU Leuven, David Bassens, Roberta Cowan, Vincent Kiezebrink, Adam Leaver, Michiel van Meeteren, Jasper van Teffelen, Callum Ward
Editor: Marieke Krijnen
Layout: Frans Schupp
Cover photo: Geralt/Pixabay
ISBN: 978-94-6207-155-1

Stichting Onderzoek Multinationale Ondernemingen
Centre for Research on Multinational Corporations
Sarphatistraat 30, 1018 GL Amsterdam, The Netherlands
T: +31 (0)20 639 12 91
F: +31 (0)20 639 13 21
[email protected]
www.somo.nl

The Centre for Research on Multinational Corporations (SOMO) is an independent, not-for-profit research and network organisation working on social, ecological and economic issues related to sustainable development. Since 1973, the organisation investigates multinational corporations and the consequences of their activities for people and the environment around the world.

Made possible in collaboration with KU Leuven and Vrije Universiteit Brussel (VUB) with financial assistance from the Research Foundation Flanders (FWO), grant numbers G079718N and G004920N. The content of this publication is the sole responsibility of SOMO and can in no way be taken to reflect the views of any of the funders.

Contents
1 Introduction -

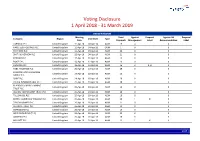

Global Voting Activity Report to March 2019

Voting Disclosure 1 April 2018 - 31 March 2019

UNITED KINGDOM

| Company | Region | Meeting Date | Vote Date | Type | Total Proposals | Against Management | Proposal Label | Against ISS Recommendation | Proposal Label |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| CARNIVAL PLC | United Kingdom | 11-Apr-18 | 04-Apr-18 | AGM | 19 | 0 | | 0 | |
| HANSTEEN HOLDINGS PLC | United Kingdom | 11-Apr-18 | 04-Apr-18 | OGM | 1 | 0 | | 0 | |
| RIO TINTO PLC | United Kingdom | 11-Apr-18 | 04-Apr-18 | AGM | 22 | 0 | | 0 | |
| SMITH & NEPHEW PLC | United Kingdom | 12-Apr-18 | 04-Apr-18 | AGM | 21 | 0 | | 0 | |
| PORVAIR PLC | United Kingdom | 17-Apr-18 | 10-Apr-18 | AGM | 15 | 0 | | 0 | |
| BUNZL PLC | United Kingdom | 18-Apr-18 | 12-Apr-18 | AGM | 19 | 0 | | 0 | |
| HUNTING PLC | United Kingdom | 18-Apr-18 | 12-Apr-18 | AGM | 16 | 2 | 3, 8 | 0 | |
| HSBC HOLDINGS PLC | United Kingdom | 20-Apr-18 | 13-Apr-18 | AGM | 29 | 0 | | 0 | |
| LONDON STOCK EXCHANGE GROUP PLC | United Kingdom | 24-Apr-18 | 18-Apr-18 | AGM | 26 | 0 | | 0 | |
| SHIRE PLC | United Kingdom | 24-Apr-18 | 18-Apr-18 | AGM | 20 | 0 | | 0 | |
| CRODA INTERNATIONAL PLC | United Kingdom | 25-Apr-18 | 19-Apr-18 | AGM | 18 | 0 | | 0 | |
| BLACKROCK WORLD MINING TRUST PLC | United Kingdom | 25-Apr-18 | 19-Apr-18 | AGM | 15 | 0 | | 0 | |
| ALLIANZ TECHNOLOGY TRUST PLC | United Kingdom | 25-Apr-18 | 19-Apr-18 | AGM | 10 | 0 | | 0 | |
| TULLOW OIL PLC | United Kingdom | 25-Apr-18 | 19-Apr-18 | AGM | 16 | 0 | | 0 | |
| BRITISH AMERICAN TOBACCO PLC | United Kingdom | 25-Apr-18 | 19-Apr-18 | AGM | 20 | 1 | 8 | 0 | |
| TAYLOR WIMPEY PLC | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 21 | 0 | | 0 | |
| ALLIANCE TRUST PLC | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 13 | 0 | | 0 | |
| SCHRODERS PLC | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 19 | 0 | | 0 | |
| WEIR GROUP PLC (THE) | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 23 | 0 | | 0 | |
| AGGREKO PLC | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 20 | 0 | | 0 | |
| MEGGITT PLC | United Kingdom | 26-Apr-18 | 20-Apr-18 | AGM | 22 | 1 | 4 | 0 | |

Google, Bing, Yahoo!, Yandex & Baidu

Google, bing, Yahoo!, Yandex & Baidu – major differences and ranking factors. NO IMPACT » MAJOR IMPACT 1 2 3 4 TECHNICAL Domains & URLs - Use main pinyin keyword in domain - Main keyword in the domain - Main keyword in the domain - Main keyword in the domain - Easy to remember Keyword Domain 1 - As short as possible 2 - As short as possible 2 - As short as possible 11 - As short as possible - Use main pinyin keyword in URL - Main keyword as early as possible - Main keyword as early as possible - Main keyword as early as possible - Easy to remember Keyword in URL Path 3 - One specific keyword 3 - One specific keyword 3 - One specific keyword 11 - As short as possible - As short as possible - As short as possible - As short as possible - No filling words - No filling words - As short as possible Length of URL 2 2 2 22 - URL directory depth as brief as possible - No repeat of terms - No repeat of terms - Complete website secured with SSL -No ranking boost from HTTPS - No active SSL promotion - Complete website secured with SSL HTTPS 3 - No mix of HTTP and HTTPS 2 -Complete website secured with SSL 1 - Mostly recommended for authentication 22 - HTTPS is easier to be indexed than HTTP Country & Language - ccTLD or gTLD with country and language directories - ccTLD or gTLD with country and language directories - Better ranking with ccTLD (.cn or .com.cn) - Slightly better rankings in Russia with ccTLD .ru Local Top-Level Domain 3 - No regional domains, e.g. domain.eu, domain.asia 3 - No regional domains, e.g. -

Safe Browsing Services: to Track, Censor and Protect Thomas Gerbet, Amrit Kumar, Cédric Lauradoux

Safe Browsing Services: to Track, Censor and Protect
Thomas Gerbet, Amrit Kumar, Cédric Lauradoux

To cite this version: Thomas Gerbet, Amrit Kumar, Cédric Lauradoux. Safe Browsing Services: to Track, Censor and Protect. [Research Report] RR-8686, INRIA. 2015. hal-01120186v1

HAL Id: hal-01120186
https://hal.inria.fr/hal-01120186v1
Submitted on 4 Mar 2015 (v1), last revised 8 Sep 2015 (v4)

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Research Report N° 8686, February 2015, 25 pages. Project-Teams PRIVATICS. ISSN 0249-6399, ISRN INRIA/RR--8686--FR+ENG

Abstract: Users often inadvertently click malicious phishing or malware website links, and in doing so they sacrifice secret information and sometimes even fully compromise their devices. The purveyors of these sites are highly motivated and hence construct intelligently scripted URLs to remain inconspicuous over the Internet. In light of the ever increasing number of such URLs, new ingenious strategies have been invented to detect them and inform the end user when he is tempted to access such a link. -

Written Testimony of Keith Enright Chief Privacy Officer, Google United

Written Testimony of Keith Enright Chief Privacy Officer, Google United States Senate Committee on Commerce, Science, and Transportation Hearing on “Examining Safeguards for Consumer Data Privacy” September 26, 2018 Chairman Thune, Ranking Member Nelson, and distinguished members of the Committee: thank you for the opportunity to appear before you this morning. I appreciate your leadership on the important issues of data privacy and security, and I welcome the opportunity to discuss Google’s work in these areas. My name is Keith Enright, and I am the Chief Privacy Officer for Google. I have worked at the intersection of technology, privacy, and the law for nearly 20 years, including as the functional privacy lead for two other companies prior to joining Google in 2011. In that time, I have been fortunate to engage with legislators, regulatory agencies, academics, and civil society to help inform and improve privacy protections for individuals around the world. I lead Google’s global privacy legal team and, together with product and engineering partners, direct our Office of Privacy and Data Protection, which is responsible for legal compliance, the application of our privacy principles, and generally meeting our users’ expectations of privacy. This work is the effort of a large cross-functional team of engineers, researchers, and other experts whose principal mission is protecting the privacy of our users. Across every single economic sector, government function, and organizational mission, data and technology are critical keys to success. With advances in artificial intelligence and machine learning, data-based research and services will continue to drive economic development and social progress in the years to come. -

Guideline for Securing Your Web Browser

CMSGu2011-02 CERT-MU SECURITY GUIDELINE 2011 - 02
Mauritian Computer Emergency Response Team: Enhancing Cyber Security in Mauritius
Guideline For Securing Your Web Browser
National Computer Board Mauritius, June 2011, Version 1.7, IssueIssue No. No. 4 2
National Computer Board ©

Table of Contents
1.0 Introduction
1.1 Purpose and Scope
1.2 Audience
1.3 Document Structure
2.0 Background
3.0 Types of Web Browsers
3.1 Microsoft Internet Explorer
3.2 Mozilla Firefox
3.3 Safari
3.4 Chrome

View the Slides

Alice in Warningland: A Large-Scale Field Study of Browser Security Warning Effectiveness
Devdatta Akhawe (UC Berkeley), Adrienne Porter Felt (Google, Inc.)

"Given a choice between dancing pigs and security, the user will pick dancing pigs every time" (Felten and McGraw, Securing Java, 1999)

"a growing body of measurement studies make clear that ...[users] are oblivious to security cues [and] ignore certificate error warnings" (Herley, The Plight of The Targeted Attacker at Scale, 2010)

"Evidence from experimental studies indicates that most people don’t read computer warnings, don’t understand them, or simply don’t heed them, even when the situation is clearly hazardous." (Bravo-Lillo, Bridging the Gap in Computer Security Warnings, 2011)

[Slide images: Firefox Malware Warning, Chrome SSL Warning, Firefox SSL Warning]

today: A large scale measurement of user responses to modern warnings in situ

What did we measure?
Clickthrough Rate = # warnings ignored / # warnings shown (across all users)

What is the ideal click through rate? 0%

Why aim for a 0% rate?
• Low false positives => protecting users
– The Google Safe Browsing list (malware/phishing warnings) has low false positives
• High false positives ? (SSL Warnings)
– Low clickthrough incentivizes websites to fix their SSL errors
– False positives annoy users and browsers should reduce the number of false warnings to achieve 0% clickthrough rate

How did we measure it? Browser Telemetry
• A mechanism for browsers to collect pseudonymous performance and quality
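The clickthrough rate defined in the slides (warnings ignored divided by warnings shown, pooled across all users) can be written as a small function. This is only a sketch of that ratio; the function name `clickthrough_rate` and the counts in the usage lines are invented for illustration and are not figures from the study.

```python
def clickthrough_rate(warnings_ignored, warnings_shown):
    """Fraction of shown warnings that users clicked through (ignored).

    Both counts are totals pooled across all users, as in the slide definition.
    """
    if warnings_shown <= 0:
        raise ValueError("warnings_shown must be positive")
    if not 0 <= warnings_ignored <= warnings_shown:
        raise ValueError("warnings_ignored must be between 0 and warnings_shown")
    return warnings_ignored / warnings_shown


if __name__ == "__main__":
    # Hypothetical counts, chosen only to show the calculation.
    rate = clickthrough_rate(warnings_ignored=25, warnings_shown=100)
    print(f"Clickthrough rate: {rate:.0%}")
```

A rate of 0 corresponds to the ideal named in the slides: no shown warning is ignored.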