Proceedings of SMC Sound and Music Computing Conference 2013

Recommended publications

The Effects of Instruction on the Singing Ability of Children Ages 5-11

THE EFFECTS OF INSTRUCTION ON THE SINGING ABILITY OF CHILDREN AGES 5–11: A META-ANALYSIS. Christina L. Svec. Dissertation Proposal Prepared for the Degree of DOCTOR OF PHILOSOPHY, UNIVERSITY OF NORTH TEXAS, August 2015. APPROVED: Debbie Rohwer, Major Professor and Chair of the Department of Music Education; Don Taylor, Committee Member; Robin Henson, Committee Member; Benjamin Brand, Committee Member; James C. Scott, Dean of the College of Music; Costas Tsatsoulis, Interim Dean of the Toulouse Graduate School.

Svec, Christina L. The Effects of Instruction on the Singing Ability of Children Ages 5–11: A Meta-Analysis. Doctor of Philosophy (Music Education), August 15, 2015, 192 pp., 14 tables, reference list, 280 titles.

The purpose of the meta-analysis was to address the varied and somewhat stratified study results within the area of singing ability and instruction by statistically summarizing the data of related studies. An analysis yielded a small overall mean effect size for instruction (g = 0.43) across 34 studies, 433 unique effects, and 5,497 participants ranging in age from 5 to 11 years old. Across categorical variables, the largest overall study effect size was for the effects of same-and-different discrimination techniques on mean score gains. Across categorical moderator variables, the largest overall effect size was for research design: the one-group pretest-posttest design. Overall mean effects by primary moderator variable ranged from trivial to moderate. Feedback yielded the largest effect regarding teaching condition, 8-year-old children yielded the largest effect regarding age, girls yielded the largest effect regarding gender, the Boardman assessment measure yielded the largest effect regarding measurement instrument, and song accuracy yielded the largest effect regarding measured task.
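The abstract reports its pooled result as Hedges' g (g = 0.43) but does not give the formula. The sketch below is a hedged illustration of the standard Hedges' g for a single treatment/control comparison. It computes Cohen's d with a pooled sample standard deviation and then multiplies by the small-sample correction J = 1 − 3/(4(n₁ + n₂) − 9). The function name `hedges_g` and the sample scores are hypothetical. The dissertation may compute effects differently, for example for its pretest-posttest designs, and it pools many effects in ways not shown here.

```python
import math
from statistics import mean, stdev


def hedges_g(treatment: list[float], control: list[float]) -> float:
    """Hedges' g for two independent groups (hypothetical helper).

    Cohen's d uses the pooled sample standard deviation; the factor J
    corrects the small-sample upward bias of d.
    """
    n1, n2 = len(treatment), len(control)
    s1, s2 = stdev(treatment), stdev(control)  # sample SDs (divisor n - 1)
    pooled_sd = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    d = (mean(treatment) - mean(control)) / pooled_sd  # Cohen's d
    j = 1 - 3 / (4 * (n1 + n2) - 9)                    # correction factor J
    return j * d


# Made-up scores for illustration only: d = 0.5 and J = 0.8, so g = 0.4
print(round(hedges_g([2, 4, 6], [1, 3, 5]), 3))
```

In a meta-analysis, many such per-study values would then be combined, typically by weighting each one by the inverse of its variance.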

UNIVERSITY OF CALIFORNIA, SAN DIEGO Probabilistic Topic Models for Automatic Harmonic Analysis of Music

UNIVERSITY OF CALIFORNIA, SAN DIEGO
Probabilistic Topic Models for Automatic Harmonic Analysis of Music
A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Computer Science by Diane J. Hu
Committee in charge: Professor Lawrence Saul, Chair; Professor Serge Belongie; Professor Shlomo Dubnov; Professor Charles Elkan; Professor Gert Lanckriet
2012
Copyright Diane J. Hu, 2012. All rights reserved.
The dissertation of Diane J. Hu is approved, and it is acceptable in quality and form for publication on microfilm and electronically: Chair, University of California, San Diego, 2012
DEDICATION
To my mom and dad who inspired me to work hard... and go to grad school.
TABLE OF CONTENTS
Signature Page
Dedication
Table of Contents
List of Figures
List of Tables
Acknowledgements
Vita
Abstract of the Dissertation
Chapter 1 Introduction
  1.1 Music Information Retrieval
    1.1.1 Content-Based Music Analysis
    1.1.2 Music Descriptors
  1.2 Problem Overview
    1.2.1 Problem Definition
    1.2.2 Motivation
    1.2.3 Contributions
  1.3 Thesis Organization
Chapter 2 Background & Previous Work
  2.1 Overview
  2.2 Musical Building Blocks
    2.2.1 Pitch and frequency
    2.2.2 Rhythm, tempo, and loudness
    2.2.3 Melody, harmony, and chords
  2.3 Tonality
    2.3.1 Theory of Tonality
    2.3.2 Tonality in music
  2.4 Tonality Induction
    2.4.1 Feature Extraction
    2.4.2 Tonal Models for Symbolic Input

Viola da Gamba Society: Write-Up of Talk Given at St Gabriel's Hall

Viola da Gamba Society: write-up of talk given at St Gabriel’s Hall, Pimlico, 27 January 2019
John Bryan: The highs and lows of English consort music: investigating compass, range and register as a guide to instrumental character
The ideas and research presented here were central to a chapter in Early English Viols: Instruments, Makers and Music, jointly written with Michael Fleming (Routledge, 2017), so this article should be viewed as an introduction to, and summary of, some of the more detailed evidence available there. My premise is that one way of trying to understand what English viols might have been like in the Tudor and Stuart periods is to investigate the ranges of the pieces they played. On the whole, composers tend to know their instruments and write what is effective for them. So Haydn writes differently for the piano when he’s heard instruments made in London in the 1790s, exploiting their sonorous bass register in comparison with the more lightly strung instruments he had known in Vienna. Some composers’ use of range challenges norms: Beethoven’s addition of piccolo and contrabassoon to the woodwind in the finale of his Symphony 5 (1808) revolutionised the sound of the orchestra by adding an extra octave at either end of the section. Analysing the ranges used in English viol consort music might therefore provide insights into the sonorities and functions of the instruments of the period.
First, some definitions, as used here:
Compass: the distance between the lowest bass note and the highest treble note in a composition. John Dowland, in his translation of Ornithoparcus’ Micrologus (London, 1609), writes: ‘The Compasse is nothing else, but a circuite or space allowed by the authoritie of the Musicians to the Tones for their rising and falling.’
Range: the distance between the lowest and highest note of a single part or voice within a polyphonic composition.
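Bryan's two definitions amount to simple arithmetic on pitches. In this minimal sketch, each part is assumed to be a list of MIDI note numbers (middle C = 60), and distances are measured in semitones. Range is computed for one part, and compass for the whole texture. The function names and the three-part example are hypothetical. Taking the overall lowest and highest notes matches the bass-to-treble definition only when the bass carries the lowest note and the treble the highest, which is the usual case.

```python
def part_range(part: list[int]) -> int:
    """Range: semitones between the lowest and highest note of one part."""
    return max(part) - min(part)


def compass(parts: list[list[int]]) -> int:
    """Compass: semitones from the lowest to the highest note in the piece."""
    all_notes = [note for part in parts for note in part]
    return max(all_notes) - min(all_notes)


# Hypothetical three-part texture (treble, tenor, bass) as MIDI numbers
treble = [67, 72, 74, 69]
tenor = [55, 60, 62, 57]
bass = [43, 48, 50, 45]

print([part_range(p) for p in (treble, tenor, bass)])  # [7, 7, 7]
print(compass([treble, tenor, bass]))                   # 31
```

Dividing a compass in semitones by 12 gives it in octaves: 31 semitones is two octaves plus a perfect fifth.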

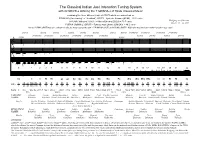

The Classical Indian Just Intonation Tuning System

The Classical Indian Just Intonation Tuning System with 22 SRUTI-s defining the 7 SWARA-s of Hindu Classical Music, combining the three different kinds of SRUTI, which are understood as:
PRAMANA ("measuring" or "standard") SRUTI = Syntonic Comma (81/80) = 21.5 cents
NYUNA ("deficient") SRUTI = Minor Chroma (25/24) = 70.7 cents
PURNA ("fulfilling") SRUTI = Pythagorean Limma (256/243) = 90.2 cents
(where PURNA SRUTI may also, enharmonically, be interpreted as the sum of PRAMANA SRUTI and NYUNA SRUTI = Major Chroma (135/128 = 81/80 × 25/24) = 92.2 cents)
Wolfgang von Schweinitz, October 20–22, 2006
[Chart in staff notation of the 22 SRUTI steps; not recoverable from the extracted text.]
Steps 0–22: Sa, ri, ri, Ri, Ri, ga, ga, Ga, Ga, ma, ma, Ma, Ma, Pa, dha, dha, Dha, Dha, ni, ni, Ni, Ni, Sa
Ratios shown in the chart, ascending (list truncated in this excerpt): 1/1, 25/24, 21/20, 256/243, 135/128, 16/15, 10/9, 9/8, 7/6, 32/27, 6/5, 5/4, 81/64, 4/3, 27/20, 45/32, 64/45, …
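The cent values quoted for the three SRUTI kinds follow from the usual conversion of a frequency ratio r to cents, 1200 · log₂ r. Because cents are logarithmic, stacking two intervals (multiplying their ratios) adds their sizes. The sketch below uses Python's `fractions` module to check the three values and the enharmonic identity 81/80 × 25/24 = 135/128. It is a hedged illustration, not something taken from von Schweinitz's chart, and the `cents` helper is a hypothetical name.

```python
import math
from fractions import Fraction


def cents(ratio: Fraction) -> float:
    """Size of a frequency ratio in cents: 1200 * log2(ratio)."""
    return 1200 * math.log2(float(ratio))


PRAMANA = Fraction(81, 80)   # syntonic comma
NYUNA = Fraction(25, 24)     # minor chroma
PURNA = Fraction(256, 243)   # Pythagorean limma

for name, r in [("pramana", PRAMANA), ("nyuna", NYUNA), ("purna", PURNA)]:
    print(f"{name}: {r} = {cents(r):.1f} cents")

# Stacking pramana and nyuna multiplies the ratios: the major chroma, 135/128
major_chroma = PRAMANA * NYUNA
print(major_chroma, f"{cents(major_chroma):.1f} cents")
```

Dividing 135/128 by 256/243 gives 32805/32768, the schisma of about 2 cents. This is the difference between the two readings of PURNA SRUTI (92.2 versus 90.2 cents).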

Music Theory Contents

Music theory
Contents
1 Music theory
  1.1 History of music theory
  1.2 Fundamentals of music
    1.2.1 Pitch
    1.2.2 Scales and modes
    1.2.3 Consonance and dissonance
    1.2.4 Rhythm
    1.2.5 Chord
    1.2.6 Melody
    1.2.7 Harmony
    1.2.8 Texture
    1.2.9 Timbre
    1.2.10 Expression
    1.2.11 Form or structure
    1.2.12 Performance and style
    1.2.13 Music perception and cognition
    1.2.14 Serial composition and set theory
    1.2.15 Musical semiotics
  1.3 Music subjects
    1.3.1 Notation
    1.3.2 Mathematics
    1.3.3 Analysis
    1.3.4 Ear training
  1.4 See also
  1.5 Notes

Improvising Rhythmic-Melodic Designs in South Indian Karnatak Music: U. Shrinivas Live in 1995

Improvising Rhythmic-Melodic Designs in South Indian Karnatak Music: U. Shrinivas Live in 1995
Garrett Field
INTRODUCTION
In the 1970s, 1980s, and 1990s, ethnomusicologists strove to make known the technical details of modal improvisation as practiced in the Eastern Arab world, Iran, North India, South India, and Turkey.[1] A supplement to such monographs and dissertations was Harold Powers’s (1980b) ambitious encyclopedia entry that sought to synthesize data about modes from these five regions. Powers’s broad conception of mode perhaps to some extent legitimated his project to compare and contrast such diverse musical traditions. Powers defined the term “mode” as any group of pitches that fall on a scale-tune spectrum (1980b, 830, 837). During the last ten years, ethnomusicologists have attempted to build upon the above-cited studies of modal improvisation.[2] In the realm of North India, for example, Widdess (2011) and Zadeh (2012) argue that large-scale form and small-scale chunks of repeated melody can be understood as schemas and formulaic language, respectively. Widdess’s article is important because it introduces two terms, “internal scalar expansion” and “consonant reinforcement” (Widdess 2011, 199–200, 205), to describe key features of North Indian ālap.[3] Zadeh’s article is noteworthy because she contends that Albert Lord’s ([1960] 2000) argument that Serbo-Croatian epic poets fashioned poetry out of “formulas” can also be made with regard to rāga improvisation. One lacuna in the academic quest to understand the nuances of modal improvisation is scholarship about a type of modal improvisation that could be considered “hybrid” because of its dual emphasis on rhythm and melody.

Music Curriculum: Elementary

FAIRBANKS NORTH STAR BOROUGH SCHOOL DISTRICT
MUSIC CURRICULUM
ELEMENTARY (K–6)
Draft Four: January 9, 2017
Table of Contents
General Music
  Kindergarten
  First Grade
  Second Grade
  Third Grade
  Fourth Grade
  Fifth Grade
  Sixth Grade
Choir

A STORY UNTOLD: A Suite for Solo Piano Inspired by the Heroic Journey

A STORY UNTOLD
A suite for solo piano inspired by the Heroic Journey
CHRISTOPHER E. FEIGE
A thesis submitted to the Faculty of Graduate Studies in partial fulfillment of the requirements for the degree of MASTER OF ARTS
Graduate Program in Music, York University, Toronto, Ontario
December, 2013
© Christopher Feige 2013
Abstract:
This thesis is an exploration of the role an audience might assume in a musical performance, and is a test of the hypothesis that listeners' engagement with a composition can be increased by giving them a role in the creation of a programmatic narrative frame for the music. A Story Untold is a piano suite in seven movements designed around stages of the Heroic Journey as described by Joseph Campbell in his book The Hero With a Thousand Faces. Seven stages from his list of seventeen were used as inspiration for musical compositions, and together these movements musically portray the story of a heroic journey. The story is unwritten, but each movement's title describes a key event in the hero's quest and together they outline a framework onto which listeners, by consciously or unconsciously filling in the details, can create their own story inspired by the music. The written component of this thesis contains reflections on Campbell's work as well as analyses of the individual movements.
DEDICATION
To those who have wished the hero in their favorite story was more like them, and vice versa, this is for you.
ACKNOWLEDGEMENTS:
I would like to express immense gratitude to Michael Coghlan and Al Henderson for the support they have provided me both as I completed this work and throughout my time at York University.

Music Craft: AMEB’s

GENERAL REQUIREMENTS – WRITTEN EXAMINATIONS
Introduction
The Music Craft syllabus is available for examination in the theoretical and aural aspects of music. Music Craft provides a graded series of examinations from Preliminary to Grade 6.
Written Examinations
The aural component of written examinations is administered by means of a recording. Before the commencement of the written examination, candidates will be given a short listening time in order to become familiar with the sounds to be used on the examination CD. When undertaking a written exam, candidates are encouraged to write neatly and clearly on examination papers. For the guidance of candidates, the maximum number of marks allotted to each question is shown on the examination paper.
[Figure: staff diagram naming pitches relative to ‘middle c’, including Great C, Great B, small c and small g.]
Scale degrees
The method of writing scale degree numbers in Music Craft is as follows:
• Scale degree numbers above the notes of the scale or melody
• Carets (ˆ) to be written over scale degree numbers.
Example: [notated example not recoverable from the extracted text]
AMEB’s Online Music Shop
The Australian Music Examinations Board’s publication catalogue contains well over 250 titles. The titles cover most AMEB syllabus areas:
• MUSIC CRAFT, THEORY OF MUSIC, MUSICIANSHIP
• AURAL TESTS AND SIGHT READING
• PIANO
• FOR LEISURE – PIANO, SINGING AND SAXOPHONE
• STRINGS
• WOODWIND
• GUITAR
• SINGING
• BRASS
• CONTEMPORARY POPULAR MUSIC (CPM)
AMEB’s catalogue of books and recordings covers the full range of repertoire from Classical to contemporary, Baroque to bossa nova.
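The caret convention for scale degrees can be illustrated with a short sketch. It rests on several assumptions: the key is major, note names are spelled as a letter plus an optional `#` or `b`, and no octave information is given. Under those assumptions, the hypothetical function `scale_degrees` labels each melody note with its degree number followed by a combining circumflex (U+0302), which most fonts draw over the digit. Music Craft's own printed example is not reproduced in this excerpt. Minor keys and chromatic notes are outside the scope of this sketch.

```python
# Hypothetical helpers; assumes major keys and names like "C", "F#", "Bb".
_LETTER_PC = {"C": 0, "D": 2, "E": 4, "F": 5, "G": 7, "A": 9, "B": 11}
_MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]  # semitones above the tonic
CARET = "\u0302"  # combining circumflex, drawn over the preceding digit


def pitch_class(note: str) -> int:
    """Pitch class 0-11; each '#' raises and each 'b' lowers a semitone."""
    pc = _LETTER_PC[note[0].upper()]
    for sign in note[1:]:
        pc += 1 if sign == "#" else -1 if sign == "b" else 0
    return pc % 12


def scale_degrees(melody: list[str], tonic: str) -> list[str]:
    """Label each note of a major-key melody with its degree and a caret."""
    t = pitch_class(tonic)
    labels = []
    for note in melody:
        step = (pitch_class(note) - t) % 12
        if step not in _MAJOR_STEPS:
            raise ValueError(f"{note} is not diatonic in {tonic} major")
        labels.append(str(_MAJOR_STEPS.index(step) + 1) + CARET)
    return labels


# Degrees 1 2 3 4 5 7 1 in G major, each printed with a caret
print(" ".join(scale_degrees(["G", "A", "B", "C", "D", "F#", "G"], "G")))
```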

Title Page Contents Acknowledgements Glossary

Cover Page
The handle http://hdl.handle.net/1887/45012 holds various files of this Leiden University dissertation.
Author: Berentsen, Niels
Title: Discantare Super Planum Cantum: new approaches to vocal polyphonic improvisation 1300-1470
Issue Date: 2016-12-14
Discantare Super Planum Cantum: New Approaches to Vocal Polyphonic Improvisation 1300-1470
Doctoral thesis, to obtain the degree of Doctor at Leiden University, on the authority of the Rector Magnificus prof. mr. C.J.J.M. Stolker, in accordance with the decision of the Doctorate Board, to be defended on Wednesday 14 December 2016 at 13:45 by Niels Berentsen, born in 's-Gravenhage (NL) in 1987.
Promotores: Prof. Frans de Ruiter (Universiteit Leiden); Prof. Gérard Geay (Conservatoire National Supérieur de Lyon); Dr. Fabrice Fitch (Royal Northern College of Music, Manchester); Stratton Bull (Katholieke Universiteit Leuven)
Doctorate committee: Prof. dr. Henk Borgdorff (Universiteit Leiden); Prof. dr. David Fallows (University of Manchester / Schola Cantorum Basiliensis); Dr. Adam Gilbert (Thornton School of Music, University of Southern California); Prof. Jean-Yves Haymoz (Haute École de Musique de Genève / Conservatoire National Supérieur de Lyon); Corina Marti (Schola Cantorum Basiliensis)
This dissertation was written in partial fulfilment of the requirements of the docARTES doctoral programme. The remaining requirement consists of a demonstration of the research results in the form of an artistic presentation. The docARTES programme is organised by the Orpheus Instituut in Ghent, in collaboration with Leiden University, the Hogeschool der Kunsten Den Haag, the Conservatorium van Amsterdam, the Katholieke Universiteit Leuven and the Lemmensinstituut.
Disclaimer: The author has made every effort to trace the copyright and owners of the illustrations reproduced in this dissertation.

Musicians' Guide

Fedora 14 Musicians' Guide: A guide to Fedora Linux's audio creation and music capabilities. Edition 1. Author: Christopher Antila. Copyright © 2010 Red Hat, Inc. and others. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https://fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

Making Music with ABC PLUS

Guido Gonzato
Making Music with ABC PLUS
A guide to the notation and its applications
Copyleft © Guido Gonzato, PhD, 2003–2012. Latest update: March 30, 2012. Typeset with LaTeX, with the help of the Jed editor and LaTeX4JED. This manual is released under the terms of the GNU Free Documentation License, http://www.gnu.org/licenses/fdl.html. The latest version of this manual is available at http://abcplus.sourceforge.net/#ABCGuide.
To Annarosa, Bruno, Lorenzo
Contents
About This Book
1 Music on the Computer with ABC PLUS
  1.1 Introduction
    1.1.1 Requirements
    1.1.2 Software
    1.1.3 Why ABC?
  1.2 Getting Started
    1.2.1 Installing the Programs
    1.2.2 ABC in a Nutshell
    1.2.3 Our First Score
2 Melody
  2.1 Notes and Symbols
    2.1.1 Pitch: A-G a-g , '
    2.1.2 Note Length: L:
    2.1.3 Rests and Spacing: z Z x y
    2.1.4 Accidentals: ^ = _
    2.1.5 Dotted Notes: < >
    2.1.6 Ties, Slurs, Staccato: - () .
    2.1.7 Tuplets: (n
    2.1.8 Chords: []
    2.1.9 Lyrics: W: w:
    2.1.10 Foreign Characters
      Never Type Accented Letters!
    2.1.11 Grace Notes: ~ {}
    2.1.12 Forcing Line Breaks: !
    2.1.13 Avoiding Line Breaks: \
    2.1.14 Inline Fields
  2.2 Music Properties
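The pitch conventions named in the contents (letters A-G and a-g, commas and apostrophes for octave shifts, `^` for a sharp) can be sketched as a converter from MIDI note numbers. This is a minimal illustration and not part of ABC PLUS or its tools. It assumes the common ABC convention that uppercase `C` is middle C (MIDI 60), with uppercase letters covering the octave from middle C and lowercase letters the octave above. It also spells every black key as a sharp. The name `midi_to_abc` is hypothetical.

```python
# Sharp spellings for the 12 pitch classes (an assumption: no flats)
SHARP_NAMES = ["C", "^C", "D", "^D", "E", "F", "^F", "G", "^G", "A", "^A", "B"]


def midi_to_abc(midi: int) -> str:
    """Convert a MIDI note number to an ABC pitch (hypothetical helper)."""
    octave, pitch_class = divmod(midi, 12)  # e.g. MIDI 60 -> (5, 0)
    name = SHARP_NAMES[pitch_class]
    accidental, letter = name[:-1], name[-1]
    if octave >= 6:
        # Lowercase from the C above middle C; each ' raises one more octave
        return accidental + letter.lower() + "'" * (octave - 6)
    # Uppercase for the octave from middle C; each , lowers one octave
    return accidental + letter + "," * (5 - octave)


print(" ".join(midi_to_abc(n) for n in [48, 60, 61, 67, 72, 84]))
# C, C ^C G c c'
```

Spelling with sharps only keeps the mapping one-to-one. A fuller converter would choose `^` or `_` according to the key signature.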