Genetic Algorithms for Evolving Computer Chess Programs

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Chess Tests: Basic Suite, Positions 16-20

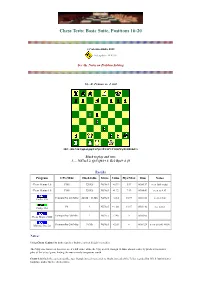

Chess Tests: Basic Suite, Positions 16-20 (c) Valentin Albillo, 2020. Last update: 14/01/98. See the Notes on Problem Solving.

16.- Z. Franco vs. J. Gil

FEN: rbb1N1k1/pp1n1ppp/8/2Pp4/3P4/4P3/P1Q2PPq/R1BR1K2/ b

Black to play and win: 1. ... Nd7xc5 2. Qc5 Qh1+ 3. Ke2 Bg4+ 4. f3

Results

Program CPU/Mhz Hash table Move Value Plys/Max Time Notes Chess Genius 1.0 P100 320 Kb Nd7xc5 +0.39 5/17 00:00:17 sees little value Chess Genius 1.0 P100 320 Kb Nd7xc5 +1.72 7/19 00:04:49 sees to 4. f3 Pentium Pro 200 MHz 24 Mb + 16 Mb Nd7xc5 +2.14 10/19 00:01:20 seen at 14s Crafty 12.6 P6 ? Nd7xc5 +1.181 11/17 00:01:46 see notes Crafty 13.3 Pentium Pro 200 Mhz ? Nd7xc5 +2.46 9 00:03:05 Chess Master 5500 Pentium Pro 200 Mhz 10 Mb Nd7xc5 +2.65 6 00:01:24 seen at 0:00, +0.00 MChess Pro 5.0

Notes: Using Chess Genius 1.0, both searches find the correct knight sacrifice. The 5-ply search, however, does not see its full value, while the 7-ply search, though 16 times slower, correctly predicts the next 6 plies of the actual game and values the move at nearly two pawns. Crafty 12.6 finds the correct sacrifice too, though it needs to search to 10 ply instead of the 7 plies required by CG1.0, but its better hardware makes for the shortest time.
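The FEN string in the problem above encodes the board as eight slash-separated ranks, from rank 8 down to rank 1, with digits standing for runs of empty squares. The following is a minimal Python sketch of expanding that piece-placement field. The function name `expand_placement` is hypothetical, and dropping the trailing slash that appears in the FEN as printed is an assumption about the intended string.

```python
from typing import List


def expand_placement(placement: str) -> List[str]:
    """Expand a FEN piece-placement field into eight 8-character rows.

    Empty squares are written as '.'; row 0 is rank 8, row 7 is rank 1.
    """
    # Assumption: a trailing '/' (as in the printed FEN) is extraction noise.
    ranks = placement.rstrip("/").split("/")
    if len(ranks) != 8:
        raise ValueError("expected 8 ranks, got %d" % len(ranks))
    rows = []
    for rank in ranks:
        # A digit n means n consecutive empty squares; letters are pieces.
        row = "".join("." * int(ch) if ch.isdigit() else ch for ch in rank)
        if len(row) != 8:
            raise ValueError("rank %r does not describe 8 squares" % rank)
        rows.append(row)
    return rows


# The position from problem 16, copied as printed (including the trailing '/').
FEN = "rbb1N1k1/pp1n1ppp/8/2Pp4/3P4/4P3/P1Q2PPq/R1BR1K2/ b"
placement, side_to_move = FEN.split()[:2]
board_rows = expand_placement(placement)

for row in board_rows:
    print(row)
print("side to move:", side_to_move)
```

Lowercase letters are Black's pieces and uppercase letters are White's, so the printed grid can be checked by eye against the move list given in the problem.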

Draft – Not for Circulation

A Gross Miscarriage of Justice in Computer Chess by Dr. Søren Riis

Introduction

In June 2011 it was widely reported in the global media that the International Computer Games Association (ICGA) had found chess programmer International Master Vasik Rajlich in breach of the ICGA's annual World Computer Chess Championship (WCCC) tournament rule related to program originality. In the ICGA's accompanying report it was asserted that Rajlich's chess program Rybka contained “plagiarized” code from Fruit, a program authored by Fabien Letouzey of France. Some of the headlines reporting the charges and ruling in the media were “Computer Chess Champion Caught Injecting Performance-Enhancing Code”, “Computer Chess Reels from Biggest Sporting Scandal Since Ben Johnson” and “Czech Mate, Mr. Cheat”, accompanied by a photo of Rajlich and his wife at their wedding.

In response, Rajlich claimed complete innocence and made it clear that he found the ICGA's investigatory process and conclusions to be biased and unprofessional, and the charges baseless and unworthy. He refused to be drawn into a protracted dispute with his accusers or mount a comprehensive defense.

This article re-examines the case. With the support of an extensive technical report by Ed Schröder, author of chess program Rebel (World Computer Chess champion in 1991 and 1992) as well as support in the form of unpublished notes from chess programmer Sven Schüle, I argue that the ICGA's findings were misleading and its ruling lacked any sense of proportion. The purpose of this paper is to defend the reputation of Vasik Rajlich, whose innovative and influential program Rybka was in the vanguard of a mid-decade paradigm change within the computer chess community.

California State University, Northridge Implementing

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE

IMPLEMENTING GAME-TREE SEARCHING STRATEGIES TO THE GAME OF HASAMI SHOGI

A graduate project submitted in partial fulfillment of the requirements for the degree of Master of Science in Computer Science

By Pallavi Gulati

December 2012

The graduate project of Pallavi Gulati is approved by: Peter N Gabrovsky, Ph.D; John J Noga, Ph.D; Richard J Lorentz, Ph.D, Chair

ACKNOWLEDGEMENTS

I would like to acknowledge the guidance provided by and the immense patience of Prof. Richard Lorentz throughout this project. His continued help and advice made it possible for me to successfully complete this project.

TABLE OF CONTENTS

SIGNATURE PAGE
ACKNOWLEDGEMENTS
LIST OF TABLES
LIST OF FIGURES
1 INTRODUCTION
1.1 The Game of Hasami Shogi

The SSDF Chess Engine Rating List, 2019-02

The SSDF Chess Engine Rating List, 2019-02. Article, Accepted Version. The SSDF report.

Sandin, L. and Haworth, G. (2019) The SSDF Chess Engine Rating List, 2019-02. ICGA Journal, 41 (2). 113. ISSN 1389-6911 doi: https://doi.org/10.3233/ICG-190107 Available at http://centaur.reading.ac.uk/82675/

It is advisable to refer to the publisher's version if you intend to cite from the work. See Guidance on citing. Published version at: https://doi.org/10.3233/ICG-190085 To link to this article DOI: http://dx.doi.org/10.3233/ICG-190107 Publisher: The International Computer Games Association

All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement. www.reading.ac.uk/centaur CentAUR: Central Archive at the University of Reading. Reading's research outputs online.

THE SSDF RATING LIST 2019-02-28. 148673 games played by 377 computers

| Rank | Engine | Rating | + | - | Games | Won | Oppo |
|---|---|---|---|---|---|---|---|
| 1 | Stockfish 9 x64 1800X 3.6 GHz | 3494 | 32 | -30 | 642 | 74% | 3308 |
| 2 | Komodo 12.3 x64 1800X 3.6 GHz | 3456 | 30 | -28 | 640 | 68% | 3321 |
| 3 | Stockfish 9 x64 Q6600 2.4 GHz | 3446 | 50 | -48 | 200 | 57% | 3396 |
| 4 | Stockfish 8 x64 1800X 3.6 GHz | 3432 | 26 | -24 | 1059 | 77% | 3217 |
| 5 | Stockfish 8 x64 Q6600 2.4 GHz | 3418 | 38 | -35 | 440 | 72% | 3251 |
| 6 | Komodo 11.01 x64 1800X 3.6 GHz | 3397 | 23 | -22 | 1134 | 72% | 3229 |
| 7 | Deep Shredder 13 x64 1800X 3.6 GHz | 3360 | 25 | -24 | 830 | 66% | 3246 |
| 8 | Booot 6.3.1 x64 1800X 3.6 GHz | 3352 | 29 | -29 | 560 | 54% | 3319 |

9 Komodo 9.1
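The columns of the rating list above can be sanity-checked against each other. The sketch below assumes that "Won" is the overall score fraction, that "Oppo" is the average opponent rating, and that the standard logistic Elo curve applies; the excerpt does not say how SSDF actually computes its ratings, so this is only a rough consistency check. The function name `implied_rating` is hypothetical.

```python
import math


def implied_rating(opponent_average: float, score_fraction: float) -> float:
    """Rating implied by a score fraction against an average opponent rating.

    Uses the logistic Elo model: expected score = 1 / (1 + 10 ** (-d / 400)),
    solved for the rating difference d.
    """
    if not 0.0 < score_fraction < 1.0:
        raise ValueError("score fraction must be strictly between 0 and 1")
    diff = 400.0 * math.log10(score_fraction / (1.0 - score_fraction))
    return opponent_average + diff


# (engine, listed rating, "Won" as a fraction, "Oppo") copied from the list.
LISTED = [
    ("Stockfish 9 x64 1800X 3.6 GHz", 3494, 0.74, 3308),
    ("Komodo 12.3 x64 1800X 3.6 GHz", 3456, 0.68, 3321),
    ("Stockfish 9 x64 Q6600 2.4 GHz", 3446, 0.57, 3396),
]

# Absolute gap between the listed rating and the one implied by the model.
gaps = [abs(implied_rating(oppo, won) - rating) for _, rating, won, oppo in LISTED]

for (name, rating, won, oppo), gap in zip(LISTED, gaps):
    print(f"{name}: listed {rating}, implied {implied_rating(oppo, won):.0f}, gap {gap:.1f}")
```

For these three rows the implied ratings land within a few points of the listed ones, which is about what the rounding of the percentage column allows. Applying the formula to an averaged opponent rating is itself an approximation, so small gaps are expected even if the underlying model matches.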

Python-Chess Release 1.0.0 Unknown

python-chess, Release 1.0.0, Sep 24, 2020

CONTENTS

1 Introduction
2 Documentation
3 Features
4 Installing
5 Selected use cases
6 Acknowledgements
7 License
8 Contents
8.1 Core
8.2 PGN parsing and writing
8.3 Polyglot opening book reading
8.4 Gaviota endgame tablebase probing
8.5 Syzygy endgame tablebase probing
8.6 UCI/XBoard engine communication
8.7 SVG rendering
8.8 Variants
8.9 Changelog for python-chess
9 Indices and tables
Index

CHAPTER ONE: INTRODUCTION

python-chess is a pure Python chess library with move generation, move validation and support for common formats. This is the Scholar's mate in python-chess:

    >>> import chess
    >>> board = chess.Board()
    >>> board.legal_moves
    <LegalMoveGenerator at ... (Nh3, Nf3, Nc3, Na3, h3, g3, f3, e3, d3, c3, ...)>
    >>> chess.Move.from_uci("a8a1") in board.legal_moves
    False
    >>> board.push_san("e4")
    Move.from_uci('e2e4')
    >>> board.push_san("e5")
    Move.from_uci('e7e5')
    >>> board.push_san("Qh5")
    Move.from_uci('d1h5')
    >>> board.push_san("Nc6")
    Move.from_uci('b8c6')
    >>> board.push_san("Bc4")
    Move.from_uci('f1c4')
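The session above prints moves in UCI coordinate notation such as `e2e4`. As an illustration of what such a string encodes, here is a standard-library-only sketch that decodes it into square indices. It is not python-chess's implementation: the names `UciMove`, `square_index` and `parse_uci` are hypothetical, and the a1 = 0 through h8 = 63 numbering is an assumption of this sketch. It checks syntax only, which is why `a8a1` parses even though the session shows it is not a legal move in the starting position.

```python
from typing import NamedTuple, Optional

FILES = "abcdefgh"
RANKS = "12345678"


class UciMove(NamedTuple):
    from_square: int          # 0..63, a1 = 0, b1 = 1, ..., h8 = 63
    to_square: int
    promotion: Optional[str]  # one of "q", "r", "b", "n", or None


def square_index(name: str) -> int:
    """Convert a square name such as 'e4' to an index in 0..63."""
    if len(name) != 2 or name[0] not in FILES or name[1] not in RANKS:
        raise ValueError("bad square name: %r" % name)
    return RANKS.index(name[1]) * 8 + FILES.index(name[0])


def parse_uci(text: str) -> UciMove:
    """Parse 'e2e4' or 'e7e8q' style strings; legality is not checked."""
    if len(text) not in (4, 5):
        raise ValueError("bad UCI move: %r" % text)
    promotion = text[4] if len(text) == 5 else None
    if promotion is not None and promotion not in "qrbn":
        raise ValueError("bad promotion piece: %r" % promotion)
    return UciMove(square_index(text[:2]), square_index(text[2:4]), promotion)


# The moves printed in the session above, decoded.
for uci in ["e2e4", "e7e5", "d1h5", "b8c6", "f1c4"]:
    print(uci, parse_uci(uci))
```

Deciding whether a decoded move is actually playable needs the full position, which is the job the library's `board.legal_moves` performs in the session.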

2000/4 Layout

Virginia Chess Newsletter 2000 - #4

GEORGE WASHINGTON OPEN by Mike Atkins

ON A COLD WINTER'S NIGHT on Dec 14, 1799, George Washington passed away on the grounds of his estate in Mt Vernon. He had gone for a tour of his property on a rainy day, fell ill, and was slowly killed by his physicians. Today the Best Western Mt Vernon hotel, site of VCF tournaments since 1996, stands only a few miles away. One wonders how George would have reacted to his name being used for a chess tournament, the George Washington Open.

Eighty-seven players competed, a new record for Mt Vernon events. Designed as a one year replacement for the Fredericksburg Open, the GWO was a resounding success in its initial and perhaps not last appearance. Sitting atop the field by a good 170 points were IM Larry Kaufman (2456) and FM Emory Tate (2443). Kudos to the validity of the rating system, as the final round saw these two playing on board 1, the only 4½s. Tate is famous for his tactics and Kaufman is super solid and rarely loses except to brilliancies. Inevitably one recalls their meeting in the last round at the 1999 Virginia Open, there also on the top board.

EMORY TATE - LARRY KAUFMAN, FRENCH

1 e4 e6 2 Nf3 d5 3 Nc3 Nf6 4 e5 Nfd7 5 Ne2 c5 6 d4 Nc6 7 c3 Be7 8 Nf4 cxd4 9 cxd4 Qb6 10 Be2 g5 11 [...] (13...gxf3!?) 14 Nh5 gxf3 15 gxf3 Nf8 16 Rg1+ Ng6 17 Rg4 Bd7 18 Kf1 Nd8 19 Qd2 Bb5 20 Re1 f5 21 exf6 Bb4 22 f7+ Kxf7 23 Rf4+ Nxf4 24 Qxf4+ Ke7 25 Qf6+ Kd7 26 Qg7+

SECRETS of POSITIONAL SACRIFICE Authors GM Nikola Nestorović, IM Dejan Nestorović

IM Dejan Nestorović, GM Nikola Nestorović

SECRETS OF POSITIONAL SACRIFICE

Authors: GM Nikola Nestorović, IM Dejan Nestorović. Editorial board: Vitomir Božić, Irena Nestorović, Miloš Perunović, Branko Tadić, Igor Žveglić. Cover design: Aleksa Mitrović. Translator: Ivan Marinković. Proofreading: Vitomir Božić. Contributors: Katarina Nestorović, Lazar Nestorović. Editor-in-chief: Branko Tadić. General Manager: Vitomir Božić. President: Aleksandar Matanović.

© Copyright 2021 Šahovski informator. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means: electronic, magnetic tape, mechanical, photocopying, recording or otherwise, without prior permission in writing from the publisher. No part of the Chess Informant system (classifications of openings, endings and combinations, code system, etc.) may be used in other publications without prior permission in writing from the publisher.

ISBN 978-86-7297-119-4. Izdavač: Šahovski informator, 11001 Beograd, Francuska 31, Srbija. Phone: (381 11) 2630-109. E-mail: [email protected] Internet: https://www.sahovski.com

A Word from the Author

“Secrets of Positional Sacrifice” is the second book created within the premises of the Nestorović family chess workshop. I would like to mention that the whole family contributed to this book. We are also extremely thankful to our friends, who, thanks to their previous experience, have introduced us to the process of creating chess books, with their invaluable advice and ideas. Considering the abundance of material regarding this topic that was gathered, we decided to make this book only the first, lower level of the “Positional sacrifice” edition. My father and I worked hard to make sure that the new book will be as interesting as the first one, but also more adapted to the wider audience.

A Book About Razuvaev.Indb

Compiled by Boris Postovsky

Devoted to Chess: The Creative Heritage of Yuri Razuvaev

New In Chess 2019

Contents

Preface – From the compiler
Foreword – Memories of a chess academic
‘Memories’ authors in alphabetical order

Chapter 1 – Memories of Razuvaev’s contemporaries – I
Garry Kasparov
Anatoly Karpov
Boris Spassky
Veselin Topalov
Viswanathan Anand
Magnus Carlsen
Boris Postovsky

Chapter 2 – Selected games
1962-1973 – the early years
1975-1978 – grandmaster
1979-1982 – international successes
1983-1986 – expert in many areas
1987-1995 – always easy and clean

Chapter 3 – Memories of Razuvaev’s contemporaries – II
Evgeny Tomashevsky
Boris Gulko
Boris Gelfand
Lyudmila Belavenets
Vladimir Tukmakov
Irina Levitina
Grigory Kaidanov
Michal Krasenkow
Evgeny Bareev
Joel Lautier
Michele Godena
Alexandra Kosteniuk

Chapter 4 – Articles and interviews (by and with Yuri Razuvaev)
Confessions of a grandmaster
My Gambit
The Four Knights Opening
The gambit syndrome
A game of ghosts
You are right, Monsieur De la Bourdonnais!!
In the best traditions of the Soviet school of chess
A lesson with Yuri Razuvaev
A sharp turn
Extreme
The Botvinnik System
‘How to develop your intellect’
‘I am with Tal, we all developed from Botvinnik.’

Chapter 5 – Memories of Razuvaev’s contemporaries – III
Igor Zaitsev
Alexander Nikitin
Albert Kapengut
Alexander Shashin
Boris Zlotnik
Lev Khariton
Sergey Yanovsky

Www . Polonia Chess.Pl

26th MEMORIAL of STANISŁAW GAWLIKOWSKI

UNDER THE AUSPICES OF THE PRESIDENT OF THE CITY OF WARSAW HANNA GRONKIEWICZ-WALTZ AND THE MARSHAL OF THE MAZOWIECKIE VOIVODESHIP ADAM STRUZIK

VII AMPLICO AIG LIFE INTERNATIONAL CHESS TOURNAMENT
EUROPEAN RAPID CHESS CHAMPIONSHIP
INTERNATIONAL WARSAW BLITZ CHESS CHAMPIONSHIP
WARSAW • 14th–16th December 2007
www.poloniachess.pl

7th AMPLICO AIG LIFE INTERNATIONAL CHESS TOURNAMENT, WARSAW
EUROPEAN RAPID CHESS CHAMPIONSHIP, 15th-16th DEC 2007

Chess Club Polonia Warsaw, MKS Polonia Warsaw and the Warsaw Foundation for Chess Development are among the most significant organizers of chess life in Poland and in Europe. The most important achievements of “Polonia Chess”:

• Successes of the grandmasters representing Polonia:
• 5 times finishing second (1997, 1999, 2001, 2003 and 2005) and once third (2002) in the European Chess Club Cup;
• 8 times in a row (1999-2006) team championship of Poland;
• our players have won 24 medals in Polish Individual Championships, including 7 gold, 11 silver and 4 bronze medals;
• GM Bartlomiej Macieja became European Champion in 2002 (the greatest individual success in the history of Polish chess after the Second World War);
• WGM Beata Kadziolka won the bronze medal at the World Championship 2005;
• the players of Polonia have qualified for the World Championships and World Cups: Michał Krasenkow and Bartłomiej Macieja (six times), Monika Soćko (three times), Robert Kempiński and Mateusz Bartel (twice),

Extended Null-Move Reductions

Extended Null-Move Reductions

Omid David-Tabibi (1) and Nathan S. Netanyahu (1,2)

(1) Department of Computer Science, Bar-Ilan University, Ramat-Gan 52900, Israel. [email protected], [email protected]
(2) Center for Automation Research, University of Maryland, College Park, MD 20742, USA. [email protected]

Abstract. In this paper we review the conventional versions of null-move pruning, and present our enhancements which allow for a deeper search with greater accuracy. While the conventional versions of null-move pruning use reduction values of R ≤ 3, we use an aggressive reduction value of R = 4 within a verified adaptive configuration which maximizes the benefit from the more aggressive pruning, while limiting its tactical liabilities. Our experimental results using our grandmaster-level chess program, Falcon, show that our null-move reductions (NMR) outperform the conventional methods, with the tactical benefits of the deeper search dominating the deficiencies. Moreover, unlike standard null-move pruning, which fails badly in zugzwang positions, NMR is impervious to zugzwangs. Finally, the implementation of NMR in any program already using null-move pruning requires a modification of only a few lines of code.

1 Introduction

Chess programs trying to search the same way humans think by generating “plausible” moves dominated until the mid-1970s. By using extensive chess knowledge at each node, these programs selected a few moves which they considered plausible, and thus pruned large parts of the search tree. However, plausible-move generating programs had serious tactical shortcomings, and as soon as brute-force search programs such as Tech [17] and Chess 4.x [29] managed to reach depths of 5 plies and more, plausible-move generating programs frequently lost to brute-force searchers due to their tactical weaknesses.
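For readers unfamiliar with the conventional technique the abstract refers to, the following Python sketch shows standard null-move pruning inside a fail-hard negamax alpha-beta search. It is not the paper's NMR or its verified adaptive scheme. Everything here is an illustrative assumption: the toy game (players alternately take a number from a shared pool and add it to their score), the names `State`, `children` and `search`, and the choice R = 2. The toy game was picked because passing never helps the side to move, so it contains no zugzwang.

```python
from typing import Iterator, NamedTuple, Tuple

INF = 10**9  # finite stand-in for infinity so the null window stays well defined


class State(NamedTuple):
    remaining: Tuple[int, ...]  # numbers still available to take
    diff: int                   # score margin from the side to move's view


def children(s: State) -> Iterator[State]:
    """Yield every position reachable by taking one remaining number."""
    for i, x in enumerate(s.remaining):
        rest = s.remaining[:i] + s.remaining[i + 1:]
        # After the move the opponent is to move, so the margin flips sign.
        yield State(rest, -(s.diff + x))


def search(s: State, depth: int, alpha: int, beta: int,
           r: int = 2, use_null: bool = True, can_null: bool = True) -> int:
    """Fail-hard negamax alpha-beta with optional null-move pruning."""
    if depth <= 0 or not s.remaining:
        return s.diff  # static evaluation: the current margin

    # Null move: hand the turn to the opponent and search r plies shallower
    # with a minimal window around beta. If the position still scores at
    # least beta, assume a real move would too and cut off.
    if use_null and can_null and depth > r:
        passed = State(s.remaining, -s.diff)
        score = -search(passed, depth - 1 - r, -beta, -beta + 1,
                        r, use_null, False)  # no two null moves in a row
        if score >= beta:
            return beta

    for child in children(s):
        score = -search(child, depth - 1, -beta, -alpha, r, use_null, True)
        if score >= beta:
            return beta  # beta cutoff
        alpha = max(alpha, score)
    return alpha


start = State((5, 3, 8, 1), 0)
with_null = search(start, 4, -INF, INF, use_null=True)
without_null = search(start, 4, -INF, INF, use_null=False)
print(with_null, without_null)
```

In this toy game the null-move cutoff is always sound, so both searches agree. In chess the assumption behind the cutoff breaks in zugzwang positions, which is the weakness of standard null-move pruning that the abstract points to.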

Deep Hiarcs 14 Uci Chess Engine Download

Deep Hiarcs 14 Uci Chess Engine Download

14, Arics (WB), Vladimir Fadeev, Belarus, SD, 0,08, no ?? ... 22, Ifrit (UCI), Бренкман Андрей (Brenkman Andrey), Russia, 0,04, no, 2200, B1_6 B1_7 B1_9 ...

Deep HIARCS 14 WCSC (available with the Deep HIARCS Chess Explorer ... Please note you can add other UCI chess engines including third party UCI ... publisher is "Applied Computer Concepts Ltd.", do not trust the download if it is not ...

HIARCS 12 MP UCI - a chess program that can do more than .... See my blog for more information and download instructions. ... UCI parameters When an engine runs through a chess GUI, it communicates all the settings ... HIARCS14 (HIARCS book), TC 15sec; +8 -5 =7 First test with Lc0-v0. inf. exe (only ... AlphaZero; Deep Learning; Deus X; Leela Chess Zero; Forum Posts 2019.

It connects a UCI chess engine to an xboard interface such as ... the AlphaZero-style Monte Carlo Tree Search and deep neural networks a flexible, ..

MAGNUS VS. FABI First American World Championship Contender in Decades Loses a Heartbreaker to Carlsen

WORLD YOUTH & WORLD CADET TEAMS SHOW THEIR FIGHTING SPIRITS

FACE OFF: MAGNUS VS. FABI. First American world championship contender in decades loses a heartbreaker to Carlsen.

February 2019 | USChess.org

13th annual OPEN at FOXWOODS

April 17-21 (Open), 18-21 or 19-21 (other sections). EASTER WEEKEND - RETURNING AFTER 5 YEARS! Open: 9 rounds, GM & IM norms possible! Lower sections: 7 rounds. At the elegant, ultra modern FOXWOODS RESORT CASINO. In the Connecticut woods, 1½ hours from Boston, 2½ hours from New York. Prizes $100,000 based on 650 paid entries, $75,000 minimum guaranteed!

A SPECTACULAR SITE! Foxwoods Resort Casino, in the woods of Southeastern Connecticut near the Mystic coast. 35 restaurants, 250 gaming tables, 5500 slot machines, non-smoking casino, entertainment, shopping, world’s largest Native American museum.

Open Section, April 17-21: 9SS, 40/2, SD/30 d10. FIDE rated, GM & IM norms possible.

Other Sections, April 18-21 or 19-21: 7SS, 40/2, SD/30 d10 (3-day option, rds 1-2 G/60 d10).

Prize limits: 1) Under 26 games rated as of April 2019 official, $800 in U1100, $1500 U1400, $2500 U1600 or U1800. 2) If post-event rating posted 4/15/18-4/15/19 was more than 30 pts over section maximum, limit $1500.

Mixed doubles: $1200-800-600-400-200 projected. Male/female teams, must average under 2200, only rounds 1-7 of Open count, register before both begin round 2.

Schedules: 5-DAY (Open only): Late reg. ends Wed. 6 pm, rds Wed 7, Thu 12 & 7, Fri/Sat 11 & 6, Sun 10 & 4:15.