Chapter-5 STABILITY of NON-AUTONOMOUS SYSTEMS

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Solution Bounds, Stability and Attractors' Estimates of Some Nonlinear Time-Varying Systems

Solution Bounds, Stability and Attractors’ Estimates of Some Nonlinear Time-Varying Systems. Mark A. Pinsky and Steve Koblik. Abstract. Estimation of solution norms and stability for time-dependent nonlinear systems is ubiquitous in numerous applied and control problems. Yet, practically valuable results are rare in this area. This paper develops a novel approach, which bounds the solution norms, derives the corresponding stability criteria, and estimates the trapping/stability regions for a broad class of the corresponding systems. Our inferences rest on deriving a scalar differential inequality for the norms of solutions to the initial systems. Utility of the Lipschitz inequality linearizes the associated auxiliary differential equation and yields both the upper bounds for the norms of solutions and the relevant stability criteria. To refine these inferences, we introduce a nonlinear extension of the Lipschitz inequality, which improves the developed bounds and estimates the stability basins and trapping regions for the corresponding systems. Finally, we confirm the theoretical results in representative simulations. Key Words. Comparison principle, Lipschitz condition, stability criteria, trapping/stability regions, solution bounds. AMS subject classification. 34A34, 34C11, 34C29, 34C41, 34D08, 34D10, 34D20, 34D45. 1. INTRODUCTION. We are going to study a system defined by the equation $\dot{x} = A(t)x + f(t, x) + F(t)$, $t \in [t_0, \infty)$, $x \in \mathbb{R}^n$, $f(t, 0) = 0$ (1), where matrix $A \in \mathbb{R}^{n \times n}$ and functions $f : [t_0, \infty) \times \mathbb{R}^n \to \mathbb{R}^n$ and $F : [t_0, \infty) \to \mathbb{R}^n$ are continuous and bounded, and $A(t)$ is also continuously differentiable. It is assumed that the solution, $x(t, x_0)$, to the initial value problem, $x(t_0, x_0) = x_0$, is uniquely defined for $t \ge t_0$. Note that the pertinent conditions can be found, e.g., in [1] and [2].

Strange Attractors and Classical Stability Theory

Nonlinear Dynamics and Systems Theory, 8 (1) (2008) 49–96. Strange Attractors and Classical Stability Theory. G. Leonov, St. Petersburg State University, Universitetskaya av., 28, Petrodvorets, 198504 St. Petersburg, Russia. Received: November 16, 2006; Revised: December 15, 2007. Abstract: Definitions of global attractor, B-attractor and weak attractor are introduced. Relationships between Lyapunov stability, Poincaré stability and Zhukovsky stability are considered. Perron effects are discussed. Stability and instability criteria by the first approximation are obtained. Lyapunov direct method in dimension theory is introduced. For the Lorenz system necessary and sufficient conditions of existence of homoclinic trajectories are obtained. Keywords: Attractor, instability, Lyapunov exponent, stability, Poincaré section, Hausdorff dimension, Lorenz system, homoclinic bifurcation. Mathematics Subject Classification (2000): 34C28, 34D45, 34D20. 1 Introduction. In almost any solid survey or book on chaotic dynamics, one encounters notions from classical stability theory such as Lyapunov exponent and characteristic exponent. But the effect of sign inversion in the characteristic exponent during linearization is seldom mentioned. This effect was discovered by Oscar Perron [1], an outstanding German mathematician. The present survey sets forth Perron’s results and their further development, see [2]–[4]. It is shown that Perron effects may occur on the boundaries of a flow of solutions that is stable by the first approximation. Inside the flow, stability is completely determined by the negativeness of the characteristic exponents of linearized systems. It is often said that the defining property of strange attractors is the sensitivity of their trajectories with respect to the initial data. But how is this property connected with the classical notions of instability? For continuous systems, it was necessary to remember the almost forgotten notion of Zhukovsky instability.

Chapter 8 Stability Theory

Chapter 8 Stability theory. We discuss properties of solutions of a first order two dimensional system, and stability theory for a special class of linear systems. We denote the independent variable by ‘t’ in place of ‘x’, and x, y denote dependent variables. Let I ⊆ ℝ be an interval, and Ω ⊆ ℝ² be a domain. Let us consider the system $\frac{dx}{dt} = F(t, x, y)$, $\frac{dy}{dt} = G(t, x, y)$ (8.1), where the functions are defined on I × Ω, and are locally Lipschitz w.r.t. variable (x, y) ∈ Ω. Definition 8.1 (Autonomous system) A system of ODE having the form (8.1) is called an autonomous system if the functions F(t, x, y) and G(t, x, y) are constant w.r.t. variable t. That is, $\frac{dx}{dt} = F(x, y)$, $\frac{dy}{dt} = G(x, y)$ (8.2). Definition 8.2 A point (x0, y0) ∈ Ω is said to be a critical point of the autonomous system (8.2) if F(x0, y0) = G(x0, y0) = 0 (8.3). A critical point is also called an equilibrium point, a rest point. Definition 8.3 Let (x(t), y(t)) be a solution of a two-dimensional (planar) autonomous system (8.2). The trace of (x(t), y(t)) as t varies is a curve in the plane. This curve is called trajectory. Remark 8.4 (On solutions of autonomous systems) (i) Two different solutions may represent the same trajectory. For, (1) If (x1(t), y1(t)) defined on an interval J is a solution of the autonomous system (8.2), then the pair of functions (x2(t), y2(t)) defined by (x2(t), y2(t)) := (x1(t − s), y1(t − s)), for t ∈ s + J (8.4) is a solution on interval s + J, for every arbitrary but fixed s ∈ ℝ.
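The time-shift property in Remark 8.4 can be checked numerically. The sketch below is only an illustration under stated assumptions: the two example systems, the hand-written RK4 integrator, and the names `rk4`, `autonomous`, `non_autonomous` and `shift_gap` are hypothetical and do not come from the excerpt. It integrates the same initial state starting at time 0 and at time s; for an autonomous right-hand side the two end states should agree, while a right-hand side that depends on t generally gives different end states.

```python
import math

def rk4(f, t0, state, t_end, n=2000):
    """Integrate a planar system (dx/dt, dy/dt) = f(t, x, y) with classical RK4."""
    h = (t_end - t0) / n
    t = t0
    x, y = state
    for _ in range(n):
        k1 = f(t, x, y)
        k2 = f(t + h / 2, x + h / 2 * k1[0], y + h / 2 * k1[1])
        k3 = f(t + h / 2, x + h / 2 * k2[0], y + h / 2 * k2[1])
        k4 = f(t + h, x + h * k3[0], y + h * k3[1])
        x += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        y += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += h
    return x, y

def autonomous(t, x, y):
    # Illustrative choice: F(x, y) = y, G(x, y) = -x, with critical point (0, 0).
    return (y, -x)

def non_autonomous(t, x, y):
    # Same system with an explicit dependence on t (illustrative forcing term).
    return (y, -x + math.cos(t))

def shift_gap(f, s, duration=3.0, start=(1.0, 0.0)):
    """Distance between end states when the same start is launched at time 0 and at time s."""
    a = rk4(f, 0.0, start, duration)
    b = rk4(f, s, start, s + duration)
    return math.hypot(a[0] - b[0], a[1] - b[1])

if __name__ == "__main__":
    print("autonomous gap:    ", shift_gap(autonomous, 1.7))
    print("non-autonomous gap:", shift_gap(non_autonomous, 1.7))
```

The autonomous gap is at round-off level, which matches the statement that a shifted solution is again a solution; the non-autonomous gap is of order one, which is why the starting time matters once F and G depend on t.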

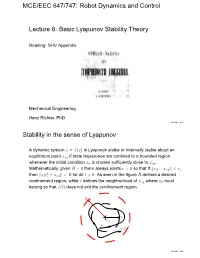

Robot Dynamics and Control Lecture 8: Basic Lyapunov Stability Theory

MCE/EEC 647/747: Robot Dynamics and Control. Lecture 8: Basic Lyapunov Stability Theory. Reading: SHV Appendix. Mechanical Engineering, Hanz Richter, PhD. MCE503 – p.1/17. Stability in the sense of Lyapunov: A dynamic system ẋ = f(x) is Lyapunov stable or internally stable about an equilibrium point xeq if state trajectories are confined to a bounded region whenever the initial condition x0 is chosen sufficiently close to xeq. Mathematically, given R > 0 there always exists r > 0 so that if ||x0 − xeq|| < r, then ||x(t) − xeq|| < R for all t > 0. As seen in the figure R defines a desired confinement region, while r defines the neighborhood of xeq where x0 must belong so that x(t) does not exit the confinement region. [Figure: confinement region of radius R and neighborhood of radius r around xeq, with x0 and the trajectory x(t).] Stability in the sense of Lyapunov... Note: Lyapunov stability does not require ||x(t)|| to converge to ||xeq||. The stronger definition of asymptotic stability requires that ||x(t)|| → ||xeq|| as t → ∞. Input-Output stability (BIBO) does not imply Lyapunov stability. The system can be BIBO stable but have unbounded states that do not cause the output to be unbounded (for example take x1(t) → ∞, with y = Cx = [0 1]x). The definition is difficult to use to test the stability of a given system. Instead, we use Lyapunov’s stability theorem, also called Lyapunov’s direct method. This theorem is only a sufficient condition, however. When the test fails, the results are inconclusive. It’s still the best tool available to evaluate and ensure the stability of nonlinear systems.
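As a rough illustration of the direct method mentioned above, the following sketch evaluates a candidate Lyapunov function along a simulated trajectory. Everything in it is an assumption made for illustration and is not taken from the lecture: the example system ẋ1 = x2, ẋ2 = −x1 − x2, the candidate V(x) = (x1² + x2²)/2, and the helper names `f`, `V`, `rk4_step` and `lyapunov_values`. For this system V̇ = −x2² ≤ 0, so V should not increase along trajectories, which in turn keeps ||x(t)|| ≤ ||x0|| (the definition holds with r = R).

```python
def f(x):
    """Right-hand side of the illustrative system: x1' = x2, x2' = -x1 - x2."""
    x1, x2 = x
    return (x2, -x1 - x2)

def V(x):
    """Candidate Lyapunov function; along solutions dV/dt = -x2**2 <= 0."""
    return 0.5 * (x[0] ** 2 + x[1] ** 2)

def rk4_step(x, h):
    """One classical Runge-Kutta step for the autonomous system x' = f(x)."""
    def shifted(base, direction, scale):
        return tuple(b + scale * d for b, d in zip(base, direction))
    k1 = f(x)
    k2 = f(shifted(x, k1, h / 2))
    k3 = f(shifted(x, k2, h / 2))
    k4 = f(shifted(x, k3, h))
    return tuple(
        xi + h / 6 * (a + 2 * b + 2 * c + d)
        for xi, a, b, c, d in zip(x, k1, k2, k3, k4)
    )

def lyapunov_values(x0, t_end=10.0, h=0.01):
    """Return V sampled along the numerical trajectory starting at x0."""
    x = tuple(x0)
    values = [V(x)]
    for _ in range(round(t_end / h)):
        x = rk4_step(x, h)
        values.append(V(x))
    return values

if __name__ == "__main__":
    vals = lyapunov_values((0.3, -0.2))
    non_increasing = all(b <= a + 1e-9 for a, b in zip(vals, vals[1:]))
    print("V non-increasing along trajectory:", non_increasing)
    print("V(start), V(end):", vals[0], vals[-1])
```

A numerical check like this can only support a candidate V on the sampled trajectories; it does not replace the analytical sign condition on V̇ that the theorem requires.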

Exponential Stability for the Resonant D’Alembert Model of Celestial Mechanics

DISCRETE AND CONTINUOUS DYNAMICAL SYSTEMS, Volume 12, Number 4, April 2005, pp. 569–594. Website: http://aimSciences.org. EXPONENTIAL STABILITY FOR THE RESONANT D'ALEMBERT MODEL OF CELESTIAL MECHANICS. Luca Biasco and Luigi Chierchia, Dipartimento di Matematica, Università “Roma Tre”, Largo S. L. Murialdo 1, 00146 Roma (Italy). (Communicated by Carlangelo Liverani) Abstract. We consider the classical D'Alembert Hamiltonian model for a rotationally symmetric planet revolving on Keplerian ellipse around a fixed star in an almost exact “day/year” resonance and prove that, notwithstanding proper degeneracies, the system is stable for exponentially long times, provided the oblateness and the eccentricity are suitably small. 1. Introduction. Perturbative techniques are a basic tool for a deep understanding of the long time behavior of conservative Dynamical Systems. Such techniques, which include the so-called KAM and Nekhoroshev theories (compare [1] for generalities), have, by now, reached a high degree of sophistication and have been applied to a great corpus of different situations (including infinite dimensional systems and PDE's). KAM and Nekhoroshev techniques work under suitable non-degeneracy assumptions (e.g, invertibility of the frequency map for KAM or steepness for Nekhoroshev: see [1]); however such assumptions are strongly violated exactly in those typical examples of Celestial Mechanics, which (as well known) were the main motivation for the Dynamical System investigations of Poincaré, Birkhoff, Siegel, Kolmogorov, Arnold, Moser,... In this paper we shall consider the day/year (or spin/orbit) resonant planetary D'Alembert model (see [10]) and will address the problem of the long time stability for such a model.

Adding Integral Action for Open-Loop Exponentially Stable Semigroups and Application to Boundary Control of PDE Systems A

Adding integral action for open-loop exponentially stable semigroups and application to boundary control of PDE systems. A. Terrand-Jeanne, V. Andrieu, V. Dos Santos Martins, C.-Z. Xu. Abstract: The paper deals with output feedback stabilization of exponentially stable systems by an integral controller. We propose appropriate Lyapunov functionals to prove exponential stability of the closed-loop system. An example of parabolic PDE (partial differential equation) systems and an example of hyperbolic systems are worked out to show how exponentially stabilizing integral controllers are designed. The proof is based on a novel Lyapunov functional construction which employs the forwarding techniques. I. INTRODUCTION. The use of integral action to achieve output regulation and cancel constant disturbances for infinite dimensional systems has been initiated by S. Pojohlainen in [12]. It has been extended in a series of papers by the same author (see [13] ... of boundary regulation is considered for a general class of hyperbolic PDE systems in Section III. The proof of the theorem obtained in the context of hyperbolic systems is given in Section IV. This paper is an extended version of the paper presented in [16]. Compare to this preliminary version all proofs are given and moreover more general classes of hyperbolic systems are considered. Notation: subscripts t, s, tt, ... denote the first or second derivative w.r.t. the variable t or s. For an integer n, $\mathrm{Id}_n$ is the identity matrix in $\mathbb{R}^{n \times n}$. Given an operator A over a Hilbert space, $A^*$ denotes the adjoint operator. $D_n$ is the set of diagonal matrix in $\mathbb{R}^{n \times n}$. II.

Chapter 8 Nonlinear Systems

Chapter 8 Nonlinear systems. 8.1 Linearization, critical points, and equilibria. Note: 1 lecture, §6.1–§6.2 in [EP], §9.2–§9.3 in [BD]. Except for a few brief detours in chapter 1, we considered mostly linear equations. Linear equations suffice in many applications, but in reality most phenomena require nonlinear equations. Nonlinear equations, however, are notoriously more difficult to understand than linear ones, and many strange new phenomena appear when we allow our equations to be nonlinear. Not to worry, we did not waste all this time studying linear equations. Nonlinear equations can often be approximated by linear ones if we only need a solution “locally,” for example, only for a short period of time, or only for certain parameters. Understanding linear equations can also give us qualitative understanding about a more general nonlinear problem. The idea is similar to what you did in calculus in trying to approximate a function by a line with the right slope. In § 2.4 we looked at the pendulum of length L. The goal was to solve for the angle θ(t) as a function of the time t. The equation for the setup is the nonlinear equation $\theta'' + \frac{g}{L} \sin\theta = 0$. Instead of solving this equation, we solved the rather easier linear equation $\theta'' + \frac{g}{L} \theta = 0$. While the solution to the linear equation is not exactly what we were looking for, it is rather close to the original, as long as the angle θ is small and the time period involved is short. You might ask: Why don’t we just solve the nonlinear problem? Well, it might be very difficult, impractical, or impossible to solve analytically, depending on the equation in question.
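The claim that the linearized pendulum stays close to the nonlinear one for small angles and short times can be illustrated numerically. The sketch below is a minimal example under assumptions that are not in the excerpt: the values g = 9.81 and L = 1, the hand-written RK4 integrator, a pendulum released from rest, and the helper names `rk4_step`, `simulate`, `nonlinear`, `linear` and `gap`. It integrates both equations from the same initial angle and reports how far apart the two angles are at the final time.

```python
import math

G = 9.81  # gravitational acceleration (assumed value)
L = 1.0   # pendulum length (assumed value)

def nonlinear(t, y):
    """theta'' + (g/L) sin(theta) = 0, written as a first-order system."""
    theta, omega = y
    return [omega, -(G / L) * math.sin(theta)]

def linear(t, y):
    """theta'' + (g/L) theta = 0, the small-angle linearization."""
    theta, omega = y
    return [omega, -(G / L) * theta]

def rk4_step(f, t, y, h):
    """One classical Runge-Kutta step for y' = f(t, y)."""
    k1 = f(t, y)
    k2 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k1)])
    k3 = f(t + h / 2, [yi + h / 2 * ki for yi, ki in zip(y, k2)])
    k4 = f(t + h, [yi + h * ki for yi, ki in zip(y, k3)])
    return [
        yi + h / 6 * (a + 2 * b + 2 * c + d)
        for yi, a, b, c, d in zip(y, k1, k2, k3, k4)
    ]

def simulate(f, y0, t_end, h=1e-3):
    """Integrate from t = 0 to t_end and return the final state."""
    t, y = 0.0, list(y0)
    for _ in range(round(t_end / h)):
        y = rk4_step(f, t, y, h)
        t += h
    return y

def gap(theta0, t_end=2.0):
    """Difference in angle between the two models at time t_end, released from rest."""
    a = simulate(nonlinear, [theta0, 0.0], t_end)[0]
    b = simulate(linear, [theta0, 0.0], t_end)[0]
    return abs(a - b)

if __name__ == "__main__":
    for theta0 in (0.05, 0.5, 1.0):
        print(f"theta0 = {theta0:4.2f} rad, |nonlinear - linear| = {gap(theta0):.2e}")
```

For a small release angle the two models are nearly indistinguishable over a couple of seconds, while for an angle near one radian the gap is clearly visible, mainly because the nonlinear pendulum has a longer period.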

Exponential Stability of Distributed Parameter Systems Governed by Symmetric Hyperbolic Partial Differential Equations Using Lyapunov’s Second Method

ESAIM: COCV 15 (2009) 403–425. ESAIM: Control, Optimisation and Calculus of Variations. DOI: 10.1051/cocv:2008033. www.esaim-cocv.org. EXPONENTIAL STABILITY OF DISTRIBUTED PARAMETER SYSTEMS GOVERNED BY SYMMETRIC HYPERBOLIC PARTIAL DIFFERENTIAL EQUATIONS USING LYAPUNOV’S SECOND METHOD. Abdoua Tchousso, Thibaut Besson and Cheng-Zhong Xu. Abstract. In this paper we study asymptotic behaviour of distributed parameter systems governed by partial differential equations (abbreviated to PDE). We first review some recently developed results on the stability analysis of PDE systems by Lyapunov’s second method. On constructing Lyapunov functionals we prove next an asymptotic exponential stability result for a class of symmetric hyperbolic PDE systems. Then we apply the result to establish exponential stability of various chemical engineering processes and, in particular, exponential stability of heat exchangers. Through concrete examples we show how Lyapunov’s second method may be extended to stability analysis of nonlinear hyperbolic PDE. Meanwhile we explain how the method is adapted to the framework of Banach spaces $L^p$, $1 < p \le \infty$. Mathematics Subject Classification. 37L15, 37L45, 93C20. Received November 2, 2005. Revised September 4, 2006 and December 7, 2007. Published online May 30, 2008. 1. Introduction. Lyapunov’s second method, called also Lyapunov’s direct method, is one of efficient and classical methods for studying asymptotic behavior and stability of the dynamical systems governed by ordinary differential equations. It dates back to Lyapunov’s thesis published at the beginning of the twentieth century [22]. The applications of the method and its theoretical development have been marked by the works of LaSalle and Lefschetz in the past [19,35] and numerous successful achievements later in the control of mechanical, robotic and aerospace systems (cf.

Introduction to Mathematical Modeling Difference Equations, Differential Equations, & Linear Algebra (The First Course of a Two-Semester Sequence)

Introduction to Mathematical Modeling: Difference Equations, Differential Equations, & Linear Algebra (The First Course of a Two-Semester Sequence). Dr. Eric R. Sullivan, [email protected], Department of Mathematics, Carroll College, Helena, MT. Content Last Updated: January 8, 2018. ©Eric Sullivan. Some Rights Reserved. This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License. You may copy, distribute, display, remix, rework, and perform this copyrighted work, but only if you give credit to Eric Sullivan, and all derivative works based upon it must be published under the Creative Commons Attribution-NonCommercial-Share Alike 4.0 United States License. Please attribute this work to Eric Sullivan, Mathematics Faculty at Carroll College, [email protected]. To view a copy of this license, visit https://creativecommons.org/licenses/by-nc-sa/4.0/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Contents: 0 To the Student and the Instructor; 0.1 An Inquiry Based Approach; 0.2 Online Texts and Other Resources; 0.3 To the Instructor; 1 Fundamental Notions from Calculus; 1.1 Sections from Active Calculus; 1.2 Modeling Explorations with Calculus; 2 An Introduction to Linear Algebra; 2.1 Why Linear Algebra?; 2.2 Matrix Operations and Gaussian Elimination; 2.3 Gaussian Elimination: A First Look At Solving Systems; 2.4 Systems of Linear Equations; 2.5 Linear Combinations; 2.6 Inverses and Determinants; 2.6.1 Inverses; 2.6.2 Determinants

Stability Analysis for a Class of Partial Differential Equations Via

Stability Analysis for a Class of Partial Differential Equations via Semidefinite Programming. Giorgio Valmorbida, Mohamadreza Ahmadi, Antonis Papachristodoulou. Abstract: This paper studies scalar integral inequalities in one-dimensional bounded domains with polynomial integrands. We propose conditions to verify the integral inequalities in terms of differential matrix inequalities. These conditions allow for the verification of the inequalities in subspaces defined by boundary values of the dependent variables. The results are applied to solve integral inequalities arising from the Lyapunov stability analysis of partial differential equations. Examples illustrate the results. Index Terms: Distributed Parameter Systems, PDEs, Stability Analysis, Sum of Squares. ... terms of differential matrix inequalities. The proposed method is then applied to study the stability of systems of inhomogeneous PDEs, using weighted $H^q$ norms (on Hilbert spaces) as LF candidates. For the case of integrands that are polynomial in the independent variables as well, the differential matrix inequality formulated by the proposed method becomes a polynomial matrix inequality. We then exploit the semidefinite programming (SDP) formulation [21] of optimization problems with linear objective functions and polynomial (sum-of-squares (SOS)) constraints [3] to obtain a numerical solution to the integral inequalities. Several analysis and feedback design problems have been studied using polynomial optimization: the stability of time-delay systems [23], synthesis control laws [24], [29], applied to optimal control law design [15] and system analysis [11]. In the context of PDEs, preliminary results have been presented in [19], where a stability test was formulated as a SOS programme (SOSP) and in [27] where quadratic integrands were studied.

Analysis of ODE Models

Analysis of ODE Models. P. Howard, Fall 2009. Contents: 1 Overview; 2 Stability; 2.1 The Phase Plane; 2.2 Linearization; 2.3 Linearization and the Jacobian Matrix; 2.4 Brief Review of Linear Algebra; 2.5 Solving Linear First-Order Systems; 2.6 Stability and Eigenvalues; 2.7 Linear Stability with MATLAB; 2.8 Application to Maximum Sustainable Yield; 2.9 Nonlinear Stability Theorems; 2.10 Solving ODE Systems with matrix exponentiation; 2.11 Taylor’s Formula for Functions of Multiple Variables; 2.12 Proof of Part 1 of Poincare–Perron; 3 Uniqueness Theory; 4 Existence Theory. 1 Overview. In these notes we consider the three main aspects of ODE analysis: (1) existence theory, (2) uniqueness theory, and (3) stability theory. In our study of existence theory we ask the most basic question: are the ODE we write down guaranteed to have solutions? Since in practice we solve most ODE numerically, it’s possible that if there is no theoretical solution the numerical values given will have no genuine relation with the physical system we want to analyze. In our study of uniqueness theory we assume a solution exists and ask if it is the only possible solution. If we are solving an ODE numerically we will only get one solution, and if solutions are not unique it may not be the physically interesting solution. While it’s standard in advanced ODE courses to study existence and uniqueness first and stability later, we will start with stability in these notes, because it’s the one most directly linked with the modeling process.

Mathematical Modeling of Control Systems

2 Mathematical Modeling of Control Systems. 2–1 INTRODUCTION. In studying control systems the reader must be able to model dynamic systems in mathematical terms and analyze their dynamic characteristics. A mathematical model of a dynamic system is defined as a set of equations that represents the dynamics of the system accurately, or at least fairly well. Note that a mathematical model is not unique to a given system. A system may be represented in many different ways and, therefore, may have many mathematical models, depending on one’s perspective. The dynamics of many systems, whether they are mechanical, electrical, thermal, economic, biological, and so on, may be described in terms of differential equations. Such differential equations may be obtained by using physical laws governing a particular system, for example, Newton’s laws for mechanical systems and Kirchhoff’s laws for electrical systems. We must always keep in mind that deriving reasonable mathematical models is the most important part of the entire analysis of control systems. Throughout this book we assume that the principle of causality applies to the systems considered. This means that the current output of the system (the output at time t=0) depends on the past input (the input for t<0) but does not depend on the future input (the input for t>0). Mathematical Models. Mathematical models may assume many different forms. Depending on the particular system and the particular circumstances, one mathematical model may be better suited than other models.