Analysis of ODE Models

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Solution Bounds, Stability and Attractors' Estimates of Some Nonlinear Time-Varying Systems

1 Solution Bounds, Stability and Attractors’ Estimates of Some Nonlinear Time-Varying Systems Mark A. Pinsky and Steve Koblik Abstract. Estimation of solution norms and stability for time-dependent nonlinear systems is ubiquitous in numerous applied and control problems. Yet, practically valuable results are rare in this area. This paper develops a novel approach, which bounds the solution norms, derives the corresponding stability criteria, and estimates the trapping/stability regions for a broad class of the corresponding systems. Our inferences rest on deriving a scalar differential inequality for the norms of solutions to the initial systems. Utility of the Lipschitz inequality linearizes the associated auxiliary differential equation and yields both the upper bounds for the norms of solutions and the relevant stability criteria. To refine these inferences, we introduce a nonlinear extension of the Lipschitz inequality, which improves the developed bounds and estimates the stability basins and trapping regions for the corresponding systems. Finally, we conform the theoretical results in representative simulations. Key Words. Comparison principle, Lipschitz condition, stability criteria, trapping/stability regions, solution bounds AMS subject classification. 34A34, 34C11, 34C29, 34C41, 34D08, 34D10, 34D20, 34D45 1. INTRODUCTION. We are going to study a system defined by the equation n (1) xAtxftxFttt , , [0 , ), x , ft ,0 0 where matrix A nn and functions ft:[ , ) nn and F : n are continuous and bounded, and At is also continuously differentiable. It is assumed that the solution, x t, x0 , to the initial value problem, x t0, x 0 x 0 , is uniquely defined for tt0 . Note that the pertained conditions can be found, e.g., in [1] and [2]. -

Strange Attractors and Classical Stability Theory

Nonlinear Dynamics and Systems Theory, 8 (1) (2008) 49–96 Strange Attractors and Classical Stability Theory G. Leonov ∗ St. Petersburg State University Universitetskaya av., 28, Petrodvorets, 198504 St.Petersburg, Russia Received: November 16, 2006; Revised: December 15, 2007 Abstract: Definitions of global attractor, B-attractor and weak attractor are introduced. Relationships between Lyapunov stability, Poincare stability and Zhukovsky stability are considered. Perron effects are discussed. Stability and instability criteria by the first approximation are obtained. Lyapunov direct method in dimension theory is introduced. For the Lorenz system necessary and sufficient conditions of existence of homoclinic trajectories are obtained. Keywords: Attractor, instability, Lyapunov exponent, stability, Poincar´esection, Hausdorff dimension, Lorenz system, homoclinic bifurcation. Mathematics Subject Classification (2000): 34C28, 34D45, 34D20. 1 Introduction In almost any solid survey or book on chaotic dynamics, one encounters notions from classical stability theory such as Lyapunov exponent and characteristic exponent. But the effect of sign inversion in the characteristic exponent during linearization is seldom mentioned. This effect was discovered by Oscar Perron [1], an outstanding German math- ematician. The present survey sets forth Perron’s results and their further development, see [2]–[4]. It is shown that Perron effects may occur on the boundaries of a flow of solutions that is stable by the first approximation. Inside the flow, stability is completely determined by the negativeness of the characteristic exponents of linearized systems. It is often said that the defining property of strange attractors is the sensitivity of their trajectories with respect to the initial data. But how is this property connected with the classical notions of instability? For continuous systems, it was necessary to remember the almost forgotten notion of Zhukovsky instability. -

Chapter 8 Stability Theory

Chapter 8 Stability theory We discuss properties of solutions of a first order two dimensional system, and stability theory for a special class of linear systems. We denote the independent variable by ‘t’ in place of ‘x’, and x,y denote dependent variables. Let I ⊆ R be an interval, and Ω ⊆ R2 be a domain. Let us consider the system dx = F (t, x, y), dt (8.1) dy = G(t, x, y), dt where the functions are defined on I × Ω, and are locally Lipschitz w.r.t. variable (x, y) ∈ Ω. Definition 8.1 (Autonomous system) A system of ODE having the form (8.1) is called an autonomous system if the functions F (t, x, y) and G(t, x, y) are constant w.r.t. variable t. That is, dx = F (x, y), dt (8.2) dy = G(x, y), dt Definition 8.2 A point (x0, y0) ∈ Ω is said to be a critical point of the autonomous system (8.2) if F (x0, y0) = G(x0, y0) = 0. (8.3) A critical point is also called an equilibrium point, a rest point. Definition 8.3 Let (x(t), y(t)) be a solution of a two-dimensional (planar) autonomous system (8.2). The trace of (x(t), y(t)) as t varies is a curve in the plane. This curve is called trajectory. Remark 8.4 (On solutions of autonomous systems) (i) Two different solutions may represent the same trajectory. For, (1) If (x1(t), y1(t)) defined on an interval J is a solution of the autonomous system (8.2), then the pair of functions (x2(t), y2(t)) defined by (x2(t), y2(t)) := (x1(t − s), y1(t − s)), for t ∈ s + J (8.4) is a solution on interval s + J, for every arbitrary but fixed s ∈ R. -

Robot Dynamics and Control Lecture 8: Basic Lyapunov Stability Theory

MCE/EEC 647/747: Robot Dynamics and Control Lecture 8: Basic Lyapunov Stability Theory Reading: SHV Appendix Mechanical Engineering Hanz Richter, PhD MCE503 – p.1/17 Stability in the sense of Lyapunov A dynamic system x˙ = f(x) is Lyapunov stable or internally stable about an equilibrium point xeq if state trajectories are confined to a bounded region whenever the initial condition x0 is chosen sufficiently close to xeq. Mathematically, given R> 0 there always exists r > 0 so that if ||x0 − xeq|| <r, then ||x(t) − xeq|| <R for all t> 0. As seen in the figure R defines a desired confinement region, while r defines the neighborhood of xeq where x0 must belong so that x(t) does not exit the confinement region. R r xeq x0 x(t) MCE503 – p.2/17 Stability in the sense of Lyapunov... Note: Lyapunov stability does not require ||x(t)|| to converge to ||xeq||. The stronger definition of asymptotic stability requires that ||x(t)|| → ||xeq|| as t →∞. Input-Output stability (BIBO) does not imply Lyapunov stability. The system can be BIBO stable but have unbounded states that do not cause the output to be unbounded (for example take x1(t) →∞, with y = Cx = [01]x). The definition is difficult to use to test the stability of a given system. Instead, we use Lyapunov’s stability theorem, also called Lyapunov’s direct method. This theorem is only a sufficient condition, however. When the test fails, the results are inconclusive. It’s still the best tool available to evaluate and ensure the stability of nonlinear systems. -

Combinatorial and Analytical Problems for Fractals and Their Graph Approximations

UPPSALA DISSERTATIONS IN MATHEMATICS 112 Combinatorial and analytical problems for fractals and their graph approximations Konstantinos Tsougkas Department of Mathematics Uppsala University UPPSALA 2019 Dissertation presented at Uppsala University to be publicly examined in Polhemsalen, Ångströmlaboratoriet, Lägerhyddsvägen 1, Uppsala, Friday, 15 February 2019 at 13:15 for the degree of Doctor of Philosophy. The examination will be conducted in English. Faculty examiner: Professor Ben Hambly (Mathematical Institute, University of Oxford). Abstract Tsougkas, K. 2019. Combinatorial and analytical problems for fractals and their graph approximations. Uppsala Dissertations in Mathematics 112. 37 pp. Uppsala: Department of Mathematics. ISBN 978-91-506-2739-8. The recent field of analysis on fractals has been studied under a probabilistic and analytic point of view. In this present work, we will focus on the analytic part developed by Kigami. The fractals we will be studying are finitely ramified self-similar sets, with emphasis on the post-critically finite ones. A prototype of the theory is the Sierpinski gasket. We can approximate the finitely ramified self-similar sets via a sequence of approximating graphs which allows us to use notions from discrete mathematics such as the combinatorial and probabilistic graph Laplacian on finite graphs. Through that approach or via Dirichlet forms, we can define the Laplace operator on the continuous fractal object itself via either a weak definition or as a renormalized limit of the discrete graph Laplacians on the graphs. The aim of this present work is to study the graphs approximating the fractal and determine connections between the Laplace operator on the discrete graphs and the continuous object, the fractal itself. -

Stability Theory for Ordinary Differential Equations 1. Introduction and Preliminaries

JOURNAL OF THE CHUNGCHEONG MATHEMATICAL SOCIETY Volume 1, June 1988 Stability Theory for Ordinary Differential Equations Sung Kyu Choi, Keon-Hee Lee and Hi Jun Oh ABSTRACT. For a given autonomous system xf = /(a?), we obtain some properties on the location of positive limit sets and investigate some stability concepts which are based upon the existence of Liapunov functions. 1. Introduction and Preliminaries The classical theorem of Liapunov on stability of the origin 鉛 = 0 for a given autonomous system xf = makes use of an auxiliary function V(.x) which has to be positive definite. Also, the time deriva tive V'(⑦) of this function, as computed along the solution, has to be negative definite. It is our aim to investigate some stability concepts of solutions for a given autonomous system which are based upon the existence of suitable Liapunov functions. Let U C Rn be an open subset containing 0 G Rn. Consider the autonomous system x' = /(⑦), where xf is the time derivative of the function :r(t), defined by the continuous function f : U — Rn. When / is C1, to every point t()in R and Xq in 17, there corresponds the unique solution xq) of xf = f(x) such that ^(to) = ⑦⑴ For such a solution, let us denote I(xq) = (aq) the interval where it is defined (a, ⑵ may be infinity). Let f : (a, &) -나 R be a continuous function. Then f is decreasing on (a, b) if and only if the upper right-sided Dini derivative D+f(t) = lim sup f〔t + h(-f(t) < o 九 一>o+ h Received by the editors on March 25, 1988. -

Stability and Bifurcation of Dynamical Systems

STABILITY AND BIFURCATION OF DYNAMICAL SYSTEMS Scope: • To remind basic notions of Dynamical Systems and Stability Theory; • To introduce fundaments of Bifurcation Theory, and establish a link with Stability Theory; • To give an outline of the Center Manifold Method and Normal Form theory. 1 Outline: 1. General definitions 2. Fundaments of Stability Theory 3. Fundaments of Bifurcation Theory 4. Multiple bifurcations from a known path 5. The Center Manifold Method (CMM) 6. The Normal Form Theory (NFT) 2 1. GENERAL DEFINITIONS We give general definitions for a N-dimensional autonomous systems. ••• Equations of motion: x(t )= Fx ( ( t )), x ∈ »N where x are state-variables , { x} the state-space , and F the vector field . ••• Orbits: Let xS (t ) be the solution to equations which satisfies prescribed initial conditions: x S(t )= F ( x S ( t )) 0 xS (0) = x The set of all the values assumed by xS (t ) for t > 0is called an orbit of the dynamical system. Geometrically, an orbit is a curve in the phase-space, originating from x0. The set of all orbits is the phase-portrait or phase-flow . 3 ••• Classifications of orbits: Orbits are classified according to their time-behavior. Equilibrium (or fixed -) point : it is an orbit xS (t ) =: xE independent of time (represented by a point in the phase-space); Periodic orbit : it is an orbit xS (t ) =:xP (t ) such that xP(t+ T ) = x P () t , with T the period (it is a closed curve, called cycle ); Quasi-periodic orbit : it is an orbit xS (t ) =:xQ (t ) such that, given an arbitrary small ε > 0 , there exists a time τ for which xQ(t+τ ) − x Q () t ≤ ε holds for any t; (it is a curve that densely fills a ‘tubular’ space); Non-periodic orbit : orbit xS (t ) with no special properties. -

Arnold: Swimming Against the Tide / Boris Khesin, Serge Tabachnikov, Editors

ARNOLD: Real Analysis A Comprehensive Course in Analysis, Part 1 Barry Simon Boris A. Khesin Serge L. Tabachnikov Editors http://dx.doi.org/10.1090/mbk/086 ARNOLD: AMERICAN MATHEMATICAL SOCIETY Photograph courtesy of Svetlana Tretyakova Photograph courtesy of Svetlana Vladimir Igorevich Arnold June 12, 1937–June 3, 2010 ARNOLD: Boris A. Khesin Serge L. Tabachnikov Editors AMERICAN MATHEMATICAL SOCIETY Providence, Rhode Island Translation of Chapter 7 “About Vladimir Abramovich Rokhlin” and Chapter 21 “Several Thoughts About Arnold” provided by Valentina Altman. 2010 Mathematics Subject Classification. Primary 01A65; Secondary 01A70, 01A75. For additional information and updates on this book, visit www.ams.org/bookpages/mbk-86 Library of Congress Cataloging-in-Publication Data Arnold: swimming against the tide / Boris Khesin, Serge Tabachnikov, editors. pages cm. ISBN 978-1-4704-1699-7 (alk. paper) 1. Arnold, V. I. (Vladimir Igorevich), 1937–2010. 2. Mathematicians–Russia–Biography. 3. Mathematicians–Soviet Union–Biography. 4. Mathematical analysis. 5. Differential equations. I. Khesin, Boris A. II. Tabachnikov, Serge. QA8.6.A76 2014 510.92–dc23 2014021165 [B] Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy select pages for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given. Republication, systematic copying, or multiple reproduction of any material in this publication is permitted only under license from the American Mathematical Society. Permissions to reuse portions of AMS publication content are now being handled by Copyright Clearance Center’s RightsLink service. -

An Introduction to Dynamical Systems and Chaos G.C

An Introduction to Dynamical Systems and Chaos G.C. Layek An Introduction to Dynamical Systems and Chaos 123 G.C. Layek Department of Mathematics The University of Burdwan Burdwan, West Bengal India ISBN 978-81-322-2555-3 ISBN 978-81-322-2556-0 (eBook) DOI 10.1007/978-81-322-2556-0 Library of Congress Control Number: 2015955882 Springer New Delhi Heidelberg New York Dordrecht London © Springer India 2015 This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, express or implied, with respect to the material contained herein or for any errors or omissions that may have been made. Printed on acid-free paper Springer (India) Pvt. Ltd. is part of Springer Science+Business Media (www.springer.com) Dedicated to my father Late Bijoychand Layek for his great interest in my education. -

The Fractional View of Complexity

entropy Editorial The Fractional View of Complexity António M. Lopes 1,∗ and J.A. Tenreiro Machado 2 1 UISPA–LAETA/INEGI, Faculty of Engineering, University of Porto, R. Dr. Roberto Frias, 4200-465 Porto, Portugal 2 Institute of Engineering, Polytechnic of Porto, Deptartment of Electrical Engineering, R. Dr. António Bernardino de Almeida, 431, 4249-015 Porto, Portugal; [email protected] * Correspondence: [email protected]; Tel.: +351-220413486 Received: 27 November 2019; Accepted: 11 December 2019; Published: 13 December 2019 Keywords: fractals; fractional calculus; complex systems; dynamics; entropy; information theory Fractal analysis and fractional differential equations have been proven as useful tools for describing the dynamics of complex phenomena characterized by long memory and spatial heterogeneity. There is a general agreement about the relation between the two perspectives, but the formal mathematical arguments supporting their relation are still being developed. The fractional derivative of real order appears as the degree of structural heterogeneity between homogeneous and inhomogeneous domains. A purely real derivative order would imply a system with no characteristic scale, where a given property would hold regardless of the scale of the observations. However, in real-world systems, physical cut-offs may prevent the invariance spreading over all scales and, therefore, complex-order derivatives could yield more realistic models. Information theory addresses the quantification and communication of information. Entropy and complexity are concepts that often emerge in relation to systems composed of many elements that interact with each other, which appear intrinsically difficult to model. This Special Issue focuses on the synergies of fractals or fractional calculus and information theory tools, such as entropy, when modeling complex phenomena in engineering, physics, life, and social sciences. -

Linear Hydrodynamic Stability

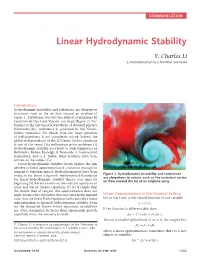

COMMUNICATION Linear Hydrodynamic Stability Y. Charles Li Communicated by Christina Sormani Introduction Hydrodynamic instability and turbulence are ubiquitous in nature, such as the air flow around an airplane of Figure 1. Turbulence was also the subject of paintings by Leonardo da Vinci and Vincent van Gogh (Figure 2). Tur- bulence is the last unsolved problem of classical physics. Mathematically, turbulence is governed by the Navier– Stokes equations, for which even the basic question of well-posedness is not completely solved. Indeed, the global well-posedness of the 3D Navier–Stokes equations is one of the seven Clay millennium prize problems [1]. Hydrodynamic stability goes back to such luminaries as Helmholtz, Kelvin, Rayleigh, O. Reynolds, A. Sommerfeld, Heisenberg, and G. I. Taylor. Many treatises have been written on the subject [5]. Linear hydrodynamic stability theory applies the sim- ple idea of linear approximation of a function through its tangent to function spaces. Hydrodynamicists have been Figure 1. Hydrodynamic instability and turbulence trying to lay down a rigorous mathematical foundation are ubiquitous in nature, such as the turbulent vortex for linear hydrodynamic stability theory ever since its air flow around the tip of an airplane wing. beginning [5]. Recent results on the solution operators of Euler and Navier–Stokes equations [2] [3] [4] imply that the simple idea of tangent line approximation does not apply because the derivative does not exist in the inviscid Linear Approximation in the Simplest Setting case, thus the linear Euler equations fail to provide a linear Let us start with a real-valued function of one variable approximation to inviscid hydrodynamic stability. -

Stability Analysis of Complex Dynamical Systems: Some Computational Methods Narotham Reddy Sarabudla Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 1981 Stability analysis of complex dynamical systems: some computational methods Narotham Reddy Sarabudla Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Electrical and Electronics Commons Recommended Citation Sarabudla, Narotham Reddy, "Stability analysis of complex dynamical systems: some computational methods " (1981). Retrospective Theses and Dissertations. 6944. https://lib.dr.iastate.edu/rtd/6944 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. INFORMATION TO USERS This was produced from a copy of a document sent to us for microfilming. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the material submitted. The following explanation of techniques is provided to help you understand markings or notations which may appear on this reproduction. 1. The sign or "target" for pages apparently lacking from the document photographed is "Missing Page(s)". If it was possible to obtain the missing page(s) or section, they are spliced into the film along with adjacent pages. This may have necessitated cutting through an image and duplicating adjacent pages to assure you of complete continuity. 2. When an image on the film is obliterated with a round black mark it is an indication that the film inspector noticed either blurred copy because of movement during exposure, or duplicate copy.