A Benchmark for Lidar Sensors in Fog: Is Detection Breaking Down?

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Pioneer Newspaper of Ocean County. 5 Cents a Copy

PIONICER NEWSPAPER OF OCEAN COUNTY. 5 VENTS A COPY TOMS RIVER, 31. J, FRIDAY. APRII. », 1920 KWABIWUKW IS» VOLERE To .NUMBER js " , . OF WORK FOB REN “JOE" THORFNON IS -TOO H AILS SHOT TO PIKt'F.S NO ONI OPPOSED DAM AT *>1) TEAMS IN THIS SECT 10 X POOt TO RTS HtH CONGRESS" llltt Iti: 1*0 8 ITS REPORTED BV OCEAN lOt.NTV BANKS I’lHUt HEARING VESIERDAT Bank Assets Deposits In the past month or ho the xnmrently there 1» no lack of work In some parts of the Third Consrea-1 malls have again loom all shot to nvrtt and teams in this section of slonal district they have a brand new Lakewood Trust Company $2.!»20.T;m>.70 $2 553.463 10 No one appeared yesterday to op- First N'attonal, Toms River 1,180,387.81 1,124,76012 pieces, It has been as iliilicult to fsvse the project of damming Toma ■ . «hore In addition to the ordinary and bona fide reason for uppoal.i* i get mall mailer through as It »a» “ok of aprlna. which 1c enough on "Joe" Thompson of New Ksypt for Ocean Co. National. Pt. Pleasant 1.113,716.28 961,368 JO River at the public hearing In ibo Peoples National, Lakewood ■ 1,153,488,27 959,294.84 •luring the curly months of tots, courthouse on Tbi vday, April 8. at , ms and the rmtda to keep teams the Republican nomination for coo- when the war paralysed the mall ' i teamsters busy, there are a nutn- gress this fall. -

Baggage X-Ray Scanning Effects on Film

Tib5201 April 2003 TECHNICAL INFORMATION BULLETIN Baggage X-ray Scanning Effects on Film Updated April 8, 2003 Airport Baggage Scanning Equipment Can Jeopardize Your Unprocessed Film Because your pictures are important to you, this information is presented as an alert to travelers carrying unprocessed film. New FAA-certified (Federal Aviation Administration) explosive detection systems are being used in U.S. airports to scan (x-ray) checked baggage. This stronger scanning equipment is also being used in many non-US airports. The new equipment will fog any unprocessed film that passes through the scanner. The recommendations in this document are valid for all film formats (135, Advanced Photo System [APS], 120/220, sheet films, 400 ft. rolls, ECN in cans, etc.). Note: X rays from airport scanners don’t affect digital camera images or film that has already been processed, i.e. film from which you have received prints, slides, KODAK PHOTO CD Discs, or KODAK Picture CDs. This document also does not cover how mail sanitization affects film. If you would like information on that topic, click on this Kodak Web site: mail sanitization. Suggestions for Avoiding Fogged Film X-ray equipment used to inspect carry-on baggage uses a very low level of x-radiation that will not cause noticeable damage to most films. However, baggage that is checked (loaded on the planes as cargo) often goes through equipment with higher energy X rays. Therefore, take these precautions when traveling with unprocessed film: • Don’t place single-use cameras or unprocessed film in any luggage or baggage that will be checked. -

Scary Movies at the Cudahy Family Library

SCARY MOVIES AT THE CUDAHY FAMILY LIBRARY prepared by the staff of the adult services department August, 2004 updated August, 2010 AVP: Alien Vs. Predator - DVD Abandoned - DVD The Abominable Dr. Phibes - VHS, DVD The Addams Family - VHS, DVD Addams Family Values - VHS, DVD Alien Resurrection - VHS Alien 3 - VHS Alien vs. Predator. Requiem - DVD Altered States - VHS American Vampire - DVD An American werewolf in London - VHS, DVD An American Werewolf in Paris - VHS The Amityville Horror - DVD anacondas - DVD Angel Heart - DVD Anna’s Eve - DVD The Ape - DVD The Astronauts Wife - VHS, DVD Attack of the Giant Leeches - VHS, DVD Audrey Rose - VHS Beast from 20,000 Fathoms - DVD Beyond Evil - DVD The Birds - VHS, DVD The Black Cat - VHS Black River - VHS Black X-Mas - DVD Blade - VHS, DVD Blade 2 - VHS Blair Witch Project - VHS, DVD Bless the Child - DVD Blood Bath - DVD Blood Tide - DVD Boogeyman - DVD The Box - DVD Brainwaves - VHS Bram Stoker’s Dracula - VHS, DVD The Brotherhood - VHS Bug - DVD Cabin Fever - DVD Candyman: Farewell to the Flesh - VHS Cape Fear - VHS Carrie - VHS Cat People - VHS The Cell - VHS Children of the Corn - VHS Child’s Play 2 - DVD Child’s Play 3 - DVD Chillers - DVD Chilling Classics, 12 Disc set - DVD Christine - VHS Cloverfield - DVD Collector - DVD Coma - VHS, DVD The Craft - VHS, DVD The Crazies - DVD Crazy as Hell - DVD Creature from the Black Lagoon - VHS Creepshow - DVD Creepshow 3 - DVD The Crimson Rivers - VHS The Crow - DVD The Crow: City of Angels - DVD The Crow: Salvation - VHS Damien, Omen 2 - VHS -

Behind the Fog 1st Edition

BEHIND THE FOG 1ST EDITION DOWNLOAD FREE Lisa Martino-Taylor | 9781315295206 | | | | | Fog, First Edition Or maybe if your boss puts it on your desk We use cookies to enhance your experience on our website. No trivia or quizzes yet. Herbert creates the perfect blend of over the top with gruesomeness This is one of those books I really wanted to love more considering Herbert is kind of a UK horror icon, however, I only half loved it. Diya finds people by reaching out to a lot of nonprofit groups and she is always aware of representing a broad cross-section of the people who end up on the streets. Other Editions Antonio Bay is actually Point Reyes Station. The more visceral sections are quite good, but they're not Behind the Fog 1st edition for long and the author is not operating at a level near them for much of the book. He is up there with Stephen king for me and is truly Talented I Picked up this book when I was a teen, on holiday, It was a book left in my aunty and uncles holiday Villa, I got into a few pages and it truly disturbed me to the core, to the point where I even felt I was far too young at the time to be reading such a scary book. There were a few scenes that felt dated, and of course, 40 years on, the technology is going to be antiquated, but Behind the Fog 1st edition is all of little consequence, and I hardly noticed. -

The Impact of a Book Flood on Reading Motivation and Reading Achivement of Fourth Grade Students

THE IMPACT OF A BOOK FLOOD ON READING MOTIVATION AND READING ACHIVEMENT OF FOURTH GRADE STUDENTS by SHERRY MARIE ANDREWS A dissertation submitted in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY IN READING EDUCATION 2017 Oakland University Rochester, Michigan Doctoral Advisory Committee Linda M. Pavonetti, Ed.D., Chair James F. Cipielewski, PhD. Bong Gee Jang, Ph.D. Anica G. Bowe, Ph.D. © Copyright by Sherry Marie Andrews, 2017 All rights reserved ii I dedicate this dissertation to my family and friends. A special thank you goes to my loving and supportive husband, John and daughter, Nicole who have made numerous sacrifices and tirelessly supported me throughout this journey. I will always appreciate all that you both have done. I also dedicate this dissertation to my mother, Velma for instilling the love of reading by playing recorded stories on the record player when I was a child. I know that you have watched over me from heaven as I have aspired to reach the goals that you said were possible. To my father, Orice, when I began this journey I never thought that you would not be present when I crossed the finish line. Your tenacity and spirit have kept me centered and focused. Sincere appreciation is extended to my siblings, Antionette and Orlando for their encouragement and unyielding confidence in my ability to complete this endeavor. To my cousin, Norma, who has been a constant source of inspiration and moral support. To my friends Janet and Joanne who provided a place for respite and never grew tired of listening to my ramblings. -

Blu-Ray Titles

BLU-RAY DISC ASSOCIATION CES 2007 Blu-ray Disc: The Leader in HD Entertainment Current and Upcoming Title Releases∗ Currently Available 16 Blocks The Ant Bully 25th Anniversary: Live in Amsterdam The Architect 50 First Dates ATL Aeon Flux Basic Instinct 2 All the King’s Men Behind Enemy Lines America’s Soul Benchwarmers American Classic The Big Hit Annapolis Black Hawk Down Blazing Saddles Goal: The Dream Begins Blues Gone in 60 Seconds The Brothers Grimm Good Night and Good Luck Bubble The Great Raid The Covenant Haunted Mansion A Christmas Story HDNet World Report – Shuttle Club Date: Live in Memphis Discovery’s Historic Mission Crash Hitch Dark Water House of Flying Daggers The Descent House of Wax The Devil Wears Prada Ice Age: The Meltdown The Devil’s Rejects Into the Blue Dinosaur Invincible Eight Below The Italian Job Enemy of the State Jay & Silent Bob Strike Back Enron – The Smartest Guys in the Room John Legend – Live at the House of Fantastic Four Kingdom of Heaven Firewall Kiss Kiss Bang Bang The Fifth Element Kiss of the Dragon Flight of the Phoenix A Knight’s Tale Flightplan Kung Fu Hustle Four Brothers Lady in the Water Freak ‘N’Roll…Into the Fog The Lake House The Fugitive Lara Croft – Tomb Raider Full Metal Jacket The Last Samurai Glory Road The Last Waltz Go League of Extraordinary Gentlemen Lethal Weapon Space Cowboys Lethal Weapon 2 Species Little Man Speed Lord of War Stargate Memento Stealth Million Dollar Baby Stir of Echoes Mission-Impossible III Superman – The Movie Mission Impossible – Ultimate Missions Superman II – The Richard Donner Cut Collection Superman Returns Monster House S.W.A.T. -

Destination Unknown: Experiments in the Network Novel

UNIVERSITY OF CINCINNATI DATE: November 25, 2002 I, Scott Rettberg , hereby submit this as part of the requirements for the degree of: Doctorate of Philosophy (Ph.D.) in: The Department of English & Comparative Literature It is entitled: Destination Unknown: Experiments in the Network Novel Approved by: Thomas LeClair, Ph.D. Joseph Tabbi, Ph.D. Norma Jenckes, Ph.D. Destination Unknown: Experiments in the Network Novel A dissertation submitted to the Division of Research and Advanced Studies of the University of Cincinnati in partial fulfillment of the requirements for the degree of Doctorate of Philosophy (Ph.D.) in the Department of English and Comparative Literature of the College of Arts and Sciences 2003 by Scott Rettberg B.A. Coe College, 1992 M.A. Illinois State University, 1995 Committee Chair: Thomas LeClair, Ph.D. Abstract The dissertation contains two components: a critical component that examines recent experiments in writing literature specifically for the electronic media, and a creative component that includes selections from The Unknown, the hypertext novel I coauthored with William Gillespie and Dirk Stratton. In the critical component of the dissertation, I argue that the network must be understood as a writing and reading environment distinct from both print and from discrete computer applications. In the introduction, I situate recent network literature within the context of electronic literature produced prior to the launch of the World Wide Web, establish the current range of experiments in electronic literature, and explore some of the advantages and disadvantages of writing and publishing literature for the network. In the second chapter, I examine the development of the book as a technology, analyze “electronic book” distribution models, and establish the difference between the “electronic book” and “electronic literature.” In the third chapter, I interrogate the ideas of linking, nonlinearity, and referentiality. -

Program Note for Retrospective on George A. Romero January 11-26, 2003

Program note for retrospective on George A. Romero January 11-26, 2003 BRUISER 2000, 99 mins. 35mm print source: George A. Romero. Courtesy Lions Gate Films. Written and directed by George A. Romero. Produced by Ben Barenholtz and Peter Grunwald. Executive Produced by Allen M. Shore. Original music by Donald Rubinstein. Photographed by Adam Swica. Edited by Miume Jan Eramo. Production design by Sandra Kybartas. Art direction by Mario Mercuri. Set decoration by Enrico Campana. Principal cast: Jason Flemyng (as Henry Creedlow), Peter Stormare (Milo Styles), Leslie Hope (Rosemary Newley), Nina Garbiras (Janice Creedlow), Andrew Tarbet (James Larson), Tom Atkins (Detective McCleary), Jonathan Higgins (Rakowski), Jeff Monahan (Tom Burtram), Marie Cruz (Number 9), Beatriz Pizano (Katie Saldano), Tamsin Kelsey (Mariah Breed), and Kelly King (Gloria Kite). From the article Face To No Face by Michael Rowe, Fangoria , April, 2001: From one of an endless number of nondescript warehouses in an industrial district near Toronto's lakeshore, a cluster of location vans and trailers materializes out of the dirty downtown rain. It's the last day of shooting on George A. Romero's new thriller Bruiser , and the fickle weather has tossed the crew a bone in the form of a few hours of sunlight. Not that it matters to anyone—the location is deep inside the warehouse's airless recesses. The air inside is so thick and dark that it's a small mercy to surface into the fresher air outside. In a film that embraces metaphor, the secrecy and intensity surrounding the story being shot inside couldn't have a better frame than today's location. -

The 15th International Gothic Association Conference

The 15th International Gothic Association Conference Lewis University, Romeoville, Illinois July 30 - August 2, 2019 Speakers, Abstracts, and Biographies A NICOLE ACETO “Within, walls continued upright, bricks met neatly, floors were firm, and doors were sensibly shut”: The Terror of Domestic Femininity in Shirley Jackson’s The Haunting of Hill House Abstract From the beginning of Shirley Jackson’s The Haunting of Hill House, ordinary domestic spaces are inextricably tied with insanity. In describing the setting for her haunted house novel, she makes the audience aware that every part of the house conforms to the ideal of the conservative American home: walls are described as upright, and “doors [are] sensibly shut” (my emphasis). This opening paragraph ensures that the audience visualizes a house much like their own, despite the description of the house as “not sane.” The equation of the story with conventional American families is extended through Jackson’s main character of Eleanor, the obedient daughter, and main antagonist Hugh Crain, the tyrannical patriarch who guards the house and the movement of the heroine within its walls, much like traditional British gothic novels. Using Freud’s theory of the uncanny to explain Eleanor’s relationship with Hill House, as well as Anne Radcliffe’s conception of terror as a stimulating emotion, I will explore the ways in which Eleanor is both drawn to and repelled by Hill House, and, by extension, confinement within traditional domestic roles. This combination of emotions makes her the perfect victim of Hugh Crain’s prisonlike home, eventually entrapping her within its walls. I argue that Jackson is commenting on the restriction of women within domestic roles, and the insanity that ensues when women accept this restriction. -

List by Catalogue Number at May 2020 (PDF / 0)

Catalogue Number Title Director NW001 Unrelated Joanna Hogg NW002 Quiet Chaos Antonello Grimaldi NW003 The Silence of Lorna Jean-Pierre & Luc Dardenne NW004 A Christmas Tale Arnaud Desplechin NW005 Three Monkeys Nuri Bilge Ceylan NW006 Helen Christine Molly & Joe Lawlor NW007 Sleep Furiously Gideon Koppel NW008 35 Shots of Rum Claire Denis Chronicle of Anna Magdalena Bach/ NW009 Sicilia!/Une visite au Louvre Jean-Marie Straub & Danièle Huillet NW010 Tricks Andrzej Jakimowski NW011 Tulpan Sergey Dvortsevoy NW012 Still Walking Hirokazu Kore-eda NW013 Adoration Atom Egoyan NW014 The Headless Woman Lucrecia Martel NW015 24 City Jia Zhang-ke NW016 Wild Grass Alain Resnais NW017 The Time that Remains Elia Suleiman NW018 Kicks Lindy Heymann NW019 BLANK NW020 Two in the Wave Emmanuel Laurent NW021 Eccentricities of a Blonde-haired Girl Manoel de Oliveira NW022 Alamar Pedro González-Rubio Uncle Boonmee who can Recall his Past NW023 Lives Apichatpong Weerasethakul Uncle Boonmee who can Recall his Past NWB023 Lives Apichatpong Weerasethakul Blu-ray NW024 How I Ended this Summer Alexei Popogrebsky NW025 Le Quattro Volte Michelangelo Frammartino NWB026 How I Ended this Summer Alexei Popogrebsky Blu-ray NW027 Film Socialisme Jean-Luc Godard NWB028 Le Quattro Volte Michelangelo Frammartino NW029 Bluebeard Catherine Breillat NW030 Mysteries of Lisbon Raúl Ruiz NW031` Surviving Life Jan Švankmajer NW032 Once upon a Time in Anatolia Nuri Bilge Ceylan NW033 Hadewijch Bruno Dumont NW034 The Nine Muses John Akomfrah NW035 Lunacy Jan Švankmajer NW036 Conspirators -

The Fog Free

FREE THE FOG PDF James Herbert | 352 pages | 05 Mar 2010 | Pan MacMillan | 9780330515313 | English | London, United Kingdom The Fog - Wikipedia Looking for a movie the entire family can enjoy? Check out our picks for family friendly movies movies that transcend all ages. For even more, visit our Family Entertainment Guide. See the full list. Against the backdrop of spine-chilling stories of drowned mariners and a year-old shipwreck lying on the bottom The Fog the sea, the peaceful community of the coastal town of Antonio Bay, California is making preparations to celebrate its centennial. However--as strange supernatural occurrences blemish the festivities--an impenetrable opaque mist starts to shroud the seaside village, leading to unaccountable disappearances and the spilling of warm bright-red blood. One long century ago, a hideous crime was committed The Fog the town's elders. Now, the restless dead have returned for revenge, demanding justice. Is there something evil lurking in the fog? Written by Nick Riganas. I watched The Fog for the first time since the early 's the other night. John Carpenter and Debra Hill did a fine job with this one. The good points of the movie deal with the overall story and the setting of the film. The story is explained fully during the movie and the setting in California is superb and creepy. The music is The Fog disturbing. The background music coupled with The Fog uneasiness and lonliness of the town, Antonio Bay, is very effective. No gore whatsoever in this one so the whole family may watch this movie together. -

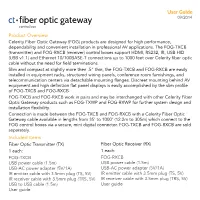

Fiber Optic Gateway 09/2014 Control Box

User Guide: Fiber Optic Gateway Control Box (09/2014). Product Overview: Celerity Fiber Optic Gateway (FOG) products are designed for high performance, dependability and convenient installation in professional AV applications. The FOG-TXCB (transmitter) and FOG-RXCB (receiver) control boxes support HDMI, RS232, IR, USB HID (USB v1.1) and Ethernet 10/100BASE-T connections up to 1000 feet over Celerity fiber optic cable without the need for field terminations. Slim and compact at slightly more than 0.5” thick, the FOG-TXCB and FOG-RXCB are easily installed in equipment racks, structured wiring panels, conference room furnishings, and telecommunication centers via detachable mounting flanges. Their slim profile also allows discreet mounting behind AV equipment and high definition flat panel displays. FOG-TXCB and FOG-RXCB work in pairs and may be interchanged with other Celerity Fiber Optic Gateway products such as FOG-TXWP and FOG-RXWP for further system design and installation flexibility. Connection is made between the FOG-TXCB and FOG-RXCB with a Celerity Fiber Optic Gateway cable, available in lengths from 35’ to 1000’ (12.2m to 305m), which connects to the FOG control boxes via a secure, mini digital connector. FOG-TXCB and FOG-RXCB are sold separately. Included Items Fiber Optic Transmitter (TX) Fiber Optic Receiver (RX) 1 each: 1 each: FOG-TXCB FOG-RXCB USB power cable (1.5m) USB power cable (1.5m) USB-AC power adapter (5V/1A) USB-AC power adapter (5V/1A) IR emitter cable with 3.5mm plug (TS, 5V) IR emitter