Protocol: How Control Exists After Decentralization, Alexander R

Total pages: 16

File type: PDF, size: 1020Kb

Recommended publications

A System of Formal Notation for Scoring Works of Digital and Variable Media Art Richard Rinehart University of California, Berke

A System of Formal Notation for Scoring Works of Digital and Variable Media Art. Richard Rinehart, University of California, Berkeley.

Abstract

This paper proposes a new approach to conceptualizing digital and media art forms. This theoretical approach will be explored through issues raised in the process of creating a formal declarative model for digital and media art. This methodology of implementing theory as a way of exploring and testing it is intended to mirror the practices of both art making and computer processing. In these practices, higher-level meanings are manifested at lower levels of argument, symbolization, or concretization so that they can be manipulated and engaged, resulting in a new understanding of a theory or the formulation of new higher-level meaning. This practice is inherently interactive and cyclical. Similarly, this paper is informed by previous work and ideas in this area, and is intended to inform and serve as a vehicle for ongoing exchange around media art. The approach presented and explored here is intended to inform a better understanding of media art forms and to provide the lower level 'hooks' that support the creation, use and preservation of media art. In order to accomplish both of those goals, media art works will not be treated here as isolated and idealized entities, but rather as entities in the complex environment of the real world where they encounter various agents, life-cycle events, and practical concerns. This paper defines the Media Art Notation System and provides implementation examples in the appendices.

1 Digital / Media Art and the Need for a Formal Notation System

Digital and media art forms include Internet art, software art, computer-mediated installations, as well as other non-traditional art forms such as conceptual art, installation art, performance art, and video.

HTTP Cookie - Wikipedia, the Free Encyclopedia 14/05/2014

HTTP cookie. From Wikipedia, the free encyclopedia, 14/05/2014.

A cookie, also known as an HTTP cookie, web cookie, or browser cookie, is a small piece of data sent from a website and stored in a user's web browser while the user is browsing that website. Every time the user loads the website, the browser sends the cookie back to the server to notify the website of the user's previous activity.[1] Cookies were designed to be a reliable mechanism for websites to remember stateful information (such as items in a shopping cart) or to record the user's browsing activity (including clicking particular buttons, logging in, or recording which pages were visited by the user as far back as months or years ago).

Although cookies cannot carry viruses, and cannot install malware on the host computer,[2] tracking cookies and especially third-party tracking cookies are commonly used as ways to compile long-term records of individuals' browsing histories, a potential privacy concern that prompted European[3] and U.S.

Markets Not Capitalism Explores the Gap Between Radically Freed Markets and the Capitalist-Controlled Markets That Prevail Today

Individualist anarchism against bosses, inequality, corporate power, and structural poverty. Edited by Gary Chartier & Charles W. Johnson.

Individualist anarchists believe in mutual exchange, not economic privilege. They believe in freed markets, not capitalism. They defend a distinctive response to the challenges of ending global capitalism and achieving social justice: eliminate the political privileges that prop up capitalists. Massive concentrations of wealth, rigid economic hierarchies, and unsustainable modes of production are not the results of the market form, but of markets deformed and rigged by a network of state-secured controls and privileges to the business class. Markets Not Capitalism explores the gap between radically freed markets and the capitalist-controlled markets that prevail today. It explains how liberating market exchange from state capitalist privilege can abolish structural poverty, help working people take control over the conditions of their labor, and redistribute wealth and social power. Featuring discussions of socialism, capitalism, markets, ownership, labor struggle, grassroots privatization, intellectual property, health care, racism, sexism, and environmental issues, this unique collection brings together classic essays by Cleyre, and such contemporary innovators as Kevin Carson and Roderick Long. It introduces an eye-opening approach to radical social thought, rooted equally in libertarian socialism and market anarchism.

“We on the left need a good shake to get us thinking, and these arguments for market anarchism do the job in lively and thoughtful fashion.” – Alexander Cockburn, editor and publisher, Counterpunch

“Anarchy is not chaos; nor is it violence. This rich and provocative gathering of essays by anarchists past and present imagines society unburdened by state, markets un-warped by capitalism.

Web Browser a C-Class Article from Wikipedia, the Free Encyclopedia

Web browser. A C-class article from Wikipedia, the free encyclopedia.

A web browser or Internet browser is a software application for retrieving, presenting, and traversing information resources on the World Wide Web. An information resource is identified by a Uniform Resource Identifier (URI) and may be a web page, image, video, or other piece of content.[1] Hyperlinks present in resources enable users to easily navigate their browsers to related resources. Although browsers are primarily intended to access the World Wide Web, they can also be used to access information provided by Web servers in private networks or files in file systems. Some browsers can also be used to save information resources to file systems.

Contents: 1 History; 2 Function; 3 Features (3.1 User interface, 3.2 Privacy and security, 3.3 Standards support); 4 See also; 5 References; 6 External links

History

Main article: History of the web browser

The history of the Web browser dates back to the late 1980s, when a variety of technologies laid the foundation for the first Web browser, WorldWideWeb, by Tim Berners-Lee in 1991. That browser brought together a variety of existing and new software and hardware technologies. Ted Nelson and Douglas Engelbart developed the concept of hypertext long before Berners-Lee and CERN. It became the core of the World Wide Web. Berners-Lee does acknowledge Engelbart's contribution. The introduction of the NCSA Mosaic Web browser in 1993, one of the first graphical Web browsers, led to an explosion in Web use. Marc Andreessen, the leader of the Mosaic team at NCSA, soon started his own company, named Netscape, and released the Mosaic-influenced Netscape Navigator in 1994, which quickly became the world's most popular browser, accounting for 90% of all Web use at its peak (see usage share of web browsers).

OpenGIS® Web Map Server Cookbook

Open GIS Consortium Inc. OpenGIS® Web Map Server Cookbook. August 18, 2003. Editor: Kris Kolodziej. OGC Document Number: 03-050r1. Version: 1.0.1. Stage: Draft. Language: English.

Copyright Notice

Copyright 2003: M.I.T.; ESRI; Bonn University; lat/lon; DM Solutions Group, Inc; CSC Ploenzke AG; Wupperverband; WirelessInfo; Intergraph; Harvard University; International Interfaces; York University; NASA/Ocean ESIP, JPL. (See full text of copyright notice in Appendix 2.)

The companies and organizations listed above have granted the Open GIS Consortium, Inc. (OGC) a nonexclusive, royalty-free, paid up, worldwide license to copy and distribute this document and to modify this document and distribute copies of the modified version. This document does not represent a commitment to implement any portion of this specification in any company’s products. OGC’s Legal, IPR and Copyright Statements are found at http://www.opengis.org/legal/ipr.htm .

Permission to use, copy, and distribute this document in any medium for any purpose and without fee or royalty is hereby granted, provided that you include the above list of copyright holders and the entire text of this NOTICE. We request that authorship attribution be provided in any software, documents, or other items or products that you create pursuant to the implementation of the contents of this document, or any portion thereof. No right to create modifications or derivatives of OGC documents is granted pursuant to this license.

Text of Interview with Jon Ippolito

Smithsonian Institution Time-Based and Digital Art Working Group: Interview Project. Interview with Jon Ippolito.

Jon Ippolito is the co-founder of the Still Water Lab and Digital Curation program at the University of Maine and an Associate Professor of New Media at the University of Maine. He was formerly Associate Curator of Media Arts at the Guggenheim Museum in New York City.

June 25, 2013. Interviewers: Crystal Sanchez and Claire Eckert

I was a curator at the Guggenheim in the 1990s and 2000s, starting in 1991. At the same time I was an artist. As an artist, I had become interested in the internet, largely because of its egalitarian structure, compared to the mainstream art world. I was trying to conceive of works that would avoid technical obsolescence by being variable from the get-go. At the same time that my collaborators and I were imagining ways that a work could skip from one medium to another, like a stone skips across the surface of a lake, I started to see warning flags about all the works, especially media works, that were about to expire. Films would be exploding in canisters. Video formats from the 1980s were becoming obsolete. Conceptual artists from the 1970s were dying. Newer formats like internet protocols from only a few months before were falling apart. It was like the statute of limitations ran out on everything at the same time, around the late 1990s or 2000. In thinking through some of the problems associated with the death of prominent websites, I began to ask whether we could apply the pro-active solution of variable media retroactively to the problem of rescuing these near-dead art works.

Computer Networks: WWW (Redes de Ordenadores WWW)

Informática Técnica de Gestión. Computer Networks: WWW. Grupo de Sistemas y Comunicaciones (GSYC). Computer Networks, 1998-1999.

8. WWW

The World Wide Web is a hypermedia information system that revolutionized the Internet in the 1990s. It is based on an information transfer protocol (HTTP), which client programs (browsers) use to retrieve data in the form of pages in a standardized format (HTML) from servers scattered across the Internet. Through a friendly interface, the user can select other pages, identified by URLs, that appear highlighted in the pages as hyperlinks. Publishing on the Web is a fast (because updates take effect immediately for those who access the information) and inexpensive way of making information available to anyone (anywhere in the world) who takes the initiative (equality of opportunity) to request it. The initial model of browsing from page to page in search of information has been enriched by the proliferation of search tools (spiders, crawlers, robots) and by access to pages generated dynamically in response to interactive queries (CGI). The initial display capabilities have been extended by the evolution of HTML and the appearance of other languages for representing three-dimensional objects (VRML), running applications locally (Java), and so on, increasing interactivity. The richness of the environment has created demand for improved security features (SSL), which make it possible to extend the information-access model to areas that may change the world as much as electronic commerce (SET).

The Anarchist Aspects of Nietzsche's Philosophy- Presentation

The Anarchist Aspects of Nietzsche’s Philosophy: Presentation

The core of my hypothesis is that Friedrich Nietzsche’s philosophy promotes basic anarchist notions. Hence, what I intend to show is the existence of a bond between the anarchist tradition and the German philosopher. My main idea is to review Nietzsche’s work from an anarchist angle. This means I will try to re-examine basic concepts of the Nietzschean philosophy by comparing them to the notions of prominent anarchists and libertarians, and by using these concepts to give a Nietzschean interpretation to certain anarchistic historical incidents like those of the Schism of the 1st International, the Spanish Revolution, May ’68, and even the Revolt of December 2008 in Athens, Greece. The importance of such a connection lies in highlighting the elective affinity between Nietzsche and the anarchists and, actually, the whole research is based on this elective relationship. When I say elective affinity I mean “a special kind of dialectical relationship that develops between two social or cultural configurations, one that cannot be reduced to direct causality or to ‘influences’ in the traditional sense”.1 This is Michael Lowy’s definition of elective affinity in his essay on Jewish libertarian thought,2 and that’s the way I am going to follow it. Elective affinity is not something new in Nietzschean scholarship. Almost every dissertation on Nietzsche makes references to the direct or indirect influence the German philosopher had on his contemporaries and successors and therefore, elective affinity is present in most relevant works, short or extended.

The Variable Media Approach L'approche Des Médias Variables

Permanence Through Change: The Variable Media Approach (L’approche des médias variables : la permanence par le changement). Edited by Alain Depocas, Jon Ippolito, and Caitlin Jones.

© 2003 The Solomon R. Guggenheim Foundation, New York, and The Daniel Langlois Foundation for Art, Science, and Technology, Montreal. All rights reserved. All works used by permission.

Bibliothèque nationale du Québec. Bibliothèque nationale du Canada. ISBN: 0-9684693-2-9

Published by Guggenheim Museum Publications, 1071 Fifth Avenue, New York, New York 10128, and The Daniel Langlois Foundation for Art, Science, and Technology, 3530 Boulevard Saint-Laurent, suite 402, Montreal, Quebec H2X 2V1.

Designer: Marcia Fardella. English editors: Carey Ann Schaefer, Edward Weisberger. French coordinator: Audrey Navarre. French editors: Yves Doucet, Jacques Perron.

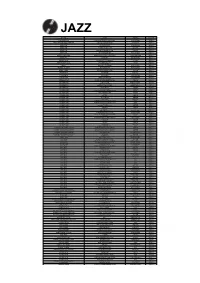

Order Form Full

JAZZ

| ARTIST | TITLE | LABEL | RETAIL |
| --- | --- | --- | --- |
| ADDERLEY, CANNONBALL | SOMETHIN' ELSE | BLUE NOTE | RM112.00 |
| ARMSTRONG, LOUIS | LOUIS ARMSTRONG PLAYS W.C. HANDY | PURE PLEASURE | RM188.00 |
| ARMSTRONG, LOUIS & DUKE ELLINGTON | THE GREAT REUNION (180 GR) | PARLOPHONE | RM124.00 |
| AYLER, ALBERT | LIVE IN FRANCE JULY 25, 1970 | B13 | RM136.00 |
| BAKER, CHET | DAYBREAK (180 GR) | STEEPLECHASE | RM139.00 |
| BAKER, CHET | IT COULD HAPPEN TO YOU | RIVERSIDE | RM119.00 |
| BAKER, CHET | SINGS & STRINGS | VINYL PASSION | RM146.00 |
| BAKER, CHET | THE LYRICAL TRUMPET OF CHET | JAZZ WAX | RM134.00 |
| BAKER, CHET | WITH STRINGS (180 GR) | MUSIC ON VINYL | RM155.00 |
| BERRY, OVERTON | T.O.B.E. + LIVE AT THE DOUBLET | LIGHT 1/T ATTIC | RM124.00 |
| BIG BAD VOODOO DADDY | BIG BAD VOODOO DADDY (PURPLE VINYL) | LONESTAR RECORDS | RM115.00 |
| BLAKEY, ART | 3 BLIND MICE | UNITED ARTISTS | RM95.00 |
| BROETZMANN, PETER | FULL BLAST | JAZZWERKSTATT | RM95.00 |
| BRUBECK, DAVE | THE ESSENTIAL DAVE BRUBECK | COLUMBIA | RM146.00 |
| BRUBECK, DAVE - OCTET | DAVE BRUBECK OCTET | FANTASY | RM119.00 |
| BRUBECK, DAVE - QUARTET | BRUBECK TIME | DOXY | RM125.00 |
| BRUUT! | MAD PACK (180 GR WHITE) | MUSIC ON VINYL | RM149.00 |
| BUCKSHOT LEFONQUE | MUSIC EVOLUTION | MUSIC ON VINYL | RM147.00 |
| BURRELL, KENNY | MIDNIGHT BLUE (MONO) (200 GR) | CLASSIC RECORDS | RM147.00 |
| BURRELL, KENNY | WEAVER OF DREAMS (180 GR) | WAX TIME | RM138.00 |
| BYRD, DONALD | BLACK BYRD | BLUE NOTE | RM112.00 |
| CHERRY, DON | MU (FIRST PART) (180 GR) | BYG ACTUEL | RM95.00 |
| CLAYTON, BUCK | HOW HI THE FI | PURE PLEASURE | RM188.00 |
| COLE, NAT KING | PENTHOUSE SERENADE | PURE PLEASURE | RM157.00 |
| COLEMAN, ORNETTE | AT THE TOWN HALL, DECEMBER 1962 | WAX LOVE | RM107.00 |
| COLTRANE, ALICE | JOURNEY IN SATCHIDANANDA (180 GR) | IMPULSE | |

Leaving the Left Behind 115 Post-Left Anarchy?

Anarchy after Leftism: Preface; Introduction; Chapter 1: Murray Bookchin, Grumpy Old Man; Chapter 2: What is Individualist Anarchism?; Chapter 3: Lifestyle Anarchism; Chapter 4: On Organization; Chapter 5: Murray Bookchin, Municipal Statist; Chapter 6: Reason and Revolution; Chapter 7: In Search of the Primitivists Part I: Pristine Angles; Chapter 8: In Search of the Primitivists Part II: Primitive Affluence; Chapter 9: From Primitive Affluence to Labor-Enslaving Technology; Chapter 10: Shut Up, Marxist!; Chapter 11: Anarchy after Leftism; References

Post-Left Anarchy: Leaving the Left Behind: Prologue to Post-Left Anarchy; Introduction; Leftists in the Anarchist Milieu; Recuperation and the Left-Wing of Capital; Anarchy as a Theory & Critique of Organization; Anarchy as a Theory & Critique of Ideology; Neither God, nor Master, nor Moral Order: Anarchy as Critique of Morality and Moralism; Post-Left Anarchy: Neither Left, nor Right, but Autonomous

Post-Left Anarchy?

Leftism 101: What is Leftism?; Moderate, Radical, and Extreme Leftism; Tactics and strategies; Relationship to capitalists; The role of the State; The role of the individual; A Generic Leftism?; Are All Forms of Anarchism Leftism

Anarchists, Don’t let the Left(overs) Ruin your Appetite: Introduction; Anarchists and the International Labor Movement, Part I; Interlude: Anarchists in the Mexican and Russian Revolutions; Anarchists in the International Labor Movement, Part II; Spain; The Left; The ’60s and ’70s

Recombinação (Recombination)

28/08/2002

Dear Readers,

Rizoma's concept machine is now running. We started it up with the demo format in the first half of this year, but only now, after calibrating and retreading the site's programming, are we beginning to accelerate.

Full of fuel and incendiary energy, we are back in action, fully ready to advance toward the future.

Is this your first time on the site? Did the format seem strange? Don't worry, Rizoma really is different, different even for those who already knew the earlier versions. We went through a long period of mutation and gestation to arrive at this version, which, like everything on this site, is in permanent transformation. That is our vision of "work in progress". But let us clarify things a little.

New perceptions for a new time? Maybe. Maybe, even more, new views on old things, whatever they may be. We will not hide here a certain yearning, somewhat utopian even, to change things, to change the rules of the game. Impossible? Who knows... As the Situationists used to say: "Future revolutions must themselves invent their own languages."

Indeed, and since we are talking about games, that is how we propose you navigate the site. See things as play, small points for you to connect as you read the texts, because the connections are there to be made. We throw out the dice and the nodal points, but you are the one who sets the conceptual machine working and links everything together. Go ahead! Start up your brain, step on the mouse's accelerator, and have fun!

Ricardo Rosas and Marcus Salgado, editors of Rizoma.