Causal Architecture, Complexity and Self-Organization in Time Series and Cellular Automata

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Livre-Ovni.Pdf

A BIZARRE WORLD
The Book of Strange Unidentified Flying Objects
Chapter 1: Paranormal

"Paranormal" is a term used to describe a set of phenomena whose causes or mechanisms apparently cannot be explained by established scientific laws. The prefix "para" denotes something that lies beside the norm, the norm here being the scientific consensus of a given era. A phenomenon is called paranormal when it does not seem explicable by known natural laws, which leaves the field open to new empirical research, interpretation, conjecture and imagination. The founders of parapsychology set themselves the goal of studying scientifically what they regard as extrasensory perception and psychokinesis. Despite the existence of parapsychology laboratories at some universities, notably in Great Britain, the paranormal is generally regarded as a subject unworthy of serious study. It is, however, sometimes associated with activities [...]

[...] is not considered paranormal by neuroscientists);
• The various means of communicating with the dead: natural (mediumship, necromancy) or artificial (instrumental transcommunication such as electronic voice phenomena);
• Apparitions from the beyond (ghosts, revenants, ectoplasms, poltergeists, etc.);
• Cryptozoology (which studies the existence of unknown species): a rather unfair classification, since the aim of cryptozoology is less to cultivate myths than to find out whether or not a real unknown animal species lies behind a legend;
• The UFO phenomenon and its offshoots (crop circles).

Order Form Full

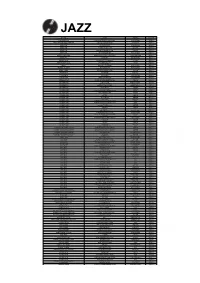

JAZZ

| ARTIST | TITLE | LABEL | RETAIL |
| --- | --- | --- | --- |
| ADDERLEY, CANNONBALL | SOMETHIN' ELSE | BLUE NOTE | RM112.00 |
| ARMSTRONG, LOUIS | LOUIS ARMSTRONG PLAYS W.C. HANDY | PURE PLEASURE | RM188.00 |
| ARMSTRONG, LOUIS & DUKE ELLINGTON | THE GREAT REUNION (180 GR) | PARLOPHONE | RM124.00 |
| AYLER, ALBERT | LIVE IN FRANCE JULY 25, 1970 | B13 | RM136.00 |
| BAKER, CHET | DAYBREAK (180 GR) | STEEPLECHASE | RM139.00 |
| BAKER, CHET | IT COULD HAPPEN TO YOU | RIVERSIDE | RM119.00 |
| BAKER, CHET | SINGS & STRINGS | VINYL PASSION | RM146.00 |
| BAKER, CHET | THE LYRICAL TRUMPET OF CHET | JAZZ WAX | RM134.00 |
| BAKER, CHET | WITH STRINGS (180 GR) | MUSIC ON VINYL | RM155.00 |
| BERRY, OVERTON | T.O.B.E. + LIVE AT THE DOUBLET | LIGHT 1/T ATTIC | RM124.00 |
| BIG BAD VOODOO DADDY | BIG BAD VOODOO DADDY (PURPLE VINYL) | LONESTAR RECORDS | RM115.00 |
| BLAKEY, ART | 3 BLIND MICE | UNITED ARTISTS | RM95.00 |
| BROETZMANN, PETER | FULL BLAST | JAZZWERKSTATT | RM95.00 |
| BRUBECK, DAVE | THE ESSENTIAL DAVE BRUBECK | COLUMBIA | RM146.00 |
| BRUBECK, DAVE - OCTET | DAVE BRUBECK OCTET | FANTASY | RM119.00 |
| BRUBECK, DAVE - QUARTET | BRUBECK TIME | DOXY | RM125.00 |
| BRUUT! | MAD PACK (180 GR WHITE) | MUSIC ON VINYL | RM149.00 |
| BUCKSHOT LEFONQUE | MUSIC EVOLUTION | MUSIC ON VINYL | RM147.00 |
| BURRELL, KENNY | MIDNIGHT BLUE (MONO) (200 GR) | CLASSIC RECORDS | RM147.00 |
| BURRELL, KENNY | WEAVER OF DREAMS (180 GR) | WAX TIME | RM138.00 |
| BYRD, DONALD | BLACK BYRD | BLUE NOTE | RM112.00 |
| CHERRY, DON | MU (FIRST PART) (180 GR) | BYG ACTUEL | RM95.00 |
| CLAYTON, BUCK | HOW HI THE FI | PURE PLEASURE | RM188.00 |
| COLE, NAT KING | PENTHOUSE SERENADE | PURE PLEASURE | RM157.00 |
| COLEMAN, ORNETTE | AT THE TOWN HALL, DECEMBER 1962 | WAX LOVE | RM107.00 |
| COLTRANE, ALICE | JOURNEY IN SATCHIDANANDA (180 GR) | IMPULSE | |

Atlantic Tops Billboard's Hot 100 Label Chart Action Report in Qtr.

APRIL 5, 1969
SEVENTY-FIFTH YEAR
1.00
COIN MACHINE PAGES 45 TO 50
The International Music-Record Newsweekly

Atlantic Tops Billboard's Hot 100 Label Chart Action Report in Qtr.
By MIKE GROSS

NEW YORK - The Atlantic Records label topped the "Hot 100" field for the first quarter of 1969 in the initial survey compiled by Billboard's Charts Department. Atlantic had 6.8 per cent of the chart action during the first three months of the year and placed 20 titles on [...]

[...] cent share and six titles. Placing fourth was Reprise Records with 4.4 per cent and 10 titles; Motown Records was fifth with a 4.2 per cent share and six titles. Rounding out the "top 10" in their respective order were: Epic, Stax, Atco, Columbia and Uni.

[...]ords placed second with a 5.5 per cent share and seven titles; Columbia came in third with a 5.3 per cent share and seven titles; Uni placed fourth with a 4.3 per cent share and four titles, and Tamla took fifth with a 4.0 per cent share and four [...]

2 HOTELS SRO - REGISTRATION TO IMIC OPEN

NEW YORK - It's SRO at the two hotels that have been booked at special conference rates for the International Music Industry Conference in the Bahamas April 20-23. Accommodations have been exhausted at the Paradise Island Hotel & [...]

U.K.'s Sales, Album Prod. Soared in '68
By GRAEME ANDREWS

LONDON - Britain's record industry hit an all-time high in sales, exports and album production in 1968, according to Ministry of Technology statistics. The results show that the industry managed to ride out [...]

Surviving Life's Tragedy; Seeking Greater Meaning

THE GRAND RAPIDS PRESS, THURSDAY, OCTOBER 17, 2019, page B5

INTERFAITH INSIGHT
Surviving life's tragedy; seeking greater meaning
Douglas Kindschi, Director, Kaufman Interfaith Institute, GVSU

"Why religion, of all things? Why not something that has an impact in the real world?"

This was the question that religion graduate student at Harvard University Elaine Pagels was asked by her future husband, a quantum physicist faculty member at New York's Rockefeller University. She in turn questioned him about why he loved the "study of virtually invisible elementary particles: hadrons, boson, and quarks." They came to realize, as she put it, that "each of us [...]

If you go
What: Elaine Pagels, Interfaith Consortium Conference
Date: Oct. 30
Time: 2 p.m. What do "secret gospels" suggest about Jesus and his teaching?; and 7 p.m. Why Religion? A Personal Story
Where: Eberhard Center, GVSU, 301 W. [...]

ETHICS & RELIGION TALK
Approaching people with different perspectives
Rabbi David Krishef

Jason N. asks, "How can I talk to others about climate change, money in politics, women's health, etc., from a faith perspective to people with faith, but a different political perspective?"

The Rev. Colleen Squires, minister at All Souls Community Church of West Michigan, a Unitarian Universalist Congregation, responds:

[...]

"Reformed and Presbyterian Christians cherish the approach taught by our great spiritual forebear, Augustine of Hippo: Fides quaerens intellectum, 'a faith that seeks understanding.' Your aim should be to understand different perspectives, not to debate or refute them, and certainly not to offend others. A good rule is found in the Epistle of James: 'My beloved brethren, let every man be swift to hear, slow to speak, slow to wrath: for the wrath of man worketh [...]

QUANTUM CHROMODYNAMICS' William MARCIANO and Heinz

PHYSICS REPORTS (Section C of Physics Letters) 36, No. 3 (1978) 137-276. NORTH-HOLLAND PUBLISHING COMPANY

QUANTUM CHROMODYNAMICS
William MARCIANO and Heinz PAGELS
The Rockefeller University, New York, N.Y. 10021, U.S.A.
Received 6 June 1977

Contents
1. Introduction
1.1. What is QCD?
1.2. The QCD phase transitions
1.3. Properties of QCD
1.4. What is in this review
2. Renormalization of gauge theories
2.1. The path integral
2.2. Gauge fields and the Faddeev-Popov trick
2.3. Proof of renormalizability for gauge fields
2.4. Feynman rules for QCD
2.5. Slavnov-Taylor identities
2.6. The Schwinger-Dyson equations for QCD
3. The renormalization group for gauge theories
3.1. Renormalization group equations
3.2. Scaling properties of QCD
3.3. Further applications of the QCD renormalization group
4. Two dimensional gauge theories
4.1. The Schwinger model
4.2. [...]
5. Color confinement and perturbation theory
5.1. The infrared structure of QED
5.2. Infrared divergences in QCD
5.3. The Kinoshita-Lee-Nauenberg theorem and confinement
5.4. Beyond perturbation theory: a possible confinement scheme
5.5. Non-perturbative approaches to QCD
6. Topological solitons
6.1. Introduction
6.2. Topological solitons in D = 1, 2 and 3 dimensions
6.3. The pseudoparticle or instanton for D = 4
7. Recent developments
7.1. Dilute gases of topological solitons and quark confinement
7.2. QCD amplitudes as a function of the gauge coupling
8. Conclusions
References

Capitol 100-800 Main Series (1968 to 1972)

Capitol 100-800 Main Series (1968 to 1972)

SWBO 101 - The Beatles - Beatles [1976] Two record set. Reissue of Apple SWBO 101. Back In The U.S.S.R./Dear Prudence/Glass Onion/Ob-La-Di, Ob-La-Da/Wild Honey Pie/The Continuing Story Of Bungalow Bill/While My Guitar Gently Weeps/Happiness Is A Warm Gun//Martha My Dear/I’m So Tired/Blackbird/Piggies/Rocky Raccoon/Don’t Pass Me By/Why Don’t We Do It In The Road?/I Will/Julia//Birthday/Yer Blues/Mother Nature’s Son/Everybody’s Got Something To Hide Except Me And My Monkey/Sexy Sadie/Helter Skelter/Long, Long, Long//Revolution 1/Honey Pie/Savoy Truffle/Cry Baby Cry/Revolution 9/Good Night

ST 102 - Soul Knight - Roy Meriwether Trio [1968] Cow Cow Boogaloo/For Your Precious Love/Think/Mrs. Robinson/If You Gotta Make a Fool of Somebody/Walk With Me//Mission Impossible/Soul Serenade/Mean Greens/Chain of Fools/Satisfy My Soul/Norwegian Wood

ST 103 - Wichita Lineman - Glen Campbell [1968] Wichita Lineman/(Sittin’ On) The Dock Of The Bay/If You Go Away/Ann/Words/Fate Of Man//Dreams Of The Everyday Housewife/The Straight Life/Reason To Believe/You Better Sit Down Kids/That’s Not Home

ST 104 - Talk to Me, Baby - Michael Dees [1968] Talk to Me, Baby/Make Me Rainbows/Eleanor Rigby/For Once/Beautiful Friendship/Windmills of Your Mind/Nice ‘n’ Easy/Gentle Rain/Somewhere/Sweet Memories/Leaves are the Tears

ST 105 - Two Shows Nightly - Peggy Lee [1969] Withdrawn shortly after release.

Case Against Accident and Self Organization

A Case Against Accident and Self-Organization
Dean L. Overman
ROWMAN & LITTLEFIELD PUBLISHERS, INC.
Lanham • Boulder • New York • Oxford

Copyright © 1997 by Dean L. Overman. All rights reserved. Printed in the United States of America. British Library Cataloguing in Publication Information Available.

Quotation reprinted with the permission of Simon & Schuster from Catch-22 by Joseph Heller. Copyright © 1955, 1961 by Joseph Heller. Copyright renewed © 1989 by Joseph Heller.

Quotations reprinted with the permission of Adler & Adler from Evolution: A Theory in Crisis by Michael Denton. Copyright © 1985 by Michael Denton.

Quotations reprinted with the permission of Cambridge University Press from Information Theory and Molecular Biology by Hubert Yockey. Copyright © 1992 by Cambridge University Press.

Library of Congress Cataloging-in-Publication Data
Overman, Dean L. A case against accident and self-organization / Dean L. Overman. p. cm. Includes bibliographical references and index. ISBN 0-8476-8966-2 (cloth : alk. paper) 1. Life-Origin. 2. Molecular biology. 3. Probabilities. 4. Self-organizing systems. 5. Cosmology. 6. Nuclear astrophysics. 7. Evolution-Philosophy. I. Title. QH325.O84 1997 576.8'3-dc21 97-25885 CIP
ISBN 0-8476-8966-2 (cloth: alk. ppr.)

The paper used in this publication meets the minimum requirements of American National Standard for Information Sciences Permanence of Paper for Printed Library Materials, ANSI Z39.48-1984.

This book is dedicated to Linda, Christiana, and Elisabeth.

CONTENTS
FOREWORD

Part 3 Elaine Pagels and Why Faith Matters Even in Life's Darkest

Extraordinary Persons of Faith: Part 3
Elaine Pagels and Why Faith Matters Even in Life’s Darkest Times
Mother's Day Message, May 10/20
Rev. Del Stewart

Introduction

Elaine Pagels (née Hiesey) was born in California in 1943. She is an American Professor of Religion at Princeton University. Her area of academic expertise and research is early Christianity and Gnosticism.

*Gnosticism describes the thought and practise of various cults of the late pre-Christian and early Christian centuries, distinguished by the conviction that material things are evil and that the individual person’s liberation comes through gnosis, i.e. the [sometimes secret] knowledge of spiritual mysteries.

Pagels’ best-selling book, “The Gnostic Gospels”, published in 1979, examines divisions in the early church and the way women have been viewed throughout both Jewish and Christian history. “The Gnostic Gospels” was named as one of the 100 best books of the 20th century. Pagels is 77 years old.

Elaine Pagels with U.S. President Obama

Elaine Pagels’ Early Life and Education

Born into a fiercely secular family, Elaine Pagels began her career and spiritual journey with an act of teen rebellion. At age 13, along with some Christian friends, a curious Elaine Pagels went to a revival preached by Billy Graham at the Cow Palace near San Francisco. When the world-renowned evangelist invited the assembled crowd of some 23,000 to be “born again”, the teenage girl was unable to resist his invitation. With her eyes filled with tears, she went forward to be “saved”. Later, in a personal memoir, Pagels wrote that the Billy Graham revival experience “changed my life, as the preacher promised it would – although not entirely as he intended”.

Perfect Symmetry

"The fabric of the world has its center everywhere and its circumference nowhere."
Cardinal Nicolas of Cusa, fifteenth century

The attempt to understand the origin of the universe is the greatest challenge confronting the physical sciences. Armed with new concepts, scientists are rising to meet that challenge, although they know that success may be far away. Yet when the origin of the universe is understood, it will open a new vision of reality at the threshold of our imagination, a comprehensive vision that is beautiful, wonderful, and filled with the mystery of existence. It will be our intellectual gift to our progeny and our tribute to the scientific heroes who began this great adventure of the human mind, never to see it completed.
From Perfect Symmetry

BANTAM NEW AGE BOOKS

This important imprint includes books in a variety of fields and disciplines and deals with the search for meaning, growth and change. They are books that circumscribe our times and our future. Ask your bookseller for the books you have missed.

THE ART OF BREATHING by Nancy Zi
BETWEEN HEALTH AND ILLNESS by Barbara B. Brown
THE CASE FOR REINCARNATION by Joe Fisher
THE COSMIC CODE by Heinz R. Pagels
CREATIVE VISUALIZATION by Shakti Gawain
THE DANCING WU LI MASTERS by Gary Zukav
DON'T SHOOT THE DOG: HOW TO IMPROVE YOURSELF AND OTHERS THROUGH BEHAVIORAL TRAINING by Karen Pryor
ECOTOPIA by Ernest Callenbach
AN END TO INNOCENCE by Sheldon Kopp
ENTROPY by Jeremy Rifkin with Ted Howard
THE FIRST THREE MINUTES by Steven Weinberg
FOCUSING by Dr. Eugene T. [...]

Exploring the Cultural Meaning of the Natural Sciences in Contemporary

Rhetoric and Representation: Exploring the Cultural Meaning of the Natural Sciences in Contemporary Popular Science Writing and Literature
Juuso Aarnio
Doctoral Dissertation, Department of English, University of Helsinki, 2008
© Juuso Aarnio 2008
ISBN 978-952-92-3478-3 (paperback)
ISBN 978-952-10-4581-3 (PDF)
Helsinki University Printing House, Helsinki 2008

Abstract

During the last twenty-five years, literary critics have become increasingly aware of the complexities surrounding the relationship between the so-called two cultures of science and literature. Instead of regarding them as antagonistic endeavours, many now argue that the two emerge from the common ground of language, and often deal with and respond to similar questions, although their methods of doing it are different. While this thesis does not suggest that science should simply be treated as an instance of discursive practices, it shows that our understanding of scientific ideas is to a considerable extent guided by the employment of linguistic structures that allow genres of science writing such as popular science to express arguments in a persuasive manner. In this task figurative language plays a significant role, as it helps create a close link between content and form, the latter not only stylistically supporting the former but also frequently epitomizing the philosophy behind what is said and establishing various kinds of argumentative logic. As many previous studies have tended to focus only on the use of metaphor in scientific arguments, this thesis seeks to widen the scope by also analysing the use of other figures of speech. Because of its important role in popular science writing, figurative language constitutes a bridge to literature employing scientific ideas.

By Daisy Xiaohui Yang Submitted to the Graduate Faculty of Arts And

DUSK WITHOUT SUNSET: ACTIVELY AGING IN TRADITIONAL CHINESE MEDICINE
by Daisy Xiaohui Yang
B.A., Wuhan University, China, 1998

Submitted to the Graduate Faculty of Arts and Sciences in partial fulfillment of the requirements for the degree of Doctor of Philosophy
University of Pittsburgh, 2006

UNIVERSITY OF PITTSBURGH, SCHOOL OF ARTS AND SCIENCES

This dissertation was presented by Daisy Xiaohui Yang. It was defended on April 21, 2006 and approved by:
Nicole Constable, Professor, Department of Anthropology
Akiko Hashimoto, Professor, Department of Sociology
Carol McAllister, Associate Professor, Department of Behavioral and Community Health Sciences
Dissertation Advisor: Joseph Alter, Professor, Department of Anthropology

Copyright © by Daisy Xiaohui Yang 2006

DUSK WITHOUT SUNSET: ACTIVELY AGING IN TRADITIONAL CHINESE MEDICINE
Daisy Xiaohui Yang, PhD
University of Pittsburgh, 2006

Drawing on theoretical perspectives in critical medical anthropology, this dissertation focuses on the intersection between Traditional Chinese Medicine (TCM), aging and identity in urban China. It gives special attention to elderly people’s embodied agency in assimilating, challenging, and resisting political and social discourses about getting old. In most general terms my argument is that embodied agency is expressed by participating in daily health regimens referred to as yangsheng. With its origins in ancient Chinese medical texts and health practices, but also having incorporated many modern elements, yangsheng may be understood as a system of beliefs and practices designed for self-health cultivation. In light of major anthropological theories that provide an understanding of biopower, somatization and agency, my argument is twofold. First, the state discourse on healthy aging, prompted by social, economic and demographic changes, has had a tremendous effect on how the elderly think and act with reference to their physical and mental health.

People and Things

People and things

[...]graphy at IBM's own research centre.

X-rays, with their shorter wavelength, overcome the resolution problems inherent in optical lithography, and the development of new compact X-ray synchrotron light sources for chip manufacture is being pushed hard in several countries. Brookhaven has been chosen as the site for a new superconducting X-ray lithography source in a $207 million US Department of Defense programme (story next month).

Meanwhile a compact X-ray source is being designed and built for IBM by Oxford Instruments in the UK, and a $20 million contract has been awarded to Maxwell-Brobeck of San Diego for a source for the Center for Advanced Microstructures and Devices at Louisiana State University, Baton Rouge.

Figure caption: The face of chips to come. Electron microscope picture of a chip developed by IBM using an X-ray beam from the US National Synchrotron Light Source at Brookhaven. Metal lines, less than a micron across, connect to transistors (seen as small dark circles) half a micron in diameter.

On people

Helen Edwards, Head of Fermilab's Accelerator Division, has been awarded a prestigious MacArthur Fellowship by the Chicago-based MacArthur Foundation in recognition of her key role in the construction and commissioning of Fermilab's Tevatron ring.

The 1988 Dirac Medals of the International Centre for Theoretical Physics (ICTP), Trieste, Italy, have been awarded to Efim Samoilovich Fradkin of the Lebedev Physical Institute, Moscow, and to David Gross of Princeton. Fradkin's award marks his many fruitful contributions to quantum field theory and statistics: functional methods, basic results applicable to a range of field theories, the quantization of relativistic systems, etc., which have gone on to play a vital role in modern theories of fields, strings and membranes.