Probabilistic Naming of Functions in Stripped Binaries

Recommended publications

-

Unemployment Benefits and Reservation Wages: Key Elasticities from a Stripped-Down Job Search Approach

Estudos e Documentos de Trabalho Working Papers 3 | 2008 UNEMPLOYMENT BENEFITS AND RESERVATION WAGES: KEY ELASTICITIES FROM A STRIPPED-DOWN JOB SEARCH APPROACH John T. Addison Mário Centeno Pedro Portugal February 2008 The analyses, opinions and findings of these papers represent the views of the authors, they are not necessarily those of the Banco de Portugal. Please address correspondence to Pedro Portugal Economics and Research Department Banco de Portugal, Av. Almirante Reis no. 71, 1150-012 Lisboa, Portugal; Tel.: 351 213 138 410, Email: [email protected] BANCO DE PORTUGAL Economics and Research Department Av. Almirante Reis, 71-6th floor 1150-012 Lisboa www.bportugal.pt Printed and distributed by Administrative Services Department Av. Almirante Reis, 71-2nd floor 1150-012 Lisboa Number of copies printed 170 issues Legal Deposit no. 3664/83 ISSN 0870-0117 ISBN Unemployment Benefits and Reservation Wages: Key Elasticities from a Stripped-Down Job Search Approach By John T. Addison†,M´ario Centeno‡ and Pedro Portugal§ †Queen’s University Belfast and University of South Carolina ‡Banco de Portugal and Universidade T´ecnica de Lisboa §Banco de Portugal and Universidade NOVA de Lisboa This Version: February 2008 Abstract This paper exploits survey information on reservation wages and data on actual wages from the European Community Household Panel to deduce in the manner of Lancaster and Chesher (1983) additional parameters of a stylized structural search model; specifically, reservation wage and transition/duration elasticities. The informational requirements of this approach are minimal, thereby facilitating comparisons between countries. Further, its policy content is immediate insofar as the impact of unemployment benefit rules and measures increasing the arrival rate of job offers are concerned. -

University of Glasgow, Glasgow, Scotland

UNIVERSITY OF WISCONSIN EAU CLAIRE CENTER FOR INTERNATIONAL EDUCATION Study Abroad UNIVERSITY OF GLASGOW, GLASGOW, SCOTLAND 2020 Program Guide ABLE OF ONTENTS Sexual Harassment and “Lad Culture” in the T C UK ...................................................................... 12 Academics .............................................................. 5 Emergency Contacts ...................................... 13 Pre-departure Planning ..................................... 5 911 Equivalent in the UK ............................... 13 Graduate Courses ............................................. 5 Marijuana and other Illegal Drugs ................ 13 Credits and Course Load .................................. 5 Required Documents .......................................... 14 Registration at Glasgow .................................... 5 Visa ................................................................... 14 Class Attendance ............................................... 5 Why Can’t I fly through Ireland? ................... 14 Grades ................................................................. 6 Visas for Travel to Other Countries .............. 14 Glasgow & UWEC Transcripts ......................... 6 Packing Tips ........................................................ 14 UK Academic System ....................................... 6 Weather ............................................................ 14 Semester Students Service-Learning ............. 9 Clothing............................................................ -

Netflix and the Development of the Internet Television Network

Syracuse University SURFACE Dissertations - ALL SURFACE May 2016 Netflix and the Development of the Internet Television Network Laura Osur Syracuse University Follow this and additional works at: https://surface.syr.edu/etd Part of the Social and Behavioral Sciences Commons Recommended Citation Osur, Laura, "Netflix and the Development of the Internet Television Network" (2016). Dissertations - ALL. 448. https://surface.syr.edu/etd/448 This Dissertation is brought to you for free and open access by the SURFACE at SURFACE. It has been accepted for inclusion in Dissertations - ALL by an authorized administrator of SURFACE. For more information, please contact [email protected]. Abstract When Netflix launched in April 1998, Internet video was in its infancy. Eighteen years later, Netflix has developed into the first truly global Internet TV network. Many books have been written about the five broadcast networks – NBC, CBS, ABC, Fox, and the CW – and many about the major cable networks – HBO, CNN, MTV, Nickelodeon, just to name a few – and this is the fitting time to undertake a detailed analysis of how Netflix, as the preeminent Internet TV networks, has come to be. This book, then, combines historical, industrial, and textual analysis to investigate, contextualize, and historicize Netflix's development as an Internet TV network. The book is split into four chapters. The first explores the ways in which Netflix's development during its early years a DVD-by-mail company – 1998-2007, a period I am calling "Netflix as Rental Company" – lay the foundations for the company's future iterations and successes. During this period, Netflix adapted DVD distribution to the Internet, revolutionizing the way viewers receive, watch, and choose content, and built a brand reputation on consumer-centric innovation. -

A Threshold Crossed Israeli Authorities and the Crimes of Apartheid and Persecution WATCH

HUMAN RIGHTS A Threshold Crossed Israeli Authorities and the Crimes of Apartheid and Persecution WATCH A Threshold Crossed Israeli Authorities and the Crimes of Apartheid and Persecution Copyright © 2021 Human Rights Watch All rights reserved. Printed in the United States of America ISBN: 978-1-62313-900-1 Cover design by Rafael Jimenez Human Rights Watch defends the rights of people worldwide. We scrupulously investigate abuses, expose the facts widely, and pressure those with power to respect rights and secure justice. Human Rights Watch is an independent, international organization that works as part of a vibrant movement to uphold human dignity and advance the cause of human rights for all. Human Rights Watch is an international organization with staff in more than 40 countries, and offices in Amsterdam, Beirut, Berlin, Brussels, Chicago, Geneva, Goma, Johannesburg, London, Los Angeles, Moscow, Nairobi, New York, Paris, San Francisco, Sydney, Tokyo, Toronto, Tunis, Washington DC, and Zurich. For more information, please visit our website: http://www.hrw.org APRIL 2021 ISBN: 978-1-62313-900-1 A Threshold Crossed Israeli Authorities and the Crimes of Apartheid and Persecution Map .................................................................................................................................. i Summary ......................................................................................................................... 2 Definitions of Apartheid and Persecution ................................................................................. -

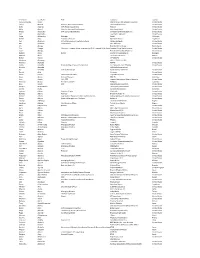

First Name Last Name Title Company Country Anouk Florencia Aaron Warner Bros

First Name Last Name Title Company Country Anouk Florencia Aaron Warner Bros. International Television United States Carlos Abascal Director, Ole Communications Ole Communications United States Kelly Abcarian SVP, Product Leadership Nielsen United States Mike Abend Director, Business Development New Form Digital United States Friday Abernethy SVP, Content Distribution Univision Communications Inc United States Jack Abernethy Twentieth Television United States Salua Abisambra Manager Salabi Colombia Rafael Aboy Account Executive Newsline Report Argentina Cori Abraham SVP of Development and International Oxygen Network United States Mo Abraham Camera Man VIP Television United States Cris Abrego Endemol Shine Group Netherlands Cris Abrego Chairman, Endemol Shine Americas and CEO, Endemol Shine North EndemolAmerica Shine North America United States Steve Abrego Endemol Shine North America United States Patrícia Abreu Dirctor Upstar Comunicações SA Portugal Manuel Abud TV Azteca SAB de CV Mexico Rafael Abudo VIP 2000 TV United States Abraham Aburman LIVE IT PRODUCTIONS Francine Acevedo NATPE United States Hulda Acevedo Programming Acquisitions Executive A+E Networks Latin America United States Kristine Acevedo All3Media International Ric Acevedo Executive Producer North Atlantic Media LLC United States Ronald Acha Univision United States David Acosta Senior Vice President City National Bank United States Jorge Acosta General Manager NTC TV Colombia Juan Acosta EVP, COO Viacom International Media Networks United States Mauricio Acosta President and CEO MAZDOC Colombia Raul Acosta CEO Global Media Federation United States Viviana Acosta-Rubio Telemundo Internacional United States Camilo Acuña Caracol Internacional Colombia Andrea Adams Director of Sales FilmTrack United States Barbara Adams Founder Broken To Reign TV United States Robin C. Adams Executive In Charge of Content and Production Endavo Media and Communications, Inc. -

Multi-Periodic Pulsations of a Stripped Red Giant Star in an Eclipsing Binary

1 Multi-periodic pulsations of a stripped red giant star in an eclipsing binary Pierre F. L. Maxted1, Aldo M. Serenelli2, Andrea Miglio3, Thomas R. Marsh4, Ulrich Heber5, Vikram S. Dhillon6, Stuart Littlefair6, Chris Copperwheat7, Barry Smalley1, Elmé Breedt4, Veronika Schaffenroth5,8 1 Astrophysics Group, Keele University, Keele, Staffordshire, ST5 5BG, UK. 2 Instituto de Ciencias del Espacio (CSIC-IEEC), Facultad de Ciencias, Campus UAB, 08193, Bellaterra, Spain. 3 School of Physics and Astronomy, University of Birmingham, Edgbaston, Birmingham, B15 2TT, UK. 4 Department of Physics, University of Warwick, Coventry, CV4 7AL, UK. 5 Dr. Karl Remeis-Observatory & ECAP, Astronomical Institute, Friedrich-Alexander University Erlangen-Nuremberg, Sternwartstr. 7, 96049, Bamberg, Germany. 6 Department of Physics and Astronomy, University of Sheffield, Sheffield, S3 7RH, UK. 7Astrophysics Research Institute, Liverpool John Moores University, Twelve Quays House, Egerton Wharf, Birkenhead, Wirral, CH41 1LD, UK. 8Institute for Astro- and Particle Physics, University of Innsbruck, Technikerstrasse. 25/8, 6020 Innsbruck, Austria. Low mass white dwarfs are the remnants of disrupted red giant stars in binary millisecond pulsars1 and other exotic binary star systems2–4. Some low mass white dwarfs cool rapidly, while others stay bright for millions of years due to stable 2 fusion in thick surface hydrogen layers5. This dichotomy is not well understood so their potential use as independent clocks to test the spin-down ages of pulsars6,7 or as probes of the extreme environments in which low mass white dwarfs form8–10 cannot be fully exploited. Here we present precise mass and radius measurements for the precursor to a low mass white dwarf. -

Tbivision.Com October/November 2018

Formats TBIvision.com October/November 2018 MIPCOM Stand No: P3.C10 @all3media_int all3mediainternational.com FormatspOFC OctNov18.indd 1 01/10/2018 20:27 The original and best adventure reality format Produced in more than 40 countries 37 seasons in the US Production hubs available FormatspIFC Banijay Survivor OctNov18.indd 1 02/10/2018 10:42 CONTENTS INSIDE THIS ISSUE 6 8 14 This issue 6 Beat the Internet with Vice 14 TV’s love affair Kaltrina Bylykbashi visits the set for Vice Studios and UKTV’s Beat the ITV Studios Global Creative Network MD, Mike Beale, speaks to TBI Internet, a new play-along game show about Love Island’s international success 8 Hot picks Regulars TBI looks at some of the hottest upcoming unscripted titles. From Evil 4. People A round up of the latest movers and shakers in international TV Monkeys to finding The Greatest Dancer, there’s something for everyone 16. Last Word: Endemol Shine Group’s Lisa Perrin in our top list Editor Manori Ravindran • [email protected] • manori_r Television Business International (USPS 003-807) is published bi-monthly (Jan, Mar, Apr, Jun, Aug and Oct) by Informa Telecoms Media, Maple House, 149 Tottenham Court Road, London, W1T 7AD, United Managing editor Kaltrina Bylykbashi • [email protected] • @bylkbashi Kingdom. The 2006 US Institutional subscription price is $255. Airfreight and mailing in the USA by Sales manager Michael Callan • [email protected] Agent named Air Business, C/O Priority Airfreight NY Ltd, 147-29 182nd Street, Jamaica, NY11413. Periodical postage paid at Jamaica NY 11431. -

The Greater Boston Housing Report Card 2015 the Housing Cost Conundrum

UNDERSTANDING BOSTON The Greater Boston Housing Report Card 2015 The Housing Cost Conundrum Barry Bluestone James Huessy Eleanor White Charles Eisenberg Tim Davis with assistance from William Reyelt Prepared by The Kitty and Michael Dukakis Center for Urban and Regional Policy Northeastern University for The Boston Foundation Edited by Rebecca Koepnick Mary Jo Meisner Kathleen Clute The Boston Foundation November 2015 The Boston Foundation, Greater Boston’s community foundation, is one of the largest community foundations in the nation, with net assets of some $1 billion. In 2014, the Foundation and its donors made more than $112 million in grants to nonprofit organizations and received gifts of nearly $112 million. In celebration of its Centennial in 2015, the Boston Foundation has launched the Campaign for Boston to strengthen the Permanent Fund for Boston, the only endowment fund focused on the most pressing needs of Greater Boston. The Foundation is proud to be a partner in philanthropy, with more than 1,000 separate charitable funds established by donors either for the general benefit of the community or for special purposes. The Boston Foundation also serves as a major civic leader, think tank and advocacy organization, commissioning research into the most critical issues of our time and helping to shape public policy designed to advance opportunity for everyone in Greater Boston. The Philanthropic Initiative (TPI), an operating unit of the Foundation, designs and implements customized philanthropic strategies for families, foundations and corporations around the globe. For more information about the Boston Foundation and TPI, visit tbf.org or call 617-338-1700. -

Health of Boston 2014-2015 Martin J

Prepared by the Boston Public Health Commission Health of Boston 2014-2015 Martin J. Walsh, Mayor, City of Boston Paula Johnson, MD, MPH, Chair Board of the Boston Public Health Commission HuyHuy Nguyen,Nguyen, MD,MD, InterimInterim ExecutiveExecutive Director and Medical Director Building a Healthy Boston Boston Public Health Commission Health of Boston 2014-2015 Copyright Information All material contained in this report is in the public domain and may be used and reprinted without special permission; however, citation as to the source is appropriate. Suggested Citation Health of Boston 2014-2015: Boston Public Health Commission Research and Evaluation Office Boston, Massachusetts 2015 Acknowledgements This report was prepared by Snehal N. Shah, MD, MPH; H. Denise Dodds, PhD, MCRP, MEd; Dan Dooley, BA; Phyllis D. Sims, MS; S. Helen Ayanian, BA; Neelesh Batra, MSc; Alan Fossa, MPH; Shannon E. O’Malley, MS; Dinesh Pokhrel, MPH; Elizabeth Russo, MD, MPH; Rashida Taher, MPH; Sarah Thomsen- Ferreira, M.S.; Megan Young, MHS; Jun Zhao, PhD. The cover was designed by Lisa Costanzo, BFA. Health of Boston 2014-2015 Table of Contents Acknowledgements ........................................................................................................................................................................ 1 Introduction ...................................................................................................................................................................................... 5 Executive Summary ...................................................................................................................................................................... -

The Criminal Code of Finland (39/1889, Amendments up to 766/2015 Included)

Translation from Finnish Legally binding only in Finnish and Swedish Ministry of Justice, Finland The Criminal Code of Finland (39/1889, amendments up to 766/2015 included) Chapter 1 - Scope of application of the criminal law of Finland (626/1996) Section 1 - Offence committed in Finland (1) Finnish law applies to an offence committed in Finland. (2) Application of Finnish law to an offence committed in Finland’s economic zone is subject to the Act on the Economic Zone of Finland (1058/2004) and the Act on the Environmental Protection in Navigation (300/1979). (1680/2009) Section 2 - Offence connected with a Finnish vessel (626/1996) (1) Finnish law applies to an offence committed on board a Finnish vessel or air- craft if the offence was committed (1) while the vessel was on the high seas or in territory not belonging to any State or while the aircraft was in or over such territory, or (2) while the vessel was in the territory of a foreign State or the aircraft was in or over such territory and the offence was committed by the master of the vessel or aircraft, a member of its crew, a passenger or a person who otherwise was on board. (2) Finnish law also applies to an offence committed outside of Finland by the master of a Finnish vessel or aircraft or a member of its crew if, by the offence, the perpetrator has violated his or her special statutory duty as the master of the vessel or aircraft or a member of its crew. Section 3 - Offence directed at Finland (626/1996) (1) Finnish law applies to an offence committed outside of Finland that has been directed at Finland. -

Orbridge — Educational Travel Programs for Small Groups

For details or to reserve: wm.orbridge.com (866) 639-0079 APRIL 10, 2021 – APRIL 14, 2021 POST-TOUR: APRIL 14, 2021 — APRIL 16, 2021 CIVIL RIGHTS—A JOURNEY TO FREEDOM The Alabama cities of Montgomery, Birmingham, and Selma birthed the national leadership of the Civil Rights Movement in the 1950s and 1960s, when tens of thousands of people came together to advance the cause of justice against remarkable odds and fierce resistance. In partnership with the non-profit Alabama Civil Rights Tourism Association and in support of local businesses and communities, Orbridge invites you to experience the people, places, and events igniting change and defining a pivotal period for America that continues today. Dive deeper beyond history's headlines to the newsmakers, learning from actual foot soldiers of the struggle whose vivid and compelling stories bring a history of unforgettable tragedy and irrepressible triumph to life. Dear Alumni and Friends, Join us for an intimate and essential opportunity to explore the Deep South with an informative program that highlights America’s civil rights movement in Alabama. Historically, perhaps no other state has played as vital a role, where a fourth of the official U.S. Civil Rights Trail landmarks are located. On this five-day journey, discover sites that advanced social justice and shifted the course of history. Stand in the pulpit at Dexter Avenue King Memorial Baptist Church where Dr. Martin Luther King, Jr. preached, walk over the Edmund Pettus Bridge where law enforcement clashed with voting rights marchers, and gather with our group at Kelly Ingram Park as 1,000 or so students did in the 1963 Children’s Crusade. -

TRULY GLOBAL Worldscreen.Com *LIST 0515 LIS 1006 LISTINGS 5/4/15 2:18 PM Page 2

*LIST_0515_LIS_1006_LISTINGS 5/4/15 2:17 PM Page 1 WWW.WORLDSCREENINGS.COM MAY 2015 L.A. SCREENINGS EDITION TVLISTINGS THE LEADING SOURCE FOR PROGRAM INFORMATION TRULY GLOBAL WorldScreen.com *LIST_0515_LIS_1006_LISTINGS 5/4/15 2:18 PM Page 2 Exhibitor directory 9 Story Media Group 1728 Multicom Entertainment Group 1735 A+E Networks 1712 NBCUniversal International Television Distribution 1460 America Video Films 1747 Nitro Group/Sony Music Latin 1719 American Cinema International 1707 Novovision 1736 Argentina Audiovisual 1742 Paramount Home Media Distribution 1202 Armoza Formats 1928 Pol-Ka Producciones 960 ATV 1715 Polar Star 1714 Azteca 1924 Programas Para Televisión 1740 BBC Worldwide 1918 RCN Televisión 1906 Beta Film 1923 RCTV International 1709 Beverly Hills Entertainment 1901 Record TV Network 1502 BluePrint Original Content 1713 Red Arrow International 702 Calinos Entertainment 1751 Reed MIDEM 1734 Caracol TV Internacional 1909 Rive Gauche Television 1725 CBS Studios International 1402 RMViSTAR/Peace Point Star 1724 CDC United Network 1910 Rose Entertainment 1560 Cisneros Media Distribution 1702 Sato Co. 1739 Content Television 1721 Smilehood Media 1748 DHX Media 1732 SnapTV 1750 Discovery Program Sales 1755 SOMOS Distribution 1602 Disney Media Distribution Latin America 1917 Sony Pictures Television 902 Dori Media Group 1160 Spiral International 702 Dynamic Television 1706 Starz Worldwide Distribution 1745 Eccho Rights 1759 STUDIOCANAL 1723 Endemol Shine Group 802 Telefe 1802 Entertainment Studios 1708 Telefilms 1902 FLY Content