Retrieving Address-Based Locations from the Web

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The German North Sea Ports' Absorption Into Imperial Germany, 1866–1914

From Unification to Integration: The German North Sea Ports' absorption into Imperial Germany, 1866–1914 Henning Kuhlmann Submitted for the award of Master of Philosophy in History Cardiff University 2016 Summary This thesis concentrates on the economic integration of three principal German North Sea ports – Emden, Bremen and Hamburg – into the Bismarckian nation-state. Prior to the outbreak of the First World War, Emden, Hamburg and Bremen handled a major share of the German Empire’s total overseas trade. However, at the time of the foundation of the Kaiserreich, the cities’ roles within the Empire and the new German nation-state were not yet fully defined. Initially, Hamburg and Bremen insisted upon their traditional role as independent city-states and remained outside the Empire’s customs union. Emden, meanwhile, had welcomed outright annexation by Prussia in 1866. After centuries of economic stagnation, the city had great difficulties competing with Hamburg and Bremen and was hoping for Prussian support. This thesis examines how it was possible to integrate these port cities on an economic and on an underlying level of civic mentalities and local identities. Existing studies have often overlooked the importance that Bismarck attributed to the cultural or indeed the ideological re-alignment of Hamburg and Bremen. Therefore, this study will look at the way the people of Hamburg and Bremen traditionally defined their (liberal) identity and the way this changed during the 1870s and 1880s. It will also investigate the role of the acquisition of colonies during the process of Hamburg and Bremen’s accession. In Hamburg in particular, the agreement to join the customs union had a significant impact on the merchants’ stance on colonialism. -

FESTSTELLUNGSENTWURF Für Den

Document (Unterlage) 17.1.4.1. New construction of the Bundesautobahn A 20 (the form also lists the options "upgrade" and "federal road"), from approx. km 100+000 to approx. km 113+000. Road construction administration of the State of Lower Saxony. Nearest locality: Dringenburg. Construction length: 13.00 km. Length of junctions: (blank). Planning approval draft (Feststellungsentwurf) for the new construction of the A 20 from Westerstede to Drochtersen, Section 1, from the A 28 near Westerstede to the A 29 near Jaderberg. Noise assessment, subordinate road network. Prepared: Oldenburg, 28 April 2015, Niedersächsische Landesbehörde für Straßenbau und Verkehr, Oldenburg regional office, by order: signed Mannl. Contents: Bibliography; List of tables; Appendices; 1 Task definition; 2 Basis of the noise assessment; 2.1 Legal basis and assessment; 2.2 Delimitation of possible -

Population by Area/Community Churches

Ostfriesen Genealogical Society of America OGSA PO Box 50919 Mendota, MN 55150 651-269-3580 www.ogsa.us 1875 Ostfriesland Census Detail The 1875 census provided insights into the communities and neighborhoods across Ostfriesland. The following table is sorted by Amt (Church district) and Kirchspiel (Church community) or location if the enumerated area does not have its own Church. Region Religion Town/Area Denomination Amt Kirchspiel / Location Lutheran Reformed Catholic Other Jewish Total Christian Aurich Lutheran, Aurich Aurich 3,569 498 282 106 364 4,819 Reformed, Catholic Plaggenburg Lutheran Aurich Aurich 529 40 4 573 Dietrichsfeld Aurich Aurich 201 6 207 Egels Aurich Aurich 235 1 7 243 Ertum Aurich Aurich 337 8 345 Georgßfeld Aurich Aurich 88 4 2 94 Hartum Aurich Aurich 248 18 9 275 Ihlow Aurich Weene 6 6 Kirchdorf Aurich Aurich 527 15 1 3 546 Meerhusen Aurich Aurich 9 9 Pfalsdorf Aurich Aurich 119 41 160 Popens Aurich Aurich 127 4 131 Rahe Aurich Aurich 268 25 3 296 Sandhorst Aurich Aurich 643 48 8 7 706 Tannenhausen Aurich Aurich 337 16 353 © 2018: Ostfriesen Genealogical Society of America 1 1875 Ostfriesland Census Detail Region Religion Town/Area Denomination Amt Kirchspiel / Location Lutheran Reformed Catholic Other Jewish Total Christian Walle Aurich Aurich 779 33 812 Wallinghausen Aurich Aurich 408 17 425 Ostgroßefehn Lutheran Aurich Aurich, Oldendorf 1,968 33 1 28 7 2,037 Aurich-Oldendorf Lutheran Aurich Aurich-Oldendorf 754 4 1 4 1 764 Spetzerfehn Lutheran Aurich Aurich-Oldendorf, 988 1 989 Bagband, Strackholt Bagband -

Regionales Raumordnungsprogramm 2006 - Teil II –

Landkreis Leer, Regionales Raumordnungsprogramm 2006. Foreword. The Regional Spatial Planning Programme (Regionales Raumordnungsprogramm, RROP) is meant to "bring order to the area of the Landkreis Leer" and, alongside the state spatial planning programme (Landesraumordnungsprogramm), sets out goals and principles. In this way the differing demands on the use of land are to be coordinated with one another as far as possible. The Lower Saxony Spatial Planning Act (Niedersächsisches Raumordnungsgesetz) stipulates that planning should promote the sustainable development of the state and its parts, taking natural and other conditions into account, in the way that best serves both the community as a whole and the individual. In doing so, the "requirements for securing, protecting, maintaining and developing the natural foundations of life, as well as social, cultural and economic needs" must be taken into account. For the spatial planning programme for the Landkreis Leer to achieve this goal, extensive preparatory work and countless coordination meetings and consultations were necessary, above all with the towns and municipalities and with the agricultural sector. Throughout the drafting of the RROP, the district administration and the district council (Kreistag) were particularly concerned to reach the broadest possible agreement in advance with those especially affected. That this succeeded is shown by the broad political consensus in the Kreistag and by the wide acceptance of the RROP that was evident from the outset. It is a good basis for jointly advancing the development of the Landkreis Leer. Meeting today's structural-policy challenges requires a wide range of planning at very different levels. Weighing and decision-making processes are becoming ever more complex in view of steadily increasing procedural requirements.
The decisive prerequisite for the sustainability of individual measures, and thus for the continued positive development of the Landkreis, is to align them with a shared overall spatial vision. -

Annual Report of the Federal Government on the Status of German Unity in 2017 Publishing Details

Annual Report of the Federal Government on the Status of German Unity in 2017
Publishing details
Published by: Federal Ministry for Economic Affairs and Energy (Bundesministerium für Wirtschaft und Energie BMWi), Public Relations, 11019 Berlin, www.bmwi.de
The Federal Ministry for Economic Affairs and Energy has been given the audit approval berufundfamilie® for its family-friendly personnel policy. The certificate was issued by berufundfamilie gGmbH, an initiative of the Hertie Foundation.
Editorial staff: Federal Ministry for Economic Affairs and Energy, VII D Task Force: Issues of the New Federal States, Division VII D 1
Design and production: PRpetuum GmbH, Munich
Last updated: August 2017
Images: picture alliance/ZB/euroluftbild
This and other brochures can be obtained from: Federal Ministry for Economic Affairs and Energy, Public Relations Division, E-Mail: [email protected], www.bmwi.de
To order brochures by phone: Tel.: +49 30 182722721, Fax: +49 30 18102722721
This brochure is published as part of the public relations work of the Federal Ministry for Economic Affairs and Energy. It is distributed free of charge and is not intended for sale. Distribution at election events and party information stands is prohibited, as is the inclusion in, printing on or attachment to information or promotional material.
Content Part A -

Linie 623, WEB, Leer-Augustfehn

Q 623 Y Leer – Augustfehn | Verkehrsverbund Ems-Jade (VEJ), MoBi-Zentrale Leer: 0491/92536-0 Weser-Ems-Bus, Aurich branch Contact: [email protected], www.weser-ems-bus.de On 24 and 31 December the Saturday timetable applies; please note the service restrictions. Monday - Friday 2623 2623 2623 2623 2623 2623 2623 2623 2623 004 006 008 014 060 016 062 026 018 Service restrictions S S S S S S S F S Notes 99 ELT ELT ELT ELT Leer, ZOB 4 .740 11. 30 12. 30 13. 18 13. 30 15. 10 Leer, Friesenstr./Augustenstr. .741 11. 31 12. 31 13. 20 13. 31 15. 11 Leer, Hajo-Unken-Straße 13.25 Leer, Friesenstr./LZB .742 11. 32 12. 32 13.32 15. 12 Leer, Heisfstr. Apotheke .743 11. 33 12. 33 13. 27 13. 33 15. 13 Leer, B436/Hauptstraße .746 11. 36 12. 36 13. 30 13. 35 15. 16 Leer-Loga, Eichendorffstraße .747 11. 37 12. 37 13. 32 13. 36 15. 17 Leer-Loga, Staklies .748 11. 38 12. 38 13. 34 13. 37 15. 18 Leer-Loga, Leding .748 11. 38 12. 38 13. 35 13. 38 15. 18 Leer-Logabirum, Goldener Stern .749 11. 39 12. 39 13. 36 13. 39 15. 19 Maiburg .750 11. 40 12. 40 13. 37 13. 40 15. 20 Nortmoor, Bruntjer Weg .751 11. 41 12. 41 13. 39 13. 41 15. 21 Nortmoor, Alter Bahnhof .753 11. 43 12. 43 13. 41 13. 43 15. 23 Nortmoor, Mitte .755 11. 45 12. 45 13. 43 13. 44 15. -

Master's Degree: Applied Life Sciences • Hochschule Emden/Leer - University of Applied Sciences • Emden

Master's degree: Applied Life Sciences
Hochschule Emden/Leer - University of Applied Sciences • Emden
Overview
Degree: Master of Engineering
Teaching language: English
Languages: The courses are held in English and/or German. It is possible to meet all requirements in English only, but the choice of elective modules is limited with English alone.
Programme duration: 3 semesters
Beginning: Winter and summer semester
More information on beginning of studies: Winter semester: September to February. Summer semester: March to August.
Application deadline: Winter semester: non-EU applicants 30 May, EU applicants 15 July. Summer semester: non-EU applicants 30 November, EU applicants 15 January.
Tuition fees per semester in EUR: None
Combined Master's degree / PhD programme: No
Joint degree / double degree programme: No
Description/content: In this course of studies, we will train you to become a qualified expert in the fields of analytics and (bio-)process engineering. The internationally recognised study programme lasts three semesters. At the end of the programme, you will be awarded a Master of Engineering (MEng). Master of Engineering degrees in these fields are in great demand in the job market. Because these degrees are internationally recognised, they open access to higher civil service and entitle the holder to pursue a doctorate. Prospective students who have knowledge of mechanical engineering, computer science, or electrical -

The Gas Compressor Station Bunde

THE GAS COMPRESSOR STATION BUNDE NATURAL GAS FOR EUROPE On the path to climate-neutral supply with renewable energies, in other words, solar, wind and water, gas plays an important supporting role in Europe, since it acts as a bridge, scoring points with its large reserves, low emissions and secure transport routes. And GASCADE guarantees the latter: We make sure that gas within Germany’s borders reliably reaches its respective destinations. After all, while both industrial and private demand for gas is going up, the production volume within Europe is going down. That’s why gas in our pipeline network moves from the major sources in Russia and Northwest Europe both to consumers in Germany and its neighboring countries of Belgium, France, the Netherlands, Poland and the Czech Republic, and on to Southeastern Europe. PRESSURIZING GAS From the source to where it’s used, natural gas travels many thousands of kilometers in pipelines measuring up to 1.4 meters in diameter. During this journey it loses pressure as the molecules rub against each other and the inside of the pipe. To keep the density and hence the transport speed of the gas constant, it is compressed in natural gas compressors. These are the core of the eleven GASCADE compressor stations that are spaced at around 250 kilometers apart in the pipeline network. What happens in the compressor? Several impellers are securely arranged behind each other on a rotating, cylindrical shaft in a steel casing and rotate at a speed of up to 3,600 and 10,300 revolutions per minute. -

Tortured, Tolerated, Respected

theological questions, the order in the church and the lifestyle of members of the congregation. Within a few years different movements developed; these were named after those regions where their adherents originally came from. Like the liberal-moderate “Waterlanders” or the more conservative-restrictive “Flemish” and “Vriesen”. These deeply religious Flemish and “Vriesen” strove for a simple life, separated from the outside world, in a closed religious community. Marriage between members of the different groups

EASTBOUND MIGRATION - THE HISTORY OF MENNONITES IN POLAND AND RUSSIA Owing to the ongoing persecution in the Netherlands many Mennonites fled in the 16th century to West-Prussia. In 1569 they established the first Mennonite church at the mouth of the Vistula. This area is very swampy. Dutch farmers, willing to exploit this barren land, were welcome – even if they were Mennonites. Many Dutch merchants and artisans settled in the cities, especially

after their elder Ammann) communities came into being. Due to the ongoing persecution, the “Amish” emigrated to Pennsylvania. The Amish communities which had stayed in Europe gradually fell apart and mostly joined the Mennonite congregations. In the German-speaking territories the last Amish community in Ixheim, (now Rhineland-Palatinate) united with the Mennonites in 1937. The way of life of the Amish People is to this day determined by a separation from the non Amish.

WW II this arrangement remained in force. While in the WW I a third of the people concerned still made use of it, in the WW II it no longer played a role.

EXHIBITION INFORMATION ENGLISH Text: Matthias Pausch, M.A. Translation: Bodo von Preyss -

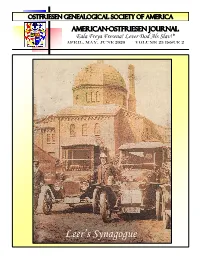

Leer's Synagogue

OSTFRIESEN GENEALOGICAL SOCIETY OF AMERICA AMERICAN-OSTFRIESEN journal Eala Freya Fresena! Lever Dod Als Slav!* April, May, June 2020 Volume 23 issue 2 Leer’s Synagogue

AMERICAN–Ostfriesen journal Ostfriesen Genealogical Society of America Eala Freya Fresena! Lever Dod Als Slav! Volume 23 Issue 2 April-June, 2020 The journal of the Ostfriesen Genealogical Society of America is published four times a year. Please write: Lin Strong, Editor, OGSA Newsletter, 15695-368th Street, Almelund, MN 55012 or email - [email protected] with comments or suggestions. We are happy to consider any contributions of genealogical information. Whether we can use your material is based on such factors as general interest to our members, our need to cover certain subjects, balance through the year and available space. The editor reserves the right to edit all submitted materials for presentation and grammar. The editor will correct errors and may need to determine length of copy.

OGSA MEMBERSHIP MEMBER PRIVILEGES include four issues of the American-Ostfriesen Journal (January, April, July, October), four program meetings each year and one special event, special member order discounts, and access to the OGSA library. OGSA 2020 MEMBERSHIP—Send your check for $22 (to download from our website or have sent as a pdf attachment to an email) or $32 for paper copies payable to OGSA, P.O. Box 50919, Mendota Heights, MN 55150. Note that if you purchase a paper copy, you can also have the codes for the online color copy! Just ask! Foreign membership is $22 if downloaded or sent by pdf file. You can deposit your membership at Sparkasse in Emden, Ger- -

NSA/OF/Ports (Aug.).Pages

Niedersachsen/Bremen/Hamburg/Ostfriesland Resources Introduction to Lower Saxony, Bremen & Hamburg Wikipedia states in regard to the regions of this modern German Bundesland: “Lower Saxony has clear regional divisions that manifest themselves both geographically as well as historically and culturally. In the regions that used to be independent, especially the heartlands of the former states of Brunswick, Hanover, Oldenburg and Schaumburg-Lippe, there is a marked local regional awareness. By contrast, the areas surrounding the Hanseatic cities of Bremen and Hamburg are much more oriented towards those centres.” A number of the Map Guides to German Parish Registers will need to be used to find your town if you are studying this region, among them numbers 4 (Oldenburg), 10 (Hessen-Nassau), 27 (Brunswick), 30-32 (Hannover), 39 (Westphalia & Schaumburg-Lippe). Online (a sampling) Niedersächsische Landesarchiv — http://aidaonline.niedersachsen.de Oldenburg emigrants — http://www.auswanderer-oldenburg.de Ahnenforschung.org “Regional Research” — http://forum.genealogy.net Hamburg Gen. Soc. — http://www.genealogy.net/vereine/GGHH/ Osnabrück Genealogical Society (German) — http://www.osfa.de Bremen’s “Mouse” Gen. Soc. (German) — http://www.die-maus-bremen.de/index.php Mailing Lists (for all German regions, plus German-speaking areas in Europe) -- http://list.genealogy.net/mm/listinfo/ Periodicals IGS/German-American Genealogy: “Niedersachsen Research,” by Eliz. Sharp (1990) “Niedersächsische Auswanderer in den U.S.A.” (Spr’98) “Researching Church -

Master's Degree: Maritime Operations • Hochschule Emden/Leer - University of Applied Sciences • Leer (Ostfriesland)

Master's degree: Maritime Operations
Hochschule Emden/Leer - University of Applied Sciences • Leer (Ostfriesland)
Overview
Degree: Master of Science, joint degree
In cooperation with: Western Norway University of Applied Sciences
Teaching language: English
Languages: English
Programme duration: 4 semesters
Beginning: Winter semester
Application deadline: Non-EU applicants: 15 October - 1 December. EU, EEC and Nordic applicants: 1 March - 15 April.
Tuition fees per semester in EUR: None
Combined Master's degree / PhD programme: No
Joint degree / double degree programme: Yes
Description/content: The priorities in the first semester are scientific work as well as a deeper introduction into international maritime processes. Cultural aspects, communication, safety, and organisational learning are reviewed in detail. The access to maritime technology is achieved by a deeper understanding of stability of floating devices. This is a key for safe operations. In the second semester, the ability to analyse will be promoted by examples of complex projects. With the help of analysis models, a variety of simulations are constructed. These are evaluated commercially, logistically, and from the perspective of technology, quality, and risk. Moreover, optimising methods are presented and applied to simulation models. Here managerial aspects are always considered, and knowledge is imparted in a project-oriented way. In the third semester, the student can expand his/her knowledge, skills, and competencies while assisting in ongoing research projects. In this context, the Faculty of Maritime Studies in Leer offers the profile Sustainable Maritime Operations, and the Stord/Haugesund University College has specialised in Offshore and Subsea Operations.