Daniel Gómez Marín

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


List of Newly Incorporated / Registered Companies and Companies which have changed Names

This is the text version of a report with Reference Number "RNC063", entitled "List of Newly Incorporated / Registered Companies and Companies which have changed Names". The report was created on 31-08-2015 and covers a total of 2879 related records from 24-08-2015 to 30-08-2015. Each record in this report is presented in a single row with 6 data fields, each separated by a tab. The order of the 6 data fields is "Sequence Number", "Current Company Name in English", "Current Company Name in Chinese", "C.R. Number", "Date of Incorporation / Registration (D-M-Y)" and "Date of Change of Name (D-M-Y)". Below are the details of records in this report.

1. (D & A) Development Limited 2280098 28-08-2015
2. 100 Business Limited 100 分顧問有限公司 2278033 24-08-2015
3. 13 GLOBAL GROUP LIMITED 壹三環球集團有限公司 2279402 26-08-2015
4. 262 Island Trading Group Limited 2278529 24-08-2015
5. 3 Mentors Limited 2278378 24-08-2015
6. 3a Network Limited 三俠網絡有限公司 2278169 24-08-2015
7. 3D Systems Hong Kong Co., Limited 1016514 26-08-2015
8. 716 Studio Company Limited 716 工作室有限公司 2280037 28-08-2015
9. 80 INNOVATE TECHNOLOGY LIMITED 百翎創新科技有限公司 2278139 24-08-2015
10. -

A Naturalistic Study of the History of Mormon Quilts and Their Influence on Today's Quilters

Brigham Young University, BYU ScholarsArchive, Theses and Dissertations, 1996. A Naturalistic Study of the History of Mormon Quilts and Their Influence on Today's Quilters. Helen-Louise Hancey, Brigham Young University - Provo. Follow this and additional works at: https://scholarsarchive.byu.edu/etd Part of the Art and Design Commons, Art Practice Commons, History Commons, and the Mormon Studies Commons. BYU ScholarsArchive Citation: Hancey, Helen-Louise, "A Naturalistic Study of the History of Mormon Quilts and Their Influence on Today's Quilters" (1996). Theses and Dissertations. 4748. https://scholarsarchive.byu.edu/etd/4748 This Thesis is brought to you for free and open access by BYU ScholarsArchive. It has been accepted for inclusion in Theses and Dissertations by an authorized administrator of BYU ScholarsArchive. For more information, please contact [email protected], [email protected]. A Naturalistic Study of the History of Mormon Quilts and Their Influence on Today's Quilters. A thesis presented to the Department of Family Sciences, Brigham Young University, in partial fulfillment of the requirements for the degree Master of Science. Helen Louise Hancey, 1996. By Helen Louise Hancey, December 1996. This thesis by Helen Louise Hancey is accepted in its present form by the Department of Family Sciences of Brigham Young University as satisfying the thesis requirement for the degree of Master of Science. Maxine Lewis Rowley, Committee Chairman. William A. Wilson, Committee Member. -

Aaron Spectre «Lost Tracks» (Ad Noiseam, 2007) | Reviews on Arrhythmia Sound

2/22/2018 Aaron Spectre «Lost Tracks» (Ad Noiseam, 2007) | Reviews on Arrhythmia Sound. Submitted by: Quarck | 19.04.2008. Aaron Spectre, also known for the project Drumcorps, reveals a completely new side of his work. In contrast to his frenzied breakgrindcore, Spectre delivers a chic downtempo, IDM album. Lost Tracks is the result of six years of work, but despite this it sounds very fresh and original. The sound is minimalistic, without excesses, but at the same time the melodies are very beautiful and the beat is powerful, clear and pronounced. I just want to highlight the composition Break Ya Neck (Remix), a bit mystical and mysterious, with a feeling like wandering somewhere in the dusk. There is room for vocals too: the track Degrees features the gentle voice of Kazumi (Pink Lilies). As a result, Lost Tracks can safely be put on a par with the works of such mastodons as Lusine, Murcof and Hecq. Tags: downtempo, idm. http://www.arrhythmiasound.com/en/review/aaron-spectre-lost-tracks-ad-noiseam-2007.html

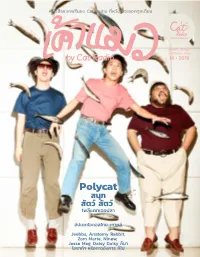

By Cat Radio 16 • 2019

Cat Radio's free magazine, which hopes to come out every month. Free, not many copies, so hold on to it ...meow. By Cat Radio 16 • 2019.

Polycat: Fun, Animals, Animals. Polycat meets fish. Thai-Korean idol update: Jeebbs, Anatomy Rabbit, Zom Marie, Ninew, Jesse Meg and Daisy Daisy are here too. Osaka or Mars, we go there as well.

Undercover: investigating from the cover, by _punchspmngknlp. Our granny editor proposed this issue's cover while everyone was busy preparing for Cat Tshirt 6, then seized the opportunity and simply went ahead with it, so there was no moment for discussion or approval from anyone. Do not ask about legitimacy, such as why the เค้าแมว (Owl) cover is back to male artists yet again. But as long as there is life, we struggle on. We have already proposed an all-star idol cover in Santa outfits for the Christmas season. We promise we will fight for it; cheer us on. But if it does not happen, it does not happen. The end. Love you (O,O)

CAT RADIO Intro, by DJ Shift. We have entered the final stretch of B.E. 2562 (2019). From an observer's point of view, it has been a heartening year for the many artists who held a big concert or a first concert, played on international stages and music festivals, or released work that fans supported, bought and took pride in along with them. It can be likened to a reward or encouragement for working hard and devoting themselves to what they love.

So we invited two bands that had just held their first big concerts onto the same เค้าแมว cover. Although they differ in experience, musical style and label, we think both must have been happy spending time with their fans at their own full-scale concerts, as well as doing many things that were great fun; put casually, fun as hell. So we brought in animals to be photographed on the cover with them, under the concept "Fun, Animals, Animals". But as you may have heard, working with animals is not easy, so the resulting pictures include both real and not-so-real animals. In all honesty, though, we enjoyed working with both bands, and we hope the conversations that appear will -

The DIY Careers of Techno and Drum 'N' Bass DJs in Vienna

Cross-Dressing to Backbeats: The Status of the Electroclash Producer and the Politics of Electronic Music. Feature Article. David Madden, Concordia University (Canada). Abstract: Addressing the international emergence of electroclash at the turn of the millennium, this article investigates the distinct character of the genre and its related production practices, both in and out of the studio. Electroclash combines the extended pulsing sections of techno, house and other dance musics with the trashier energy of rock and new wave. The genre signals an attempt to reinvigorate dance music with a sense of sexuality, personality and irony. Electroclash also emphasizes, rather than hides, the European, trashy elements of electronic dance music. The coming together of rock and electro is examined vis-à-vis the ongoing changing sociality of music production/distribution and the changing role of the producer. Numerous women, whether as solo producers, or in the context of collaborative groups, significantly contributed to shaping the aesthetics and production practices of electroclash, an anomaly in the history of popular music and electronic music, where the role of the producer has typically been associated with men. These changes are discussed in relation to the way electroclash producers Peaches, Le Tigre, Chicks on Speed, and Miss Kittin and the Hacker often used a hybrid approach to production that involves the integration of new(er) technologies, such as laptops containing various audio production softwares with older, inexpensive keyboards, microphones, samplers and drum machines to achieve the ironic backbeat laden hybrid electro-rock sound. Keywords: electroclash; music producers; studio production; gender; electro; electronic dance music. Dancecult: Journal of Electronic Dance Music Culture 4(2): 27–47 ISSN 1947-5403 ©2011 Dancecult http://dj.dancecult.net DOI: 10.12801/1947-5403.2012.04.02.02 David Madden is a PhD Candidate (A.B.D.) in Communications at Concordia University (Montreal, QC). -

TheHARD DATA Spring 2015 A.D.

Price: FREE! Also free from fakes, flakes, and snakes. TheHARD DATA, Spring 2015 A.D. Watch out EDM, L.A. HARDCORE is back! Track Reviews, Event Calendar, Knowledge Bombs, Bombast and More! Hardstyle, Breakcore, Shamancore, Speedcore, & other HARD EDM Info inside! http://theharddata.com

Contents: Editorial; Watch Out EDM, L.A. Hardcore is Back!; DigiTrack Reviews; Photo Credits; Event Calendar; THD Distributors

EDITORIAL

Welcome to the first issue of The Hard Data! Why did we decide to print something in this day and age? Well… because it's hard! You can hold it in your freaking hand for kick drum's sake! There's just something about a 'zine that I always liked, and always will. It captures a point in time. This little 'zine you hold in your hands is a map to our future, and one day will be a record of our past. Also, it calls attention to an important question of our age: Should we adapt to technology or should technology adapt to us? Here, we're using technology to achieve a fun little 'zine you can fold back the page, kick back and chill with. For a myriad of reasons, periodicals about hardcore techno have been sporadic at best, despite their success (go figure that!) This has led to a real dearth of info for fans and the loss of a

The Hard Data, Volume 1, issue 1. Publisher, Editor, Layout: Joel Bevacqua a.k.a. DJ Deadly Buda. Copy Editing: Colby X. Newton. Writers: Joel Bevacqua, Colby X. -

08.14 | the Burg | 1 Community Publishers

08.14 | The Burg | 1

Community Publishers

As members of Harrisburg's business community, we are proud to support TheBurg, a free publication dedicated to telling the stories of the people of greater Harrisburg. Whether you love TheBurg for its distinctive design, its in-depth reporting or its thoughtful features about the businesses and residents who call our area home, you know the value of having responsible, community-centered coverage. We're thrilled to help provide greater Harrisburg with the local publication it deserves.

Realty Associates, Inc.; Wendell Hoover; Ray Davis

Contents

General and Letters
News: News Digest; Chuckle Burg; City View; State Street
In the Burg: From the Ground Up; Milestones
Business: Face of Business; Shop Window

2601 N. Front St., Suite 101 • Harrisburg, PA 17101, www.theburgnews.com
Editorial: 717.695.2576
Ad Sales: 717.695.2621
Publisher: J. Alex Hartzler [email protected]
Editor-in-Chief: Lawrance Binda [email protected]
Sales Director: Lauren Mills [email protected]
Senior Writer: Paul Barker [email protected]
Account Executive: Andrea Black [email protected]
Contributors: Tara Leo Auchey, Today's the Day Harrisburg [email protected]
Cover art by: Kristin Kest, www.kestillustration.com, "The Old Farmer's Almanac 2015 Garden Calendar, Yankee Publishing Co."

Letter from the Editor

“Nobody on the road/Nobody on the beach” More than once, I've thought of those lyrics from Don Henley's 30-year-old song, “Boys of Summer,” after a stroll down 2nd Street or along the riverfront on a hot August day. It seems that everyone has left for the summer: to the shore, the mountains, abroad. -

Free Music and Trash Culture: the Reconfiguration of Musical Value Online

University of Wollongong Research Online Faculty of Arts - Papers (Archive) Faculty of Arts, Social Sciences & Humanities 1-1-2010 Free music and trash culture: The reconfiguration of musical value online Andrew M. Whelan University of Wollongong, [email protected] Follow this and additional works at: https://ro.uow.edu.au/artspapers Part of the Arts and Humanities Commons, and the Social and Behavioral Sciences Commons Recommended Citation Whelan, Andrew M., Free music and trash culture: The reconfiguration of musical value online 2010, 67-71. https://ro.uow.edu.au/artspapers/234 Research Online is the open access institutional repository for the University of Wollongong. For further information contact the UOW Library: [email protected] Free Music and Trash Culture: The Reconfiguration of Musical Value Online Andrew Whelan, University of Wollongong The unprecedented quantity of music now freely available online appears to have peculiarly contradictory consequences for the ‘value’ of music. Music appears to lose value insofar as it is potentially infinitely reproducible at little cost: claims have been made that music thereby loses economic value; and perhaps affective value also. In the first case, music is not worth what it once was because copyright owners are no longer being paid for it. In losing income (the argument goes), less money will be invested in future music production: music therefore becomes less ‘good’ (culturally and aesthetically valuable). In the second case, it is suggested that online superabundance renders music worth less in that personal engagements with it are transient, frivolous, and ad hoc: nobody has time to listen to all that music, and the surfeit implies that musical value thereby depreciates. -

Marmalade at the Jam Factory

Marmalade at the Jam Factory, Droylsden. A collection of 2, 3 and 4 bedroom homes.

A reputation built on solid foundations

Bellway has been building exceptional quality new homes throughout the UK for over 75 years, creating outstanding properties in desirable locations.

During this time, Bellway has earned a strong reputation for high standards of design, build quality and customer service. From the location of the site, to the design of the home, to the materials selected, we ensure that our impeccable attention to detail is at the forefront of our build process.

We create developments which foster strong communities and integrate seamlessly with the local area. Each year, Bellway commits to supporting education initiatives, providing transport and highways improvements, healthcare facilities and preserving - as well as creating - open spaces for everyone to enjoy.

Our high standards are reflected in our dedication to customer service and we believe that the process of buying and owning a Bellway home is a pleasurable and straightforward one. Having the knowledge, support and advice from a committed Bellway team member will ensure your home-buying experience is seamless and rewarding, at every step of the way.

Bellway abides by The Consumer Code, which is an independent industry code developed to make the home buying process fairer and more transparent for purchasers.

Over 75 years of housebuilding expertise and innovation distilled into our flagship range of new homes.

Artisan traditions sit at the heart of Bellway, who for more than 75 years have been constructing homes and building communities. refreshed and improved internal specification carefully marries design with practicality, -

“Knowing Is Seeing”: the Digital Audio Workstation and the Visualization of Sound

“Knowing Is Seeing”: The Digital Audio Workstation and the Visualization of Sound. Ian Macchiusi. A dissertation submitted to the Faculty of Graduate Studies in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Graduate Program in Music, York University, Toronto, Ontario. September 2017. © Ian Macchiusi, 2017

Abstract

The computer’s visual representation of sound has revolutionized the creation of music through the interface of the Digital Audio Workstation software (DAW). With the rise of DAW-based composition in popular music styles, many artists’ sole experience of musical creation is through the computer screen. I assert that the particular sonic visualizations of the DAW propagate certain assumptions about music, influencing aesthetics and adding new visually-based parameters to the creative process. I believe many of these new parameters are greatly indebted to the visual structures, interactional dictates and standardizations (such as the office metaphor depicted by operating systems such as Apple’s OS and Microsoft’s Windows) of the Graphical User Interface (GUI). Whether manipulating text, video or audio, a user’s interaction with the GUI is usually structured in the same manner: clicking on windows, icons and menus with a mouse-driven cursor. Focussing on the dialogs from the Reddit communities of Making hip-hop and EDM production, DAW user manuals, as well as interface design guidebooks, this dissertation will address the ways these visualizations and methods of working affect the workflow, composition style and musical conceptions of DAW-based producers.

Dedication

To Ba, Dadas and Mary, for all your love and support.

Table of Contents

Abstract -

Dedicated To Serving The Needs Of The Music & Record Industry

Dedicated To Serving The Needs Of The Music & Record Industry. August 23, 1969. 60c.

In the opinion of the editors, this week the following records are the

SINGLE PICKS OF THE WEEK

The Isley Brothers do more of their commercial thing on "Black Berries Part I" (Triple 3, BMI) and will pick the coin well (T Neck 906).

Dorothy Morrison, who led the shouting on "Oh Happy Day," steps out on her own with "All God's Children Got Soul" (East-Memphis, BMI) (Elektra 45671).

Archie Bell and the Drells will watch "My Balloon's Going Up" (Assorted, BMI) go right up the chart. A dancing entry for the fans (Atlantic 2663).

Ray Charles should stride back to chart heights with Jimmy Lewis' "We Can Make It" (Tangerine -blew, BMI), a Tangerine production (ABC 11239).

... OF THE WEEK

The Hardy Boys, who will be supplying the singing for TV's animated Hardy Boys, sing "Love and Let Love" (Fox Fanfare, BMI) (RCA 74-0228).

The Glass House is the first group from Holland-Dozier-Holland's Invictus label. "Crumbs Off the Table" (Gold Forever, BMI)

Up 'n Adam found that it was time to get it together. They do with "Time to Get It Together" (Peanut Butter, BMI), a together hit

Wind, a new group with a ... Believe" (Peanut Butter, BMI), and they'll make it way up the list on the new -

Concrete: Art Design Architecture Education Resource Contents

CONCRETE: ART DESIGN ARCHITECTURE
EDUCATION RESOURCE

CONTENTS

1 BACKGROUND BRIEFING
1.1 ABOUT THIS EXHIBITION
1.2 CONCRETE: A QUICK HISTORY
1.3 WHY I LIKE CONCRETE: EXTRACTS FROM THE CATALOGUE ESSAYS
1.4 GENERAL GLOSSARY OF CONCRETE TERMS AND TECHNOLOGIES

2 FOR TEACHERS
2.1 THIS EDUCATION RESOURCE
2.2 VISITING THE EXHIBITION WITH STUDENTS

3 FOR STUDENTS
GETTING STARTED: THE WHOLE EXHIBITION
ACTIVITIES FOR STUDENTS’ CONSIDERATION OF THE EXHIBITION AS A WHOLE

4 THEMES FOR EXPLORING THE EXHIBITION
THEME 1. ART: PERSONAL IDENTITY: 3 ARTISTS: ABDULLAH, COPE, RICHARDSON
THEME 2. DESIGN: FUNKY FORMS: 3 DESIGNERS: CHEB, CONVIC, GOODRUM
THEME 3. ARCHITECTURE: OASES OF FAITH: 3 ARCHITECTS: MURCUTT, BALDASSO CORTESE, CANDALEPAS
OTHER PERSPECTIVES: VIEWS BY COMMENTATORS FOLLOW EACH CONTRIBUTOR
QUESTIONS, FURTHER RESEARCH AND A GLOSSARY FOLLOW ART AND DESIGN CONTRIBUTORS
A COMMON ARCHITECTURE GLOSSARY FOLLOWS ARCHITECTURE: OASES OF FAITH

5 EXTENDED RESEARCH
LINKS AND SOURCES
IS CONCRETE SUSTAINABLE?

6 CONSIDERING DESIGN
6.1 JAMFACTORY: WHAT IS IT?
6.2 DESIGN: MAKING A MARK
6.3 EXTENDED RESEARCH: DESIGN RESOURCES

6 ACKNOWLEDGEMENTS

Cover: Candalepas Associates, Punchbowl Mosque, 2018. “Muqarnas” corner junction. Photo: Rory Gardiner
Left: Candalepas Associates, Punchbowl Mosque, 2018. Concrete ring to timber dome and oculus. Photo: Rory Gardiner

SECTION 1 BACKGROUND BRIEFING

1.1 About this exhibition

CONCRETE: ART DESIGN ARCHITECTURE presents 21 exciting concrete projects ranging from jewellery to skateparks, hotel furniture, public sculptures, mosques and commemorative paving plaques. All 21 artists, designers and architects were selected for their innovative technical skills and creative talents. These works show how they have explored concrete’s versatility by pushing its technical boundaries to achieve groundbreaking buildings, artworks and design outcomes.