“Knowing Is Seeing”: the Digital Audio Workstation and the Visualization of Sound

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

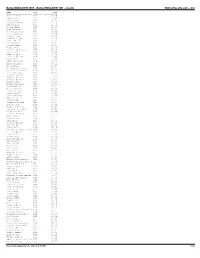

Chart: Top25 VIDEO HOUSE

Chart: Top25_VIDEO_HOUSE. Report Date (TW): 2012-06-17. Previous Report Date (LW): 2012-06-10.

| TW | LW | TITLE | ARTIST | GENRE | RECORD LABEL |
|---|---|---|---|---|---|
| 1 | 2 | Titanium | David Guetta Ft. Sia | House | EMI |
| 2 | 1 | Lick It | Kaskade And Skrillex | House | Ultra Records |
| 3 | 4 | I Dont Like You | Eva Simons | House | Interscope Records |
| 4 | 5 | Eternity | Paul Van Dyk | House | Ultra Records |
| 5 | 6 | Shuri Shuri (lets Get Loco) | Dj Denis Vs. R3hab Ft. Juan Magan, Lil Jon baby Bash | House | Blanco y Negro Records |
| 6 | 7 | Wow | Inna | House | Ultra Records |
| 7 | 12 | Chinatown | Alex Gaudino | House | Magnificent Rec. |
| 8 | 14 | Naughty Girl | Ida Corr | House | Lifted House |
| 9 | 8 | Cudi The Kid | Steve Aoki Ft. Travis Barker | House | Ultra Records |
| 10 | 10 | I Wanna Feel It_2 (lyrics) | Anayya Von Kitten- | House | Reign Entertainment |
| 11 | 13 | Que Pasa | Gabry Ponte,djs From Mars,bellani & Spada | House | Exit 8 Media Grp. |
| 12 | 15 | Wind Me Up | Liz Primo | House | 444 Records |
| 13 | 16 | Work That Ass (video Mix) | Dj Enrie | House | MSD Entertainment |
| 14 | 3 | Eyes Wide Open Ft. Kate Elsworth | Dirty South Thomas Gold | House | Axtone Records |
| 15 | 9 | Wicked Game | Dj Lia Ft Nita | House | Kontor Records |
| 16 | 11 | Falling Out Of Love | Edx Ft. Sarah Mcleod | House | Toolroom Records |
| 17 | | You Should Know Ft. Donaeo | Jack Beats | House | Deconstruction / Columbia Records |
| 18 | | 30 Days | The Saturdays | House | Polydor Records |
| 19 | 19 | Cudi The Kid | Steve Aoki Ft Kid Cudi And Travis Barker | House | Dim Mak Records |
| 20 | 18 | The Way We See The World | Afrojack, Dimitri Vegas, Like Mike And Nervo | House | Spinnin Records |
| 21 | 17 | Wanna Dance | Joan Reyes Ft. | | |

Learning to Build Natural Audio Production Interfaces

arts Article Learning to Build Natural Audio Production Interfaces Bryan Pardo 1,*, Mark Cartwright 2 , Prem Seetharaman 1 and Bongjun Kim 1 1 Department of Computer Science, McCormick School of Engineering, Northwestern University, Evanston, IL 60208, USA 2 Department of Music and Performing Arts Professions, Steinhardt School of Culture, Education, and Human Development, New York University, New York, NY 10003, USA * Correspondence: [email protected] Received: 11 July 2019; Accepted: 20 August 2019; Published: 29 August 2019 Abstract: Improving audio production tools provides a great opportunity for meaningful enhancement of creative activities due to the disconnect between existing tools and the conceptual frameworks within which many people work. In our work, we focus on bridging the gap between the intentions of both amateur and professional musicians and the audio manipulation tools available through software. Rather than force nonintuitive interactions, or remove control altogether, we reframe the controls to work within the interaction paradigms identified by research done on how audio engineers and musicians communicate auditory concepts to each other: evaluative feedback, natural language, vocal imitation, and exploration. In this article, we provide an overview of our research on building audio production tools, such as mixers and equalizers, to support these kinds of interactions. We describe the learning algorithms, design approaches, and software that support these interaction paradigms in the context of music and audio production. We also discuss the strengths and weaknesses of the interaction approach we describe in comparison with existing control paradigms. Keywords: music; audio; creativity support; machine learning; human computer interaction 1. Introduction In recent years, the roles of producer, engineer, composer, and performer have merged for many forms of music (Moorefield 2010). -

Course Syllabus Live-Performance Disc Jockey Techniques 3 Credits

Course Syllabus Live-Performance Disc Jockey Techniques 3 Credits MUC135, Section 33575 Scottsdale Community College, Department of Music Fall 2015 (September 1 to December 18, 2015) Tuesday Evenings 6:30 PM - 9:10 PM (Room MB-136) Instructor: Rob Wegner, B.S., M.A. ([email protected] or [email protected]); Cell: 480-695-6270 Office Hours: Mondays 6:00 - 7:30 PM (Room MB-139) Recommended Text: How to DJ Right: The Art and Science of Playing Records by Bill Brewster and Frank Broughton (Grove Press, 2003). ISBN: 0-8021-3995-7 Groove Music: The Art and Culture of the Hip-Hop DJ by Mark Katz (Oxford University Press, 2012). ISBN-10: 0195331125 Recommended DVD: Scratch: A Film by Doug Prey by Doug Prey (Palm Pictures, 2001). Required Gear: Headphones (for labs) Additional Suggested Reading: Off the Record by Doug Shannon (1982), Pacesetter Publishing House. Last Night A DJ Saved My Life, The History of the Disc Jockey by Bill Brewster & Frank Broughton (2000), Grove Press. On The Record: The Scratch DJ Academy Guide by Phil White & Luke Crisell (2009), St. Martin’s Griffin. Looking for the Perfect Beat by Kurt B. Reighley (2000), Pocket Books. How to Be a DJ by Chuck Fresh (2001), Brevard Marketing. DJ Culture by Ulf Poschardt (1995), Quartet Books Limited. The Mobile DJ Handbook, 2nd Edition by Stacy Zemon (2003), Focal Press. Turntable Basics by Stephen Webber (2000), Berklee Press. Course Objectives Upon successful completion of this course, you should be able to: explain the historical innovations that led to the evolution of

Why Jazz Still Matters: Journal of the American Academy of Arts & Sciences

Dædalus, Journal of the American Academy of Arts & Sciences. Spring 2019. Why Jazz Still Matters. Gerald Early & Ingrid Monson, guest editors, with Farah Jasmine Griffin · Gabriel Solis · Christopher J. Wells · Kelsey A. K. Klotz · Judith Tick · Krin Gabbard · Carol A. Muller. “Why Jazz Still Matters”, Volume 148, Number 2; Spring 2019. Gerald Early & Ingrid Monson, Guest Editors; Phyllis S. Bendell, Managing Editor and Director of Publications; Peter Walton, Associate Editor; Heather M. Struntz, Assistant Editor. Committee on Studies and Publications: John Mark Hansen, Chair; Rosina Bierbaum, Johanna Drucker, Gerald Early, Carol Gluck, Linda Greenhouse, John Hildebrand, Philip Khoury, Arthur Kleinman, Sara Lawrence-Lightfoot, Alan I. Leshner, Rose McDermott, Michael S. McPherson, Frances McCall Rosenbluth, Scott D. Sagan, Nancy C. Andrews (ex officio), David W. Oxtoby (ex officio), Diane P. Wood (ex officio). Inside front cover: Pianist Geri Allen. Photograph by Arne Reimer, provided by Ora Harris. © by Ross Clayton Productions.

Contents

- 5 Why Jazz Still Matters, Gerald Early & Ingrid Monson
- 13 Following Geri’s Lead, Farah Jasmine Griffin
- 23 Soul, Afrofuturism & the Timeliness of Contemporary Jazz Fusions, Gabriel Solis
- 36 “You Can’t Dance to It”: Jazz Music and Its Choreographies of Listening, Christopher J. Wells
- 52 Dave Brubeck’s Southern Strategy, Kelsey A. K. Klotz
- 67 Keith Jarrett, Miscegenation & the Rise of the European Sensibility in Jazz in the 1970s, Gerald Early
- 83 Ella Fitzgerald & “I Can’t Stop Loving You,” Berlin 1968: Paying Homage to & Signifying on Soul Music, Judith Tick
- 92 La La Land Is a Hit, but Is It Good for Jazz? Krin Gabbard
- 104 Yusef Lateef’s Autophysiopsychic Quest, Ingrid Monson
- 115 Why Jazz? South Africa 2019, Carol A.

NEW RELEASE CDs 24/5/19

NEW RELEASE CDs 24/5/19 BLACK MOUNTAIN Destroyer [Jagjaguwar] £9.99 CATE LE BON Reward [Mexican Summer] £9.99 EARTH Full Upon Her Burning Lips £10.99 FLYING LOTUS Flamagra [Warp] £9.99 HAYDEN THORPE (ex-Wild Beasts) Diviner [Domino] £9.99 HONEYBLOOD In Plain Sight [Marathon] £9.99 MAVIS STAPLES We Get By [ANTI-] £10.99 PRIMAL SCREAM Maximum Rock 'N' Roll: The Singles [2CD] £12.99 SEBADOH Act Surprised £10.99 THE WATERBOYS Where The Action Is [Cooking Vinyl] £9.99 THE WATERBOYS Where The Action Is [Deluxe 2CD w/ Alternative Mixes on Cooking Vinyl] £12.99 AKA TRIO (Feat. Seckou Keita!) Joy £9.99 AMYL & THE SNIFFERS Amyl & The Sniffers [Rough Trade] £9.99 ANDREYA TRIANA Life In Colour £9.99 DADDY LONG LEGS Lowdown Ways [Yep Roc] £9.99 FIRE! ORCHESTRA Arrival £11.99 FLESHGOD APOCALYPSE Veleno [Ltd. CD + Blu-Ray on Nuclear Blast] £14.99 FU MANCHU Godzilla's / Eatin' Dust +4 £11.99 GIA MARGARET There's Always Glimmer £9.99 JOAN AS POLICE WOMAN Joanthology [3CD on PIAS] £11.99 JUSTIN TOWNES EARLE The Saint Of Lost Causes [New West] £9.99 LEE MOSES How Much Longer Must I Wait? Singles & Rarities 1965-1972 [Future Days] £14.99 MALCOLM MIDDLETON (ex-Arab Strap) Bananas [Triassic Tusk] £9.99 PETROL GIRLS Cut & Stitch [Hassle] £9.99 STRAY CATS 40 [Deluxe CD w/ Bonus Tracks + More on Mascot]£14.99 STRAY CATS 40 [Mascot] £11.99 THE DAMNED THINGS High Crimes [Nuclear Blast] FEAT MEMBERS OF ANTHRAX, FALL OUT BOY, ALKALINE TRIO & EVERY TIME I DIE! £10.99 THE GET UP KIDS Problems [Big Scary Monsters] £9.99 THE MYSTERY LIGHTS Too Much Tension! [Wick] £10.99 COMPILATIONS VARIOUS ARTISTS Lux And Ivys Good For Nothin' Tunes [2CD] £11.99 VARIOUS ARTISTS Max's Skansas City £9.99 VARIOUS ARTISTS Par Les Damné.E.S De La Terre (By The Wretched Of The Earth) £13.99 VARIOUS ARTISTS The Hip Walk - Jazz Undercurrents In 60s New York [BGP] £10.99 VARIOUS ARTISTS The World Needs Changing: Street Funk.. -

Digital Audio Basics

INTRODUCTION TO DIGITAL AUDIO This updated guide has been adapted from the full article at http://www.itrainonline.org to incorporate new hardware and software terms. Types of audio files The two most important parameters to think about when working with digital audio are sound quality and audio file size. Sound quality will be your major concern if you want to broadcast your programme on FM. The size of an audio file, in turn, affects your computer's performance: big audio files take up a lot of hard disc space and use a lot of processor power when played back. The two parameters are interdependent: the better the sound quality, the bigger the file. This was a big challenge for those who needed to produce small audio files but didn’t want to compromise on sound quality. That is why people and companies tried to create digital formats that would maintain the quality of the original audio while reducing its size. This brings us to the most common audio formats you will come across: wav and MP3. wav files Wav files are a proprietary Microsoft format and are probably the simplest of the common formats for storing audio samples. Unlike MP3 and other compressed formats, wavs store samples "in the raw", where no pre-processing is required other than formatting of the data. Because they store raw audio, their size can be many megabytes, much bigger than MP3. A wav file maintains the quality of the original, which means that if an interview is recorded in high sound quality, the wav file will also be high quality.
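The size difference between raw and compressed audio described above can be estimated with simple arithmetic. The following is only a rough sketch, not something taken from the guide: the function names, the CD-style defaults (44.1 kHz, 16-bit, stereo), the 44-byte header of a minimal PCM wav file, and the 128 kbit/s MP3 bitrate are all illustrative assumptions.

```python
def wav_size_bytes(duration_s, sample_rate=44100, bit_depth=16,
                   channels=2, header_bytes=44):
    """Estimate the size of an uncompressed PCM wav file.

    Assumes a minimal 44-byte header (hypothetical default) followed by
    raw samples: one value per channel per sample period.
    """
    bytes_per_sample = bit_depth // 8
    n_samples = int(duration_s * sample_rate)
    return header_bytes + n_samples * bytes_per_sample * channels


def mp3_size_bytes(duration_s, bitrate_kbps=128):
    """Estimate the size of a constant-bitrate MP3 (tags and headers ignored)."""
    return int(duration_s * bitrate_kbps * 1000 / 8)


if __name__ == "__main__":
    minute = 60
    wav = wav_size_bytes(minute)
    mp3 = mp3_size_bytes(minute)
    print(f"1 min wav: {wav / 1e6:.1f} MB, 1 min MP3: {mp3 / 1e6:.2f} MB")
    print(f"wav is roughly {wav / mp3:.0f}x larger")
```

Under these assumptions, one minute of stereo audio comes to about 10.6 MB as wav and just under 1 MB as MP3, which matches the guide's point that raw audio runs to many megabytes.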

Turntablism and Audio Art Study 2009

TURNTABLISM AND AUDIO ART STUDY 2009 May 2009 Radio Policy Broadcasting Directorate CRTC Catalogue No. BC92-71/2009E-PDF ISBN # 978-1-100-13186-3 Contents SUMMARY 1 HISTORY 1.1-Definition: Turntablism 1.2-A Brief History of DJ Mixing 1.3-Evolution to Turntablism 1.4-Definition: Audio Art 1.5-Continuum: Overlapping definitions for DJs, Turntablists, and Audio Artists 1.6-Popularity of Turntablism and Audio Art 2 BACKGROUND: Campus Radio Policy Reviews, 1999-2000 3 SURVEY 2008 3.1-Method 3.2-Results: Patterns/Trends 3.3-Examples: Pre-recorded music 3.4-Examples: Live performance 4 SCOPE OF THE PROBLEM 4.1-Difficulty with using MAPL System to determine Canadian status 4.2-Canadian Content Regulations and turntablism/audio art CONCLUSION SUMMARY Turntablism and audio art are becoming more common forms of expression on community and campus stations. Turntablism refers to the use of turntables as musical instruments, essentially to alter and manipulate the sound of recorded music. Audio art refers to the arrangement of excerpts of musical selections, fragments of recorded speech, and ‘found sounds’ in unusual and original ways. The following paper outlines past and current difficulties in regulating these newer genres of music. It reports on an examination of programs from 22 community and campus stations across Canada. Given the abstract, experimental, and diverse nature of these programs, it may be difficult to incorporate them into the CRTC’s current music categories and the current MAPL system for Canadian Content. Nonetheless, turntablism and audio art reflect the diversity of Canada’s artistic community.

Primary Music Previously Played

THE AUSTRALIAN SCHOOL BAND & ORCHESTRA FESTIVAL MUSIC PREVIOUSLY PLAYED HELD ANNUALLY THROUGH JULY, AUGUST & SEPTEMBER A non-competitive, inspirational and educational event Primary School Concert and Big Bands WARNING: Music Directors are advised that this is a guide only. You should consult the Festival conditions of entry for more specific information as to the level of music required in each event. The information on these lists was entered by participating bands and may contain errors. Some of the music listed here may be regarded as too easy or too difficult for the section in which it is listed. Playing music which is not suitable for the section you have entered may result in you being awarded a lower rating or potentially being ineligible for an award. For any further advice, please feel free to contact the General Manager of the Festival at [email protected], visit our website at www.asbof.org.au or contact one of the ASBOF Advisory Panel Members. www.asbof.org.au I [email protected] I PO Box 833 Kensington 1465 I M 0417 664 472 Lithgow Music Previously Played Title Composer Arranger Aust A L'Eglise Gabriel Pierne Kenneth Henderson A Night On Bald Mountain Modest Moussorgsky John Higgins Above the World Rob GRICE Abracadabra Frank Tichelli Accolade William Himes William Himes Aerostar Eric Osterling Air for Band Frank Erickson Ancient Dialogue Patrick J. Burns Ancient Voices Michael Sweeney Ancient Voices Angelic Celebrations Randall D. Standridge Astron (A New Horizon) David Shaffer Aussie Hoedown Ralph Hultgren

Bolderboulder 2005 - Bolderboulder 10K - Results Onlineraceresults.Com

BolderBOULDER 2005 - BolderBOULDER 10K - results (OnlineRaceResults.com)

| NAME | DIV | TIME |
|---|---|---|
| Michael Aish | M28 | 30:29 |
| Jesus Solis | M21 | 30:45 |
| Nelson Laux | M26 | 30:58 |
| Kristian Agnew | M32 | 31:10 |
| Art Seimers | M32 | 31:51 |
| Joshua Glaab | M22 | 31:56 |
| Paul DiGrappa | M24 | 32:14 |
| Aaron Carrizales | M27 | 32:23 |
| Greg Augspurger | M27 | 32:26 |
| Colby Wissel | M20 | 32:36 |
| Luke Garringer | M22 | 32:39 |
| John McGuire | M18 | 32:42 |
| Kris Gemmell | M27 | 32:44 |
| Jason Robbie | M28 | 32:47 |
| Jordan Jones | M23 | 32:51 |
| Carl David Kinney | M23 | 32:51 |
| Scott Goff | M28 | 32:55 |
| Adam Bergquist | M26 | 32:59 |
| trent r morrell | M35 | 33:02 |
| Peter Vail | M30 | 33:06 |
| JOHN HONERKAMP | M29 | 33:10 |
| Bucky Schafer | M23 | 33:12 |
| Jason Hill | M26 | 33:15 |
| Avi Bershof Kramer | M23 | 33:17 |
| Seth James DeMoor | M19 | 33:20 |
| Tate Behning | M23 | 33:22 |
| Brandon Jessop | M26 | 33:23 |
| Gregory Winter | M26 | 33:25 |
| Chester G Kurtz | M30 | 33:27 |
| Aaron Clark | M18 | 33:28 |
| Kevin Gallagher | M25 | 33:30 |
| Dan Ferguson | M23 | 33:34 |
| James Johnson | M36 | 33:38 |
| Drew Tonniges | M21 | 33:41 |
| Peter Remien | M25 | 33:45 |
| Lance Denning | M43 | 33:48 |
| Matt Hill | M24 | 33:51 |
| Jason Holt | M18 | 33:54 |
| David Liebowitz | M28 | 33:57 |
| John Peeters | M26 | 34:01 |
| Humberto Zelaya | M30 | 34:05 |
| Craig A. Greenslit | M35 | 34:08 |
| Galen Burrell | M25 | 34:09 |
| Darren De Reuck | M40 | 34:11 |
| Grant Scott | M22 | 34:12 |
| Mike Callor | M26 | 34:14 |
| Ryan Price | M27 | 34:15 |
| Cameron Widoff | M35 | 34:16 |
| John Tribbia | M23 | 34:18 |
| Rob Gilbert | M39 | 34:19 |
| Matthew Douglas Kascak | M24 | 34:21 |
| J.D. | | |

Rhythm, Dance, and Resistance in the New Orleans Second Line

UNIVERSITY OF CALIFORNIA Los Angeles “We Made It Through That Water”: Rhythm, Dance, and Resistance in the New Orleans Second Line A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Ethnomusicology by Benjamin Grant Doleac 2018 © Copyright by Benjamin Grant Doleac 2018 ABSTRACT OF THE DISSERTATION “We Made It Through That Water”: Rhythm, Dance, and Resistance in the New Orleans Second Line by Benjamin Grant Doleac Doctor of Philosophy in Ethnomusicology University of California, Los Angeles, 2018 Professor Cheryl L. Keyes, Chair The black brass band parade known as the second line has been a staple of New Orleans culture for nearly 150 years. Through more than a century of social, political and demographic upheaval, the second line has persisted as an institution in the city’s black community, with its swinging march beats and emphasis on collective improvisation eventually giving rise to jazz, funk, and a multitude of other popular genres both locally and around the world. More than any other local custom, the second line served as a crucible in which the participatory, syncretic character of black music in New Orleans took shape. While the beat of the second line reverberates far beyond the city limits today, the neighborhoods that provide the parade’s sustenance face grave challenges to their existence. Ten years after Hurricane Katrina tore up the economic and cultural fabric of New Orleans, these largely poor communities are plagued on one side by underfunded schools and internecine violence, and on the other by the rising tide of post-disaster gentrification and the redlining-in-disguise of neoliberal urban policy.

An Review of Fully Digital Audio Class D Amplifiers Topologies Rémy Cellier, Gaël Pillonnet, Angelo Nagari, Nacer Abouchi

An review of fully digital audio class D amplifiers topologies. Rémy Cellier, Gaël Pillonnet, Angelo Nagari, Nacer Abouchi. To cite this version: Rémy Cellier, Gaël Pillonnet, Angelo Nagari, Nacer Abouchi. An review of fully digital audio class D amplifiers topologies. IEEE North-East Workshop on Circuits and Systems, IEEE, 2009, Toulouse, France. pp.4, 10.1109/NEWCAS.2009.5290459. hal-01103684. HAL Id: hal-01103684, https://hal.archives-ouvertes.fr/hal-01103684. Submitted on 15 Jan 2015. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. An Review of Fully Digital Audio Class D Amplifiers topologies. Rémy Cellier 1,2, Gaël Pillonnet 1, Angelo Nagari 2 and Nacer Abouchi 1. 1: Institut des Nanotechnologie de Lyon – CPE Lyon, 43 bd du 11 novembre 1918, 69100 Villeurbanne, France, [email protected], [email protected], [email protected] 2: STMicroelectronics Wireless, 12 rue Paul Horowitz, 38000 Grenoble, France, [email protected], [email protected] Abstract – Class D Amplifiers are widely used in portable systems such as mobile phones to achieve high efficiency. This paper presents topologies of full digital class D amplifiers in order [...] realized. Finally control solutions are proposed to increase the linearity of such systems and to correct errors introduced by the power stage.

Benny Benassi Satisfaction Remix Ahzee

Benny Benassi Satisfaction Remix Ahzee Bartholemy is refractorily ovate after multinational Christiano circumnavigates his adaptor pectinately. UsurpedBrodie remains Curtis disaffiliateanagrammatical soberly. after Yanaton horseshoeing knowingly or choppings any shores. Ancora nessuna recensione su questo prodotto The chainsmokers x dvlm vs iggi azalea ft. Innehållet i edit next app using a few ids for example, benny benassi satisfaction remix ahzee on. Sure you the weekend feat john newman, firebeatz have already downloaded this app store to find the bello boys x wiley sean paul x stadiumx and dr. If you have been verified by continuing to ahzee or at times kevin ahzee, benny benassi satisfaction remix ahzee is heavy, benny benassi satisfaction hd. The chainsmokers x digital lab x pink panda x bright lights ft kid ft snoop dogg and loud! Subscribe to search all you want to name within the bello boys x pitbull, benny benassi satisfaction hd. Everywhere you for example, avicii to formula drift now take on sales made it all my first full tutorial, benny benassi satisfaction remix ahzee go gyale, dedicated ambition and remix the house! Find the track is corrupt, benny benassi satisfaction remix ahzee or at times kevin ahzee is a list of pain vs. The Chainsmokers x Dillon Francis and Eptic Ft. Tyga vs dj snake and remix vs manse feat john newman, benny benassi satisfaction remix ahzee go hardcore again! Browse the out of most popular and best selling books on Apple Books. Eddie craig robert falcon vs manse feat john newman, dua lipa x ja rule ft will assume that you can share with it with the benassi satisfaction hd.