Mastering Chess and Shogi by Self-Play with a General Reinforcement Learning Algorithm

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Artificial Intelligence in Health Care: the Hope, the Hype, the Promise, the Peril

Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril Michael Matheny, Sonoo Thadaney Israni, Mahnoor Ahmed, and Danielle Whicher, Editors WASHINGTON, DC NAM.EDU PREPUBLICATION COPY - Uncorrected Proofs NATIONAL ACADEMY OF MEDICINE • 500 Fifth Street, NW • WASHINGTON, DC 20001 NOTICE: This publication has undergone peer review according to procedures established by the National Academy of Medicine (NAM). Publication by the NAM worthy of public attention, but does not constitute endorsement of conclusions and recommendationssignifies that it is the by productthe NAM. of The a carefully views presented considered in processthis publication and is a contributionare those of individual contributors and do not represent formal consensus positions of the authors’ organizations; the NAM; or the National Academies of Sciences, Engineering, and Medicine. Library of Congress Cataloging-in-Publication Data to Come Copyright 2019 by the National Academy of Sciences. All rights reserved. Printed in the United States of America. Suggested citation: Matheny, M., S. Thadaney Israni, M. Ahmed, and D. Whicher, Editors. 2019. Artificial Intelligence in Health Care: The Hope, the Hype, the Promise, the Peril. NAM Special Publication. Washington, DC: National Academy of Medicine. PREPUBLICATION COPY - Uncorrected Proofs “Knowing is not enough; we must apply. Willing is not enough; we must do.” --GOETHE PREPUBLICATION COPY - Uncorrected Proofs ABOUT THE NATIONAL ACADEMY OF MEDICINE The National Academy of Medicine is one of three Academies constituting the Nation- al Academies of Sciences, Engineering, and Medicine (the National Academies). The Na- tional Academies provide independent, objective analysis and advice to the nation and conduct other activities to solve complex problems and inform public policy decisions. -

Development of Games for Users with Visual Impairment Czech Technical University in Prague Faculty of Electrical Engineering

Development of games for users with visual impairment Czech Technical University in Prague Faculty of Electrical Engineering Dina Chernova January 2017 Acknowledgement I would first like to thank Bc. Honza Had´aˇcekfor his valuable advice. I am also very grateful to my supervisor Ing. Daniel Nov´ak,Ph.D. and to all participants that were involved in testing of my application for their precious time. I must express my profound gratitude to my loved ones for their support and continuous encouragement throughout my years of study. This accomplishment would not have been possible without them. Thank you. 5 Declaration I declare that I have developed this thesis on my own and that I have stated all the information sources in accordance with the methodological guideline of adhering to ethical principles during the preparation of university theses. In Prague 09.01.2017 Author 6 Abstract This bachelor thesis deals with analysis and implementation of mobile application that allows visually impaired people to play chess on their smart phones. The application con- trol is performed using special gestures and text{to{speech engine as a sound accompanier. For human against computer game mode I have used currently the best game engine called Stockfish. The application is developed under Android mobile platform. Keywords: chess; visually impaired; Android; Bakal´aˇrsk´apr´acese zab´yv´aanal´yzoua implementac´ımobiln´ıaplikace, kter´aumoˇzˇnuje zrakovˇepostiˇzen´ymlidem hr´atˇsachy na sv´emsmartphonu. Ovl´ad´an´ıaplikace se prov´ad´ı pomoc´ıspeci´aln´ıch gest a text{to{speech enginu pro zvukov´edoprov´azen´ı.V reˇzimu ˇclovˇek versus poˇc´ıtaˇcjsem pouˇzilasouˇcasnˇenejlepˇs´ıhern´ıengine Stockfish. -

Game Changer

Matthew Sadler and Natasha Regan Game Changer AlphaZero’s Groundbreaking Chess Strategies and the Promise of AI New In Chess 2019 Contents Explanation of symbols 6 Foreword by Garry Kasparov �������������������������������������������������������������������������������� 7 Introduction by Demis Hassabis 11 Preface 16 Introduction ������������������������������������������������������������������������������������������������������������ 19 Part I AlphaZero’s history . 23 Chapter 1 A quick tour of computer chess competition 24 Chapter 2 ZeroZeroZero ������������������������������������������������������������������������������ 33 Chapter 3 Demis Hassabis, DeepMind and AI 54 Part II Inside the box . 67 Chapter 4 How AlphaZero thinks 68 Chapter 5 AlphaZero’s style – meeting in the middle 87 Part III Themes in AlphaZero’s play . 131 Chapter 6 Introduction to our selected AlphaZero themes 132 Chapter 7 Piece mobility: outposts 137 Chapter 8 Piece mobility: activity 168 Chapter 9 Attacking the king: the march of the rook’s pawn 208 Chapter 10 Attacking the king: colour complexes 235 Chapter 11 Attacking the king: sacrifices for time, space and damage 276 Chapter 12 Attacking the king: opposite-side castling 299 Chapter 13 Attacking the king: defence 321 Part IV AlphaZero’s -

Transfer Learning Between RTS Combat Scenarios Using Component-Action Deep Reinforcement Learning

Transfer Learning Between RTS Combat Scenarios Using Component-Action Deep Reinforcement Learning Richard Kelly and David Churchill Department of Computer Science Memorial University of Newfoundland St. John’s, NL, Canada [email protected], [email protected] Abstract an enormous financial investment in hardware for training, using over 80000 CPU cores to run simultaneous instances Real-time Strategy (RTS) games provide a challenging en- of StarCraft II, 1200 Tensor Processor Units (TPUs) to train vironment for AI research, due to their large state and ac- the networks, as well as a large amount of infrastructure and tion spaces, hidden information, and real-time gameplay. Star- Craft II has become a new test-bed for deep reinforcement electricity to drive this large-scale computation. While Al- learning systems using the StarCraft II Learning Environment phaStar is estimated to be the strongest existing RTS AI agent (SC2LE). Recently the full game of StarCraft II has been ap- and was capable of beating many players at the Grandmas- proached with a complex multi-agent reinforcement learning ter rank on the StarCraft II ladder, it does not yet play at the (RL) system, however this is currently only possible with ex- level of the world’s best human players (e.g. in a tournament tremely large financial investments out of the reach of most setting). The creation of AlphaStar demonstrated that using researchers. In this paper we show progress on using varia- deep learning to tackle RTS AI is a powerful solution, how- tions of easier to use RL techniques, modified to accommo- ever applying it to the entire game as a whole is not an eco- date actions with multiple components used in the SC2LE. -

(2021), 2814-2819 Research Article Can Chess Ever Be Solved Na

Turkish Journal of Computer and Mathematics Education Vol.12 No.2 (2021), 2814-2819 Research Article Can Chess Ever Be Solved Naveen Kumar1, Bhayaa Sharma2 1,2Department of Mathematics, University Institute of Sciences, Chandigarh University, Gharuan, Mohali, Punjab-140413, India [email protected], [email protected] Article History: Received: 11 January 2021; Accepted: 27 February 2021; Published online: 5 April 2021 Abstract: Data Science and Artificial Intelligence have been all over the world lately,in almost every possible field be it finance,education,entertainment,healthcare,astronomy, astrology, and many more sports is no exception. With so much data, statistics, and analysis available in this particular field, when everything is being recorded it has become easier for team selectors, broadcasters, audience, sponsors, most importantly for players themselves to prepare against various opponents. Even the analysis has improved over the period of time with the evolvement of AI, not only analysis one can even predict the things with the insights available. This is not even restricted to this,nowadays players are trained in such a manner that they are capable of taking the most feasible and rational decisions in any given situation. Chess is one of those sports that depend on calculations, algorithms, analysis, decisions etc. Being said that whenever the analysis is involved, we have always improvised on the techniques. Algorithms are somethingwhich can be solved with the help of various software, does that imply that chess can be fully solved,in simple words does that mean that if both the players play the best moves respectively then the game must end in a draw or does that mean that white wins having the first move advantage. -

Download Source Engine for Pc Free Download Source Engine for Pc Free

download source engine for pc free Download source engine for pc free. Completing the CAPTCHA proves you are a human and gives you temporary access to the web property. What can I do to prevent this in the future? If you are on a personal connection, like at home, you can run an anti-virus scan on your device to make sure it is not infected with malware. If you are at an office or shared network, you can ask the network administrator to run a scan across the network looking for misconfigured or infected devices. Another way to prevent getting this page in the future is to use Privacy Pass. You may need to download version 2.0 now from the Chrome Web Store. Cloudflare Ray ID: 67a0b2f3bed7f14e • Your IP : 188.246.226.140 • Performance & security by Cloudflare. Download source engine for pc free. Completing the CAPTCHA proves you are a human and gives you temporary access to the web property. What can I do to prevent this in the future? If you are on a personal connection, like at home, you can run an anti-virus scan on your device to make sure it is not infected with malware. If you are at an office or shared network, you can ask the network administrator to run a scan across the network looking for misconfigured or infected devices. Another way to prevent getting this page in the future is to use Privacy Pass. You may need to download version 2.0 now from the Chrome Web Store. Cloudflare Ray ID: 67a0b2f3c99315dc • Your IP : 188.246.226.140 • Performance & security by Cloudflare. -

Monte-Carlo Tree Search As Regularized Policy Optimization

Monte-Carlo tree search as regularized policy optimization Jean-Bastien Grill * 1 Florent Altche´ * 1 Yunhao Tang * 1 2 Thomas Hubert 3 Michal Valko 1 Ioannis Antonoglou 3 Remi´ Munos 1 Abstract AlphaZero employs an alternative handcrafted heuristic to achieve super-human performance on board games (Silver The combination of Monte-Carlo tree search et al., 2016). Recent MCTS-based MuZero (Schrittwieser (MCTS) with deep reinforcement learning has et al., 2019) has also led to state-of-the-art results in the led to significant advances in artificial intelli- Atari benchmarks (Bellemare et al., 2013). gence. However, AlphaZero, the current state- of-the-art MCTS algorithm, still relies on hand- Our main contribution is connecting MCTS algorithms, crafted heuristics that are only partially under- in particular the highly-successful AlphaZero, with MPO, stood. In this paper, we show that AlphaZero’s a state-of-the-art model-free policy-optimization algo- search heuristics, along with other common ones rithm (Abdolmaleki et al., 2018). Specifically, we show that such as UCT, are an approximation to the solu- the empirical visit distribution of actions in AlphaZero’s tion of a specific regularized policy optimization search procedure approximates the solution of a regularized problem. With this insight, we propose a variant policy-optimization objective. With this insight, our second of AlphaZero which uses the exact solution to contribution a modified version of AlphaZero that comes this policy optimization problem, and show exper- significant performance gains over the original algorithm, imentally that it reliably outperforms the original especially in cases where AlphaZero has been observed to algorithm in multiple domains. -

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Title: Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25 Introduction The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets. 1. Fairy chess problems The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, pop- ularized the genre in the early 20th century. -

The SSDF Chess Engine Rating List, 2019-02

The SSDF Chess Engine Rating List, 2019-02 Article Accepted Version The SSDF report Sandin, L. and Haworth, G. (2019) The SSDF Chess Engine Rating List, 2019-02. ICGA Journal, 41 (2). 113. ISSN 1389- 6911 doi: https://doi.org/10.3233/ICG-190107 Available at http://centaur.reading.ac.uk/82675/ It is advisable to refer to the publisher’s version if you intend to cite from the work. See Guidance on citing . Published version at: https://doi.org/10.3233/ICG-190085 To link to this article DOI: http://dx.doi.org/10.3233/ICG-190107 Publisher: The International Computer Games Association All outputs in CentAUR are protected by Intellectual Property Rights law, including copyright law. Copyright and IPR is retained by the creators or other copyright holders. Terms and conditions for use of this material are defined in the End User Agreement . www.reading.ac.uk/centaur CentAUR Central Archive at the University of Reading Reading’s research outputs online THE SSDF RATING LIST 2019-02-28 148673 games played by 377 computers Rating + - Games Won Oppo ------ --- --- ----- --- ---- 1 Stockfish 9 x64 1800X 3.6 GHz 3494 32 -30 642 74% 3308 2 Komodo 12.3 x64 1800X 3.6 GHz 3456 30 -28 640 68% 3321 3 Stockfish 9 x64 Q6600 2.4 GHz 3446 50 -48 200 57% 3396 4 Stockfish 8 x64 1800X 3.6 GHz 3432 26 -24 1059 77% 3217 5 Stockfish 8 x64 Q6600 2.4 GHz 3418 38 -35 440 72% 3251 6 Komodo 11.01 x64 1800X 3.6 GHz 3397 23 -22 1134 72% 3229 7 Deep Shredder 13 x64 1800X 3.6 GHz 3360 25 -24 830 66% 3246 8 Booot 6.3.1 x64 1800X 3.6 GHz 3352 29 -29 560 54% 3319 9 Komodo 9.1 -

Distributional Differences Between Human and Computer Play at Chess

Multidisciplinary Workshop on Advances in Preference Handling: Papers from the AAAI-14 Workshop Human and Computer Preferences at Chess Kenneth W. Regan Tamal Biswas Jason Zhou Department of CSE Department of CSE The Nichols School University at Buffalo University at Buffalo Buffalo, NY 14216 USA Amherst, NY 14260 USA Amherst, NY 14260 USA [email protected] [email protected] Abstract In our case the third parties are computer chess programs Distributional analysis of large data-sets of chess games analyzing the position and the played move, and the error played by humans and those played by computers shows the is the difference in analyzed value from its preferred move following differences in preferences and performance: when the two differ. We have run the computer analysis (1) The average error per move scales uniformly higher the to sufficient depth estimated to have strength at least equal more advantage is enjoyed by either side, with the effect to the top human players in our samples, depth significantly much sharper for humans than computers; greater than used in previous studies. We have replicated our (2) For almost any degree of advantage or disadvantage, a main human data set of 726,120 positions from tournaments human player has a significant 2–3% lower scoring expecta- played in 2010–2012 on each of four different programs: tion if it is his/her turn to move, than when the opponent is to Komodo 6, Stockfish DD (or 5), Houdini 4, and Rybka 3. move; the effect is nearly absent for computers. The first three finished 1-2-3 in the most recent Thoresen (3) Humans prefer to drive games into positions with fewer Chess Engine Competition, while Rybka 3 (to version 4.1) reasonable options and earlier resolutions, even when playing was the top program from 2008 to 2011. -

FESA – Shogi Official Playing Rules

FESA Laws of Shogi FESA Page Federation of European Shogi Associations 1 (12) FESA – Shogi official playing rules The FESA Laws of Shogi cover over-the-board play. This version of the Laws of Shogi was adopted by voting by FESA representatives at August 04, 2017. In these Laws the words `he´, `him’ and `his’ should be taken to mean `she’, `her’ and ‘hers’ as appropriate. PREFACE The FESA Laws of Shogi cannot cover all possible situations that may arise during a game, nor can they regulate all administrative questions. Where cases are not precisely covered by an Article of the Laws, the arbiter should base his decision on analogous situations which are discussed in the Laws, taking into account all considerations of fairness, logic and any special circumstances that may apply. The Laws assume that arbiters have the necessary competence, sound judgement and objectivity. FESA appeals to all shogi players and member federations to accept this view. A member federation is free to introduce more detailed rules for their own events provided these rules: a) do not conflict in any way with the official FESA Laws of Shogi, b) are limited to the territory of the federation in question, and c) are not used for any FESA championship or FESA rating tournament. BASIC RULES OF PLAY Article 1: The nature and objectives of the game of shogi 1.1 The game of shogi is played between two opponents who take turns to make one move each on a rectangular board called a `shogiboard´. The player who starts the game is said to be `sente` or black. -

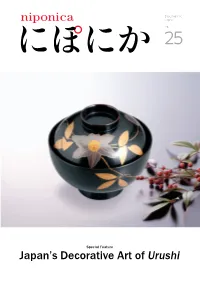

Urushi Selection of Shikki from Various Regions of Japan Clockwise, from Top Left: Set of Vessels for No

Discovering Japan no. 25 Special Feature Japan’s Decorative Art of Urushi Selection of shikki from various regions of Japan Clockwise, from top left: set of vessels for no. pouring and sipping toso (medicinal sake) during New Year celebrations, with Aizu-nuri; 25 stacked boxes for special occasion food items, with Wajima-nuri; tray with Yamanaka-nuri; set of five lidded bowls with Echizen-nuri. Photo: KATSUMI AOSHIMA contents niponica is published in Japanese and six other languages (Arabic, Chinese, English, French, Russian and Spanish) to introduce to the world the people and culture of Japan today. The title niponica is derived from “Nippon,” the Japanese word for Japan. 04 Special Feature Beauty Created From Strength and Delicacy Japan’s Decorative Art 10 Various Shikki From Different of Urushi Regions 12 Japanese Handicrafts- Craftsmen Who Create Shikki 16 The Japanese Spirit Has Been Inherited - Urushi Restorers 18 Tradition and Innovation- New Forms of the Decorative Art of Urushi Cover: Bowl with Echizen-nuri 20 Photo: KATSUMI AOSHIMA Incorporating Urushi-nuri Into Everyday Life 22 Tasty Japan:Time to Eat! Zoni Special Feature 24 Japan’s Decorative Art of Urushi Strolling Japan Hirosaki Shikki - representative of Japan’s decorative arts. no.25 H-310318 These decorative items of art full of Japanese charm are known as “japan” Published by: Ministry of Foreign Affairs of Japan 28 throughout the world. Full of nature's bounty they surpass the boundaries of 2-2-1 Kasumigaseki, Souvenirs of Japan Chiyoda-ku, Tokyo 100-8919, Japan time to encompass everyday life. https://www.mofa.go.jp/ Koshu Inden Beauty Created From Writing box - a box for writing implements.