Final Thesis

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Linux on the Road

Linux on the Road: Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices
Werner Heuser <wehe[AT]tuxmobil.org>
Linux Mobile Edition. Edition Version 3.22. TuxMobil, Berlin
Copyright © 2000-2011 Werner Heuser
2011-12-12

Revision History
Revision 3.22, 2011-12-12, revised by wh: The address of the opensuse-mobile mailing list has been added, a section on power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to the OpenSync and Funambol synchronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made.
Revision 3.21, 2005-11-14, revised by wh: Some more typos have been fixed.
Revision 3.20, 2005-11-14, revised by wh: Some typos have been fixed.
Revision 3.19, 2005-11-14, revised by wh: A link to keytouch has been added, minor changes have been made.
Revision 3.18, 2005-10-10, revised by wh: Some URLs have been updated, spelling has been corrected, minor changes have been made.
Revision 3.17.1, 2005-09-28, revised by sh: A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated.
Revision 3.17, 2005-08-28, revised by wh: Some more tools added to the external monitor/projector section, link to Zaurus Development with Damn Small Linux added to the cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to the X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes.

Sonexion 1.5 Upgrade Guide

Sonexion 1.5 Upgrade Guide

Contents
About Sonexion 1.5 Upgrade Guide
Sonexion 1.5.0 Upgrade Introduction
Prerequisites
Begin Upgrade
Upgrade Sonexion 1600/900 Software
Post-Installation Steps
Troubleshoot from Error Messages

About Sonexion 1.5 Upgrade Guide
This document provides instructions to upgrade Sonexion systems running software release 1.4.0 to the 1.5.0 software platform.

Audience
This publication is intended for use by Cray field technicians who are trained with Sonexion and familiar with the software upgrade process.

Typographic Conventions
Monospace: A Monospace font indicates program code,

Systems Programmer, Heal Thy PC Part 2: Tune-Up Time Session

Systems Programmer, Heal Thy PC, Part 2: Tune-Up Time
Session 10254, Thursday, March 15, 2012
James Willette, Sunrise e-Services
Victor Freyer, Lemon Bay Computer Service

Do-It-Yourself PC Tune-Up
• Why do it yourself?
  • Personal privacy
  • Can’t live without your computer
  • Sense of accomplishment
  • Second career?
  • The Geek Squad™ wants to charge what!?
• What do you need?
  • A plan
  • A toolkit full of free tools

Simple 15-Step Process
• Boot to Windows
• Shutdown Windows
• Evaluate hard drive health
• Backup Windows partition
• Virus review and removal
• Correct file system errors
• Windows System File Checker
• Uninstall unnecessary programs
• Remove unneeded programs from startup
• Remove Internet Explorer toolbars
• Remove temporary files
• Defragment Windows partition
• Update system BIOS
• Update programs
• Install anti-virus software

Boot and Shutdown
• Computer must not be Suspended or Hibernating
  • Likelihood of corrupting your file system
• Benchmark startup time
  • So you can compare when you’re done
• Shutdown to ensure a clean file system close
  • Save yourself from problems later

Evaluate Hard Drive Health
• Boot SystemRescueCD
  • Download the live Linux cd www.sysresccd.org
  • Burn with isorecorder.alexfeinman.com
• Review hard drive SMART statistics
  • smartctl -a /dev/sda
• Run SMART self test
  • smartctl -t short /dev/sda
  • smartctl -l selftest /dev/sda

SMART Statistics
• smartctl -a /dev/sda
Model Family: Western Digital Scorpio family
Device Model: WDC WD800BEVE-00UYT0
Serial Number: WD-WXE408L96343
Firmware Version: 01.04A01
User Capacity: 80,026,361,856 bytes
...
SMART Attributes Data Structure revision number: 16
Vendor Specific SMART Attributes with Thresholds:
ID# ATTRIBUTE_NAME FLAG VALUE WORST THRESH TYPE UPDATED WHEN_FAILED RAW_VALUE
..

Clustering with Openmosix

Clustering with openMosix
Maurizio Davini (Department of Physics and INFN Pisa)
Presented by Enrico Mazzoni (INFN Pisa)
12/06/2003 HTASC

Introduction
• What is openMosix?
  – Single-System Image
  – Preemptive Process Migration
  – The openMosix File System (MFS)
• Application Fields
• openMosix vs Beowulf
• The people behind openMosix
• The openMosix GNU project
• Fork of openMosix code

The openMosix Project Milestones
• Born in the early 80s on a PDP-11/70. One full PDP and one disk-less PDP, hence the process migration idea.
• First implementation on BSD/pdp as an M.Sc. thesis.
• VAX 11/780 implementation (different word size, different memory architecture)
• Motorola / VME bus implementation as a Ph.D. thesis in 1993, under contract from the IDF (Israeli Defence Forces)
• 1994 BSDi version
• GNU and Linux since 1997
• Contributed dozens of patches to the standard Linux kernel
• Split Mosix / openMosix November 2001
• Mosix standard in Linux 2.5?

What is openMosix
• Linux kernel extension (2.4.20) for clustering
• Single System Image, like an SMP, for:
  – No need to modify applications
  – Adaptive resource management to dynamic load characteristics (CPU intensive, RAM intensive, I/O etc.)
  – Linear scalability (unlike SMP)

A two tier technology
1. Information gathering and dissemination
  – Support scalable configurations by probabilistic dissemination algorithms
  – Same overhead for 16 nodes or 2056 nodes
2. Pre-emptive process migration that can migrate any process, anywhere, anytime, transparently
  – Supervised by adaptive

Netinfo 2009-06-11

Netinfo 2009-06-11

On 2009-06-09 Microsoft released ten updates that close 31 security holes in, among others, Windows, Internet Explorer, Word, Excel, and Windows Search. 18 of the fixes are rated critical and eleven are rated important; the updates are available for both servers and workstations. The security updates are available on Windows Update. The most important of the released security updates is the one for Internet Explorer 8.

Security Updates available for Adobe Reader and Acrobat
Release date: June 9, 2009
Affected software versions: Adobe Reader 9.1.1 and earlier versions; Adobe Acrobat Standard, Pro, and Pro Extended 9.1.1 and earlier versions
Severity rating: Adobe categorizes this as a critical update and recommends that users apply the update for their product installations. These vulnerabilities would cause the application to crash and could potentially allow an attacker to take control of the affected system.

SystemRescueCd
Description: SystemRescueCd is a Linux system on a bootable CD-ROM for repairing your system and recovering your data after a crash. It aims to provide an easy way to carry out admin tasks on your computer, such as creating and editing the partitions of the hard disk. It contains a lot of system tools (parted, partimage, fstools, ...) and basic tools (editors, midnight commander, network tools). It is very easy to use: just boot the CDROM. The kernel supports most of the important file systems (ext2/ext3/ext4, reiserfs, reiser4, btrfs, xfs, jfs, vfat, ntfs, iso9660), as well as network filesystems (samba and nfs).

Multiboot Guide Booting Fedora and Other Operating Systems

Fedora 23 Multiboot Guide Booting Fedora and other operating systems. Fedora Documentation Project Copyright © 2013 Fedora Project Contributors. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law. Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https://fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners.

Systems Programmer, Heal Thy PC Part 1: Virus Removal Session

Systems Programmer, Heal Thy PC, Part 1: Virus Removal
Session 10255, Tuesday, March 13, 2012
James Willette, Sunrise e-Services
Victor Freyer, Lemon Bay Computer Service

Disclaimer
• I use “virus” to refer to the whole class of malware that may infect your PC.
• Purists would say that virii are programs that spread themselves.
• Most of today’s malware is installed by the end user, and by definition is not a virus.

Do-It-Yourself Virus Removal
• Why do it yourself?
  • Company has Draconian rules about PC use
  • Anger and disbelief
  • Pride
  • Second career?
  • The Geek Squad™ wants to charge what!?
• What do you need?
  • Clean boot environment
  • An eye for what’s unusual
  • A toolkit full of free tools

So you think you have a virus?
• Signs of malware
  • Computer is slow
  • Click on a Google result and go to some unrelated page
  • Lots of “undeliverable message” alerts in your inbox
  • “Warning, you have 732 viruses!!!!!”
  • Unable to run Windows updates
  • Unable to update your anti-virus program
  • Unable to connect to the Internet
  • Excessive TCP connections popup warning
  • “Do you want to allow this program to run?”

So you think you have a virus?
• Simple five-step process
  • Turn the machine off – no graceful shutdown
  • Boot to a clean environment
  • Back up the boot drive
  • Disable the virus program
  • Fix corrupted registry and configuration files

Boot to a Clean Environment
• WinBuilder – freeware to build Windows boot disks http://reboot.pro/forum/22 (registration required)
  • XP, Vista, Windows 7, Windows 8, Driveimage XML
• EaseUS Todo Backup

Sonexion Administrator Guide

Sonexion Administrator Guide S2537

Contents
About Sonexion Administrator Guide
Hardware Architecture
Software Architecture
What Is and Is Not Supported in Sonexion 2.0.0
Log In to CSSM
Change Network Settings
  Create Custom LNET Configuration
  Change DNS Resolver Configuration
  Change Externally Facing IP Addresses
  Change LDAP Settings in Daily Mode

Formation Debian GNU/Linux

Formation Debian GNU/Linux (Debian GNU/Linux Training)
Alexis de Lattre [email protected]
Formation Debian GNU/Linux, by Alexis de Lattre
Copyright © 2002, 2003 Alexis de Lattre

This document is available in the following formats:
• HTML online (http://people.via.ecp.fr/~alexis/formation-linux/) or zipped HTML (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-html.zip) (3.6 MB),
• zipped PDF (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-pdf.zip) (4 MB),
• zipped RTF (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-rtf.zip) (3.5 MB),
• zipped text (http://people.via.ecp.fr/~alexis/formation-linux/formation-linux-txt.zip) (215 KB).

The most recent version of this document is available at http://people.via.ecp.fr/~alexis/formation-linux/.

You may copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, version 1.2 or any later version published by the Free Software Foundation. The text of the license is in the appendix GNU Free Documentation License.

Table of contents
About this document
I. Installing Debian GNU/Linux
  1. Linux, GNU, free software... what is it all about?

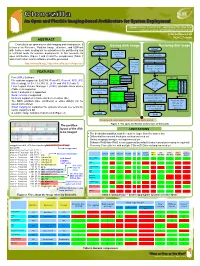

Clonezilla an Open and Flexible Imaging-Based Architecture for System Deployment

Clonezilla: An Open and Flexible Imaging-based Architecture for System Deployment
Steven Shiau, Ceasar Sun, Jazz Wang, Thomas Tsai
{steven, ceasar, jazz, thomas}@nchc.org.tw
Free Software Lab, NCHC, Taiwan

ABSTRACT
Clonezilla is an open-source disk imaging and cloning suite. It is based on Partclone, Partition Image, ntfsclone, and UDPcast, with features such as plug-ins for subsystems like partitioning and a multicast mode for massive deployments. In this research, its open architecture (Figure 1 and 2) and the comparisons (Table 1 and 2) with other similar software are presented.
http://clonezilla.org , http://clonezilla.sourceforge.net/

FEATURES
• Free (GPL) Software
• File systems supported: Ext2/3/4, ReiserFS, Reiser4, XFS, JFS, Btrfs (testing), HFS+, FAT, NTFS, UFS+ and VMFS (Table 1)
• Linux Logical Volume Manager 2 (LVM2) stackable block device (Table 2) is supported
• GRUB 1 and GRUB 2 are supported
• Serial console is supported
• Multicast is supported in Clonezilla Server Edition (SE)
• The MBR, partition table, partition(s) or entire disk(s) can be saved and restored
• Smart copying on supported file systems; unsupported file systems fall back to sector-to-sector copying via dd
• A tunable image format is implemented (Figure 2)
• Imaging and compressing engines can be easily added

Figure 1. (flowcharts: Saving disk image, Restoring disk image)

Copyrighted Material

Index alias command, 57–58 SYMBOLS alsamixer, 109–110 #! syntax, shells and, 64 AMD-V virtualization support ... (ellipses), omitted information in (svm), 298–299 code, 10 apropos command, 12–13 [ ] (brackets), testing shell scripts APT (Advanced Package Tool). See and, 65 also software, managing with APT ~. (tilde and period), exiting ssh vs. aptitude, 25 sessions and, 262 basics of, 21 > (greater-than sign) commands for security, 295 appending files and, 55 apt-get command to direct output to files, 55 update command, 35, 37 . (dots), in PATH environment clean option, 29–30 variables, 65 installing packages and, 28–29 ! (exclamation points), negating KVMs and, 301 search criterion with, 85 remove option, 29 / (forward slash), IRC commands and, aptitude. See also software, managing 253 with aptitude ; (semicolons), vi and, 315 vs. APT, 25 basics of, 21 A Aptitude Survival Guide, 35 -a option, (debsums), 42 Aptitude User’s Manual, 35 adding apt-key command, 70 content to scripts, 65–68 archives. See also backups to files to archives, 160 compressed archives groups, 286 backing up with SSH, 161–162 passwords, 281 ARP (Address Resolution Protocol), software, virtualization hosts and, 301 checking, 229–230 software collections, 24–25 ASCII art, viewing movies and, 123 text, 91, 93 ASCII text, reading, 99 user accounts, 280–281 at command, 186 Address Resolution Protocol (ARP), Atheros, 225 checking, 229–230 audio, 107–117 administration of remote systems. See adjusting levels, 109–110 remote systems administration encoding, 111–114 Advanced Linux Sound Architecture files, converting, 116–117 (ALSA), 109 playing music, 107–109 Advanced Package Tool.

White Paper: Group State Quorum

WHITE PAPER
DELL EMC POWERSCALE ONEFS CLUSTER COMPOSITION, QUORUM, AND GROUP STATE

Abstract
This paper explores cluster quorum, group state, and the group management protocol on a Dell EMC PowerScale cluster.
April 2021

1 | Dell EMC PowerScale OneFS Cluster Composition, Quorum, & Group State ©2021 Dell Inc. or its subsidiaries.

Revisions
Version   Date             Comment
1.0       November 2017    Updated for OneFS 8.1.1
2.0       February 2019    Updated for OneFS 8.1.3
3.0       April 2019       Updated for OneFS 8.2
4.0       August 2019      Updated for OneFS 8.2.1
5.0       December 2019    Updated for OneFS 8.2.2
6.0       June 2020        Updated for OneFS 9.0
7.0       October 2020     Updated for OneFS 9.1
8.0       April 2021       Updated for OneFS 9.2

Acknowledgements
This paper was produced by the following:
Author: Nick Trimbee

The information in this publication is provided “as is.” Dell Inc. makes no representations or warranties of any kind with respect to the information in this publication, and specifically disclaims implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any software described in this publication requires an applicable software license.
Copyright © Dell Inc. or its subsidiaries. All Rights Reserved. Dell, EMC, Dell EMC and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners.

TABLE OF CONTENTS
Intended Audience
Cluster Composition, Quorum and Group State
Cluster Composition and Group State