Improving Color Reproduction Accuracy on Cameras

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

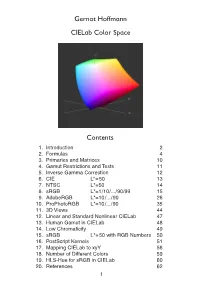

CIELab Color Space

Gernot Hoffmann, CIELab Color Space. Contents: 1. Introduction; 2. Formulas; 3. Primaries and Matrices; 4. Gamut Restrictions and Tests; 5. Inverse Gamma Correction; 6. CIE L*=50; 7. NTSC L*=50; 8. sRGB L*=1/10/.../90/99; 9. AdobeRGB L*=10/.../90; 10. ProPhotoRGB L*=10/.../90; 11. 3D Views; 12. Linear and Standard Nonlinear CIELab; 13. Human Gamut in CIELab; 14. Low Chromaticity; 15. sRGB L*=50 with RGB Numbers; 16. PostScript Kernels; 17. Mapping CIELab to xyY; 18. Number of Different Colors; 19. HLS-Hue for sRGB in CIELab; 20. References. 1.1 Introduction. CIE XYZ is an absolute color space (not device dependent). Each visible color has non-negative coordinates X, Y, Z. CIE xyY, the horseshoe diagram as shown below, is a perspective projection of XYZ coordinates onto a plane xy. The luminance is missing. CIELab is a nonlinear transformation of XYZ into coordinates L*, a*, b*. The gamut for any RGB color system is a triangle in the CIE xyY chromaticity diagram, here shown for the CIE primaries, the NTSC primaries, the Rec.709 primaries (which are also valid for sRGB and therefore for many PC monitors) and the non-physical working space ProPhotoRGB. The white points are individually defined for the color spaces. The CIELab color space was intended for equal perceptual differences for equal changes in the coordinates L*, a* and b*. Color differences deltaE are defined as Euclidean distances in CIELab. This document shows color charts in CIELab for several RGB color spaces.
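The two ideas in the excerpt above (a nonlinear transform from XYZ to L*, a*, b*, and deltaE as a Euclidean distance) can be sketched as follows. This is a minimal illustration that assumes the standard CIE 1976 formulas and a D65 reference white; the excerpt itself does not reproduce the formulas, and the function names are hypothetical.

```python
import math

# Assumed reference white: D65, with Y normalized to 1.0.
D65_WHITE = (0.95047, 1.0, 1.08883)

def _f(t):
    # CIE 1976 nonlinearity: cube root above a small threshold, linear below it.
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0

def xyz_to_lab(x, y, z, white=D65_WHITE):
    """Convert XYZ (same scale as the white point) to (L*, a*, b*)."""
    fx, fy, fz = _f(x / white[0]), _f(y / white[1]), _f(z / white[2])
    return (116.0 * fy - 16.0, 500.0 * (fx - fy), 200.0 * (fy - fz))

def delta_e(lab1, lab2):
    """Color difference as the Euclidean distance between two CIELab triples."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(lab1, lab2)))
```

For instance, `delta_e(xyz_to_lab(*D65_WHITE), (100.0, 0.0, 0.0))` should be close to zero, since the reference white maps to L* = 100 with a* = b* = 0.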

Lesson05 Colorimetry.Pdf

VIDEO SIGNALS: Colorimetry. WHAT IS COLOR? [Figure labels: electromagnetic wave; spectral power distribution of illuminant D65 (nm); reflectance spectrum; spectral power distribution of a neon lamp; spectral power distribution of illuminant F1; spectral power distribution under D65 and under F1; observer and stimulus; spectral sensitivity of the L, M and S cones; retina with rods, cones, bipolar, horizontal, amacrine and ganglion cells, incoming light and the optic nerve.] Color vision: cones and rods. THE ELECTROMAGNETIC SPECTRUM [Figure labels: incident light, prism.] SPECTRAL EXAMPLES The light emitted from a laser is strictly monochromatic, and its spectrum consists of a single line where all the energy is concentrated (example: He-Ne laser). SPECTRAL EXAMPLES The light emitted from the three different phosphors (blue, green, red) of a traditional color cathode ray tube (CRT). SPECTRAL EXAMPLES The light emitted from a gas vapour lamp is a set of different spectral lines. Their values are linked to the allowed energy steps performed by the excited gas electrons. SPECTRAL EXAMPLES Many objects, when heated, emit light with a spectral distribution close to "black body" radiation. It follows Planck's law, and its shape depends only on the absolute temperature of the object. Examples: the stars, the sun, incandescent lamps. THE "BLACK BODY" LAW Planck's law states that I(ν,T) = (2hν³/c²) / (exp(hν/(kT)) − 1), where: I(ν,T) dν is the amount of energy per unit surface area per unit time per unit solid angle emitted in the frequency range between ν and ν + dν by a black body at temperature T; h is the Planck constant; c is the speed of light in a vacuum; k is the Boltzmann constant; ν is the frequency of the electromagnetic radiation; T is the temperature in kelvin.
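The quantity I(ν,T) described above can be evaluated numerically. The sketch below assumes the standard frequency form of Planck's law, I(ν,T) = (2hν³/c²) / (exp(hν/(kT)) − 1), with SI values for the constants; the function name is hypothetical and not taken from the slides.

```python
import math

H = 6.62607015e-34   # Planck constant, J*s
C = 299792458.0      # speed of light in a vacuum, m/s
K = 1.380649e-23     # Boltzmann constant, J/K

def planck_radiance(nu, temperature):
    """Black-body spectral radiance in W * sr^-1 * m^-2 * Hz^-1.

    nu is the frequency in Hz and temperature is in kelvin.
    """
    x = H * nu / (K * temperature)
    # expm1(x) computes exp(x) - 1 accurately when x is small.
    return (2.0 * H * nu ** 3 / C ** 2) / math.expm1(x)
```

Because the shape depends only on temperature, evaluating `planck_radiance` over a range of frequencies for two temperatures shows the hotter body emitting more at every frequency, with its peak shifted toward higher frequencies.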

The Art of Digital Black & White by Jeff Schewe There's Just Something

The Art of Digital Black & White, by Jeff Schewe. There's just something magical about watching an image develop on a piece of photo paper in the developer tray. Seeing the paper go from a blank white sheet to a photograph is what many photographers think of when they think of Black & White photography. That process of watching the image develop is what got me hooked on photography over 30 years ago, and Black & White is where my heart really lives even though I've done more color work professionally. I used to have the brown stains on my fingers like any good darkroom tech, but commercially, I turned toward color photography. Later, when going digital, I basically gave up being able to ever achieve what used to be commonplace from the darkroom, until just recently. At about the same time Kodak announced it was going to stop making Black & White photo paper, Epson announced their new line of digital ink jet printers and a new ink, Ultrachrome K3 (3 blacks, hence the K3), that has given me hope of returning to darkroom quality prints but with a digital printer instead of working in a smelly darkroom environment. Combine the new printers with the power of digital image processing in Adobe Photoshop and the capabilities of recent digital cameras and I think you'll see a strong trend towards photographers going digital to get the best Black & White prints possible. Making the optimal Black & White print digitally is not simply a click of the shutter and push button printing.

The Crystal and Molecular Structure of Tris(ortho-aminobenzoato)aquoyttrium(III)

University of New Hampshire, University of New Hampshire Scholars' Repository. Doctoral Dissertations, Student Scholarship, Fall 1979. THE CRYSTAL AND MOLECULAR STRUCTURE OF TRIS(ORTHO-AMINOBENZOATO)AQUOYTTRIUM(III). SHARON MARTIN BOUDREAU. Follow this and additional works at: https://scholars.unh.edu/dissertation Recommended Citation: BOUDREAU, SHARON MARTIN, "THE CRYSTAL AND MOLECULAR STRUCTURE OF TRIS(ORTHO-AMINOBENZOATO)AQUOYTTRIUM(III)" (1979). Doctoral Dissertations. 1228. https://scholars.unh.edu/dissertation/1228 This Dissertation is brought to you for free and open access by the Student Scholarship at University of New Hampshire Scholars' Repository. It has been accepted for inclusion in Doctoral Dissertations by an authorized administrator of University of New Hampshire Scholars' Repository. For more information, please contact [email protected]. 8009658 Boudreau, Sharon Martin. THE CRYSTAL AND MOLECULAR STRUCTURE OF TRIS(ORTHO-AMINOBENZOATO)AQUOYTTRIUM(III). University of New Hampshire, Ph.D., 1979. University Microfilms International, 300 N. Zeeb Road, Ann Arbor, MI 48106; 18 Bedford Row, London WC1R 4EJ, England. PLEASE NOTE: In all cases this material has been filmed in the best possible way from the available copy. Problems encountered with this document have been identified here with a check mark. 1. Glossy photographs ✓ 2. Colored illustrations 3. Photographs with dark background ✓ 4. Illustrations are poor copy 5. Print shows through as there is text on both sides of page 6. Indistinct, broken or small print on several pages throughout ✓ 7. Tightly bound copy with print lost in spine 8. Computer printout pages with indistinct print 9.

Fast and Stable Color Balancing for Images and Augmented Reality

Fast and Stable Color Balancing for Images and Augmented Reality. Thomas Oskam (1,2), Alexander Hornung (1), Robert W. Sumner (1), Markus Gross (1,2). (1) Disney Research Zurich, (2) ETH Zurich. Abstract: This paper addresses the problem of globally balancing colors between images. The input to our algorithm is a sparse set of desired color correspondences between a source and a target image. The global color space transformation problem is then solved by computing a smooth vector field in CIE Lab color space that maps the gamut of the source to that of the target. We employ normalized radial basis functions for which we compute optimized shape parameters based on the input images, allowing for more faithful and flexible color matching compared to existing RBF-, regression- or histogram-based techniques. Furthermore, we show how the basic per-image matching can be efficiently and robustly extended to the temporal domain using RANSAC-based correspondence classification. Besides interactive color balancing for images, these properties render our method extremely useful for automatic, consistent embedding of synthetic graphics in video, as required by applications such as augmented reality. [Figure 1 panel labels: Source Image, Target Image, Color Balanced; Rendered Objects, Tracked Colors, balancing, Augmented Image.] Figure 1. Two applications of our color balancing algorithm. Top: an underexposed image is balanced using only three user selected correspondences to a target image. Bottom: our extension for temporally stable color balancing enables seamless compositing in augmented reality applications by using known colors in the scene as constraints. 1. Introduction [...] even for different scenes. With today's tools this process requires considerable, cost-intensive manual efforts.

Preparing Images for Delivery

TECHNICAL PAPER: Preparing Images for Delivery. TABLE OF CONTENTS: How to prepare RGB files for CMYK; Soft proofing and gamut warning; Final image sizing; Image sharpening; Converting to CMYK; What about providing RGB files?; The proof; Marking your territory; File formats for delivery; Check list for file delivery; Additional resources. So, you've done a great job for your client. You've created a nice image that you both agree meets the requirements of the layout. Now what do you do? You deliver it (so you can bill it!). But, in this digital age, how you prepare an image for delivery can make or break the final reproduction. Guess who will get the blame if the image's reproduction is less than satisfactory? Do you even need to guess? What should photographers do to ensure that their images reproduce well in print? Take some precautions and learn the lingo so you can communicate, because a lack of crystal-clear communication is at the root of most every problem on press. It should be no surprise that knowing what the client needs is a requirement of professional photographers. But does that mean a photographer in the digital age must become a prepress expert? Kind of, if only to know exactly what to supply your clients. There are two perfectly legitimate approaches to the problem of supplying digital files for reproduction. One approach is to supply RGB files, and the other is to take responsibility for supplying CMYK files. Either approach is valid, each with positives and negatives.

Dalmatian's Definitions

THE BLACK & WHITE PAPERS: DALMATIAN'S DEFINITIONS. 8 BIT: Bit depth determines the number of possible colors or tones that can be assigned to each pixel. In 8 bit files, 1 of 256 tones is assigned to each pixel. 8-bit JPEG is the default setting for most cameras; whenever possible, set the camera to RAW capture for the best image capture possible. 16 BIT: In 16 bit files, 1 of 65,536 tones is assigned to each pixel. 16 bit files have the greatest range of tonalities and a more realistic interpretation of continuous tone. Note the increase in tonalities from 8 bit to 16 bit. If your aim is the quality of the image, then 16 bit is a must. ADOBE 1998 COLOR SPACE: Adobe '98 is the preferred RGB color space that places the captured and therefore printable colors within larger parameters, covering approximately 50% of the color space visible to the human eye. Whenever possible, change the camera's default sRGB setting to Adobe 1998 in order to increase available color capture. When an image is captured in sRGB, it is best to leave the color space unchanged so color rendering is unaffected. ARCHIVAL: Archival materials are those with a neutral pH balance that will not degrade over time and are resistant to UV fading.

Specification of sRGB

How to interpret the sRGB color space (specified in IEC 61966-2-1) for ICC profiles. A. Key sRGB color space specifications (see IEC 61966-2-1 https://webstore.iec.ch/publication/6168 for more information). 1. Chromaticity co-ordinates of primaries: R: x = 0.64, y = 0.33, z = 0.03; G: x = 0.30, y = 0.60, z = 0.10; B: x = 0.15, y = 0.06, z = 0.79. Note: These are defined in ITU-R BT.709 (the television standard for HDTV capture). 2. Reference display 'Gamma': Approximately 2.2 (see precise specification of color component transfer function below). 3. Reference display white point chromaticity: x = 0.3127, y = 0.3290, z = 0.3583 (equivalent to the chromaticity of CIE Illuminant D65). 4. Reference display white point luminance: 80 cd/m² (includes veiling glare). Note: The reference display white point tristimulus values are: $X_{abs} = 76.04$, $Y_{abs} = 80$, $Z_{abs} = 87.12$. 5. Reference veiling glare luminance: 0.2 cd/m² (this is the reference viewer-observed black point luminance). Note: The reference viewer-observed black point tristimulus values are assumed to be: $X_{abs} = 0.1901$, $Y_{abs} = 0.2$, $Z_{abs} = 0.2178$. These values are not specified in IEC 61966-2-1, and are an additional interpretation provided in this document. 6. Tristimulus value normalization: The CIE 1931 XYZ values are scaled from 0.0 to 1.0. Note: The following scaling equations can be used. These equations are not provided in IEC 61966-2-1, and are an additional interpretation provided in this document.

$$X_N = \frac{76.04\,(X_{abs} - 0.1901)}{80\,(76.04 - 0.1901)} = 0.0125313\,(X_{abs} - 0.1901)$$

$$Y_N = \frac{Y_{abs} - 0.2}{80 - 0.2} = 0.0125313\,(Y_{abs} - 0.2)$$

$$Z_N = \frac{87.12\,(Z_{abs} - 0.2178)}{80\,(87.12 - 0.2178)} = 0.0125313\,(Z_{abs} - 0.2178)$$

7.
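The tristimulus normalization in item 6 above can be sketched in a few lines. This is an illustration only: it uses the white point and black point values quoted in the excerpt, while the function and constant names are hypothetical.

```python
# Reference display white point and viewer-observed black point (cd/m^2),
# as quoted in the excerpt above.
WHITE_ABS = (76.04, 80.0, 87.12)
BLACK_ABS = (0.1901, 0.2, 0.2178)

def normalize_xyz(x_abs, y_abs, z_abs):
    """Scale absolute XYZ so black maps to 0 and the white point to Y_N = 1."""
    y_white = WHITE_ABS[1]
    normalized = []
    for value, white, black in zip((x_abs, y_abs, z_abs), WHITE_ABS, BLACK_ABS):
        # Same form for all three channels; for Y the white terms cancel,
        # leaving (Y_abs - 0.2) / (80 - 0.2).
        normalized.append(white * (value - black) / (y_white * (white - black)))
    return tuple(normalized)
```

Passing the white point `(76.04, 80.0, 87.12)` should give approximately `(0.9505, 1.0, 1.089)`, and each channel's slope works out to about 0.0125313, matching the constant quoted in the excerpt.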

Computational RYB Color Model and Its Applications

IIEEJ Transactions on Image Electronics and Visual Computing, Vol. 5, No. 2 (2017), Special Issue on Application-Based Image Processing Technologies. Computational RYB Color Model and its Applications. Junichi SUGITA† (Member), Tokiichiro TAKAHASHI†† (Member). †Tokyo Healthcare University, ††Tokyo Denki University/UEI Research. <Summary> The red-yellow-blue (RYB) color model is a subtractive model based on pigment color mixing and is widely used in art education. In the RYB color model, red, yellow, and blue are defined as the primary colors. In this study, we apply this model to computers by formulating a conversion between the red-green-blue (RGB) and RYB color spaces. In addition, we present a class of compositing methods in the RYB color space. Moreover, we prescribe the appropriate uses of these compositing methods in different situations. By using RYB color compositing, paint-like compositing can be easily achieved. We also verified the effectiveness of our proposed method by using several experiments and demonstrated its application on the basis of RYB color compositing. Keywords: RYB, RGB, CMY(K), color model, color space, color compositing. 1. Introduction. Most people have had the experience of creating an arbitrary color by mixing different color pigments on a palette or a canvas. The red-yellow-blue (RYB) color model proposed [...] man perception system and computer displays, most computer applications use the red-green-blue (RGB) color model 3); however, this model is not comprehensible for many people who are not trained in the RGB color model because of its use of additive color mixing. As shown in Fig.

Color Printing Techniques

4-H Photography Skill Guide: Color Printing Techniques. Enlarging Color Negatives. Making your own color prints from color negatives provides a whole new area of photography for you to enjoy. You can make prints nearly any size you want, from small ones to big enlargements. You can crop pictures for the composition that's most pleasing to you. You can control the lightness or darkness of the print, as well as the color balance, and you can experiment with control techniques to achieve just the effect you're looking for. The possibilities for creating beautiful color prints are as great as your own imagination. You can print color negatives on conventional color printing paper. It's the kind of paper your photofinisher uses. It requires precise processing in two or three chemical solutions and several washes in water. It can be processed in trays or a drum processor. Color Relations. Before going ahead into this fascinating subject of color printing, let's make sure we understand some basic photographic color and visual relationships. 1. White light (sunlight or the light from an enlarger lamp) is made up of three primary colors: red, green, and blue. These colors are known as additive primary colors. When added together in approximately equal amounts, they produce white light. 2. Color-negative film has a separate light-sensitive layer to correspond with each of these three additive primary colors. Images recorded on these layers appear as complementary (opposite) colors. • A red subject records on the red-sensitive layer as cyan (blue-green). • A green subject records on the green-sensitive layer as magenta (blue-red).

Simplest Color Balance

Published in Image Processing On Line on 2011-10-24. Submitted on 2011-00-00, accepted on 2011-00-00. ISSN 2105-1232 © 2011 IPOL & the authors CC-BY-NC-SA. This article is available online with supplementary materials, software, datasets and online demo at http://dx.doi.org/10.5201/ipol.2011.llmps-scb Simplest Color Balance. Nicolas Limare (1), Jose-Luis Lisani (2), Jean-Michel Morel (1), Ana Belén Petro (2), Catalina Sbert (2). (1) CMLA, ENS Cachan, France ([email protected], [email protected]); (2) TAMI, Universitat Illes Balears, Spain (fjoseluis.lisani, anabelen.petro, [email protected]). Abstract: In this paper we present the simplest possible color balance algorithm. The assumption underlying this algorithm is that the highest values of R, G, B observed in the image must correspond to white, and the lowest values to obscurity. The algorithm simply stretches, as much as it can, the values of the three channels Red, Green, Blue (R, G, B), so that they occupy the maximal possible range [0, 255] by applying an affine transform ax+b to each channel. Since many images contain a few aberrant pixels that already occupy the 0 and 255 values, the proposed method saturates a small percentage of the pixels with the highest values to 255 and a small percentage of the pixels with the lowest values to 0, before applying the affine transform. Source Code: The source code (ANSI C), its documentation, and the online demo are accessible at the IPOL web page of this article.
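The procedure described in the abstract above (saturate a small percentage of the darkest and brightest pixels, then stretch each channel to [0, 255] with an affine transform) can be sketched for a single channel as follows. This is not the authors' ANSI C implementation; the function name, the percentage parameters, and the sort-based quantile selection are assumptions made for illustration.

```python
def simplest_color_balance(channel, s_low=1.0, s_high=1.0):
    """Balance one channel given as a list of integers in [0, 255].

    s_low and s_high are the percentages of pixels to saturate at the
    dark and bright ends (assumed small, with s_low + s_high < 100).
    """
    n = len(channel)
    ordered = sorted(channel)
    # Values at the chosen quantiles become the new black and white levels.
    v_min = ordered[int(n * s_low / 100.0)]
    v_max = ordered[n - 1 - int(n * s_high / 100.0)]
    if v_max <= v_min:
        return list(channel)  # flat channel: nothing to stretch
    scale = 255.0 / (v_max - v_min)
    # Clip to [v_min, v_max], then apply the affine map a*x + b.
    return [round((min(max(v, v_min), v_max) - v_min) * scale) for v in channel]
```

Applying the function to the R, G and B channels independently gives the per-channel stretch the abstract describes; sorting keeps the sketch short, though a histogram would be the more usual choice for large images.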

The Crystal and Molecular Structures of Some Organophosphorus Insecticides and Computer Methods for Structure Determination Ricky Lee Lapp Iowa State University

Iowa State University Capstones, Theses and Dissertations. Retrospective Theses and Dissertations, 1979. The crystal and molecular structures of some organophosphorus insecticides and computer methods for structure determination. Ricky Lee Lapp, Iowa State University. Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Physical Chemistry Commons. Recommended Citation: Lapp, Ricky Lee, "The crystal and molecular structures of some organophosphorus insecticides and computer methods for structure determination" (1979). Retrospective Theses and Dissertations. 7224. https://lib.dr.iastate.edu/rtd/7224 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. INFORMATION TO USERS: This was produced from a copy of a document sent to us for microfilming. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the material submitted. The following explanation of techniques is provided to help you understand markings or notations which may appear on this reproduction. 1. The sign or "target" for pages apparently lacking from the document photographed is "Missing Page(s)". If it was possible to obtain the missing page(s) or section, they are spliced into the film along with adjacent pages. This may have necessitated cutting through an image and duplicating adjacent pages to assure you of complete continuity.