Adaptive Optics in Full-Field Spatially Incoherent Interferometry and Its Retinal Imaging

Peng Xiao

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Part I.—Minutes, Proclamations, Appointments, &C

REGISTERED AS A NEWSPAPER IN CEYLON. • 'V PubIt»fjBb frji ftuf IjorifK. No. 6,894 — FRIDAY, AUGUST 24? 1917. P a r t I.__General: Minutes, Proclamations, Appointments, and P a r t III.— Provincial Administration. General Government Notifications. Part IV.— Land Settlement. Past II.—Legal and Judicial. P a r t V.—Mercantile, Marine, Municipal, Local, &e. Separate paging is given to each Part, in order that it may be filed separately. Part I.—Minutes, Proclamations, Appointments, &c PAGE FAGS Minutes by the Governor .. .. • — Miscellaneous Departmental Notices 819 Proclamations by the Governor .. .. .. 789 Notices calling for Tenders .. Appointments by the Governor .. .. 800 Contracts for Supplies of Stores .. .. Appointments, &o., oi Registrars .. .. .. 802 Sales of Unserviceable Articles 825 Government Notifications .. .. 803 Registrar-General’s Vital Statistics 825 Revenue and Expenditure Returns Meteorological Returns .. Notices by the Currency Commissioners Books registered under Ordinance No. I of 1885 PROCLAMATIONS BY THU GOVERNOR. In the Name of His Majesty GEORGE THE FIFTH, of the United Kingdom of Great Britain and Ireland and of the British Dominions beyond the Seas, King, Defender of the Faith. PROCLAMATION. By Has Excellency Sir J ohn Anderson, Knight Grand Cross of the Most Distinguished Order of Saint Michael and Saint George, Knight Commander of the Most Honourable Order of the Bath,. Governor and Commander-in-Chief in and over the Island of, Ceylon, with the Dependencies thereof. John Anderson. HEREAS by section 34 (1) -

Seunghyun Sim Bas Van Genabeek Takuzo Aida Bert Meijer

In association with NASA Takuzo Aida December 10, 2019 Seunghyun Sim Bas van Genabeek Bert Meijer Tokyo University Caltech SyMO-Chem Eindhoven University . cmeacs.org In association with NASA ACS GLOBAL OUTSTANDING GRADUATE STUDENT & MENTOR AWARDS IN POLYMER SCIENCE AND ENGINEERING SPONSORED BY CME Seunghyun Sim Bas van Genabeek Postdoc Researcher Caltech SyMO-Chem Takuzo Aida Bert Meijer Prof. Tokyo University Distinguished Professor Riken Group Director Eindhoven University . December 10, 2019 cmeacs.org In association with NASA 2:00 pm – Awards Presentation and Talk on Engineering self-assembly of protein polymers for functional materials. Seunghyun Sim – Proteins are monodisperse polymers that fold into a specific nanoscale structure. These state-of-art nanoscale machineries exert highly precise mechanical motions and process environmental inputs by the combination of allosteric effects. My research focuses on finding interdisciplinary solutions for designing a library of functional protein materials, mainly from the principles in supramolecular chemistry, molecular biology, and polymer science. In this symposium, I will discuss the design of protein-based macromolecular architectures in multiple dimensions, understanding their property for the therapeutic application, and in situ synthesis of extracellular protein network by living organisms for generating engineered living materials. Profile – Seunghyun Sim graduated from Seoul National University with B.S. degrees in Chemistry and Biological Sciences in 2012. She conducted her doctoral research with professor Takuzo Aida at the University of Tokyo and received her M.Eng. and Ph.D. in 2017. Her thesis work focused on engineering protein-based supramolecular nanostructures and functions. She is currently a postdoctoral fellow at California Institute of Technology in the lab of professor David Tirrell. -

Excise the World of Intoxication

REVENUE EARNING DEPARTMENTS - EXCISE THE WORLD OF INTOXICATION Alcoholic Drinks: Previous Era Alcoholic Drinks: History Alcoholic drinks made from fermented food stuffs have been in used from ancient times. Fermented drinks antedate distilled spirits, though the process of distillation was known to the ancient Assyrians, Chinese, Greeks and Hindus. The manufacture, sale and consumption of intoxicating liquor have been subject to state control from very early times in India. Alcoholic Drinks - in India Drinks were known in India in Vedik and Post Vedik times. The celestial drink of Vedik period is known as Soma. • Sura is fermented beverage during Athavana Veda period. Alcoholic Drinks – Making in different periods • Pulasty’s • Kautilya’s Alcohol making : Pulasty’s Period • Panasa( Liquor from Jack fruit) • Madhvika (Mohowa Liquor) • Draksha (Liquor from Grape) • Saira (Long pepper Liquor) • Madhuka (Honey Liquor) • Arishta (Soap Berry Liquor) • Khajura (Date Liquor) • Maireya (Rum) • Tala (Palm Liquor) • Narikelaja (Coconut Liquor) • Sikhshava (Cane Liquor) • Sura / Arrack. Alcohol making : Kautilya’s Period • Medaka • Prasanna • Asava • Arisha • Maireya • Madhu Indian Alcoholic Beverages Indian Alcoholic Beverages : Types • Traditional Alcoholic Beverages • Non- Traditional Alcoholic Beverages Traditional Alcoholic Beverages • Feni • Hudamaba • Palm Wine • Handia • Hariya • Kaidum • Desidaru • Sonti • Kodo Kojaanr • Apo / Apung • Sulai • Laopani • Arrack • Sundakanji • Luqdi • Bangla • Sura • Mahua • Bitchi • Tati Kallu • Mahuli • Chhaang • Tharra • Mandia Pej • Cholai • Zawlaidi • Manri • Chuak • Zutho • Pendha • Sekmai Non - Traditional Alcoholic Beverages • Indian Beer • Indian Brandy • Indian made Foreign Liquor • Indian Rum • Indian Vodka • Indian Wine Alcoholic Beverages Alcohol Beverages : as a source of Revenue Alcoholic beverages received to distinctions with the advent of the British Rule in India. -

Decent Work for Tea Plantation Workers in Assam Constraints

PROJECT REPORT Decent Work for Tea Plantation Workers in Assam Constraints, Challenges and Prospects Conducted by Debdulal Saha Chitrasen Bhue Rajdeep Singha Tata Institute of Social Sciences Guwahati Campus October 2019 Research Team Principal Investigator Dr Debdulal Saha Core Team Dr Debdulal Saha Dr Chitrasen Bhue Dr Rajdeep Singha Research Assistants Mr Debajit Rajbangshi Mr Syed Parvez Ahmed Ms Juri Baruah Enumerators Ms Majani Das Ms Puspanjali Kalindi Mr Sourin Deb Mr Partha Paul Ms Ananya Saikia Ms Shatabdi Borpatra Gohain Mr Prabhat Konwar Mr Oskar Hazarika Photography Mr Debajit Rajbangshi Ms. Ananya Saikia Dr. Debdulal Saha Contents List of Abbreviations ii List of Figures iv List of Tables vi List of Map, Boxes and Pictures vii Acknowledgements viii Executive Summary ix 1. Tea Industry in India: Overview and Context 1 2. Infrastructure in Tea Estates: Health Centre, Childcare, Schools and Connectivity 16 3. State of Labour: Wage and Workplace 34 4. Consumption Expenditure and Income: Inequality and Deficits 55 5. Living against Odds 70 6. Socio-Economic Insecurity and Vulnerability 88 7. Bridging the Gaps: Conclusion and Recommendations 97 Glossary 102 References 103 i Abbreviations AAY Antyodaya Anna Yojna ABCMS Akhil Bhartiya Cha Mazdoor Sangha ACKS Assam Chah Karmachari Sangha ACMS Assam Chah Mazdoor Sangha APL Above Poverty Line APLR Assam Plantation Labour Rules ATPA Assam Tea Planters’ Association BCP Bharatiya Cha Parishad BLF Bought Leaf Factories BMS Bharatiya Mazdoor Sangha BPL Below Poverty Line CAGR Compound Annual -

Of 16 in the DISTRICT COURT of the UNITED STATES for THE

IN THE DISTRICT COURT OF THE UNITED STATES FOR THE MIDDLE DISTRICT OF ALABAMA EASTERN DIVISION MRINAL THAKUR, ) ) Plaintiff, ) ) v. ) CASE NO. 3:16-cv-811-TFM ) [wo] ERIC BETZIG, et. al., ) ) Defendants, ) MEMORANDUM OPINION AND ORDER This action is assigned to the undersigned magistrate judge to conduct all proceedings and order entry of judgment by consent of all the parties pursuant to 28 U.S.C. § 636(c). See Docs. 24, 25. Now pending before the Court is Defendants’ Motion to Dismiss and brief in support (Docs. 12-13, filed December 5, 2016). After a careful review of all the written pleadings, motions, responses, and replies, the Court GRANTS Plaintiff’s alternative requests (Docs. 20 and 29) that the case be severed and transferred to the more appropriate venues in the Northern District of California and District of Maryland pursuant to 28 U.S.C. § 1404(a). The motion to dismiss pursuant to Fed. R. Civ. P. 12(b)(2) (Doc. 12) is DENIED as moot. Any remaining motions including the remaining portion of the motion to dismiss for 12(b)(6) (Doc. 12) remain pending for the determination of the transferee courts. I. JURISDICTION Plaintiff asserts claims pursuant to 28 U.S.C. § 1332 (diversity jurisdiction). Specifically, that the citizenship of all parties is diverse and the amount in controversy exceeds $75,000.00. He asserts state law claims for (1) fraud and suppression, (2) Negligence, (3) Wantonness, (4) Negligent and/or Wanton Training, Supervision, and Monitoring, (5) Unjust Enrichment, and (6) Page 1 of 16 Conversion. See Doc. -

Decent Work for Tea Plantation Workers in Assam

PROJECT REPORT Decent Work for Tea Plantation Workers in Assam Constraints, Challenges and Prospects Conducted by Debdulal Saha Chitrasen Bhue Rajdeep Singha Tata Institute of Social Sciences Guwahati Campus October 2019 Research Team Principal Investigator Dr Debdulal Saha Core Team Dr Debdulal Saha Dr Chitrasen Bhue Dr Rajdeep Singha Research Assistants Mr Debajit Rajbangshi Mr Syed Parvez Ahmed Ms Juri Baruah Enumerators Ms Majani Das Ms Puspanjali Kalindi Mr Sourin Deb Mr Partha Paul Ms Ananya Saikia Ms Shatabdi Borpatra Gohain Mr Prabhat Konwar Mr Oskar Hazarika Photography Mr Debajit Rajbangshi Ms. Ananya Saikia Dr. Debdulal Saha Contents List of Abbreviations ii List of Figures iv List of Tables vi List of Map, Boxes and Pictures vii Acknowledgements viii Executive Summary ix 1. Tea Industry in India: Overview and Context 1 2. Infrastructure in Tea Estates: Health Centre, Childcare, Schools and Connectivity 16 3. State of Labour: Wage and Workplace 34 4. Consumption Expenditure and Income: Inequality and Deficits 55 5. Living against Odds 70 6. Socio-Economic Insecurity and Vulnerability 88 7. Bridging the Gaps: Conclusion and Recommendations 97 Glossary 102 References 103 i Abbreviations AAY Antyodaya Anna Yojna ABCMS Akhil Bhartiya Cha Mazdoor Sangha ACKS Assam Chah Karmachari Sangha ACMS Assam Chah Mazdoor Sangha APL Above Poverty Line APLR Assam Plantation Labour Rules ATPA Assam Tea Planters’ Association BCP Bharatiya Cha Parishad BLF Bought Leaf Factories BMS Bharatiya Mazdoor Sangha BPL Below Poverty Line CAGR Compound Annual -

Fueled by a Desire to Help Biologists, Eric Betzig Has Spent the Last Decade Devising Ways to Break Free from the Constraints of Defyinglight Microscopy—

Fueled by a desire to help biologists, Eric Betzig has spent the last decade devising ways to break free from the constraints of defyinglight microscopy— work recognized with the limits 2014 Nobel Prize in Chemistry. by jennifer michalowski illustration by markos kay 14 Winter 2015 / HHMI Bulletin for their development of super-resolved fuorescence microscopy—methods of visualizing objects so small that, until recently, distinguishing them with a light microscope was considered a feat that would defy the fundamental laws of physics. Living Color Betzig helped launch a revolution in super-resolution microscopy in 2006, when he developed a method that he and his collaborator Harald Hess called photoactivated localization microscopy, or PALM. The technique creates stunningly detailed images of cells by taking advantage of fluorescent labeling molecules that can be switched on and off with a pulse of light. In PALM, a sample labeled with these fuorescent tags is imaged many times, with a small subset of the fuorescent tags switched on each time. Because just a smattering of molecules is glowing in the image, each one can be pinpointed with precision. Compiling thousands of images yields a picture in which nearly all fuorescently labeled molecules show up as individual and distinct. eric betzig is a physicist and an engineer: he thinks The Janelia campus—where both Betzig and Hess are now in terms of light waves and energy, and when he tinkers group leaders—was still under construction when Betzig first in the lab, it is with lasers and mirrors and beam splitters. learned of the fuorescent probes that would make PALM He’s the first to admit that he is no biologist. -

Nobel Laureates

Nobel Laureates Over the centuries, the Academy has had a number of Nobel Prize winners amongst its members, many of whom were appointed Academicians before they received this prestigious international award. Pieter Zeeman (Physics, 1902) Lord Ernest Rutherford of Nelson (Chemistry, 1908) Guglielmo Marconi (Physics, 1909) Alexis Carrel (Physiology, 1912) Max von Laue (Physics, 1914) Max Planck (Physics, 1918) Niels Bohr (Physics, 1922) Sir Chandrasekhara Venkata Raman (Physics, 1930) Werner Heisenberg (Physics, 1932) Charles Scott Sherrington (Physiology or Medicine, 1932) Paul Dirac and Erwin Schrödinger (Physics, 1933) Thomas Hunt Morgan (Physiology or Medicine, 1933) Sir James Chadwick (Physics, 1935) Peter J.W. Debye (Chemistry, 1936) Victor Francis Hess (Physics, 1936) Corneille Jean François Heymans (Physiology or Medicine, 1938) Leopold Ruzicka (Chemistry, 1939) Edward Adelbert Doisy (Physiology or Medicine, 1943) George Charles de Hevesy (Chemistry, 1943) Otto Hahn (Chemistry, 1944) Sir Alexander Fleming (Physiology, 1945) Artturi Ilmari Virtanen (Chemistry, 1945) Sir Edward Victor Appleton (Physics, 1947) Bernardo Alberto Houssay (Physiology or Medicine, 1947) Arne Wilhelm Kaurin Tiselius (Chemistry, 1948) - 1 - Walter Rudolf Hess (Physiology or Medicine, 1949) Hideki Yukawa (Physics, 1949) Sir Cyril Norman Hinshelwood (Chemistry, 1956) Chen Ning Yang and Tsung-Dao Lee (Physics, 1957) Joshua Lederberg (Physiology, 1958) Severo Ochoa (Physiology or Medicine, 1959) Rudolf Mössbauer (Physics, 1961) Max F. Perutz (Chemistry, 1962) -

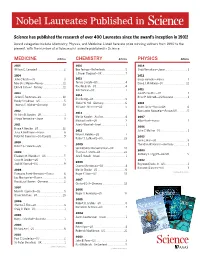

Nobel Laureates Published In

Nobel Laureates Published in Science has published the research of over 400 Laureates since the award’s inception in 1901! Award categories include Chemistry, Physics, and Medicine. Listed here are prize-winning authors from 1990 to the present, with the number of articles each Laureate published in Science. MEDICINE Articles CHEMISTRY Articles PHYSICS Articles 2015 2016 2014 William C. Campbell . 2 Ben Feringa —Netherlands........................5 Shuji Namakura—Japan .......................... 1 J. Fraser Stoddart—UK . 7 2014 2012 John O’Keefe—US...................................3 2015 Serge Haroche—France . 1 May-Britt Moser—Norway .......................11 Tomas Lindahl—US .................................4 David J. Wineland—US ............................12 Edvard I. Moser—Norway ........................11 Paul Modrich—US...................................4 Aziz Sancar—US.....................................7 2011 2013 Saul Perlmutter—US ............................... 1 2014 James E. Rothman—US ......................... 10 Brian P. Schmidt—US/Australia ................. 1 Eric Betzig—US ......................................9 Randy Schekman—US.............................5 Stefan W. Hell—Germany..........................6 2010 Thomas C. Südhof—Germany ..................13 William E. Moerner—US ...........................5 Andre Geim—Russia/UK..........................6 2012 Konstantin Novoselov—Russia/UK ............5 2013 Sir John B. Gurdon—UK . 1 Martin Karplas—Austria...........................4 2007 Shinya Yamanaka—Japan.........................3 -

HHMI Bulletin Winter 2013: First Responders (Full Issue in PDF)

HHMI BULLETIN W INTER ’13 VOL.26 • NO.01 • 4000 Jones Bridge Road Chevy Chase, Maryland 20815-6789 Hughes Medical Institute Howard www.hhmi.org Address Service Requested In This Issue: Celebrating Structural Biology Bhatia Builds an Oasis Molecular Motors Grid Locked Our cells often work in near lockstep with each other. During • development, a variety of cells come together in a specific www.hhmi.org arrangement to create complex organs such as the liver. By fabricating an encapsulated, 3-dimensional matrix of live endothelial (purple) and hepatocyte (teal) cells, as seen in this magnified snapshot, Sangeeta Bhatia can study how spatial relationships and organization impact cell behavior and, ultimately, liver function. In the long term, Bhatia hopes to build engineered tissues useful for organ repair or replacement. Read about Bhatia and her lab team’s work in “A Happy Oasis,” on page 26. FIRST RESPONDERS Robert Lefkowitz revealed a family of vol. vol. cell receptors involved in most body 26 processes—including fight or flight—and Bhatia Lab / no. no. / earned a Nobel Prize. 01 OBSERVATIONS REALLY INTO MUSCLES Whether in a leaping frog, a charging elephant, or an Olympic out that the structural changes involved in contraction were still sprinter, what happens inside a contracting muscle was pure completely unknown. At first I planned to obtain X-ray patterns from mystery until the mid-20th century. Thanks to the advent of x-ray individual A-bands, to identify the additional material present there. I crystallography and other tools that revealed muscle filaments and hoped to do this using some arthropod or insect muscles that have associated proteins, scientists began to get an inkling of what particularly long A-bands, or even using the organism Anoploductylus moves muscles. -

Petroleum and Economic Development of Iraq

University of Montana ScholarWorks at University of Montana Graduate Student Theses, Dissertations, & Professional Papers Graduate School 1963 Petroleum and economic development of Iraq Taha Hussain al-Sabea The University of Montana Follow this and additional works at: https://scholarworks.umt.edu/etd Let us know how access to this document benefits ou.y Recommended Citation al-Sabea, Taha Hussain, "Petroleum and economic development of Iraq" (1963). Graduate Student Theses, Dissertations, & Professional Papers. 8661. https://scholarworks.umt.edu/etd/8661 This Thesis is brought to you for free and open access by the Graduate School at ScholarWorks at University of Montana. It has been accepted for inclusion in Graduate Student Theses, Dissertations, & Professional Papers by an authorized administrator of ScholarWorks at University of Montana. For more information, please contact [email protected]. PETROLEUM AND ECONOMIC DEVELOPMENT OF IRAQ by TARA H. AL-SABEA B.A. Baghdad University, I960 Presented in partial fulfillment of the requirements for the degree of Master of Arts MONTANA STATE UNIVERSITY 1963 Approved ctiaarman, B dard/ofsx^lners Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. UMI Number: EP39462 All rights reserved INFORMATION TO ALL USERS The quality of this reproduction is dependent upon the quality of the copy submitted. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if material had to be removed, a note will indicate the deletion. UMI* OisMTtation Publkhing UMI EP39462 Published by ProQuest LLC (2013). Copyright in the Dissertation held by the Author, Microform Edition © ProQuest LLC. -

Microscopy & Microanalysis Table of Contents

Microscopy & Microanalysis The Official M&M 2017 Proceedings St. Louis, MO, USA • August 6-10, 2017 Table of Contents Title Page i Copyright Page ii MAM Editors iii Copyright Information iv Contents v Welcome from the Society Presidents lxxvii Welcome from the Program Chairs lxxviii Plenary Sessions lxxix Microscopy Society of America lxxxii Microanalysis Society of America lxxxviii International Field Emission Society xcii Meeting Awards xciii Abstracts 2 Author Index 2324 Downloaded from https://www.cambridge.org/core. IP address: 170.106.33.42, on 28 Sep 2021 at 18:43:38, subject to the Cambridge Core terms of use, available at https://www.cambridge.org/core/termsv . https://doi.org/10.1017/S1431927617012284 2017 Plenary Session 2 Imaging Cellular Structure and Dynamics from Molecules to Organisms; E Betzig 4 Detecting Massive Black Holes via Attometry: Gravitational Wave Astronomy Begins; K Riles Analytical and Instrumentation Science Symposia Vendor Symposium 6 Probing the Element Distribution at the Organic-Inorganic Interface Using EDS; M Falke, A Kaeppel, B Yu, T Salge, R Terborg 8 ZEISS Crossbeam – Advancing Capabilities in High Throughput 3D Analysis and Sample Preparation; T Volkenandt, F Pérez-Willard, M Rauscher, PM Anger 10 A Dedicated Backscattered Electron Detector for High Speed Imaging and Defect Inspection; M Schmid, A Liebel, R Lackner, D Steigenhöfer, A Niculae, H Soltau 12 Large Area 3D Structural Characterization by Serial Sectioning Using Broad Ion Beam Argon Ion Milling; P NOWAKOWSKI, ML Ray, PE Fischione 14 New