Camera Processing Pipeline Kari Pulli VP Computational Imaging Light Imaging Without Optics?

Recommended publications

Breaking Down the “Cosine Fourth Power Law”

Breaking Down The “Cosine Fourth Power Law” By Ronian Siew, inopticalsolutions.com Why are the corners of the field of view in the image captured by a camera lens usually darker than the center? For one thing, camera lenses by design often introduce “vignetting” into the image, which is the deliberate clipping of rays at the corners of the field of view in order to cut away excessive lens aberrations. But, it is also known that corner areas in an image can get dark even without vignetting, due in part to the so-called “cosine fourth power law.” 1 According to this “law,” when a lens projects the image of a uniform source onto a screen, in the absence of vignetting, the illumination flux density (i.e., the optical power per unit area) across the screen from the center to the edge varies according to the fourth power of the cosine of the angle between the optic axis and the oblique ray striking the screen. Actually, optical designers know this “law” does not apply generally to all lens conditions.2 – 10 Fundamental principles of optical radiative flux transfer in lens systems allow one to tune the illumination distribution across the image by varying lens design characteristics. In this article, we take a tour into the fascinating physics governing the illumination of images in lens systems. Relative Illumination In Lens Systems In lens design, one characterizes the illumination distribution across the screen where the image resides in terms of a quantity known as the lens’ relative illumination — the ratio of the irradiance (i.e., the power per unit area) at any off-axis position of the image to the irradiance at the center of the image. -
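As a rough illustration of the "law" described in the excerpt above, the sketch below evaluates the idealized cosine-fourth falloff of relative illumination with field angle. The function name is hypothetical, and the model assumes no vignetting and a lens for which the "law" actually holds, which the excerpt notes is not true in general.

```python
import math


def cos4_relative_illumination(field_angle_deg: float) -> float:
    """Relative illumination predicted by the idealized cosine-fourth 'law'.

    field_angle_deg is the angle between the optic axis and the oblique ray
    striking the screen. The result is the off-axis irradiance divided by the
    on-axis irradiance. Assumption: no vignetting and no design features that
    alter the illumination distribution.
    """
    theta = math.radians(field_angle_deg)
    return math.cos(theta) ** 4


if __name__ == "__main__":
    for angle in (0, 10, 20, 30, 40):
        ratio = cos4_relative_illumination(angle)
        print(f"{angle:2d} deg -> relative illumination {ratio:.3f}")
```

Under this idealized model, the irradiance at a field angle of 30 degrees is a little over half of its on-axis value, which is why the corners darken even without deliberate vignetting.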

Sample Manuscript Showing Specifications and Style

Information capacity: a measure of potential image quality of a digital camera Frédéric Cao, Frédéric Guichard, Hervé Hornung DxO Labs, 3 rue Nationale, 92100 Boulogne Billancourt, FRANCE ABSTRACT The aim of the paper is to define an objective measurement for evaluating the performance of a digital camera. The challenge is to mix different flaws involving geometry (as distortion or lateral chromatic aberrations), light (as luminance and color shading), or statistical phenomena (as noise). We introduce the concept of information capacity that accounts for all the main defects that can be observed in digital images, and that can be due either to the optics or to the sensor. The information capacity describes the potential of the camera to produce good images. In particular, digital processing can correct some flaws (like distortion). Our definition of information takes possible correction into account and the fact that processing can neither retrieve lost information nor create some. This paper extends some of our previous work where the information capacity was only defined for RAW sensors. The concept is extended for cameras with optical defects as distortion, lateral and longitudinal chromatic aberration or lens shading. Keywords: digital photography, image quality evaluation, optical aberration, information capacity, camera performance database 1. INTRODUCTION The evaluation of a digital camera is a key factor for customers, whether they are vendors or final customers. It relies on many different factors as the presence or not of some functionalities, ergonomics, price, or image quality. Each separate criterion is itself quite complex to evaluate, and depends on many different factors. The case of image quality is a good illustration of this topic.

Invention of Digital Photograph

Invention of Digital photograph Digital photography uses cameras containing arrays of electronic photodetectors to capture images focused by a lens, as opposed to an exposure on photographic film. The captured images are digitized and stored as a computer file ready for further digital processing, viewing, electronic publishing, or digital printing. Until the advent of such technology, photographs were made by exposing light sensitive photographic film and paper, which was processed in liquid chemical solutions to develop and stabilize the image. Digital photographs are typically created solely by computer-based photoelectric and mechanical techniques, without wet bath chemical processing. The first consumer digital cameras were marketed in the late 1990s.[1] Professionals gravitated to digital slowly, and were won over when their professional work required using digital files to fulfill the demands of employers and/or clients, for faster turn- around than conventional methods would allow.[2] Starting around 2000, digital cameras were incorporated in cell phones and in the following years, cell phone cameras became widespread, particularly due to their connectivity to social media websites and email. Since 2010, the digital point-and-shoot and DSLR formats have also seen competition from the mirrorless digital camera format, which typically provides better image quality than the point-and-shoot or cell phone formats but comes in a smaller size and shape than the typical DSLR. Many mirrorless cameras accept interchangeable lenses and have advanced features through an electronic viewfinder, which replaces the through-the-lens finder image of the SLR format. While digital photography has only relatively recently become mainstream, the late 20th century saw many small developments leading to its creation. -

Section 10 Vignetting

OPTI-502 Optical Design and Instrumentation I © Copyright 2019 John E. Greivenkamp

Section 10 Vignetting

Vignetting: The stop determines the size of the bundle of rays that propagates through the system for an on-axis object. As the object height increases, one of the other apertures in the system (such as a lens clear aperture) may limit part or all of the bundle of rays. This is known as vignetting. [Figure: on-axis ray bundle and off-axis ray bundle passing the stop and a second aperture, with the vignetted rays marked]

Ray Bundle – On-Axis: The ray bundle for an on-axis object is a rotationally-symmetric spindle made up of sections of right circular cones. Each cone section is defined by the pupil and the object or image point in that optical space. The individual cone sections match up at the surfaces and elements. At any z, the cross section of the bundle is circular, and the radius of the bundle is the marginal ray value. The ray bundle is centered on the optical axis. [Figure: on-axis ray bundle through stop and pupil, centered on y = 0]

Ray Bundle – Off Axis: For an off-axis object point, the ray bundle skews, and is comprised of sections of skew circular cones which are still defined by the pupil and object or image point in that optical space.

Alternative Processes a Few Essentials Introduction

Alternative Processes A Few Essentials Introduction Chapter 1. Capture Techniques From Alternative Photographic Processes: Crafting Handmade Images Chapter 2. Digital Negatives for Gum From Gum Printing: A Step-by-Step Manual, Highlighting Artists and Their Creative Practice Chapter 3. Fugitive and Not-So-Fugitive Printing From Jill Enfield's Guide to Photographic Alternative Processes: Popular Historical and Contemporary Techniques Featured Books on Alternative Process Photography from Routledge | Focal Press Use discount code FLR40 to take 20% off all Routledge titles. Simply visit www.routledge.com/photography to browse and purchase books of interest. Introduction A young art though it may be, photography already has a rich history. As media moves full steam ahead into the digital revolution and beyond, it is a natural instinct to look back at where we've come from. With more artists rediscovering photography's historical processes, the practice of photography continually redefines and re-contextualizes itself. The creative possibilities of these historical processes are endless, spawning a growing arena of practice - alternative processes, which combines past, present and everything in between, in the creation of art. This collection is an introduction to and a sample of these processes and possibilities. With Alternative Photographic Processes, Brady Wilks demonstrates techniques for manipulating photographs, negatives and prints, emphasizing the "hand-made" touch. Bridging the gap between the simplest of processes to the most complex, Wilks' introduction demonstrates image-manipulation pre-capture, allowing the artist to get intimate with his or her images long before development. In the newly-released Gum Printing, leading gum expert Christina Z.

Multi-Agent Recognition System Based on Object Based Image Analysis Using Worldview-2

intervals, between −3 and +3, for a total of 19 different EVs captured (see Table 1). WB variable images were captured utilizing the camera presets listed in Table 1 at the EVs of 0, −1 and +1, for a total of 27 images. Variable light metering tests were conducted using the “center weighted” light meter setting, at the EVs listed in Table 1, for a total of seven images. The variable ISO tests were carried out at the EVs of −1, 0, and +1, using the ISO values listed in Table 1, for a total of 18 images. The variable aperture tests were conducted using 19 different apertures ranging from f/2 to f/16, utilizing a different camera system from all the other tests, with ISO = 50, f = 105 mm, four different degrees of onboard vignetting control, and EVs of −⅓, ⅔, 0, and +⅔, for a total of 228 images.

To mimic airborne imagery collection from a terrestrial location, we attempted to approximate an equivalent path radiance to match a range of altitudes (750 to 1500 m) above ground level (AGL), that we commonly use as platform altitudes for our simulated damage assessment research. Optical depth increases horizontally along with the zenith angle, from a factor of one at zenith angle 0°, to around 40° at zenith angle 90° (Allen, 1973), and vertically, with a zenith angle of 0°, the magnitude of an object at the top of the atmosphere decreases by 0.28 at sea level, 0.24 at 500 m, and 0.21 at 1,000 m above sea level, to 0 at around 100 km (Green, 1992). With 25 percent of atmospheric scattering due to atmosphere located

Holographic Optics for Thin and Lightweight Virtual Reality

Holographic Optics for Thin and Lightweight Virtual Reality ANDREW MAIMONE, Facebook Reality Labs JUNREN WANG, Facebook Reality Labs

Fig. 1. Left: Photo of full color holographic display in benchtop form factor. Center: Prototype VR display in sunglasses-like form factor with display thickness of 8.9 mm. Driving electronics and light sources are external. Right: Photo of content displayed on prototype in center image. Car scenes by komba/Shutterstock.

We present a class of display designs combining holographic optics, directional backlighting, laser illumination, and polarization-based optical folding to achieve thin, lightweight, and high performance near-eye displays for virtual reality. Several design alternatives are proposed, compared, and experimentally validated as prototypes. Using only thin, flat films as optical components, we demonstrate VR displays with thicknesses of less than 9 mm, fields of view of over 90° horizontally, and form factors approaching sunglasses. In a benchtop form factor, we also demonstrate a full color display using wavelength-multiplexed holographic lenses that uses laser illumination to provide a large gamut and highly saturated color.

small text near the limit of human visual acuity. This use case also brings VR out of the home and in to work and public spaces where socially acceptable sunglasses and eyeglasses form factors prevail. VR has made good progress in the past few years, and entirely self-contained head-worn systems are now commercially available. However, current headsets still have box-like form factors and provide only a fraction of the resolution of the human eye. Emerging optical design techniques, such as polarization-based optical folding,

A New Era in Optics

A NEW ERA IN OPTICS EXPERIENCE AN UNPRECEDENTED LEVEL OF PERFORMANCE NIKKOR Z Lens Category Map New-dimensional S-Line Other lenses optical performance realized NIKKOR Z NIKKOR Z NIKKOR Z Lenses other than the with the Z mount 14-30mm f/4 S 24-70mm f/2.8 S 24-70mm f/4 S S-Line series will be announced at a later date. NIKKOR Z 58mm f/0.95 S Noct The title of the S-Line is reserved only for NIKKOR Z lenses that have cleared newly NIKKOR Z NIKKOR Z established standards in design principles and quality control that are even stricter 35mm f/1.8 S 50mm f/1.8 S than Nikon’s conventional standards. The “S” can be read as representing words such as “Superior”, “Special” and “Sophisticated.” Top of the S-Line model: the culmination Versatile lenses offering a new dimension Well-balanced, of the NIKKOR quest for groundbreaking in optical performance high-performance lenses Whichever model you choose, every S-Line lens achieves richly detailed image expression optical performance These lenses bring a higher level of These lenses strike an optimum delivering a sense of reality in both still shooting and movie creation. It offers a new This lens has the ability to depict subjects imaging power, achieving superior balance between advanced in ways that have never been seen reproduction with high resolution even at functionality, compactness dimension in optical performance, including overwhelming resolution, bringing fresh before, including by rendering them with the periphery of the image, and utilizing and cost effectiveness, while an extremely shallow depth of field. -

A High Full Well Capacity CMOS Image Sensor for Space Applications

sensors Article A High Full Well Capacity CMOS Image Sensor for Space Applications Woo-Tae Kim 1 , Cheonwi Park 1, Hyunkeun Lee 1 , Ilseop Lee 2 and Byung-Geun Lee 1,* 1 School of Electrical Engineering and Computer Science, Gwangju Institute of Science and Technology, Gwangju 61005, Korea; [email protected] (W.-T.K.); [email protected] (C.P.); [email protected] (H.L.) 2 Korea Aerospace Research Institute, Daejeon 34133, Korea; [email protected] * Correspondence: [email protected]; Tel.: +82-62-715-3231 Received: 24 January 2019; Accepted: 26 March 2019; Published: 28 March 2019 Abstract: This paper presents a high full well capacity (FWC) CMOS image sensor (CIS) for space applications. The proposed pixel design effectively increases the FWC without inducing overflow of photo-generated charge in a limited pixel area. An MOS capacitor is integrated in a pixel and accumulated charges in a photodiode are transferred to the in-pixel capacitor multiple times depending on the maximum incident light intensity. In addition, the modulation transfer function (MTF) and radiation damage effect on the pixel, which are especially important for space applications, are studied and analyzed through fabrication of the CIS. The CIS was fabricated using a 0.11 µm 1-poly 4-metal CIS process to demonstrate the proposed techniques and pixel design. A measured FWC of 103,448 electrons and MTF improvement of 300% are achieved with 6.5 µm pixel pitch. Keywords: CMOS image sensors; wide dynamic range; multiple charge transfer; space applications; radiation damage effects 1. Introduction Imaging devices are essential components in the space environment for a range of applications including earth observation, star trackers on satellites, lander and rover cameras [1]. -

Irix 45Mm F1.4

Explore the magic of medium format photography with the Irix 45mm f/1.4 lens equipped with the native mount for Fujifilm GFX cameras! The Irix Lens brand introduces a standard 45mm wide-angle lens with a dedicated mount that can be used with Fujifilm GFX series cameras equipped with medium format sensors. Digital medium format is a nod to traditional analog photography and a return to the roots that defined the vividness and quality of the image captured in photos. Today, the Irix brand offers creators, who seek iconic image quality combined with mystical vividness, a tool that will allow them to realize their wildest creative visions - the Irix 45mm f / 1.4 G-mount lens. It is an innovative product because as a precursor, it paves the way for standard wide-angle lenses with low aperture, which are able to cope with medium format sensors. The maximum aperture value of f/1.4 and the sensor size of Fujifilm GFX series cameras ensure not only a shallow depth of field, but also smooth transitions between individual focus areas and a high dynamic range. The wide f/1.4 aperture enables you to capture a clear background separation and work in low light conditions, and thanks to the excellent optical performance, which consists of high sharpness, negligible amount of chromatic aberration and great microcontrast - this lens can successfully become the most commonly used accessory that will help you create picturesque shots. The Irix 45mm f / 1.4 GFX is a professional lens designed for FujiFilm GFX cameras. It has a high-quality construction, based on the knowledge of Irix Lens engineers gained during the design and production of full-frame lenses. -

Lecture Notes 3 Charge-Coupled Devices (CCDs) – Part II

Lecture Notes 3 Charge-Coupled Devices (CCDs) – Part II (EE 392B)

• CCD array architectures and pixel layout
  ◦ One-dimensional CCD array
  ◦ Two-dimensional CCD array
• Smear
• Readout circuits
• Anti-blooming, electronic shuttering, charge reset operation
• Window of interest, pixel binning
• Pinned photodiode

One-Dimensional (Linear) CCD Operation [Figure from A. Theuwissen, "Solid State Imaging with Charge-Coupled Devices," Kluwer (1995)]

• A line of photodiodes or photogates is used for photodetection
• After integration, charge from the entire row is transferred in parallel to the horizontal CCD (HCCD) through transfer gates
• New integration period begins while charge packets are transferred through the HCCD (serial transfer) to the output readout circuit (to be discussed later)
• The scene can be mechanically scanned at a speed commensurate with the pixel size in the vertical direction to obtain 2D imaging
• Applications: scanners, scan-and-print photocopiers, fax machines, barcode readers, silver halide film digitization, DNA sequencing
• Advantages: low cost (small chip size)

Two-Dimensional (Area) CCD

• Frame transfer CCD (FT-CCD)
  ◦ Full frame CCD
• Interline transfer CCD (IL-CCD)
• Frame-interline transfer CCD (FIT-CCD)
• Time-delay-and-integration CCD (TDI-CCD)

Frame Transfer CCD [Figure: light-sensitive CCD array, frame-store CCD array, horizontal CCD, and output amplifier; timing diagram of operation over time: integration, vertical shift, horizontal shift]

Pixel Layout – FT-CCD D. N. Nichols, W. Chang, B. C. Burkey, E. G. Stevens, E. A. Trabka, D.
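The linear CCD readout described in the notes above (parallel transfer into the HCCD, then serial shifting to the output) can be sketched roughly as follows. This is a toy model, not the notes' own code: the function name is hypothetical, charge transfer is assumed to be lossless, and the output node is assumed to sit at index 0 of the register.

```python
def read_out_line(photosite_charge):
    """Simulate one readout cycle of an idealized linear CCD.

    photosite_charge: list of integrated charge packets, one per photosite.
    Returns (samples, photosites_after_transfer).
    Assumptions: perfect charge transfer efficiency; output node at index 0.
    """
    n = len(photosite_charge)

    # Parallel transfer: every photosite moves its packet into the
    # horizontal CCD (HCCD) in a single step through the transfer gates.
    hccd = list(photosite_charge)
    # The photosites are now empty, so a new integration period can begin
    # while the HCCD is being shifted out.
    photosites = [0] * n

    # Serial transfer: on each clock cycle the packet at the output end is
    # sampled and the remaining packets shift one stage toward the output.
    samples = []
    for _ in range(n):
        samples.append(hccd[0])
        hccd = hccd[1:] + [0]

    return samples, photosites


if __name__ == "__main__":
    samples, photosites = read_out_line([5, 0, 12, 7])
    print("serial output:", samples)
    print("photosites after transfer:", photosites)
```

The model needs one clock cycle per photosite to empty the register, which is the serial bottleneck that the parallel transfer step hides from the next integration period.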

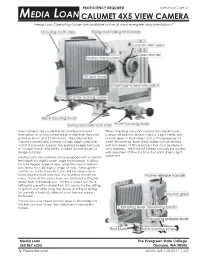

Basic View Camera

PROFICIENCY REQUIRED Operating Guide for MEDIA LOAN CALUMET 4X5 VIEW CAMERA Media Loan Operating Guides are available online at www.evergreen.edu/medialoan/

View cameras are usually tripod mounted and lend themselves to a more contemplative style than the more portable 35mm and 2 1/4 formats. The Calumet 4x5 Standard model view camera is a lightweight, portable tool that produces superior, fine grained images because of its large format and ability to adjust for a minimum of image distortion.

When checking out a 4x5 camera from Media Loan, patrons will need to obtain a tripod, a light meter, one or both types of film holders, and a changing bag for sheet film loading. Each sheet holder can be loaded with two sheets of film, a process that must be done in total darkness. The Polaroid holders can only be loaded with one sheet of film at a time, but each sheet is light protected.

Media Loan's 4x5 cameras come equipped with a 150mm lens, which is a slightly wider angle than normal. It allows for a 44 degree angle of view, while the normal 165mm lens allows for a 40 degree angle of view. Although the controls on each of Media Loan's 4x5 lenses may vary in terms of placement and style, the functions remain the same. Some of the lenses have an additional setting for strobe flash or flashbulb use. On these lenses, use the X setting for use with a strobe flash (it's crucial for the setting to remain on X while using the studio) and the M setting for use with a flashbulb (Media Loan does not support flashbulbs).
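The angle-of-view figures quoted in the guide can be checked with the usual thin-lens relation, angle = 2·atan(d / 2f), for a lens focused at infinity. The sketch below is only an illustration: the function name is hypothetical, and the 120 mm frame dimension (roughly the long side of the usable image area on 4x5 sheet film) is an assumption that the guide does not state.

```python
import math


def angle_of_view_deg(frame_dim_mm: float, focal_length_mm: float) -> float:
    """Angle of view across one frame dimension, lens focused at infinity."""
    return math.degrees(2 * math.atan(frame_dim_mm / (2 * focal_length_mm)))


# Assumption: about 120 mm of usable image width on a 4x5 sheet.
FRAME_LONG_SIDE_MM = 120.0

if __name__ == "__main__":
    for focal_length in (150, 165):
        angle = angle_of_view_deg(FRAME_LONG_SIDE_MM, focal_length)
        print(f"{focal_length} mm lens: about {angle:.1f} degrees")
```

With that assumed frame dimension, the 150mm and 165mm lenses come out at roughly 44 and 40 degrees, in line with the guide.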