The Ubiquity of Large Graphs and Surprising Challenges of Graph Processing: Extended Survey

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Gephi Tools for Network Analysis and Visualization

Frontiers of Network Science, Fall 2018. Class 8: Introduction to Gephi, tools for network analysis and visualization. Boleslaw Szymanski. Class plan, main topics: overview of tools for network analysis and visualization; installing and using Gephi; Gephi hands-on labs. Tools overview (listed alphabetically). Tools for network analysis and visualization differ along four dimensions. Computing model and interface: desktop GUI applications; API/code libraries and Web services; Web GUI front-ends (cloud, distributed, HPC). Extensibility model: only by the original developers, or by other users/developers (add-ins, modules, additional packages, etc.). Source availability model: open-source or closed-source. Business model: free of charge or commercial. CINET (CyberInfrastructure for NETwork science): accessed via a Web-based portal (http://cinet.vbi.vt.edu/granite/granite.html); supported by grants, with no charge for end users; aims to provide researchers, analysts, and educators interested in Network Science with an easy-to-use cyber-environment that is accessible from their desktop and integrates into their daily work; users can contribute new networks, data, algorithms, hardware, and research results; primarily for research, teaching, and collaboration; no programming experience is required. Cytoscape (Network Data Integration, Analysis, and Visualization): a standalone GUI application

Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: a Social Network Analysis

Fontenele et al., J Inform Tech Softw Eng 2014, 4:2. DOI: 10.4172/2165-7866.1000134. ISSN: 2165-7866. Journal of Information Technology & Software Engineering. Research Article, Open Access. Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: A Social Network Analysis Approach. Fontenele MP (1,*), Sampaio RB (2), da Silva AIB (2), Fernandes JHC (2) and Sun L (1). (1) University of Reading, School of Systems Engineering, Whiteknights, Reading, RG6 6AH, United Kingdom. (2) Universidade de Brasília, Campus Universitário Darcy Ribeiro, Brasília, DF, 70910-900, Brasil. Abstract: This paper describes an application of Social Network Analysis methods for identification of knowledge demands in public organisations. Affiliation networks established in a postgraduate programme were analysed. The course was executed in a distance education mode and its students worked on public agencies. Relations established among course participants were mediated through a virtual learning environment using Moodle. Data available in Moodle may be extracted using knowledge discovery in databases techniques. Potential degrees of closeness existing among different organisations and among researched subjects were assessed. This suggests how organisations could cooperate for knowledge management and also how to identify their common interests. The study points out that closeness among organisations and research topics may be assessed through affiliation networks. This opens up opportunities for applying knowledge management between organisations and creating communities of practice.

A Comparative Analysis of Large-Scale Network Visualization Tools

A Comparative Analysis of Large-scale Network Visualization Tools. Md Abdul Motaleb Faysal and Shaikh Arifuzzaman, Department of Computer Science, University of New Orleans, New Orleans, LA 70148, USA. Email: [email protected], [email protected]

Abstract: Network (Graph) is a powerful abstraction for representing underlying relations and structures in large complex systems. Network visualization provides a convenient way to explore and study such structures and reveal useful insights. There exist several network visualization tools; however, these vary in terms of scalability, analytics feature, and user-friendliness. Due to the huge growth of social, biological, and other scientific data, the corresponding network data is also large. Visualizing such large network poses another level of difficulty. In this paper, we identify several popular network visualization tools and provide a comparative analysis based on the features and operations these tools support. We demonstrate empirically how those tools scale to large networks. We also provide several case studies of visual analytics on large network data and assess performances of the tools.

Introduction (fragment): scalability for large network analysis. Such study will help a person to decide which tool to use for a specific case based on his need. One such study in [3] compares a couple of tools based on scalability. Comparative studies on other visualization metrics should be conducted to let end users have freedom in choosing the specific tool he needs to use. In this paper, we have chosen five widely used visualization tools for an empirical and comparative analysis. Our comparisons are based on factors such as supported file formats, scalability in visualization and analysis, interacting capability with the drawn network, end user-friendliness (e.g., users with no programming back-

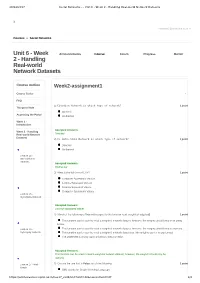

Week 2 - Handling Real-World Network Datasets

28/12/2017. Social Networks, Unit 6, Week 2: Handling Real-world Network Datasets (https://onlinecourses.nptel.ac.in/noc17_cs41/unit?unit=42&assessment=47). Week2-assignment1.

1) Citation Network is which type of network? (1 point) Options: Directed; Undirected. Accepted answer: Directed.

2) Co-authorship Network is which type of network? (1 point) Options: Directed; Undirected. Accepted answer: Undirected.

3) What is the full form of CSV? (1 point) Options: Computer Systematic Version; Comma Separated Version; Comma Separated Values; Computer Systematic Values. Accepted answer: Comma Separated Values.

4) Which of the following is True with respect to the function read_weighted_edgelist()? (1 point) Options: This function can be used to read a weighted network dataset, however, the weights should only be in string format. This function can be used to read a weighted network dataset, however, the weights should only be numeric. This function can be used to read a weighted network dataset and the weights can be in any format. The statement is wrong; such a function does not exist. Accepted answer: This function can be used to read a weighted network dataset, however, the weights should only be numeric.

5) Choose the one that is False out of the following: (1 point) GML stands for Graph Modeling Language. GML stores the data in the form of tags just like XML.
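Question 4 above turns on the point that the weights in a weighted edge list are read as numbers. The following plain-Python sketch illustrates that idea only; it is not NetworkX's implementation of read_weighted_edgelist(), and the helper name `parse_weighted_edgelist`, the whitespace-separated "source target weight" layout, and the sample data are assumptions made for illustration.

```python
def parse_weighted_edgelist(lines):
    """Parse 'source target weight' lines into (source, target, weight) tuples.

    Hypothetical helper for illustration; every weight must be numeric.
    """
    edges = []
    for line_number, line in enumerate(lines, start=1):
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comment lines
        parts = line.split()
        if len(parts) != 3:
            raise ValueError(f"line {line_number}: expected 3 fields, got {len(parts)}")
        source, target, raw_weight = parts
        try:
            weight = float(raw_weight)  # numeric weights only
        except ValueError:
            raise ValueError(
                f"line {line_number}: non-numeric weight {raw_weight!r}"
            ) from None
        edges.append((source, target, weight))
    return edges


# Made-up sample data: two weighted edges and one comment line.
sample = ["# source target weight", "a b 1.5", "b c 2"]
edges = parse_weighted_edgelist(sample)
```

A line such as `a b heavy` is rejected with a `ValueError`, which mirrors the accepted answer: the weight column has to be numeric.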

NetworkX: Network Analysis with Python

NetworkX: Network Analysis with Python. Dionysis Manousakas (special thanks to Petko Georgiev, Tassos Noulas and Salvatore Scellato). Social and Technological Network Data Analytics, Computer Laboratory, University of Cambridge, February 2017. Outline: 1. Introduction to NetworkX; 2. Getting started with Python and NetworkX; 3. Basic network analysis; 4. Writing your own code; 5. Ready for your own analysis! 1. Introduction to NetworkX. Introduction: networks are everywhere… Social networks, mobile phone networks, vehicular flows, Web pages/citations, Internet routing. How can we analyse these networks? Python + NetworkX. Introduction: why Python? Python is an interpreted, general-purpose high-level programming language whose design philosophy emphasises code readability. Advantages (+): clear syntax, indentation; multiple programming paradigms; dynamic typing, late binding; multiple data types and structures; strong on-line community; rich documentation; numerous libraries; expressive features; fast prototyping. Drawbacks (-): can be slow; beware when you are analysing very large networks. Introduction: Python’s Holy Trinity. SciPy is Python’s primary library for mathematical and statistical computing; it contains toolboxes for numeric optimization, signal processing, statistics, and more… NumPy is an extension to include multidimensional arrays and matrices. Both SciPy and NumPy rely on the C library LAPACK for very fast implementation. Matplotlib is the primary plotting library in Python. It supports 2-D and 3-D plotting. All plots are highly customisable and ready for professional publication.

Using Computer Games Techniques for Improving Graph Visualization Efficiency

Poster Abstracts at Eurographics/IEEE-VGTC Symposium on Visualization (2010). Using Computer Games Techniques for Improving Graph Visualization Efficiency. Mathieu Bastian (1), Sebastien Heymann (1,2) and Mathieu Jacomy (3). (1) INIST-CNRS, France. (2) IMS CNRS - ENSC Bordeaux, France. (3) WebAtlas, Telecom ParisTech, France.

Abstract: Creating an efficient, interactive and flexible unified graph visualization system is a difficult problem. We present a hardware accelerated OpenGL graph drawing engine, in conjunction with a flexible preview package. While the interactive OpenGL visualization focuses on performance, the preview focuses on aesthetics and simple network map creation. The system is implemented as Gephi, a modular and extensible open-source Java application built on top of the Netbeans Platform, currently in alpha version 0.7. Categories and Subject Descriptors (according to ACM CCS): H.5.2 [Information Systems]: User Interfaces; I.3.6 [Computing Methodologies]: Methodology and Techniques, Interaction techniques.

1. Introduction. Large scale graph drawing remains a technological challenge, actively developed by the infovis community. Many systems have successfully brought interactive graph visualization [Aub03], [Ada06], [TAS09] but still have visualization limitations to render large graphs at some moments. Various techniques have been used to either optimize

Second column (fragment): For guaranteeing best performance, the 3-D visualization engine leaves apart effects and complex shapes, for instance curved edges and focus on basics, i.e. nodes, edges and labels. As it is specialized in a particular task, the visualization engine is thus less flexible. That’s why a complementary drawing library, named Preview is needed to bring parametrization and flexibility. The following sections describe the role granted to each visualization module.

Mastering Gephi Network Visualization

Mastering Gephi Network Visualization. Produce advanced network graphs in Gephi and gain valuable insights into your network datasets. Ken Cherven. BIRMINGHAM - MUMBAI. Mastering Gephi Network Visualization. Copyright © 2015 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing, and its dealers and distributors will be held liable for any damages caused or alleged to be caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. First published: January 2015. Production reference: 1220115. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78398-734-4. www.packtpub.com

Credits. Author: Ken Cherven. Reviewers: Ladan Doroud, Miro Marchi, David Edward Polley, Mollie Taylor, George G. Vega Yon. Commissioning Editor: Ashwin Nair. Acquisition Editor: Sam Wood. Content Development Editor: Amey Varangaonkar. Technical Editors: Shruti Rawool, Shali Sasidharan. Copy Editors: Rashmi Sawant, Stuti Srivastava, Neha Vyas. Project Coordinator: Leena Purkait. Proofreaders: Cathy Cumberlidge, Paul Hindle, Samantha Lyon. Indexer: Monica Ajmera Mehta. Graphics: Abhinash Sahu. Production Coordinator: Conidon Miranda. Cover Work: Conidon Miranda.

About the Author. Ken Cherven is a Detroit-based data visualization and open source enthusiast, with 20 years of experience working with data and visualization tools.

Review Article Empirical Comparison of Visualization Tools for Larger-Scale Network Analysis

Hindawi, Advances in Bioinformatics, Volume 2017, Article ID 1278932, 8 pages. https://doi.org/10.1155/2017/1278932. Review Article: Empirical Comparison of Visualization Tools for Larger-Scale Network Analysis. Georgios A. Pavlopoulos (1), David Paez-Espino (1), Nikos C. Kyrpides (1), and Ioannis Iliopoulos (2). (1) Department of Energy, Joint Genome Institute, Lawrence Berkeley Labs, 2800 Mitchell Drive, Walnut Creek, CA 94598, USA. (2) Division of Basic Sciences, University of Crete Medical School, Andrea Kalokerinou Street, Heraklion, Greece. Correspondence should be addressed to Georgios A. Pavlopoulos; [email protected] and Ioannis Iliopoulos; [email protected]. Received 22 February 2017; Revised 14 May 2017; Accepted 4 June 2017; Published 18 July 2017. Academic Editor: Klaus Jung. Copyright © 2017 Georgios A. Pavlopoulos et al. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Gene expression, signal transduction, protein/chemical interactions, biomedical literature cooccurrences, and other concepts are often captured in biological network representations where nodes represent a certain bioentity and edges the connections between them. While many tools to manipulate, visualize, and interactively explore such networks already exist, only few of them can scale up and follow today’s indisputable information growth. In this review, we shortly list a catalog of available network visualization tools and, from a user-experience point of view, we identify four candidate tools suitable for larger-scale network analysis, visualization, and exploration. We comment on their strengths and their weaknesses and empirically discuss their scalability, user friendliness, and postvisualization capabilities.

The Ubiquity of Large Graphs and Surprising Challenges of Graph Processing

The Ubiquity of Large Graphs and Surprising Challenges of Graph Processing. Siddhartha Sahu, Amine Mhedhbi, Semih Salihoglu, Jimmy Lin, M. Tamer Özsu. David R. Cheriton School of Computer Science, University of Waterloo. {s3sahu,amine.mhedhbi,semih.salihoglu,jimmylin,tamer.ozsu}@uwaterloo.ca

Abstract: Graph processing is becoming increasingly prevalent across many application domains. In spite of this prevalence, there is little research about how graphs are actually used in practice. We conducted an online survey aimed at understanding: (i) the types of graphs users have; (ii) the graph computations users run; (iii) the types of graph software users use; and (iv) the major challenges users face when processing their graphs. We describe the participants’ responses to our questions highlighting common patterns and challenges. We further reviewed user feedback in the mailing lists, bug reports, and feature requests in the source repositories of a large suite of software products for processing graphs.

Introduction (fragment): 52, 55], and distributed graph processing systems [17, 21, 27]. In the academic literature, a large number of publications that study numerous topics related to graph processing regularly appear across a wide spectrum of research venues. Despite their prevalence, there is little research on how graph data is actually used in practice and the major challenges facing users of graph data, both in industry and research. In April 2017, we conducted an online survey across 89 users of 22 different software products, with the goal of answering 4 high-level questions: (i) What types of graph data do users have? (ii) What computations do users run on their graphs?

Graph/Network Visualization

Graph/Network Visualization. Data model: graph structures (relations, knowledge) and networks. Applications: telecommunication systems; Internet and WWW; retailers’ distribution networks; knowledge representation; trade; collaborations; literature citations, etc.

What is a Graph? Vertices (nodes) and edges (links). The example graph with vertices 1, 2, 3 is shown as a drawing, an adjacency list, and an adjacency matrix. Adjacency list: 1: 2; 2: 1, 3; 3: 2. Adjacency matrix (rows and columns ordered 1, 2, 3): row 1: 0 1 0; row 2: 1 0 1; row 3: 0 1 0.

Graph Terminology: graphs can have cycles; graph edges can be directed or undirected; the degree of a vertex is the number of edges connected to it (in-degree and out-degree for directed graphs); graph edges can have values (weights) on them (nominal, ordinal or quantitative).

Trees are Different: subcase of general graph; no cycles; typically directed edges; special designated root vertex.

Issues in Graph Visualization: graph drawing; layout and positioning; scale (large scale graphs are difficult); navigation (changing focus and scale). Vertex Issues: shape, color, size, location, label. Edge Issues: color, size, label, form (polyline, straight line, orthogonal, grid, curved, planar, upward/downward, ...).

Aesthetic Considerations: crossings (minimize number of edge crossings); total edge length (minimize total length); area (minimize towards efficiency); maximum edge length (minimize longest edge); uniform edge lengths (minimize variances); total bends (minimize orthogonal towards straight-line).

Graph drawing optimization. 3D-Graph Drawing. Graph visualization techniques: node-link approach (layered graph drawing (Sugiyama), force-directed layout, multi-dimensional scaling (MDS)); adjacency matrix.

Sugiyama (layered) method: suitable for directed graphs with natural hierarchies; all edges are oriented in a consistent direction and no pairs of edges cross. Sugiyama, building hierarchy: assign layers according to the longest path of each vertex; dummy vertices are used to avoid path across multiple layers.
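The adjacency list, adjacency matrix, and degree definitions in this excerpt can be made concrete with a short plain-Python sketch. It uses the three-vertex example from the excerpt (adjacency list 1: 2; 2: 1, 3; 3: 2); the function names `to_adjacency_matrix` and `degrees` are hypothetical and chosen only for illustration.

```python
# Undirected path graph 1 - 2 - 3, written as the adjacency list from the excerpt.
adjacency_list = {1: [2], 2: [1, 3], 3: [2]}


def to_adjacency_matrix(adj):
    """Return (vertices, matrix); matrix[i][j] is 1 when vertices[i] and vertices[j] share an edge."""
    vertices = sorted(adj)
    index = {vertex: i for i, vertex in enumerate(vertices)}
    matrix = [[0] * len(vertices) for _ in vertices]
    for vertex, neighbours in adj.items():
        for neighbour in neighbours:
            matrix[index[vertex]][index[neighbour]] = 1
    return vertices, matrix


def degrees(adj):
    """Degree of each vertex: the number of edges connected to it (undirected graph)."""
    return {vertex: len(neighbours) for vertex, neighbours in adj.items()}


vertices, matrix = to_adjacency_matrix(adjacency_list)
vertex_degrees = degrees(adjacency_list)

for vertex, row in zip(vertices, matrix):
    print(vertex, row)
```

Because the graph is undirected, the matrix is symmetric, and each row sum equals the degree of the corresponding vertex.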

Open Source Libraries and Frameworks for Biological Data Visualisation: a Guide for Developers

www.proteomics-journal.com. Proteomics. Open source libraries and frameworks for biological data visualisation: A guide for developers. Rui Wang (1,#), Yasset Perez-Riverol (1,#), Henning Hermjakob (1), Juan Antonio Vizcaíno (1,*). (1) European Molecular Biology Laboratory, European Bioinformatics Institute (EMBL-EBI), Wellcome Trust Genome Campus, Hinxton, Cambridge, CB10 1SD, UK. # Both authors contributed equally to this manuscript. * Corresponding author: Dr. Juan Antonio Vizcaíno, European Molecular Biology Laboratory, European Bioinformatics Institute (EMBL-EBI), Wellcome Trust Genome Campus, Hinxton, Cambridge, CB10 1SD, UK. Tel.: +44 1223 492 610; Fax: +44 1223 494 484; E-mail: [email protected]. Running title: Data visualisation libraries and frameworks. Keywords: bioinformatics, chart, data visualization, framework, hierarchy, network, software library. Received: 07-Aug-2014; Revised: 21-Oct-2014; Accepted: 26-Nov-2014. This article has been accepted for publication and undergone full peer review but has not been through the copyediting, typesetting, pagination and proofreading process, which may lead to differences between this version and the Version of Record. Please cite this article as doi: 10.1002/pmic.201400377. This article is protected by copyright. All rights reserved.

Abbreviations: API, Application Programming Interface; AWT, Abstract Window Toolkit; CATH, Class, Architecture, Topology, Homology (classification); EBI, European Bioinformatics Institute; ENA, European Nucleotide Archive; CSS, CSS3, Cascading Style Sheets; DOT, Graph Description Language

Network Analysis of Tweets

Network Analysis of Tweets: building and graphing networks of users and tweets. Outline: introduction to graphs (not "charting"); constructing network structure (build_graph.py, parsing tweets, using networkx); presentation of graph data (Gephi).

Basic Idea of a Graph: “A” is related to “B”. Three things we want to represent: the item, person, concept, or thing “A”; the item, person, concept, or thing “B”; and the “is related to” relation. The relation can be directed (directional).

Who Retweets Whom?

¤ AidanKellyUSA: RT @BreeSchaaf: Quick turn on @NBCSports Men's Singles Luge final! @mazdzer @AidanKellyUSA @TuckerWest1 are laying it all on the line tonig…

¤ Setrice93: RT @NBCOlympics: #Gold for @sagekotsenburg! First gold at #Sochi2014 and first-ever Olympic gold in snowboard slopestyle! http://t.co/ 0F8ys…

¤ adore_knob: RT @drdloveswater: I have waited 4 years to do this. Thank you @NBCOlympics & all your interns for such awesome coverage. #Sochi2014 http:/…

¤ MattJanik: RT @NBCOlympics Yeah, it's not good for your health.

¤ LisaKSimone: RT @robringham: Tired of @nbc / @NBCOlympics holding the Olympics hostage. Time for them to lose exclusivity. #NBCFail

¤ TS_Krupa: RT @NBCOlympics: RT @RedSox: Go Team USA!! @USOlympic #Sochi2014 http://t.co/anvneh5Mmy
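The "who retweets whom" relation in this excerpt can be read as a directed graph: one edge per retweet, pointing from the retweeting user to the user named after "RT @". The plain-Python sketch below shows one way to extract such edges. It is not the build_graph.py script the slides mention (its contents are not shown here); the function name `retweet_edges`, the regular expression, the edge direction, and the shortened sample lines are assumptions for illustration.

```python
import re
from collections import Counter

# Matches "<retweeter>: RT @<author>" at the start of a line.
# Only the first "RT @" is used, so nested retweets count once.
RETWEET_PATTERN = re.compile(r"^(?P<retweeter>\w+):\s*RT @(?P<author>\w+)")


def retweet_edges(lines):
    """Count directed (retweeter, original_author) edges found in the given lines."""
    edges = Counter()
    for line in lines:
        match = RETWEET_PATTERN.match(line.strip())
        if match:
            edges[(match["retweeter"], match["author"])] += 1
    return edges


# Shortened versions of the tweets quoted in the excerpt, plus one non-retweet line.
sample = [
    "AidanKellyUSA: RT @BreeSchaaf: Quick turn on @NBCSports Men's Singles Luge final!",
    "Setrice93: RT @NBCOlympics: #Gold for @sagekotsenburg!",
    "MattJanik: RT @NBCOlympics Yeah, it's not good for your health.",
    "not a retweet line",
]
edges = retweet_edges(sample)

for (retweeter, author), count in sorted(edges.items()):
    print(f"{retweeter} -> {author} (x{count})")
```

The resulting edge counts could then be loaded into a graph library or written out as an edge list for a tool such as Gephi; that step is left out here to keep the sketch dependency-free.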