Applied and Computational Mathematics Division

Recommended publications

The Algebra of Open and Interconnected Systems

The Algebra of Open and Interconnected Systems Brendan Fong Hertford College University of Oxford arXiv:1609.05382v1 [math.CT] 17 Sep 2016 A thesis submitted for the degree of Doctor of Philosophy in Computer Science Trinity 2016 For all those who have prepared food so I could eat and created homes so I could live over the past four years. You too have laboured to produce this; I hope I have done your labours justice. Abstract Herein we develop category-theoretic tools for understanding network-style diagrammatic languages. The archetypal network-style diagrammatic language is that of electric circuits; other examples include signal flow graphs, Markov processes, automata, Petri nets, chemical reaction networks, and so on. The key feature is that the language is comprised of a number of components with multiple (input/output) terminals, each possibly labelled with some type, that may then be connected together along these terminals to form a larger network. The components form hyperedges between labelled vertices, and so a diagram in this language forms a hypergraph. We formalise the compositional structure by introducing the notion of a hypergraph category. Network-style diagrammatic languages and their semantics thus form hypergraph categories, and semantic interpretation gives a hypergraph functor. The first part of this thesis develops the theory of hypergraph categories. In particular, we introduce the tools of decorated cospans and corelations. Decorated cospans allow straightforward construction of hypergraph categories from diagrammatic languages: the inputs, outputs, and their composition are modelled by the cospans, while the 'decorations' specify the components themselves. -

Joint Force Quarterly 97

Issue 97, 2nd Quarter 2020. JOINT FORCE QUARTERLY. Broadening Traditional Domains; Commercial Satellites and National Security; Ulysses S. Grant and the U.S. Navy. Joint Force Quarterly, Founded in 1993 • Vol. 97, 2nd Quarter 2020 (https://ndupress.ndu.edu). GEN Mark A. Milley, USA, Publisher; VADM Frederick J. Roegge, USN, President, NDU. Editor in Chief: Col William T. Eliason, USAF (Ret.), Ph.D. Executive Editor: Jeffrey D. Smotherman, Ph.D. Production Editor: John J. Church, D.M.A. Internet Publications Editor: Joanna E. Seich. Copyeditor: Andrea L. Connell. Associate Editor: Jack Godwin, Ph.D. Book Review Editor: Brett Swaney. Art Director: Marco Marchegiani, U.S. Government Publishing Office. Advisory Committee: Ambassador Erica Barks-Ruggles/College of International Security Affairs; RDML Shoshana S. Chatfield, USN/U.S. Naval War College; Col Thomas J. Gordon, USMC/Marine Corps Command and Staff College; MG Lewis G. Irwin, USAR/Joint Forces Staff College; MG John S. Kem, USA/U.S. Army War College; Cassandra C. Lewis, Ph.D./College of Information and Cyberspace; LTG Michael D. Lundy, USA/U.S. Army Command and General Staff College; LtGen Daniel J. O’Donohue, USMC/The Joint Staff; Brig Gen Evan L. Pettus, USAF/Air Command and Staff College; RDML Cedric E. Pringle, USN/National War College; Brig Gen Kyle W. Robinson, USAF/Dwight D. Eisenhower School for National Security and Resource Strategy; Brig Gen Jeremy T. Sloane, USAF/Air War College; Col Blair J. Sokol, USMC/Marine Corps War College; Lt Gen Glen D. VanHerck, USAF/The Joint Staff. Editorial Board: Richard K. -

Gephi Tools for Network Analysis and Visualization

Frontiers of Network Science, Fall 2018. Class 8: Introduction to Gephi. Tools for network analysis and visualization. Boleslaw Szymanski

CLASS PLAN. Main Topics • Overview of tools for network analysis and visualization • Installing and using Gephi • Gephi hands-on labs

TOOLS OVERVIEW (LISTED ALPHABETICALLY). Tools for network analysis and visualization • Computing model and interface – Desktop GUI applications – API/code libraries, Web services – Web GUI front-ends (cloud, distributed, HPC) • Extensibility model – Only by the original developers – By other users/developers (add-ins, modules, additional packages, etc.) • Source availability model – Open-source – Closed-source • Business model – Free of charge – Commercial

TOOLS: CINET, CyberInfrastructure for NETwork science • Accessed via a Web-based portal (http://cinet.vbi.vt.edu/granite/granite.html) • Supported by grants, no charge for end users • Aims to provide researchers, analysts, and educators interested in Network Science with an easy-to-use cyber-environment that is accessible from their desktop and integrates into their daily work • Users can contribute new networks, data, algorithms, hardware, and research results • Primarily for research, teaching, and collaboration • No programming experience is required

TOOLS: Cytoscape, Network Data Integration, Analysis, and Visualization • A standalone GUI application -

Obituary: FELIX POLLACZEK Felix Pollaczek Was Born on 1 December

Obituary: FELIX POLLACZEK Felix Pollaczek was born on 1 December 1892 in Vienna, Austria; he was the descendant of a well-known mid-European Jewish family which for several centuries had counted many learned men amongst its members. He became a Frenchman by naturalization in 1947. He obtained his scientific education and training at the Latin grammar school of Vienna, and at the Technical Universities of Vienna and Brno (Czechoslovakia); he later studied mathematics at the University of Berlin. A master's degree in electrical engineering in 1920 from Brno was followed by a doctoral degree in mathematics in 1922 from Berlin with a thesis on number theory. This was concerned with the fields generated by the l-th and l²-th roots of one, l being an irregular prime number. His first paper, on number theory, dates from 1917. He was employed in 1921 as an engineer by A.E.G. in Berlin. Then, in 1923, he became scientific adviser to the German Postal, Telephone and Telegraph Services at Reichspost-Zentralamt Berlin-Tempelhof. As a result of the political situation in Germany in 1933, he was dismissed from his post and then left the country. In Paris he made his living as a consulting engineer for the Societe d'Etudes pour Liaisons Telephoniques et Telegraphiques. In 1939-1940 and again from 1944 on, he held the position of Maitre de Recherches at the Centre National de la Recherche Scientifique in Paris. Felix Pollaczek's fruitful life came to an end on 29 April 1981 at Boulogne-Billancourt. In 1977 Pollaczek was awarded the John von Neumann Theory Prize by the ORSA-TIMS prize committee. -

IT Project Quality Management

10 IT Project Quality Management

CHAPTER OVERVIEW The focus of this chapter will be on several concepts and philosophies of quality management. By learning about the people who founded the quality movement over the last fifty years, we can better understand how to apply these philosophies and teachings to develop a project quality management plan. After studying this chapter, you should understand and be able to:

• Describe the Project Management Body of Knowledge (PMBOK) area called project quality management (PQM) and how it supports quality planning, quality assurance, quality control, and continuous improvement of the project's products and supporting processes.
• Identify several quality gurus, or founders of the quality movement, and their role in shaping quality philosophies worldwide.
• Describe some of the more common quality initiatives and management systems that include ISO certification, Six Sigma, and the Capability Maturity Model (CMM) for software engineering.
• Distinguish between validation and verification activities and how these activities support IT project quality management.
• Describe the software engineering discipline called configuration management and how it is used to manage the changes associated with all of the project's deliverables and work products.
• Apply the quality concepts, methods, and tools introduced in this chapter to develop a project quality plan.

GLOBAL TECHNOLOGY SOLUTIONS It was mid-afternoon when Tim Williams walked into the GTS conference room. Two of the Husky Air team members, Sitaraman and Yan, were already seated at the conference table. Tim took his usual seat, and asked "So how did the demonstration of the user interface go this morning?" Sitaraman glanced at Yan and then focused his attention on Tim's question. -

Computer Algebra and Mathematics with the HP40G Version 1.0

Computer Algebra and Mathematics with the HP40G Version 1.0 Renée de Graeve Lecturer at Grenoble I Exact Calculation and Mathematics with the HP40G Acknowledgments It was not believed possible to write an efficient program for computer algebra all on one’s own. But one bright person by the name of Bernard Parisse didn’t know that—and did it! This is his program for computer algebra (called ERABLE), built for the second time into an HP calculator. The development of this calculator has led Bernard Parisse to modify his program somewhat so that the computer algebra functions could be edited and cause the appropriate results to be displayed in the Equation Editor. Explore all the capabilities of this calculator, as set out in the following pages. I would like to thank: • Bernard Parisse for his invaluable counsel, his remarks on the text, his reviews, and for his ability to provide functions on demand both efficiently and graciously. • Jean Tavenas for the concern shown towards the completion of this guide. • Jean Yves Avenard for taking on board our requests, and for writing the PROMPT command in the very spirit of promptness—and with no advance warning. (refer to 6.4.2.). © 2000 Hewlett-Packard, http://www.hp.com/calculators The reproduction, distribution and/or the modification of this document is authorised according to the terms of the GNU Free Documentation License, Version 1.1 or later, published by the Free Software Foundation. A copy of this license exists under the section entitled “GNU Free Documentation License” (Chapter 8, p. 141). -

CAS, an Introduction to the HP Computer Algebra System

CAS, An introduction to the HP Computer Algebra System. Background: Any mathematician will quickly appreciate the advantages offered by a CAS, or Computer Algebra System, which allows the user to perform complex symbolic algebraic manipulations on the calculator. Algebraic integration by parts and by substitution, the solution of differential equations, inequalities, simultaneous equations with algebraic or complex coefficients, the evaluation of limits and many other problems can be solved quickly and easily using a CAS. Importantly, solutions can be obtained as exact values such as 5−1, 25≤ x < or 4π rather than the usual decimal values given by numeric methods of successive approximation. Values can be displayed to almost any degree of accuracy required, allowing the user to view, for example, the exact value of a number such as 100 factorial. The HP CAS: The HP CAS system was created by Bernard Parisse, Université de Grenoble, for the HP 49g calculator. It was improved and adapted for inclusion on the HP 40g with the help of Renée De Graeve, Jean-Yves Avenard and Jean Tavenas. The HP CAS system offers the user a vast array of functions and abilities as well as an easy user interface which displays equations as they appear on the page. It also includes the ability to display many algebraic calculations in ‘step-by-step’ mode, making it an invaluable teaching tool in universities and schools. Functions are grouped by category and accessed via menus at the bottom of the screen. Copyright © 2005, Applications in Mathematics. Learning to use the CAS: Learning to use the CAS is very easy but, as with any powerful tool, truly effective use requires familiarity and time. -

Mathematicians Fleeing from Nazi Germany

Mathematicians Fleeing from Nazi Germany: Individual Fates and Global Impact. Reinhard Siegmund-Schultze. Princeton University Press, Princeton and Oxford. Copyright © 2009 by Princeton University Press. Published by Princeton University Press, 41 William Street, Princeton, New Jersey 08540. In the United Kingdom: Princeton University Press, 6 Oxford Street, Woodstock, Oxfordshire OX20 1TW. All Rights Reserved. Library of Congress Cataloging-in-Publication Data: Siegmund-Schultze, R. (Reinhard) Mathematicians fleeing from Nazi Germany: individual fates and global impact / Reinhard Siegmund-Schultze. p. cm. Includes bibliographical references and index. ISBN 978-0-691-12593-0 (cloth) — ISBN 978-0-691-14041-4 (pbk.) 1. Mathematicians—Germany—History—20th century. 2. Mathematicians—United States—History—20th century. 3. Mathematicians—Germany—Biography. 4. Mathematicians—United States—Biography. 5. World War, 1939–1945—Refuges—Germany. 6. Germany—Emigration and immigration—History—1933–1945. 7. Germans—United States—History—20th century. 8. Immigrants—United States—History—20th century. 9. Mathematics—Germany—History—20th century. 10. Mathematics—United States—History—20th century. I. Title. QA27.G4S53 2008 510.09'04—dc22 2008048855. British Library Cataloging-in-Publication Data is available. This book has been composed in Sabon. Printed on acid-free paper. ∞ press.princeton.edu Printed in the United States of America. Contents: List of Figures and Tables; Preface; Chapter 1 The Terms “German-Speaking Mathematician,” “Forced,” and “Voluntary Emigration”; Chapter 2 The Notion of “Mathematician” Plus Quantitative Figures on Persecution; Chapter 3 Early Emigration; 3.1. The Push-Factor; 3.2. The Pull-Factor; 3.D. -

Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: a Social Network Analysis

Fontenele et al., J Inform Tech Softw Eng 2014, 4:2. DOI: 10.4172/2165-7866.1000134. ISSN: 2165-7866. Journal of Information Technology & Software Engineering. Research Article, Open Access. Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: A Social Network Analysis Approach. Fontenele MP1*, Sampaio RB2, da Silva AIB2, Fernandes JHC2 and Sun L1. 1University of Reading, School of Systems Engineering, Whiteknights, Reading, RG6 6AH, United Kingdom. 2Universidade de Brasília, Campus Universitário Darcy Ribeiro, Brasília, DF, 70910-900, Brasil. Abstract: This paper describes an application of Social Network Analysis methods for identification of knowledge demands in public organisations. Affiliation networks established in a postgraduate programme were analysed. The course was executed in a distance education mode and its students worked on public agencies. Relations established among course participants were mediated through a virtual learning environment using Moodle. Data available in Moodle may be extracted using knowledge discovery in databases techniques. Potential degrees of closeness existing among different organisations and among researched subjects were assessed. This suggests how organisations could cooperate for knowledge management and also how to identify their common interests. The study points out that closeness among organisations and research topics may be assessed through affiliation networks. This opens up opportunities for applying knowledge management between organisations and creating communities of practice. -

Access to Communications Technology

Access to Communications Technology

ABSTRACT Early proponents of digital communications technology believed that it would be a powerful tool for disseminating knowledge and advancing civilization. While there is little dispute that the Internet has changed society radically in a relatively short period of time, there are many still unable to take advantage of the benefits it confers because of a lack of access. Whether the lack is due to economic, geographic, or demographic factors, this “digital divide” has serious societal repercussions, particularly as most aspects of life in the twenty-first century, including banking, health care, and education, are increasingly conducted online.

DIGITAL DIVIDE In its simplest terms, the digital divide refers to the gap ... digital divide: the Pew Research Center reported in 2019 that 42 percent of African American adults and 43 percent of Hispanic adults did not have a desktop or laptop computer at home, compared to only 18 percent of Caucasian adults. Individuals without home computers must instead use smartphones or public facilities such as libraries (which restrict how long a patron can remain online), which severely limits their ability to fill out job applications and complete homework effectively. There is also a marked divide between digital access in highly developed nations and that which is available in other parts of the world. Globally, the International Telecommunication Union (ITU), a specialized agency within the United Nations that deals with information and communication technologies (ICTs), estimates that as many as 3 billion people living in developing countries may still be unconnected by 2023. -

A Comparative Analysis of Large-Scale Network Visualization Tools

A Comparative Analysis of Large-scale Network Visualization Tools. Md Abdul Motaleb Faysal and Shaikh Arifuzzaman, Department of Computer Science, University of New Orleans, New Orleans, LA 70148, USA. Email: [email protected], [email protected]

Abstract: Network (Graph) is a powerful abstraction for representing underlying relations and structures in large complex systems. Network visualization provides a convenient way to explore and study such structures and reveal useful insights. There exist several network visualization tools; however, these vary in terms of scalability, analytics feature, and user-friendliness. Due to the huge growth of social, biological, and other scientific data, the corresponding network data is also large. Visualizing such large network poses another level of difficulty. In this paper, we identify several popular network visualization tools and provide a comparative analysis based on the features and operations these tools support. We demonstrate empirically how those tools scale to large networks. We also provide several case studies of visual analytics on large network data and assess performances of the tools.

... scalability for large network analysis. Such study will help a person to decide which tool to use for a specific case based on his need. One such study in [3] compares a couple of tools based on scalability. Comparative studies on other visualization metrics should be conducted to let end users have freedom in choosing the specific tool he needs to use. In this paper, we have chosen five widely used visualization tools for an empirical and comparative analysis. Our comparisons are based on factors such as supported file formats, scalability in visualization and analysis, interacting capability with the drawn network, end user-friendliness (e.g., users with no programming back- -

Week 2 - Handling Real-World Network Datasets

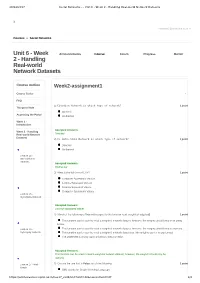

28/12/2017 Social Networks - Unit 6 - Week 2 - Handling Real-world Network Datasets (https://onlinecourses.nptel.ac.in/noc17_cs41/unit?unit=42&assessment=47)

Course outline: Week 1 - Introduction; Week 2 - Handling Real-world Network Datasets; Lecture 14 - Introduction to Datasets; Lecture 15 - Ingredients Network; Lecture 16 - Synonymy Network; Lecture 17 - Web Graph

Week2-assignment1

1) Citation Network is which type of network? (1 point)
- Directed
- Undirected
Accepted Answers: Directed

2) Co-authorship Network is which type of network? (1 point)
- Directed
- Undirected
Accepted Answers: Undirected

3) What is the full form of CSV? (1 point)
- Computer Systematic Version
- Comma Separated Version
- Comma Separated Values
- Computer Systematic Values
Accepted Answers: Comma Separated Values

4) Which of the following is True with respect to the function read_weighted_edgelist()? (1 point)
- This function can be used to read a weighted network dataset, however, the weights should only be in string format.
- This function can be used to read a weighted network dataset, however, the weights should only be numeric.
- This function can be used to read a weighted network dataset and the weights can be in any format.
- The statement is wrong; such a function does not exist.
Accepted Answers: This function can be used to read a weighted network dataset, however, the weights should only be numeric.

5) Choose the one that is False out of the following: (1 point)
- GML stands for Graph Modeling Language.
- GML stores the data in the form of tags just like XML.