Overview of Social Networks Research Software

Recommended publications

SageMath and SageMathCloud

Viviane Pons, Maître de conférences, Université Paris-Sud Orsay, [email protected], @PyViv
SageMath and SageMathCloud, October 19, 2016

Introduction

SageMath is a free open source mathematics software.
- Created in 2005 by William Stein.
- http://www.sagemath.org/
- Mission: Creating a viable free open source alternative to Magma, Maple, Mathematica and Matlab.

Source and language

- The main language of Sage is Python (but there are many other source languages: Cython, C, C++, Fortran).
- The source is distributed under the GPL licence.

Sage and libraries

One of the original purposes of Sage was to put together the many existing open source mathematics software programs: Atlas, GAP, GMP, Linbox, Maxima, MPFR, PARI/GP, NetworkX, NTL, Numpy/Scipy, Singular, Symmetrica, ...

Sage is all-inclusive: it installs all those libraries and gives you a common Python-based interface to work on them. On top of it is the Python / Cython Sage library itself.

- You can use a library explicitly:

    sage: n = gap(20062006)
    sage: type(n)
    <class 'sage.interfaces.gap.GapElement'>
    sage: n.Factors()
    [ 2, 17, 59, 73, 137 ]

- But also, many of Sage's computations are done through those libraries without necessarily telling you:

    sage: G = PermutationGroup([[(1,2,3),(4,5)],[(3,4)]])
    sage: G.gap()
    Group( [ (3,4), (1,2,3)(4,5) ] )

Development model

- Sage is developed by researchers for researchers: the original philosophy is to develop what you need for your research and share it with the community.

NetworkX Tutorial

NetworkX tutorial (5.03.2020)
Source: https://github.com/networkx/notebooks
Minor corrections: JS, 27.02.2019

Creating a graph

Create an empty graph with no nodes and no edges.

    In [1]: import networkx as nx
    In [2]: G = nx.Graph()

By definition, a Graph is a collection of nodes (vertices) along with identified pairs of nodes (called edges, links, etc.). In NetworkX, nodes can be any hashable object, e.g. a text string, an image, an XML object, another Graph, a customized node object, etc. (Note: Python's None object should not be used as a node as it determines whether optional function arguments have been assigned in many functions.)

Nodes

The graph G can be grown in several ways. NetworkX includes many graph generator functions and facilities to read and write graphs in many formats. To get started though we'll look at simple manipulations. You can add one node at a time,

    In [3]: G.add_node(1)

add a list of nodes,

    In [4]: G.add_nodes_from([2, 3])

or add any nbunch of nodes. An nbunch is any iterable container of nodes that is not itself a node in the graph (e.g. a list, set, graph, file, etc.).

    In [5]: H = nx.path_graph(10)
    In [6]: G.add_nodes_from(H)

Note that G now contains the nodes of H as nodes of G. In contrast, you could use the graph H as a node in G.
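The difference between adding the nodes of H and adding H itself as a single node can be sketched without NetworkX. The `TinyGraph` class below is a hypothetical stand-in written for this illustration only; it is not NetworkX code and mirrors just the two method names used in the tutorial, under the assumption that nodes are stored as dictionary keys.

```python
class TinyGraph:
    """Hypothetical stand-in for a graph's node container (not NetworkX code)."""

    def __init__(self, nodes=()):
        self._adj = {}  # node -> set of neighbours; keys must be hashable
        self.add_nodes_from(nodes)

    def add_node(self, node):
        # Storing the node as a dict key is what forces hashability.
        self._adj.setdefault(node, set())

    def add_nodes_from(self, nbunch):
        # An nbunch is iterated over: each element becomes a node.
        for node in nbunch:
            self.add_node(node)

    def __iter__(self):
        return iter(self._adj)

    def __len__(self):
        return len(self._adj)

    def __contains__(self, node):
        return node in self._adj


H = TinyGraph(range(10))   # plays the role of the 10-node path graph
G = TinyGraph()
G.add_node(1)
G.add_nodes_from([2, 3])
G.add_nodes_from(H)        # the ten nodes of H become nodes of G
size_after_nbunch = len(G)
G.add_node(H)              # H itself becomes one extra node of G
size_after_graph_node = len(G)
```

Because `TinyGraph` iterates over its keys, passing `H` to `add_nodes_from` copies its nodes, while `add_node(H)` stores the object itself. An unhashable value such as a list would raise `TypeError` in `add_node`.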

NetworkX: Network Analysis with Python

NetworkX: Network Analysis with Python
Salvatore Scellato

Full tutorial presented at the XXX SunBelt Conference: “NetworkX introduction: Hacking social networks using the Python programming language” by Aric Hagberg & Drew Conway

Outline
1. Introduction to NetworkX
2. Getting started with Python and NetworkX
3. Basic network analysis
4. Writing your own code
5. You are ready for your project!

1. Introduction to NetworkX

Introduction to NetworkX: network analysis

Vast amounts of network data are being generated and collected:
- Sociology: web pages, mobile phones, social networks
- Technology: Internet routers, vehicular flows, power grids

How can we analyze these networks?

Introduction to NetworkX: Python awesomeness

Introduction to NetworkX

“Python package for the creation, manipulation and study of the structure, dynamics and functions of complex networks.”
- Data structures for representing many types of networks, or graphs
- Nodes can be any (hashable) Python object, edges can contain arbitrary data
- Flexibility ideal for representing networks found in many different fields
- Easy to install on multiple platforms
- Online up-to-date documentation
- First public release in April 2005

Introduction to NetworkX: design requirements
- Tool to study the structure and dynamics of social, biological, and infrastructure networks
- Ease-of-use and rapid development in a collaborative, multidisciplinary environment
- Easy to learn, easy to teach
- Open-source tool base that can easily grow in a multidisciplinary environment with non-expert users

Graph Database Fundamental Services

Bachelor Project
Czech Technical University in Prague, Faculty of Electrical Engineering, F3, Department of Cybernetics
Graph Database Fundamental Services
Tomáš Roun
Supervisor: RNDr. Marko Genyk-Berezovskyj
Field of study: Open Informatics
Subfield: Computer and Informatic Science
May 2018

Acknowledgements

I would like to thank my advisor RNDr. Marko Genyk-Berezovskyj for his guidance and advice. I would also like to thank Sergej Kurbanov and Herbert Ullrich for their help and contributions to the project. Special thanks go to my family for their never-ending support.

Declaration

I declare that the presented work was developed independently and that I have listed all sources of information used within it in accordance with the methodical instructions for observing the ethical principles in the preparation of university theses.

Prague, date ............................ signature ...........................................

Abstract

The goal of this thesis is to provide an easy-to-use web service offering a database of undirected graphs that can be searched based on the graph properties. In addition, it should also allow computing properties of user-supplied graphs with the help of graph libraries and generating graph images. Last but not least, we implement a system that allows bulk adding of new graphs to the database and computing their properties.

Abstrakt (Czech abstract, translated)

The goal of this work is to develop a web service offering a database of undirected graphs that can be searched efficiently based on graph properties. The service will also compute graph properties for user-supplied graphs with the help of graph libraries and display graph images. Last but not least, the goal is also to design a system for bulk adding of graphs to the database and ...

Gephi Tools for Network Analysis and Visualization

Frontiers of Network Science, Fall 2018
Class 8: Introduction to Gephi, Tools for network analysis and visualization
Boleslaw Szymanski

Class plan: main topics
- Overview of tools for network analysis and visualization
- Installing and using Gephi
- Gephi hands-on labs

Tools overview (listed alphabetically)

Tools for network analysis and visualization
- Computing model and interface
  - Desktop GUI applications
  - API/code libraries, Web services
  - Web GUI front-ends (cloud, distributed, HPC)
- Extensibility model
  - Only by the original developers
  - By other users/developers (add-ins, modules, additional packages, etc.)
- Source availability model
  - Open-source
  - Closed-source
- Business model
  - Free of charge
  - Commercial

Tools: CINET

CyberInfrastructure for NETwork science
- Accessed via a Web-based portal (http://cinet.vbi.vt.edu/granite/granite.html)
- Supported by grants, no charge for end users
- Aims to provide researchers, analysts, and educators interested in Network Science with an easy-to-use cyber-environment that is accessible from their desktop and integrates into their daily work
- Users can contribute new networks, data, algorithms, hardware, and research results
- Primarily for research, teaching, and collaboration
- No programming experience is required

Tools: Cytoscape

Network Data Integration, Analysis, and Visualization
- A standalone GUI application

NetworkX Reference Release 1.9.1

NetworkX Reference, Release 1.9.1
Aric Hagberg, Dan Schult, Pieter Swart
September 20, 2014

Contents

1 Overview
  1.1 Who uses NetworkX?
  1.2 Goals
  1.3 The Python programming language
  1.4 Free software
  1.5 History
2 Introduction
  2.1 NetworkX Basics
  2.2 Nodes and Edges
3 Graph types
  3.1 Which graph class should I use?
  3.2 Basic graph types
4 Algorithms
  4.1 Approximation
  4.2 Assortativity
  4.3 Bipartite
  4.4 Blockmodeling
  4.5 Boundary
  4.6 Centrality
  4.7 Chordal
  4.8 Clique
  4.9 Clustering
  4.10 Communities
  4.11 Components
  4.12 Connectivity

Exploring Network Structure, Dynamics, and Function Using NetworkX

Proceedings of the 7th Python in Science Conference (SciPy 2008)

Exploring Network Structure, Dynamics, and Function using NetworkX

Aric A. Hagberg ([email protected]), Los Alamos National Laboratory, Los Alamos, New Mexico USA
Daniel A. Schult ([email protected]), Colgate University, Hamilton, NY USA
Pieter J. Swart ([email protected]), Los Alamos National Laboratory, Los Alamos, New Mexico USA

NetworkX is a Python language package for exploration and analysis of networks and network algorithms. The core package provides data structures for representing many types of networks, or graphs, including simple graphs, directed graphs, and graphs with parallel edges and self-loops. The nodes in NetworkX graphs can be any (hashable) Python object and edges can contain arbitrary data; this flexibility makes NetworkX ideal for representing networks found in many different scientific fields. In addition to the basic data structures many graph algorithms are implemented for calculating network properties and structure measures: shortest paths, betweenness centrality, clustering, and degree dis...

... and algorithms, to rapidly test new hypotheses and models, and to teach the theory of networks.

The structure of a network, or graph, is encoded in the edges (connections, links, ties, arcs, bonds) between nodes (vertices, sites, actors). NetworkX provides basic network data structures for the representation of simple graphs, directed graphs, and graphs with self-loops and parallel edges. It allows (almost) arbitrary objects as nodes and can associate arbitrary objects to edges. This is a powerful advantage; the network structure can be integrated with custom objects and data structures, complementing any pre-existing code and allowing network analysis in any application setting without significant software development.
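Two of the kinds of measures the excerpt mentions, shortest paths and a degree-based summary, can be illustrated on a plain adjacency dictionary. This is a minimal stdlib sketch, not NetworkX's implementation; the function names `shortest_path_lengths` and `degree_histogram` and the toy graph are assumptions made for this example.

```python
from collections import Counter, deque


def shortest_path_lengths(adj, source):
    """Breadth-first search: hop counts from source in an unweighted graph."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in dist:          # first visit gives the shortest hop count
                dist[v] = dist[u] + 1
                queue.append(v)
    return dist


def degree_histogram(adj):
    """Map each degree value to the number of nodes having that degree."""
    return Counter(len(neighbours) for neighbours in adj.values())


# Toy undirected graph (made up for illustration); nodes are hashable strings.
adj = {
    "a": {"b"},
    "b": {"a", "c", "d"},
    "c": {"b", "d"},
    "d": {"b", "c", "e"},
    "e": {"d"},
}

dist = shortest_path_lengths(adj, "a")
hist = degree_histogram(adj)
```

Here `dist` maps every node reachable from `"a"` to its hop count, and `hist` counts how many nodes have each degree. Any hashable object could replace the string labels.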

Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: a Social Network Analysis

Fontenele et al., J Inform Tech Softw Eng 2014, 4:2
Journal of Information Technology & Software Engineering
DOI: 10.4172/2165-7866.1000134
ISSN: 2165-7866
Research Article, Open Access

Performing Knowledge Requirements Analysis for Public Organisations in a Virtual Learning Environment: A Social Network Analysis Approach

Fontenele MP1*, Sampaio RB2, da Silva AIB2, Fernandes JHC2 and Sun L1
1 University of Reading, School of Systems Engineering, Whiteknights, Reading, RG6 6AH, United Kingdom
2 Universidade de Brasília, Campus Universitário Darcy Ribeiro, Brasília, DF, 70910-900, Brasil

Abstract

This paper describes an application of Social Network Analysis methods for identification of knowledge demands in public organisations. Affiliation networks established in a postgraduate programme were analysed. The course was executed in a distance education mode and its students worked on public agencies. Relations established among course participants were mediated through a virtual learning environment using Moodle. Data available in Moodle may be extracted using knowledge discovery in databases techniques. Potential degrees of closeness existing among different organisations and among researched subjects were assessed. This suggests how organisations could cooperate for knowledge management and also how to identify their common interests. The study points out that closeness among organisations and research topics may be assessed through affiliation networks. This opens up opportunities for applying knowledge management between organisations and creating communities of practice.

The Hitchhiker's Guide to Graph Exchange Formats

The Hitchhiker’s Guide to Graph Exchange Formats
Prof. Matthew Roughan, [email protected], http://www.maths.adelaide.edu.au/matthew.roughan/
Work with Jono Tuke
UoA, June 4, 2015

Graphs

Graph: G(N, E)
- N = set of nodes (vertices)
- E = set of edges (links)

Often we have additional information, e.g.,
- link distance
- node type
- graph name

Why?

To represent data where “connections” are 1st class objects in their own right
- storing the data in the right format improves access, processing, ...
- it’s natural, elegant, efficient, ...

Many, many datasets
- ISPs: Internode, layer 3 (http://www.internode.on.net/pdf/network/internode-domestic-ip-network.pdf)
- ISPs: Level 3 (NA) (http://www.fiberco.org/images/Level3-Metro-Fiber-Map4.jpg)
- Telegraph submarine cables (http://en.wikipedia.org/wiki/File:1901_Eastern_Telegraph_cables.png)
- Electricity grid
- Bus network (Adelaide CBD)
- French Rail (http://www.alleuroperail.com/europe-map-railways.htm)
- Protocol relationships
- Food web
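The definition from the Graphs slide, a node set N and an edge set E with optional extra information such as link distance, node type, and graph name, can be sketched as a small data structure. The class name `AttributedGraph`, its methods, and the JSON layout below are assumptions made for this illustration; the layout is ad hoc and is not one of the exchange formats covered by the slides.

```python
import json
from dataclasses import dataclass, field


@dataclass
class AttributedGraph:
    """Hypothetical container for G(N, E) plus optional attributes."""

    name: str = ""                               # graph-level information
    nodes: dict = field(default_factory=dict)    # node id -> attribute dict
    edges: dict = field(default_factory=dict)    # (u, v) -> attribute dict

    def add_node(self, node, **attrs):
        self.nodes.setdefault(node, {}).update(attrs)

    def add_edge(self, u, v, **attrs):
        # Endpoints are created on demand so E never refers outside N.
        self.add_node(u)
        self.add_node(v)
        self.edges.setdefault((u, v), {}).update(attrs)

    def to_json(self):
        # Ad hoc layout chosen for this sketch, not a standard format.
        return json.dumps(
            {
                "name": self.name,
                "nodes": [{"id": n, **a} for n, a in self.nodes.items()],
                "edges": [
                    {"source": u, "target": v, **a}
                    for (u, v), a in self.edges.items()
                ],
            },
            sort_keys=True,
        )


g = AttributedGraph(name="toy")
g.add_node("A", type="router")            # node type
g.add_edge("A", "B", distance=3.5)        # link distance (made-up value)
doc = json.loads(g.to_json())
```

Keeping attributes in per-node and per-edge dictionaries means a writer for any concrete exchange format only has to decide how to serialise those dictionaries.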

A Comparative Analysis of Large-Scale Network Visualization Tools

A Comparative Analysis of Large-scale Network Visualization Tools

Md Abdul Motaleb Faysal and Shaikh Arifuzzaman
Department of Computer Science, University of New Orleans, New Orleans, LA 70148, USA.
Email: [email protected], [email protected]

Abstract: Network (Graph) is a powerful abstraction for representing underlying relations and structures in large complex systems. Network visualization provides a convenient way to explore and study such structures and reveal useful insights. There exist several network visualization tools; however, these vary in terms of scalability, analytics features, and user-friendliness. Due to the huge growth of social, biological, and other scientific data, the corresponding network data is also large. Visualizing such large networks poses another level of difficulty. In this paper, we identify several popular network visualization tools and provide a comparative analysis based on the features and operations these tools support. We demonstrate empirically how those tools scale to large networks. We also provide several case studies of visual analytics on large network data and assess performances of the tools.

... scalability for large network analysis. Such a study will help a person decide which tool to use for a specific case based on their needs.

One such study in [3] compares a couple of tools based on scalability. Comparative studies on other visualization metrics should be conducted to give end users freedom in choosing the specific tool they need. In this paper, we have chosen five widely used visualization tools for an empirical and comparative analysis. Our comparisons are based on factors such as supported file formats, scalability in visualization and analysis, interacting capability with the drawn network, end user-friendliness (e.g., users with no programming back...

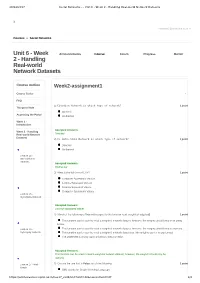

Week 2 - Handling Real-World Network Datasets

Social Networks, Unit 6: Week 2 - Handling Real-world Network Datasets (28/12/2017)
https://onlinecourses.nptel.ac.in/noc17_cs41/unit?unit=42&assessment=47

Course outline
- Course Trailer
- FAQ
- Things to Note
- Accessing the Portal
- Week 1 - Introduction
- Week 2 - Handling Real-world Network Datasets
  - Lecture 14 - Introduction to Datasets
  - Lecture 15 - Ingredients Network
  - Lecture 16 - Synonymy Network
  - Lecture 17 - Web Graph

Week2-assignment1

1) Citation Network is which type of network? (1 point)
- Directed
- Undirected
Accepted Answers: Directed

2) Co-authorship Network is which type of network? (1 point)
- Directed
- Undirected
Accepted Answers: Undirected

3) What is the full form of CSV? (1 point)
- Computer Systematic Version
- Comma Separated Version
- Comma Separated Values
- Computer Systematic Values
Accepted Answers: Comma Separated Values

4) Which of the following is True with respect to the function read_weighted_edgelist()? (1 point)
- This function can be used to read a weighted network dataset, however, the weights should only be in string format.
- This function can be used to read a weighted network dataset, however, the weights should only be numeric.
- This function can be used to read a weighted network dataset and the weights can be in any format.
- The statement is wrong; such a function does not exist.
Accepted Answers: This function can be used to read a weighted network dataset, however, the weights should only be numeric.

5) Choose the one that is False out of the following: (1 point)
- GML stands for Graph Modeling Language.
- GML stores the data in the form of tags just like XML.
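The accepted answer to question 4, that weights in a weighted edge list must be numeric, can be illustrated with a small stdlib parser. This is a sketch only and not NetworkX's `read_weighted_edgelist()`; the function name `parse_weighted_edgelist`, the whitespace-separated "u v weight" layout, and the `#` comment marker are assumptions chosen for this example.

```python
def parse_weighted_edgelist(lines, comment="#"):
    """Parse 'u v weight' lines into (u, v, float_weight) tuples.

    Hypothetical helper for illustration; raises ValueError when a line
    does not have three fields or when the weight is not numeric.
    """
    edges = []
    for lineno, raw in enumerate(lines, start=1):
        line = raw.split(comment, 1)[0].strip()   # drop comments and padding
        if not line:
            continue                              # skip blank lines
        parts = line.split()
        if len(parts) != 3:
            raise ValueError(f"line {lineno}: expected 'u v weight', got {raw!r}")
        u, v, w = parts
        edges.append((u, v, float(w)))            # float() rejects non-numeric text
    return edges


sample = ["# source target weight", "a b 1.5", "b c 2", ""]
edges = parse_weighted_edgelist(sample)

try:
    parse_weighted_edgelist(["a b heavy"])
    rejected = False
except ValueError:
    rejected = True
```

Converting every weight with `float()` is what makes a non-numeric weight such as `heavy` fail early instead of surfacing later in a weighted algorithm.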

COMM 645 Handout - NodeXL Basics

COMM 645 HANDOUT - NODEXL BASICS

NodeXL: Network Overview, Discovery and Exploration for Excel.
- Download from nodexl.codeplex.com
- Plugin for social media/Facebook import: socialnetimporter.codeplex.com
- Plugin for Microsoft Exchange import: exchangespigot.codeplex.com
- Plugin for Voson hyperlink network import: voson.anu.edu.au/node/13#VOSON-NodeXL

Note that NodeXL requires MS Office 2007 or 2010. If your system does not support those (or you do not have them installed), try using one of the computers in the PhD office.

Major sections within NodeXL:
- Edges Tab: Edge list (Vertex 1 = source, Vertex 2 = destination) and attributes (Fig.1→1a)
- Vertices Tab: Nodes and attributes (nodes can be imported from the edge list) (Fig.1→1b)
- Groups Tab: Groups of nodes defined by attribute, clusters, or components (Fig.1→1c)
- Groups Vertices Tab: Nodes belonging to each group (Fig.1→1d)
- Overall Metrics Tab: Network and node measures & graphs (Fig.1→1e)

Figure 1: The NodeXL Interface (callouts 1 to 14 and 1a to 1e)

Download more network handouts at www.kateto.net / www.ognyanova.net

After you install the NodeXL template, a new NodeXL tab will appear in your Excel interface. The following features will be available in it:

Fig.1 → 1: Switch between different data tabs. The most important two tabs are "Edges" and "Vertices".

Fig.1 → 2: Import data into NodeXL. The formats you can use include GraphML, UCINET DL files, and Pajek .net files, among others. You can also import data from social media: Flickr, YouTube, Twitter, Facebook (requires a plugin), or hyperlink networks (requires a plugin).