A Unified Memory Network Architecture for In-Memory

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Hypertransport Extending Technology Leadership

HyperTransport Extending Technology Leadership International HyperTransport Symposium 2009 February 11, 2009 Mario Cavalli General Manager HyperTransport Technology Consortium Copyright HyperTransport Consortium 2009 HyperTransport Extending Technology Leadership HyperTransport and Consortium Snapshot Industry Status and Trends HyperTransport Leadership Role February 11, 2009 Mario Cavalli General Manager HyperTransport Technology Consortium Copyright HyperTransport Consortium 2009 HyperTransport Snapshot Low Latency, High Bandwidth, High Efficiency Point-to-Point Interconnect Leadership CPU-to-I/O CPU-to-CPU CPU-to-Coprocessor Copyright HyperTransport Consortium 2009 Adopted by Industry Leaders in Widest Range of Applications than Any Other Interconnect Technology Copyright HyperTransport Consortium 2009 Snapshot Formed 2001 Controls, Licenses, Promotes HyperTransport as Royalty-Free Open Standard World Technology Leaders among Commercial and Academic Members Newly Elected President Mike Uhler VP Accelerated Computing Advanced Micro Devices Copyright HyperTransport Consortium 2009 Industry Status and Trends Copyright HyperTransport Consortium 2009 Global Economic Downturn Tough State of Affairs for All Industries Consumer Markets Crippled with Long-Term to Recovery Commercial Markets Strongly Impacted Copyright HyperTransport Consortium 2009 Consequent Business Focus Cost Effectiveness No Redundancy Frugality Copyright HyperTransport Consortium 2009 Downturn Breeds Opportunities Reinforced Need for More Optimized, Cost-Effective Computing -

Introduction to AGP-8X

W H I T E P A P E R Introduction to AGP-8X Allan Chen Jessie Johnson Angel Suhrstedt ADVANCED MICRO DEVICES, INC. One AMD Place Sunnyvale, CA 94088 Page 1 Introduction to AGP-8X May 10, 2002 W H I T E P A P E R Background Tremendous efforts have been made to improve computing system performance through increases in CPU processing power; however, I/O bottlenecks throughout the computing platform can limit the system performance. To eliminate system bottlenecks, AMD has been working with industry leaders to implement innovative technologies including AGP, DDR SDRAM, USB2.0, and HyperTransport™ technology. This white paper will provide an overview of the latest graphics subsystem innovation: AGP-8X. Introduction The Accelerated Graphics Port (AGP) was developed as a high-performance graphics interface. It alleviates the PCI graphics bottleneck by providing high bandwidth throughput and direct access to system memory. The new AGP3.0 specification adds an 8X mode definition, which doubles the maximum data transfer rate from the previous high reached with AGP-4X by doubling the amount of data transferred per AGP bus cycle. Figure 1 shows the graphic interface bandwidth performance evolution from PCI to AGP-8X. In this figure, AGP3.0 refers to the specific AGP interface specification. AGP-1X, AGP-2X, AGP-4X and AGP-8X represent the data transfer speed mode. Graphics Interface Peak Bandwidth 2500 2000 1500 1000 Bandwidth (MB/s) 500 0 PCI AGP1X AGP2X AGP4X AGP8X AGP 1.0 Specification AGP 2.0 Specification AGP 3.0 Specification Figure 1: Available bandwidth of different graphic interfaces. -

AMD Opteron™ 4000 Series Platform Quick Reference Guide

AMD Opteron™ 4000 Series Platform Quick Reference Guide The AMD Opteron™ 4100 Series processor, the world’s lowest power per core processor1, sets the foundation for cloud workloads and affordability for mainstream infrastructure servers with prices starting at $992. AMD Opteron™ 4100 Series Processor END USER BENEFITS Outstanding Performance-Per-Watt > Designed from the ground up to handle demanding server workloads at the lowest available energy draw, beating the competition by as much as 40% (per/core).1 Business Value > The world’s first 1P and 2P capable processor at sub $100 pricing.2 Easy to Purchase and Operate > Scalable solutions with feature, component and platform consistency. PRODUCT FEATURES New AMD-P 2.0 Power Savings Features: > Ultra-low power platforms provide power efficiency beyond just the processor, 3 SOUNDBITE for both 1P and 2P server configurations. THE WORLD’S LOWEST POWER PER CORE SERVER PROCESSOR1 > APML (Advanced Platform Management Link)4 provides an interface for processor and systems management monitoring and controlling of system resources such as platform power Quick Features consumption via p-state limits and CPU thermals to closely monitor power and cooling. AMD-P 2.0: > Link Width PowerCap which changes all 16-bit links to 8-bit links5 can help power conscious Ultra-low power platform > customers improve performance-per-watt. > Advanced Platform Management Link (APML)4 > AMD CoolSpeed Technology reduces p-states when a temperature limit is reached to allow a > Link Width PowerCap server to operate if the processor’s thermal environment exceeds safe operational limits. > AMD CoolSpeed Technology > When C1E5,6 is enabled, the cores, southbridge and memory controller enter a sleep state that > C1E6 can equate to significant power savings in the datacenter depending on system configuration. -

An FPGA Based Verification Platform for Hypertransport 3.X

An FPGA based Verification Platform for HyperTransport 3.x Heiner Litz Holger Froening Maximilian Thuermer Ulrich Bruening University of Heidelberg Computer Architecture Group Germany {heiner.litz, holger.froening, ulrich.bruening}@ziti.uni-heidelberg.de ABSTRACT ware (HW/SW) co-verification Field Programmable Gate In this paper we present a verification platform designed Arrays (FPGAs) have several advantages compared to for HyperTransport 3.x (HT3) applications. It is intended to Electronic Design Automation (EDA) tools. FPGAs allow be used in computing environments in which it is directly to run prototypes in real world environments, in which both connected over a HyperTransport link to the main CPUs. hardware modules and software layers can be verified in an No protocol conversions or intermediate bridges are neces- early prototyping stage. However, the drawback is that sary, which results in a very low latency. An FPGA tech- FPGA-based verification platforms have to be specially nology is chosen due to of its reconfigurability. The main designed for certain applications, in particular if the appli- challenge of this work is the implementation of an HT3 cation is based on a new technology. link using FPGA technology. We provide a design space The verification platform presented here connects to a exploration in order to find the most suitable I/O technol- HyperTransport (HT) link. HyperTransport 2.x (HT2) ogy. This includes verification of the HyperTransport-spe- [1][2] is an established technology for chip-to-chip connec- cific signal levelling, the development of an I/O tions offering a very low latency and a high bandwidth. It is architecture supporting a link width of up to 16 lanes with used in all major AMD processors, including the Opteron source synchronous clocking and a clocking scheme to [3]. -

AMD-XXXX(Tm) Device Data Sheet

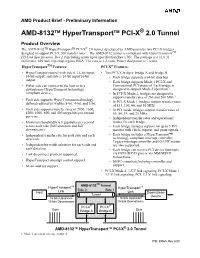

AMD Product Brief - Preliminary Information AMD-8132TM HyperTransportTM PCI-X® 2.0 Tunnel Product Overview The AMD-8132TM HyperTransportTM PCI-X® 2.0 tunnel developed by AMD provides two PCI-X bridges designed to support PCI-X 266 transfer rates1.. The AMD-8132 tunnel is compliant with HyperTransportTM I/O Link Specification, Rev 2.0 including errata up to specification Rev 1.05c. The package is a 31 x 31 millimeter, 829 ball, flip-chip organic BGA. The core is 1.2 volts. Power dissipation is 7 watts. HyperTransportTM Features: PCI-X® Features: • HyperTransport tunnel with side 0, 16-bit input/ • Two PCI-X bridges: bridge A and bridge B. 16-bit output; and side 1, 16-bit input/16-bit • Each bridge supports a 64-bit data bus. output. • Each bridge supports Mode 1 PCI-X and • Either side can connect to the host or to a Conventional PCI protocol. Each bridge is downstream HyperTransport technology designed to support Mode 2 operation.1. compliant device. • In PCI-X Mode 2, bridges are designed to support transfer rates of 266 and 200 MHz.1. • Each side supports HyperTransport technology- defined reduced bit widths: 8-bit, 4-bit, and 2-bit. • In PCI-X Mode 1, bridges support transfer rates of 133, 100, 66, and 50 MHz. • Each side supports transfer rates of 2000, 1600, • In PCI mode, bridges support transfer rates of 1200, 1000, 800, and 400 mega-bits per second 66, 50, 33, and 25 MHz. per wire. • Independent transfer rates and operational • Maximum bandwidth is 8 gigabytes per second modes for each bridge. -

Dell EMC Poweredge R240 Technical Guide

Dell EMC PowerEdge R240 Technical Guide Regulatory Model: E57S Series Regulatory Type: E57S001 June 2021 Rev. A03 Notes, cautions, and warnings NOTE: A NOTE indicates important information that helps you make better use of your product. CAUTION: A CAUTION indicates either potential damage to hardware or loss of data and tells you how to avoid the problem. WARNING: A WARNING indicates a potential for property damage, personal injury, or death. © 2018 2021 Dell Inc. or its subsidiaries. All rights reserved. Dell, EMC, and other trademarks are trademarks of Dell Inc. or its subsidiaries. Other trademarks may be trademarks of their respective owners. Contents Chapter 1: Product overview......................................................................................................... 5 Introduction...........................................................................................................................................................................5 New technologies................................................................................................................................................................ 5 Chapter 2: System features...........................................................................................................7 Product comparison............................................................................................................................................................ 7 Product specifications........................................................................................................................................................8 -

2.2 Memory Hierarchies for Reconfigurable

Computer Engineering 2010 Mekelweg 4, 2628 CD Delft The Netherlands http://ce.et.tudelft.nl/ MSc THESIS General Purpose Computing with Reconfigurable Acceleration Anthony Arthur Courtenay Brandon Abstract In this thesis we describe a new generic approach for accelerating software functions using a reconfigurable device connected through a high-speed link to a general purpose system. In order for our solu- tion to be generic, as opposed to related ISA extension approaches, we insert system calls into the original program to control the re- configurable accelerator using a compiler plug-in. We define specific mechanisms for the communication between the reconfigurable de- vice, the host general purpose processor and the main memory. The reconfigurable device is controlled by the host through system calls provided by the device driver, and initiates communication by raising interrupts; it further has direct accesses to the main memory (DMA) operating in the virtual address space. To do so, the reconfigurable device supports address translation, while the driver serves the de- vice interrupts, ensures that shared data in the host-cache remain coherent, and handles memory protection and paging. The system is implemented using a machine which provides a HyperTransport CE-MS-2010-28 bus connecting a Xilinx Virtex4-100 FPGA to the host. We evaluate alternative design choices of our proposal using an AES application and accelerating its most computationally intensive function. Our experimental results show that our solution is up to 5 faster than × software -

Unlocking Bandwidth for Gpus in CC-NUMA Systems

Appears in the Proceedings of the 2015 International Symposium on High Performance Computer Architecture (HPCA) Unlocking Bandwidth for GPUs in CC-NUMA Systems Neha Agarwalz ∗ David Nellansy Mike O’Connory Stephen W. Kecklery Thomas F. Wenischz University of Michiganz NVIDIAy [email protected], fdnellans,moconnor,[email protected], [email protected] Abstract—Historically, GPU-based HPC applications have had a substantial memory bandwidth advantage over CPU-based workloads due to using GDDR rather than DDR memory. How- ever, past GPUs required a restricted programming model where 0 application data was allocated up front and explicitly copied into GPU memory before launching a GPU kernel by the programmer. Recently, GPUs have eased this requirement and now can employ !"#$% &'! on-demand software page migration between CPU and GPU memory to obviate explicit copying. In the near future, CC- NUMA GPU-CPU systems will appear where software page migration is an optional choice and hardware cache-coherence can also support the GPU accessing CPU memory directly. 0 In this work, we describe the trade-offs and considerations in relying on hardware cache-coherence mechanisms versus using software page migration to optimize the performance of memory- $ "#( %#)$*# &!#+# # intensive GPU workloads. We show that page migration decisions based on page access frequency alone are a poor solution and that a broader solution using virtual address-based program locality to enable aggressive memory prefetching combined with bandwidth balancing is required to maximize performance. We present a software runtime system requiring minimal hardware support that, on average, outperforms CC-NUMA-based accesses by 1.95×, performs 6% better than the legacy CPU to GPU ,$$ "# # # - %.%# memcpy regime by intelligently using both CPU and GPU # #/'* memory bandwidth, and comes within 28% of oracular page placement, all while maintaining the relaxed memory semantics Fig. -

AMD Opteron™ 3300 Series Processor Real-World Performance

AMD Opteron™ 3300 Series processor Real-World Performance. Real-World Value. Do the Math. Quick Reference Guide AMD Opteron™ 3300 Series processor Entry level, enterprise-class servers at desktop pricing – a single-socket, money-in-pocket server solution What’s New? Second generation of AMD’s modular core architecture – codenamed “Piledriver” 13% higher performance expected1 New 25W EE power band offering at 6.25W/core Product Specifications Model Core Base Frequency/ AMD Turbo AMD Turbo Power Max L2 Cache L3 Cache Number Count North Bridge CORE CORE Band DDR3 speed Frequency P1 Frequency P0 2.6 GHz/ 3380 8 2.9 GHz 3.6 GHz 65W 1866 Mhz 4 x 2M 8M 2.0 GHz 2.8 GHz/ 3350 HE 4 3.1 GHz 3.8 GHz 45W 1866 Mhz 2 x 2M 8M 2.0 GHz 1.9 GHz/ 3320 EE 4 2.1 GHz 2.5 GHz 25W 1333 Mhz 2 x 2M 8M 1.4 GHz 1 Estimate based on preliminary SPECint®_rate2006 results in AMD labs as of October 2012 comparing AMD Opteron™ processor Models 3380 and 3280. SVR-310. AMD Opteron™ 3300 Series Processor Specifications Cache Sizes Total Cache: 16MB (8 core), 12MB (4 core) AMD SP5100 Southbridge Product Specifications L2 Cache: up to 8MB total USB Ports 12 USB 2.0 + 2 USB 1.1 L3 Cache: up to 8MB total PCI Bus Support PCI rev 2.3 Processor Technology 32nm technology Serial ATA AHCI 1.1 SATA 3.0Gb/s with SW RAID Support HyperTransport™ 1x HT3 at up to 5.2GT/s* per link SATA Ports 6 (can be independently disabled) Technology Links Max TDP/Idle 4W/1W Memory Integrated DDR3 memory controller with up to 32 GB memory per socket Process Technology TSMC .13um Number of Channels/ Dual Channel DDR3 ECC UDIMM, SODIMM support; Package 528 ball FCBGA, 21x21mm, 0.8mm pitch Types of Memory 1333, 1600, 1866* MHz memory speeds * Die Size 315 mm2 Package AM3+ *1866MHz supported only with a single physical DIMM per memory channel in AMD Opteron processor models 3350 HE and 3380. -

Chapter 4 Review CPU

For the A+ Certification exams, you should be able to identify the names and characteristics of Intel and AMD CPUs as well as select the appropriate CPU for an upgrade. Practice 4.1 Fill in the blanks for the statements about programming. assembly high-level instructions registers compiler instruction set RAM 1. The __ programming language is one step above 1. machine language. 2. C++ is an example of a(n) __ language. 2. 3. The commands a CPU follows are called 3. 4. Each processor has a set of commands called a(n) __. 4. 5. A(n) __ translates a high-level language into machine 5. language. 6. The small pockets of memory used to store data that is 6. being processed by the CPU are called __. Practice 4.2 Match the CPU part to its definition. Not all of the definitions will be used. 1. __ arithmetic logic unit (ALU) a. Decodes instructions and data and transmits the data to other areas in an understandable format. 2. bus unit b. Contains many separate, smaller storage units. 3. cache c. Network of circuitry that connects all the other major 4. control unit components together, accepts data, and sends data 5. decode unit through the input and output bus sections. 6. __ register unit d. Small pocket of memory. e. Controls the overall operation of the cpu. f. Performs mathematical functions on data stored in the register area. g. Small temporary memory area that separates and stores incoming data and instructions. Copyright by Goodheart-Willcox Co., Inc. 27 Practice 4.3 Fill in the blanks for the statements about CPU enhancements. -

Hypertransport Und PCI Express Moderne Systeme Verlangen Schnelle I/O-Verbindungen (Teil 1)

BAUELEMENTE HyperTransport und PCI Express Moderne Systeme verlangen schnelle I/O-Verbindungen (Teil 1) Siegfried W. Best, Redaktion elektronik industrie ICs werden immer schneller und komplexer. Prozessoren arbei- ten heute mit 3 GHz, werden bald aber die 10-GHz-Marke überschreiten und mehr als 1 Mrd. Transistoren beinhalten. Die Geschwindigkeit der Speicher wird sich verdoppeln oder gar vervierfachen. Die zunehmenden Geschwindigkeitsanforde- rungen führen zur Ablösung paralleler Verbindungsbusse und zu seriellen I/O-Lösungen sowohl auf Chip- wie auch auf Boardebene wie die im Folgenden betrachteten HyperTrans- port und PCI Express. ie Notwendigkeit neuer schneller len nennen gar 38 Mio. Verbindungstechnologien wurde vor Z. Z. sind über 45 Hyper- D Jahren erkannt und mit Erfolg wur- Transport-fähige ICs ver- den Systeme entwickelt, die den Fla- fügbar. Angefangen von schenhals-Bussysteme beseitigen helfen. Da den 32- und 64-Bit-Pro- gibt es den von Intel favorisierten PCI Ex- zessoren von AMD (Op- HyperTransport-Verbindung im Vergleich mit AGP-Routing. press als Nachfolger von PCI und seinen teron, Athlon64), Broad- schnelleren Derivaten sowie das stark von com, PMC-Sierra MIPS) und Transmeta und verbessern kann und dies unter Beibe- AMD gepuschte HyperTransport Consorti- (Efficeon T8400) über Core-Logik und haltung der Kompatibilität zur PCI-I/O-Tech- um. Denkt man an die nächste Generation South/North-Bridges von AMD, Ali, Nvidia nologie. HyperTransport bietet unter Ver- von Telekomgeräten sind weiter zu nennen und VIA bis hin zu HyperTransport-Bridges wendung einfach herzustellender einseitig (zu anderen I/O-Systemen) von AMD, Allian- gerichteter Punkt-zu-Punkt-Zweidrahtver- ce Semi und PLX. Auch bedeutende FPGA- bindungen (Links) eine Transferrate von Hersteller wie Altera und Xilinx bieten IPs 1600 Mbit/s pro Link. -

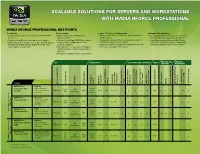

Scalable Solutions for Servers and Workstations with Nvidia Nforce Professional

SCALABLE SOLUTIONS FOR SERVERS AND WORKSTATIONS WITH NVIDIA NFORCE PROFESSIONAL NVIDIA NFORCE PROFESSIONAL KEY POINTS Scalability Connectivity Lower Total Cost of Ownership Advanced Networking • Same architecture for workstations and 1P to 8P+ • Highest throughput PCI Express • Advanced reliability, availability, and serviceability • Native Gigabit Ethernet solution with lowest servers implementation (RAS) features CPU Utilization and highest performance • Support for multi-core, multi-processor designs • Support for multiple SATA 3Gb/s drives, • Designed to enable smaller, low-power systems • Load Balancing and Failover (LBFO) with • Highly integrated, feature-rich architecture integrates multiple RAID configurations and including rack and blade designs TCP/IP Acceleration for greater bandwidth, PCI Express (PCIe), Gigabit Ethernet, SATA, and hotplug capability • Support for IMPI 2.0 remote management software system performance, and fault tolerance legacy I/O on a single chip • NVIDIA® SLI™ Technology for intelligent • Enclosure management features and transparent scaling of workstation graphics • Integrated USB and legacy connectivity AVANCED RAS ADVANCED CPU CONNECTIVITY OS SUPPORT AND SOFTWARE AUDIO FEATURES NETWORKING ® ™ ® Windows ™ ® Capable ™ Product Ideal for Processor Supported Socket Supported FSB Speed Memory Support PCI Express Support NVIDIA SLI Technology** 3.0/PATA SATA Drive Support MediaShield supported RAID Configurations USB 2.0 and Legacy support Support for popular Linux installs Support for existing Microsoft Windows