Efficient Digital Color Image Demosaicing Directly to YCbCr 4:2:0

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Color Models

Color Models, Jian Huang, CS456

Main Color Spaces
• CIE XYZ, xyY
• RGB, CMYK
• HSV (Munsell, HSL, IHS)
• Lab, UVW, YUV, YCrCb, Luv

Differences in Color Spaces
• What is the use? For display, editing, computation, compression, …?
• Several key (very often conflicting) features may be sought after:
  – Additive (RGB) or subtractive (CMYK)
  – Separation of luminance and chromaticity
  – Equal distances between colors are equally perceptible

CIE Standard
• CIE: International Commission on Illumination (Commission Internationale de l'Éclairage).
• Human-perception-based standard (1931), established with a color matching experiment
• Standard observer: a composite of a group of 15 to 20 people

CIE Experiment Result
• Three pure light sources: R = 700 nm, G = 546 nm, B = 436 nm.

CIE Color Space
• 3 hypothetical light sources, X, Y, and Z, which yield positive matching curves
• Y: roughly corresponds to the luminous efficiency characteristic of the human eye

CIE xyY Space
• Irregular 3D volume shape is difficult to understand
• Chromaticity diagram (the same color at varying intensity, Y, should all end up at the same point)

Color Gamut
• The range of colors that a display device can represent

RGB (monitors)
• The de facto standard

The RGB Cube
• RGB color space is perceptually non-linear
• RGB space is a subset of the colors humans can perceive
• Con: what is 'bloody red' in RGB?

CMY(K): printing
• Cyan, Magenta, Yellow (Black): CMY(K)
• A subtractive color model

| Dye color | Absorbs | Reflects |
|---|---|---|
| Cyan | red | blue and green |
| Magenta | green | blue and red |
| Yellow | blue | red and green |
| Black | all | none |

RGB and CMY
• Converting between RGB and CMY

HSV
• This color model is based on polar coordinates, not Cartesian coordinates.
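The slides name the RGB/CMY conversion and the HSV model without giving formulas. A minimal sketch, assuming channel values normalized to [0, 1]: CMY is the complement of RGB, the CMYK variant uses the common naive gray-component (black) extraction (real print pipelines rely on device color profiles instead), and HSV comes from Python's standard `colorsys` module. The function names are illustrative, not from the slides.

```python
import colorsys


def rgb_to_cmy(r: float, g: float, b: float) -> tuple:
    """CMY is the subtractive complement of normalized RGB: C = 1 - R, M = 1 - G, Y = 1 - B."""
    return (1.0 - r, 1.0 - g, 1.0 - b)


def cmy_to_rgb(c: float, m: float, y: float) -> tuple:
    """Inverse mapping: R = 1 - C, G = 1 - M, B = 1 - Y."""
    return (1.0 - c, 1.0 - m, 1.0 - y)


def rgb_to_cmyk(r: float, g: float, b: float) -> tuple:
    """Naive CMYK: move the gray component shared by C, M, Y into K (black)."""
    k = 1.0 - max(r, g, b)
    if k >= 1.0:  # pure black; avoids dividing by zero below
        return (0.0, 0.0, 0.0, 1.0)
    scale = 1.0 - k
    return ((1.0 - r - k) / scale, (1.0 - g - k) / scale, (1.0 - b - k) / scale, k)


if __name__ == "__main__":
    print(rgb_to_cmy(1.0, 0.0, 0.0))           # red: no cyan, full magenta and yellow
    print(rgb_to_cmyk(0.5, 0.5, 0.5))          # mid gray: black ink only
    print(colorsys.rgb_to_hsv(1.0, 0.0, 0.0))  # hue 0, full saturation and value
```

The red example matches the dye table: magenta absorbs green and yellow absorbs blue, so together they reflect only red.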

An Improved SPSIM Index for Image Quality Assessment

Symmetry (article)
Mariusz Frackiewicz*, Grzegorz Szolc and Henryk Palus
Department of Data Science and Engineering, Silesian University of Technology, Akademicka 16, 44-100 Gliwice, Poland
* Correspondence; Tel.: +48-32-2371066

Abstract: Objective image quality assessment (IQA) measures are playing an increasingly important role in the evaluation of digital image quality. New IQA indices are expected to be strongly correlated with subjective observer evaluations expressed by Mean Opinion Score (MOS) or Difference Mean Opinion Score (DMOS). One such recently proposed index is the SuperPixel-based SIMilarity (SPSIM) index, which uses superpixel patches instead of a rectangular pixel grid. The authors of this paper have proposed three modifications to the SPSIM index. First, the color space used by SPSIM was changed; second, the way SPSIM determines similarity maps was modified using methods derived from an algorithm for computing the Mean Deviation Similarity Index (MDSI); the third modification was a combination of the first two. These three new quality indices were used in the assessment process. The experimental results obtained for many color images from five image databases demonstrated the advantages of the proposed SPSIM modifications.

Keywords: image quality assessment; image databases; superpixels; color image; color space; image quality measures

Citation: Frackiewicz, M.; Szolc, G.; Palus, H. An Improved SPSIM Index for Image Quality Assessment. Symmetry 2021, 13, 518. https://doi.org/10.3390/sym13030518

1. Introduction
The quantitative dominance of acquired color images over gray-level images has driven the development not only of color image processing methods but also of Image Quality Assessment (IQA) methods.

Package 'magick'

Package ‘magick’, August 18, 2021
Type: Package
Title: Advanced Graphics and Image-Processing in R
Version: 2.7.3
Description: Bindings to 'ImageMagick': the most comprehensive open-source image processing library available. Supports many common formats (png, jpeg, tiff, pdf, etc) and manipulations (rotate, scale, crop, trim, flip, blur, etc). All operations are vectorized via the Magick++ STL meaning they operate either on a single frame or a series of frames for working with layers, collages, or animation. In RStudio images are automatically previewed when printed to the console, resulting in an interactive editing environment. The latest version of the package includes a native graphics device for creating in-memory graphics or drawing onto images using pixel coordinates.
License: MIT + file LICENSE
URL: https://docs.ropensci.org/magick/ (website) https://github.com/ropensci/magick (devel)
BugReports: https://github.com/ropensci/magick/issues
SystemRequirements: ImageMagick++: ImageMagick-c++-devel (rpm) or libmagick++-dev (deb)
VignetteBuilder: knitr
Imports: Rcpp (>= 0.12.12), magrittr, curl
LinkingTo: Rcpp
Suggests: av (>= 0.3), spelling, jsonlite, methods, knitr, rmarkdown, rsvg, webp, pdftools, ggplot2, gapminder, IRdisplay, tesseract (>= 2.0), gifski
Encoding: UTF-8
RoxygenNote: 7.1.1
Language: en-US
NeedsCompilation: yes
Author: Jeroen Ooms [aut, cre] (<https://orcid.org/0000-0002-4035-0289>)
Maintainer: Jeroen Ooms
Repository: CRAN
Date/Publication: 2021-08-18 10:10:02 UTC

R topics documented: analysis, animation, as_EBImage, attributes, autoviewer, coder_info, color, composite, defines, device, edges, editing, effects, fx, geometry, image_ggplot

COLOR SPACE MODELS for VIDEO and CHROMA SUBSAMPLING

Color space
A color model is an abstract mathematical model describing the way colors can be represented as tuples of numbers, typically as three or four values or color components (e.g. RGB and CMYK are color models). However, a color model with no associated mapping function to an absolute color space is a more or less arbitrary color system with little connection to the requirements of any given application. Adding a certain mapping function between the color model and a certain reference color space results in a definite "footprint" within the reference color space. This "footprint" is known as a gamut, and, in combination with the color model, defines a new color space. For example, Adobe RGB and sRGB are two different absolute color spaces, both based on the RGB model.

In the most generic sense of the definition above, color spaces can be defined without the use of a color model. These spaces, such as Pantone, are in effect a given set of names or numbers which are defined by the existence of a corresponding set of physical color swatches. This article focuses on the mathematical model concept.

Understanding the concept
Most people have heard that a wide range of colors can be created by the primary colors red, blue, and yellow, if working with paints. Those colors then define a color space. We can specify the amount of red color as the X axis, the amount of blue as the Y axis, and the amount of yellow as the Z axis, giving us a three-dimensional space, wherein every possible color has a unique position.
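The heading refers to chroma subsampling, which is also the target format in this document's title (YCbCr 4:2:0). A minimal sketch, assuming 8-bit full-range RGB and the JFIF (BT.601-derived) YCbCr coefficients; broadcast video instead uses limited-range coding and BT.709 or BT.2020 coefficients. The 4:2:0 step simply averages each 2×2 chroma block, which implies centered chroma siting; other siting conventions exist. Function names are illustrative.

```python
import numpy as np


def rgb_to_ycbcr_full(rgb):
    """JFIF-style full-range BT.601 conversion for an H x W x 3 RGB array (0-255)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128.0 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128.0 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def subsample_420(chroma):
    """4:2:0: keep one chroma sample per 2x2 block of luma samples (block mean)."""
    h, w = chroma.shape
    if h % 2 or w % 2:
        raise ValueError("this sketch expects even image dimensions")
    return chroma.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))


def to_ycbcr420(rgb):
    """Full-resolution Y plus quarter-resolution Cb and Cr planes."""
    y, cb, cr = rgb_to_ycbcr_full(rgb)
    return y, subsample_420(cb), subsample_420(cr)
```

For a 1920×1080 frame this keeps 1920×1080 luma samples but only 960×540 samples per chroma plane, i.e. half the raw sample count of 4:4:4.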

Camera Raw Workflows

RAW WORKFLOWS: FROM CAMERA TO POST
Copyright 2007, Jason Rodriguez, Silicon Imaging, Inc.

Introduction
• What is a RAW file format, and what cameras shoot to these formats?
• How does working with RAW file-format cameras change the way I shoot?
• What changes are happening inside the camera I need to be aware of, and what happens when I go into post?
• What are the available post paths? Is there just one, or are there many ways to reach my end goals?
• What post tools support RAW file format workflows?
• How do RAW codecs like CineForm RAW enable me to work faster and with more efficiency?

What is a RAW file?
• In simplest terms, it is the native digital data off the sensor's A/D converter, with no further destructive DSP processing applied
• Derived from a photometrically linear data source, or can be reconstructed to produce data that directly correspond to the light captured by the sensor at the time of exposure (i.e., LOG->Lin reverse LUT)
• Photometrically linear: a 1:1 relationship between photons and digital values; doubling the light doubles the digitally encoded value

What is a RAW file?
• In film terms it would be called a "digital negative", because it is a latent representation of the light captured by the sensor (up to the limit of the sensor's full-well capacity)
• "RAW" cameras include Thomson Viper, Arri D-20, Dalsa Evolution 4K, Silicon Imaging SI-2K, Red One, Vision Research Phantom, noXHD, Reel-Stream
• "Quasi-RAW" cameras include the Panavision Genesis

In-Camera Processing
• Most non-RAW cameras on the market record to 8-bit YUV formats
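The slide mentions reconstructing linear data with a LOG->Lin reverse LUT. The sketch below builds such a LUT for a hypothetical pure-log 10-bit curve spanning 12 stops (an assumption for illustration, not any camera vendor's actual curve) and shows the photometrically linear property after decoding: doubling the light roughly doubles the decoded value, up to code-value quantization.

```python
import numpy as np

# Hypothetical pure-log encoding (NOT a real camera curve):
#   code = (2**BITS - 1) * log2(lin / LIN_MIN) / STOPS
BITS = 10
STOPS = 12.0
LIN_MIN = 2.0 ** -STOPS  # chosen so the top code value decodes to linear 1.0


def log_encode(lin):
    """Encode linear light into integer log code values (clipped to the curve's range)."""
    lin = np.clip(np.asarray(lin, dtype=float), LIN_MIN, LIN_MIN * 2.0 ** STOPS)
    return np.round((2 ** BITS - 1) * np.log2(lin / LIN_MIN) / STOPS).astype(np.int32)


def build_reverse_lut():
    """LOG -> lin reverse LUT: one linear value for every possible code value."""
    codes = np.arange(2 ** BITS, dtype=float)
    return LIN_MIN * 2.0 ** (STOPS * codes / (2 ** BITS - 1))


LUT = build_reverse_lut()


def log_to_linear(codes):
    """Decode log code values by table lookup."""
    return LUT[np.asarray(codes, dtype=np.int64)]
```

A table lookup is used because decoding then costs one memory access per sample, which is why reverse LUTs are common in post tools; each stop occupies about 85 code values in this hypothetical 10-bit, 12-stop curve.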

Robust Pulse-Rate from Chrominance-Based rPPG, Gerard de Haan and Vincent Jeanne

IEEE Transactions on Biomedical Engineering, vol. ?, no. ?, month 2013
Gerard de Haan and Vincent Jeanne

Abstract: Remote photoplethysmography (rPPG) enables contactless monitoring of the blood volume pulse using a regular camera. Recent research focused on improved motion robustness, but the proposed blind source separation (BSS) techniques in RGB color space show limited success. We present an analysis of the motion problem, from which far superior chrominance-based methods emerge. For a population of 117 stationary subjects, we show our methods to perform in 92% good agreement (±1.96σ) with contact PPG, with RMSE and standard deviation both a factor of two better than BSS-based methods. In a fitness setting using a simple spectral peak detector, the obtained pulse-rate for modest motion (bike) improves from 79% to 98% correct, and for vigorous motion (stepping) from less than 11% to more than 48% correct. We expect the greatly improved robustness to considerably widen the application scope of the technology.

... PPG signal into two independent signals built as a linear combination of two color channels [8]. One combination approximated the clean pulse-signal, the other the motion artifact, and the energy in the pulse-signal was minimized to optimize the combination. Poh et al. extended this work, proposing a linear combination of all three color channels defining three independent signals with Independent Component Analysis (ICA) using non-Gaussianity as the criterion for independence [5]. Lewandowska et al. varied this concept, defining three independent linear combinations of the color channels with Principal Component Analysis (PCA) [6]. With both Blind Source Separation (BSS) techniques, the
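The abstract evaluates pulse rate with "a simple spectral peak detector". The sketch below is a generic version of that idea, not the authors' implementation: it takes an already extracted pulse signal (for example, a chrominance-based rPPG trace), removes the mean, applies a Hann window, and picks the strongest FFT peak inside an assumed plausible heart-rate band of 0.7 to 4 Hz.

```python
import numpy as np


def pulse_rate_bpm(signal, fs, f_lo=0.7, f_hi=4.0):
    """Pulse rate (beats per minute) from the strongest spectral peak in [f_lo, f_hi] Hz.

    The band limits are illustrative assumptions (about 42 to 240 bpm),
    not values taken from the paper.
    """
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()                     # remove DC so it cannot dominate
    x = x * np.hanning(len(x))           # taper to reduce spectral leakage
    power = np.abs(np.fft.rfft(x)) ** 2  # one-sided power spectrum
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    band = (freqs >= f_lo) & (freqs <= f_hi)
    if not band.any():
        raise ValueError("signal too short or fs too low for the requested band")
    peak_hz = freqs[band][np.argmax(power[band])]
    return 60.0 * peak_hz


if __name__ == "__main__":
    fs = 30.0                            # hypothetical camera frame rate
    t = np.arange(600) / fs              # 20 s of samples
    # 1.2 Hz pulse plus a stronger, slower 0.2 Hz drift (e.g. from motion or lighting)
    trace = np.sin(2 * np.pi * 1.2 * t) + 3.0 * np.sin(2 * np.pi * 0.2 * t)
    print(pulse_rate_bpm(trace, fs))
```

In this synthetic case the drift is three times stronger than the pulse but falls outside the search band, so the detector reports about 72 bpm; motion components that land inside the band are exactly what the chrominance-based methods aim to suppress before this step.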

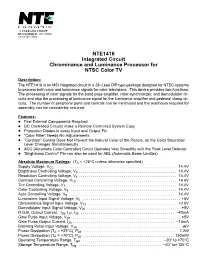

NTE1416 Integrated Circuit Chrominance and Luminance Processor for NTSC Color TV

Description: The NTE1416 is an MSI integrated circuit in a 28-lead DIP package designed for NTSC systems to process both color and luminance signals for color televisions. This device provides two functions: processing of color signals for the band-pass amplifier, color synchronizer, and demodulator circuits, and processing of the luminance signal for the luminance amplifier and pedestal clamp circuits. The number of peripheral parts and controls can be minimized, and the man-hours required for assembly can be considerably reduced.

Features:
• Few External Components Required
• DC Controlled Circuits Make a Remote Controlled System Easy
• Protection Diodes in Every Input and Output Pin
• "Color Killer" Needs No Adjustments
• "Contrast" Control Does Not Prevent the Natural Color of the Picture, as the Color Saturation Level Changes Simultaneously
• ACC (Automatic Color Controller) Circuit Operates Very Smoothly with the Peak Level Detector
• "Brightness Control" Pin Can Also Be Used for ABL (Automatic Beam Limiter)

Absolute Maximum Ratings (TA = +25°C unless otherwise specified):
• Supply Voltage, VCC: 14.4 V
• Brightness Controlling Voltage, V3: 14.4 V
• Resolution Controlling Voltage, V4: 14.4 V
• Contrast Controlling Voltage, V10: 14.4 V
• Tint Controlling Voltage, V7: 14.4 V
• Color Controlling Voltage, V9: 14.4 V
• Auto Controlling Voltage, V8: 14.4 V
• Luminance Input Signal Voltage, V5: +5 V
• Chrominance Signal Input Voltage, V13: +2.5 V
• Demodulator Input Signal Voltage, V25: +5 V
• R.G.B. Output Current, I26, I27, I28: −40 mA
• Gate Pulse Input Voltage, V20: +5 V
• Gate Pulse Output Current, I20: …

Measuring Camera Shannon Information Capacity with a Siemens Star Image

https://doi.org/10.2352/ISSN.2470-1173.2020.9.IQSP-347 © 2020, Society for Imaging Science and Technology
Norman L. Koren, Imatest LLC, Boulder, Colorado, USA

Abstract
Shannon information capacity, which can be expressed as bits per pixel or megabits per image, is an excellent figure of merit for predicting camera performance for a variety of machine vision applications, including medical and automotive imaging systems. Its strength is that it combines the effects of sharpness (MTF) and noise, but it has not been widely adopted because it has been difficult to measure and has never been standardized. We have developed a method for conveniently measuring information capacity from images of the familiar sinusoidal Siemens Star chart. The key is that noise is measured in the presence of the image signal, rather than in a separate location where image processing may be different (a commonplace occurrence with bilateral filters). The method also enables measurement of SNRI, which is a key performance metric for object detection. Information capacity is strongly affected by sensor noise, lens quality, ISO speed (Exposure Index), and the demosaicing algorithm, which affects aliasing.

Measurement background
To measure signal and noise at the same location, we use an image of a sinusoidal Siemens-star test chart consisting of $n_{cycles}$ total cycles, which we analyze by dividing the star into k radial segments (32 or 64), each of which is subdivided into m angular segments (8, 16, or 24) of length $P_{seg}$. The number of sine wave cycles in each angular segment is $n = n_{cycles}/m$.
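The snippet gives the segment arithmetic and names Shannon information capacity without its formula. As a simplified illustration, the Shannon-Hartley form integrates $\log_2(1 + S(f)/N(f))$ over spatial frequency up to Nyquist (0.5 cycles/pixel), which in a one-dimensional model yields bits per pixel. This is a hedged sketch, not Imatest's full two-dimensional procedure; the helper names are hypothetical and the signal and noise spectra are assumed inputs.

```python
import numpy as np


def cycles_per_segment(n_cycles: int, m: int) -> float:
    """Sine-wave cycles in each of the m angular segments: n = n_cycles / m."""
    return n_cycles / m


def information_capacity_1d(freqs, signal_psd, noise_psd) -> float:
    """Simplified 1-D Shannon-Hartley capacity in bits per pixel.

    Integrates log2(1 + S(f)/N(f)) over freqs (cycles/pixel, typically 0 to 0.5)
    with the trapezoid rule. Illustrative helper, not Imatest's procedure.
    """
    f = np.asarray(freqs, dtype=float)
    snr = np.asarray(signal_psd, dtype=float) / np.asarray(noise_psd, dtype=float)
    integrand = np.log2(1.0 + snr)
    return float(np.sum((integrand[1:] + integrand[:-1]) * np.diff(f)) / 2.0)


if __name__ == "__main__":
    print(cycles_per_segment(144, 8))  # hypothetical 144-cycle chart, 8 angular segments
    f = np.linspace(0.0, 0.5, 101)
    mtf = np.exp(-(f / 0.25) ** 2)     # hypothetical MTF falling with frequency
    print(information_capacity_1d(f, 100.0 * mtf ** 2, np.ones_like(f)))
```

The second call shows why capacity combines sharpness and noise: a lower MTF shrinks S(f) at high frequencies, and more noise raises N(f), and both reduce the integral.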

Image Sensors and Image Quality in Mobile Phones

Juha Alakarhu
Nokia, Technology Platforms, Camera Entity, P.O. Box 1000, FI-33721 Tampere (+358 50 4860226)

Abstract
This paper considers image sensors and image quality in camera phones. A method to estimate the image quality and performance of an image sensor from its key parameters is presented. Subjective image quality, and the mapping of camera technical parameters to subjective image quality, are discussed. The developed performance metrics are used to optimize sensor performance for the best possible image quality in camera phones. Finally, future technology and technology trends are discussed. The main development trend for image sensors is gradually changing from pixel size reduction to performance improvement within the same pixel size. About 30% performance improvement is observed between generations if the pixel size is kept the same. The image sensor is also the key component for offering new features to the user in the future.

2. Performance metric
A reliable sensor performance metric is needed in order to have a meaningful discussion of different sensor options, future sensor performance, and the effects of different technologies. Comparing individual technical parameters does not give an overall view of image quality and can be misleading. A more comprehensive performance metric based on low-level technical parameters is developed as follows.

First, conversion from photometric units to radiometric units is needed. Irradiance E [W/m²] is calculated as follows using the illuminance Ev [lux], the energy spectrum of the illuminant s(λ) (any unit), and the standard luminosity function V(λ).

Equation 1: Radiometric unit conversion
$$E = \frac{E_v \int s(\lambda)\,d\lambda}{683\ \mathrm{lm/W} \int s(\lambda)\,V(\lambda)\,d\lambda}$$
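Equation 1 can be evaluated numerically. A sketch under stated assumptions: spectra are sampled on a wavelength grid in nm, integrals use the trapezoid rule, and when no tabulated V(λ) is supplied a commonly quoted single-Gaussian approximation of the photopic luminosity function is used (an approximation, not the CIE table). Function names are hypothetical.

```python
import numpy as np


def _trapezoid(y, x) -> float:
    """Trapezoid-rule integral of samples y over grid x."""
    y = np.asarray(y, dtype=float)
    return float(np.sum((y[1:] + y[:-1]) * np.diff(x)) / 2.0)


def luminosity_gaussian(wavelength_nm):
    """Approximate photopic V(lambda): 1.019 * exp(-285.4 * (lambda_um - 0.559)^2)."""
    lam_um = np.asarray(wavelength_nm, dtype=float) / 1000.0
    return 1.019 * np.exp(-285.4 * (lam_um - 0.559) ** 2)


def irradiance_from_illuminance(ev_lux, wavelength_nm, spectrum, v=None) -> float:
    """Equation 1: E = Ev * int s dl / (683 lm/W * int s V dl), in W/m^2.

    The spectrum may be in any unit, because it appears in both integrals.
    """
    wl = np.asarray(wavelength_nm, dtype=float)
    s = np.asarray(spectrum, dtype=float)
    if v is None:
        v = luminosity_gaussian(wl)
    return ev_lux * _trapezoid(s, wl) / (683.0 * _trapezoid(s * v, wl))


if __name__ == "__main__":
    wl = np.linspace(380.0, 780.0, 401)
    flat = np.ones_like(wl)  # hypothetical equal-energy illuminant
    print(irradiance_from_illuminance(100.0, wl, flat))
```

Because the illuminant spectrum appears in both the numerator and the denominator, its absolute scale cancels, which is why the source can say "any unit".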

Creating 4K/UHD Content Poster

Creating 4K/UHD Content

Colorimetry
The television color specification is based on standards defined by the CIE (Commission Internationale de l'Éclairage) in 1931. The CIE specified an idealized set of primary XYZ tristimulus values. This set is a group of all-positive values converted from R'G'B', where Y is proportional to the luminance of the additive mix. This specification is used as the basis for color within 4K/UHDTV1, which supports both ITU-R BT.709 and BT.2020.

Table A1: Illuminant (Ill.) values

| Source | x | y |
|---|---|---|
| Illuminant A: Tungsten Filament Lamp, 2854 K | 0.4476 | 0.4075 |

Image Format / SMPTE Standards
UHDTV 1: 3840x2160 (4x1920x1080). Square Division separates the image into quad links for distribution.

Figure A2. Using a 100% color bar signal to show the conversion of RGB levels from 700 mV (100%) to 0 mV (0%) for each color component, with a color bar split field BT.2020 and BT.709 test signal. The WFM8300 was configured for BT.709 colorimetry, as shown in the video session display.

Table B1: SMPTE Standards
• ST 125 SDTV Component Video Signal Coding for 4:4:4 and 4:2:2 for 13.5 MHz and 18 MHz Systems
• ST 240 Television – 1125-Line High-Definition Production Systems – Signal Parameters
• ST 259 Television – SDTV Digital Signal/Data – Serial Digital Interface
• ST 272 Television – Formatting AES/EBU Audio and Auxiliary Data into Digital Video Ancillary Data Space
• ST 274 Television – 1920 x 1080 Image Sample Structure, Digital Representation and Digital Timing Reference Sequences for Multiple Picture Rates
• ST 296 1280 x 720 Progressive Image 4:2:2 and 4:4:4 Sample Structure – Analog & Digital Representation & Analog Interface
• ST 299-0/1/2 24-Bit Digital Audio Format for SMPTE Bit-Serial Interfaces at 1.5 Gb/s and 3 Gb/s – Document Suite
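Table A1 gives chromaticity coordinates (x, y) rather than tristimulus values. The standard xyY-to-XYZ relations, X = xY/y and Z = (1 − x − y)Y/y, recover XYZ for a chosen luminance Y; the sketch below normalizes Illuminant A to Y = 1, an assumption made only for illustration. The function name is illustrative.

```python
def xy_to_XYZ(x: float, y: float, Y: float = 1.0) -> tuple:
    """Convert CIE xyY (chromaticity plus luminance Y) to XYZ tristimulus values."""
    if y == 0:
        raise ValueError("y must be non-zero")
    X = x * Y / y
    Z = (1.0 - x - y) * Y / y
    return (X, Y, Z)


if __name__ == "__main__":
    # Illuminant A chromaticity from Table A1, normalized to Y = 1
    print(xy_to_XYZ(0.4476, 0.4075))
```

For Illuminant A this gives approximately X = 1.098 and Z = 0.356. Scaling Y scales X and Z by the same factor, which is why colors that differ only in intensity share one point on the chromaticity diagram.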

Multi-Frame Demosaicing and Super-Resolution of Color Images

Sina Farsiu∗, Michael Elad‡, Peyman Milanfar§

Abstract: In the last two decades, two related categories of problems have been studied independently in the image restoration literature: super-resolution and demosaicing. A closer look at these problems reveals the relation between them, and as conventional color digital cameras suffer from both low spatial resolution and color filtering, it is reasonable to address them in a unified context. In this paper, we propose a fast and robust hybrid method of super-resolution and demosaicing, based on a MAP estimation technique by minimizing a multi-term cost function. The L1 norm is used for measuring the difference between the projected estimate of the high-resolution image and each low-resolution image, removing outliers in the data and errors due to possibly inaccurate motion estimation. Bilateral regularization is used for spatially regularizing the luminance component, resulting in sharp edges and forcing interpolation along the edges and not across them. Simultaneously, Tikhonov regularization is used to smooth the chrominance components. Finally, an additional regularization term is used to force similar edge location and orientation in different color channels. We show that the minimization of the total cost function is relatively easy and fast. Experimental results on synthetic and real data sets confirm the effectiveness of our method.

∗Corresponding author: Electrical Engineering Department, University of California Santa Cruz, Santa Cruz, CA 95064 USA. Phone: (831)-459-4141, Fax: (831)-459-4829
‡Computer Science Department, The Technion, Israel Institute of Technology, Israel.
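The abstract names bilateral regularization for the luminance component without giving its form. The sketch below computes a bilateral total-variation (BTV) style penalty of the kind associated with this line of work: a weighted sum of L1 differences between the image and shifted copies of itself, with weights decaying as alpha^(|l|+|m|). The shift range `p`, decay `alpha`, and circular boundary handling via `np.roll` are illustrative assumptions, and this is only one regularization term, not the full MAP cost.

```python
import numpy as np


def btv_penalty(x, p: int = 2, alpha: float = 0.7) -> float:
    """Bilateral total-variation style penalty on a 2-D image.

    Sums alpha**(|l| + |m|) * ||x - shift(x, l, m)||_1 over shifts of up to p
    pixels, counting each +/- shift pair once. Circular shifts (np.roll) are an
    illustrative simplification of boundary handling.
    """
    x = np.asarray(x, dtype=float)
    total = 0.0
    for l in range(-p, p + 1):          # horizontal shift
        for m in range(0, p + 1):       # vertical shift
            if m == 0 and l <= 0:
                continue                # skip the zero shift and mirrored duplicates
            shifted = np.roll(np.roll(x, l, axis=1), m, axis=0)
            total += alpha ** (abs(l) + abs(m)) * np.abs(x - shifted).sum()
    return float(total)
```

The L1 differences penalize an edge in proportion to its height rather than its square, so a regularizer of this kind tolerates sharp edges better than the quadratic Tikhonov term the abstract applies to chrominance.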

Demosaicking: Color Filter Array Interpolation [Exploring the Imaging Process and the Correlations Among Three Color Planes in Single-Chip Digital Cameras]

Bahadir K. Gunturk, John Glotzbach, Yucel Altunbasak, Ronald W. Schafer, and Russel M. Mersereau

Digital cameras have become popular, and many people are choosing to take their pictures with digital cameras instead of film cameras. When a digital image is recorded, the camera needs to perform a significant amount of processing to provide the user with a viewable image. This processing includes correction for sensor nonlinearities and nonuniformities, white balance adjustment, compression, and more. An important part of this image processing chain is color filter array (CFA) interpolation or demosaicking.

A color image requires at least three color samples at each pixel location. Computer images often use red (R), green (G), and blue (B). A camera would need three separate sensors to completely measure the image. In a three-chip color camera, the light entering the camera is split and projected onto each spectral sensor. Each sensor requires its proper driving electronics, and the sensors have to be registered precisely. These additional requirements add a large expense to the system. Thus, many cameras use a single sensor covered with a CFA. The CFA allows only one color to be measured at each pixel. This means that the camera must estimate the missing two color values at each pixel. This estimation process is known as demosaicking. Several patterns exist for the filter array.
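The passage ends where the CFA patterns are introduced. A minimal sketch of the simplest estimator, bilinear interpolation, assuming an RGGB Bayer layout (one of several possible patterns): each missing color is the average of the nearest measured samples of that color, computed by normalized 3×3 convolution. It is only a baseline; the article's subtitle points to methods that also exploit correlations among the three color planes. Function names are illustrative.

```python
import numpy as np

# Bilinear weights: direct neighbors count twice as much as diagonal ones
KERNEL = np.array([[1.0, 2.0, 1.0],
                   [2.0, 4.0, 2.0],
                   [1.0, 2.0, 1.0]])


def bayer_masks(h: int, w: int):
    """Boolean sampling masks (R, G, B) for an assumed RGGB Bayer layout."""
    rows, cols = np.mgrid[0:h, 0:w]
    r = (rows % 2 == 0) & (cols % 2 == 0)
    b = (rows % 2 == 1) & (cols % 2 == 1)
    g = ~(r | b)
    return r, g, b


def conv3x3(img):
    """Zero-padded 3x3 filtering with KERNEL (symmetric, so correlation = convolution)."""
    h, w = img.shape
    padded = np.pad(img, 1)
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += KERNEL[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def demosaic_bilinear(cfa):
    """Estimate the two missing colors at each pixel (expects h, w >= 2)."""
    cfa = np.asarray(cfa, dtype=float)
    h, w = cfa.shape
    planes = []
    for mask in bayer_masks(h, w):
        sampled = np.where(mask, cfa, 0.0)
        weights = conv3x3(mask.astype(float))  # total weight of available samples
        estimate = conv3x3(sampled) / weights  # normalized weighted average
        planes.append(np.where(mask, cfa, estimate))  # keep measured samples as-is
    return np.stack(planes, axis=-1)
```

Dividing by the filtered mask makes the same kernel produce a 4-neighbor average for green at red and blue sites, a 2-neighbor average for red or blue at green sites, and a 4-diagonal average for red at blue sites (and vice versa), and it also handles image borders without special cases.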