Christopher Alexander, Cedric Price, and Nicholas Negroponte & MIT’s Architecture Machine Group

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Neuroscience, the Natural Environment, and Building Design

Neuroscience, the Natural Environment, and Building Design. Nikos A. Salingaros, Professor of Mathematics, University of Texas at San Antonio. Kenneth G. Masden II, Associate Professor of Architecture, University of Texas at San Antonio. Presented at the Bringing Buildings to Life Conference, Yale University, 10-12 May 2006. Chapter 5 of: Biophilic Design: The Theory, Science and Practice of Bringing Buildings to Life, edited by Stephen R. Kellert, Judith Heerwagen, and Martin Mador (John Wiley, New York, 2008), pages 59-83. Abstract: The human mind is split between genetically-structured neural systems, and an internally-generated worldview. This split occurs in a way that can elicit contradictory mental processes for our actions, and the interpretation of our environment. Part of our perceptive system looks for information, whereas another part looks for meaning, and in so doing gives rise to cultural, philosophical, and ideological constructs. Architects have come to operate in this second domain almost exclusively, effectively neglecting the first domain. By imposing an artificial meaning on the built environment, contemporary architects contradict physical and natural processes, and thus create buildings and cities that are inhuman in their form, scale, and construction. A new effort has to be made to reconnect human beings to the buildings and places they inhabit. Biophilic design, as one of the most recent and viable reconnection theories, incorporates organic life into the built environment in an essential manner. Extending this logic, the building forms, articulations, and textures could themselves follow the same geometry found in all living forms. Empirical evidence confirms that designs which connect humans to the lived experience enhance our overall sense of wellbeing, with positive and therapeutic consequences on physiology. -

Open Source Architecture, Began in Much the Same Way As the Domus Article

About the Authors Carlo Ratti is an architect and engineer by training. He practices in Italy and teaches at the Massachusetts Institute of Technology, where he directs the Senseable City Lab. His work has been exhibited at the Venice Biennale and MoMA in New York. Two of his projects were hailed by Time Magazine as ‘Best Invention of the Year’. He has been included in Blueprint Magazine’s ‘25 People who will Change the World of Design’ and Wired’s ‘Smart List 2012: 50 people who will change the world’. Matthew Claudel is a researcher at MIT’s Senseable City Lab. He studied architecture at Yale University, where he was awarded the 2013 Sudler Prize, Yale’s highest award for the arts. He has taught at MIT, is on the curatorial board of the Media Architecture Biennale, is an active protagonist of Hans Ulrich Obrist’s 89plus, and has presented widely as a critic, speaker, and artist in-residence. Adjunct Editors The authorship of this book was a collective endeavor. The text was developed by a team of contributing editors from the worlds of art, architecture, literature, and theory. Assaf Biderman Michele Bonino Ricky Burdett Pierre-Alain Croset Keller Easterling Giuliano da Empoli Joseph Grima N. John Habraken Alex Haw Hans Ulrich Obrist Alastair Parvin Ethel Baraona Pohl Tamar Shafrir Other titles of interest published by Thames & Hudson include: The Elements of Modern Architecture The New Autonomous House World Architecture: The Masterworks Mediterranean Modern See our websites www.thamesandhudson.com www.thamesandhudsonusa.com Contents -

Is Parallel Programming Hard, And, If So, What Can You Do About It?

Is Parallel Programming Hard, And, If So, What Can You Do About It? Edited by: Paul E. McKenney Linux Technology Center IBM Beaverton [email protected] December 16, 2011 Legal Statement This work represents the views of the authors and does not necessarily represent the view of their employers. IBM, zSeries, and Power PC are trademarks or registered trademarks of International Business Machines Corporation in the United States, other countries, or both. Linux is a registered trademark of Linus Torvalds. i386 is a trademark of Intel Corporation or its subsidiaries in the United States, other countries, or both. Other company, product, and service names may be trademarks or service marks of such companies. The non-source-code text and images in this document are provided under the terms of the Creative Commons Attribution-Share Alike 3.0 United States license (http://creativecommons.org/licenses/by-sa/3.0/us/). In brief, you may use the contents of this document for any purpose, personal, commercial, or otherwise, so long as attribution to the authors is maintained. Likewise, the document may be modified, and derivative works and translations made available, so long as such modifications and derivations are offered to the public on equal terms as the non-source-code text and images in the original document. Source code is covered by various versions of the GPL (http://www.gnu.org/licenses/gpl-2.0.html). Some of this code is GPLv2-only, as it derives from the Linux kernel, while other code is GPLv2-or-later. -

Graduation Speakers

Graduation speakers stress public service By Andrew L. Fish MIT President Paul E. Gray '54 told graduating students that their education is "more than a meal ticket" and should be used to serve "the public interest and the common good." His remarks were made at MIT's 122nd commencement on May 27. A total of 1733 students received 1899 degrees at the ceremony, which was held in Killian Court under sunny skies. The importance of public service was also emphasized by Susan P. Thomas, MIT's Lutheran chaplain, who delivered the invocation. "Grant that we may use the privilege of this MIT education and degree wisely - not as an entitlement to power or regard, but as a means to serve," Thomas said. "May the technology that we use and develop be humane, and the world we create with it one in which people can live more fully human lives rather than less, a world where clean air and water, adequate food and shelter, and freedom from fear and want are commonplace rather than exceptional." Text of Gray's commencement address. Page 2. In his commencement address, baseball's National League President A. Bartlett Giamatti urged graduates to "have the courage to connect" with people of all ideologies. Equality will come only Prof. Murman named to Proj. Athena post By Irene Kuo Professor Earll Murman of the Department of Aeronautics and Astronautics was recently named the new director of Project Athena by Gerald L. -

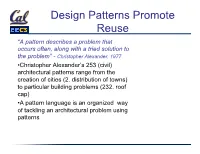

Design Patterns Promote Reuse

Design Patterns Promote Reuse
“A pattern describes a problem that occurs often, along with a tried solution to the problem” - Christopher Alexander, 1977
• Christopher Alexander’s 253 (civil) architectural patterns range from the creation of cities (2. distribution of towns) to particular building problems (232. roof cap)
• A pattern language is an organized way of tackling an architectural problem using patterns
Kinds of Patterns in Software
• Architectural (“macroscale”) patterns: Model-view-controller; Pipe & Filter (e.g. compiler, Unix pipeline); Event-based (e.g. interactive game); Layering (e.g. SaaS technology stack)
• Computation patterns: Fast Fourier transform; Structured & unstructured grids; Dense linear algebra; Sparse linear algebra
• GoF (Gang of Four) Patterns: structural, creational, behavior
The Gang of Four (GoF)
• 23 structural design patterns
• description of communicating objects & classes
• captures common (and successful) solution to a category of related problem instances
• can be customized to solve a specific (new) problem in that category
• Pattern ≠ individual classes or libraries (list, hash, ...); Pattern ≠ full design (more like a blueprint for a design)
The GoF Pattern Zoo
• Creation: 1. Factory 2. Abstract factory 3. Builder 4. Prototype 5. Singleton/Null obj
• Structural: 6. Adapter 7. Composite 8. Proxy 9. Bridge 10. Flyweight 11. -
• Behavioral: 13. Observer 14. Mediator 15. Chain of responsibility 16. Command 17. Interpreter 18. Iterator 19. Memento (memoization) 20. State 21. Strategy 22. Template 23. Visitor
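
As a rough illustration of what one entry in the pattern zoo looks like in code, here is a minimal sketch of Observer (item 13), written in Python. The names `Subject`, `attach`, and `notify` are illustrative choices, not taken from the slides, and this is only one of several common ways to express the pattern.

```python
from typing import Callable, List


class Subject:
    """Keeps a list of observers and tells each one about every event."""

    def __init__(self) -> None:
        # Observers are plain callables that accept the event as a string.
        self._observers: List[Callable[[str], None]] = []

    def attach(self, observer: Callable[[str], None]) -> None:
        """Register a callable to be invoked on each later notify()."""
        self._observers.append(observer)

    def notify(self, event: str) -> None:
        """Call every registered observer, in registration order."""
        for observer in self._observers:
            observer(event)


# Usage: two observers react to the same event without knowing each other.
received: List[str] = []
subject = Subject()
subject.attach(received.append)
subject.attach(lambda event: received.append(event.upper()))
subject.notify("saved")
```

The subject never depends on what the observers do with the event, which is the sense in which a pattern is "more like a blueprint for a design" than a reusable class.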

Gen John W. Vessey, Jr Interviewer: Thomas Saylor, Ph.D

Narrator: Gen John W. Vessey, Jr Interviewer: Thomas Saylor, Ph.D. Date of interview: 19 February 2013 Location: Vessey residence, North Oaks, MN Transcribed by: Linda Gerber, May 2013 Edited for clarity by: Thomas Saylor, Ph.D., September 2013 and February 2014 (00:00:00) = elapsed time on digital recording TS: Today is Tuesday, 19 February 2013. This is another of our ongoing interview cycle with General John W. Vessey, Jr. My name is Thomas Saylor. Today we’re at the Vessey residence in North Oaks, Minnesota, on a bright, clear and very cold winter day. General Vessey, we wanted at first to add some additional information and perspective on Lebanon, going back to 1983. I’ll let you put the conversation in motion here. JV: After we talked last week I got to thinking that we hadn’t really explained as fully as we might have the confusion and the multiple points of view that existed both in the United States and in the world in general about Lebanon and our involvement. I’m not sure that what I remembered after you left will add any clarity to (chuckles) your reader’s understanding, but at least they’ll understand the muddled picture that I was looking at, at the time. TS: And that’s important, because even in the contemporary news accounts of the time there is a sense of confusion and wondering really what the Americans are trying to accomplish, as well as the fact that the Americans aren’t the only Western force even in Lebanon at the time. -

Campusmap06.Pdf

MIT Campus Map. Welcome to MIT. All MIT buildings are designated by numbers. Under this numbering system, a single room number serves to completely identify any location on the campus. In a typical room number, such as 7-121, the figure(s) preceding the hyphen gives the building number, the first number following the hyphen, the floor, and the last two numbers, the room. Please refer to the building index on the reverse side of this map, if the room number is unknown. An interactive map of MIT can be found at http://whereis.mit.edu/. [Map grid A-F with street, building, and parking labels] -
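
The room-numbering rule described in the map text can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `RoomNumber` and `parse_room` are hypothetical, and the sketch handles just the "typical" form the map gives (building, hyphen, one floor digit, two room digits), not any other room formats that may exist on campus.

```python
import re
from typing import NamedTuple


class RoomNumber(NamedTuple):
    building: str  # figure(s) preceding the hyphen
    floor: int     # first digit following the hyphen
    room: str      # last two digits, kept as text to preserve leading zeros


# Assumed shape: <building>-<floor digit><two room digits>, e.g. "7-121".
_ROOM_PATTERN = re.compile(r"([^\s-]+)-(\d)(\d{2})")


def parse_room(text: str) -> RoomNumber:
    """Split a typical room number into building, floor, and room."""
    match = _ROOM_PATTERN.fullmatch(text.strip())
    if match is None:
        raise ValueError(f"not a typical room number: {text!r}")
    building, floor, room = match.groups()
    return RoomNumber(building, int(floor), room)


example = parse_room("7-121")  # building "7", floor 1, room "21"
```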

State, Foreign Operations, and Related Programs Appropriations for Fiscal Year 2018

STATE, FOREIGN OPERATIONS, AND RELATED PROGRAMS APPROPRIATIONS FOR FISCAL YEAR 2018 TUESDAY, MAY 23, 2017 U.S. SENATE, SUBCOMMITTEE OF THE COMMITTEE ON APPROPRIATIONS, Washington, DC. The subcommittee met at 2:30 p.m. in room SD–124, Dirksen Senate Office Building, Hon. Lindsey Graham (chairman) presiding. Present: Senators Graham, Shaheen, Lankford, Leahy, Daines, Boozman, Merkley, and Van Hollen. U.S. ASSISTANCE FOR THE NORTHERN TRIANGLE OF CENTRAL AMERICA STATEMENTS OF: HON. JOHN D. NEGROPONTE, VICE CHAIRMAN OF McLARTY ASSOCIATES, U.S. CO-CHAIR, NORTHERN TRIANGLE SECURITY AND ECONOMIC OPPORTUNITY TASK FORCE, ATLANTIC COUNCIL; ADRIANA BELTRÁN, SENIOR ASSOCIATE FOR CITIZEN SECURITY, WASHINGTON OFFICE ON LATIN AMERICA; ERIC FARNSWORTH, VICE PRESIDENT OF THE COUNCIL OF THE AMERICAS; JOHN WINGLE, COUNTRY DIRECTOR FOR HONDURAS AND GUATEMALA, MILLENNIUM CHALLENGE CORPORATION OPENING STATEMENT OF SENATOR LINDSEY GRAHAM Senator GRAHAM. The hearing will come to order. Senator Leahy is on his way. We have Senator Shaheen and Senator Lankford, along with myself. We have a great panel here. John Negroponte, Vice Chairman at McLarty Associates, who has had about every job you can have from Director of National Intelligence to ambassadorships all over the world, and has been involved in this part of the world for a very long time. Thanks, John, for taking time out to participate. Eric Farnsworth, Vice President, Council of the Americas. Thank you for coming. John Wingle, the Millennium Challenge Corporation Country Director for Honduras and Guatemala. Adriana Beltrán, Senior Associate for Citizen Security, Washington Office on Latin America, an NGO heavily involved in rule of law issues. -

Holistic Approach to Mental Illnesses at the Toby of Ambohibao Madagascar Daniel A

Luther Seminary Digital Commons @ Luther Seminary Doctor of Ministry Theses Student Theses 2002 Holistic Approach to Mental Illnesses at the Toby of Ambohibao Madagascar Daniel A. Rakotojoelinandrasana Luther Seminary Follow this and additional works at: http://digitalcommons.luthersem.edu/dmin_theses Part of the Christianity Commons, Mental and Social Health Commons, and the Practical Theology Commons Recommended Citation Rakotojoelinandrasana, Daniel A., "Holistic Approach to Mental Illnesses at the Toby of Ambohibao Madagascar" (2002). Doctor of Ministry Theses. 19. http://digitalcommons.luthersem.edu/dmin_theses/19 This Thesis is brought to you for free and open access by the Student Theses at Digital Commons @ Luther Seminary. It has been accepted for inclusion in Doctor of Ministry Theses by an authorized administrator of Digital Commons @ Luther Seminary. For more information, please contact [email protected]. HOLISTIC APPROACH TO MENTAL ILLNESSES AT THE TOBY OF AMBOHIBAO MADAGASCAR by DANIEL A. RAKOTOJOELINANDRASANA A Thesis Submitted to the Faculty of Luther Seminary And The Minnesota Consortium of Seminary Faculties In Partial Fulfillments of The Requirements for the Degree of DOCTOR OF MINISTRY THESIS ADVISOR: RICHARD WALLACE. SAINT PAUL, MINNESOTA 2002 ©2002 by Daniel Rakotojoelinandrasana All rights reserved Thesis Project Committee Dr. Richard Wallace, Luther Seminary, Thesis Advisor Dr. Craig Moran, Luther Seminary, Reader Dr. J. Michael Byron, St. Paul Seminary, School of Divinity, Reader ABSTRACT The Church is to reclaim its teaching and praxis of the healing ministry at the example of Jesus Christ who preached, taught and healed. Healing is holistic, that is, caring for the whole person, physical, mental, social and spiritual. -

The Daily Me

1 The Daily Me It is some time in the future. Technology has greatly increased people's ability to “filter” what they want to read, see, and hear. With the aid of the Internet, you are able to design your own newspapers and magazines. You can choose your own programming, with movies, game shows, sports, shopping, and news of your choice. You mix and match. You need not come across topics and views that you have not sought out. Without any difficulty, you are able to see exactly what you want to see, no more and no less. You can easily find out what “people like you” tend to like and dislike. You avoid what they dislike. You take a close look at what they like. Maybe you want to focus on sports all the time, and to avoid anything dealing with business or government. It is easy to do exactly that. Maybe you choose replays of your favorite tennis matches in the early evening, live baseball from New York at night, and professional football on the weekends. If you hate sports and want to learn about the Middle East in the evening from the perspective you find most congenial, you can do that too. If you care only about the United States and want to avoid international issues entirely, you can restrict yourself to material involving the United States. So too if you care only about Paris, or London, or Chicago, or Berlin, or Cape Town, or Beijing, or your hometown. Perhaps you have no interest at all in “news.” Maybe you find “news” impossibly boring. -

Lobsinger Mary Louise, "Cybernetic Theory and the Architecture Of

Anxious Modernisms: Experimentation in Postwar Architectural Culture. Contributors: Maristella Casciato, Monique Eleb, Sarah Williams Goldhagen, Sandy Isenstadt, Mary Louise Lobsinger, Reinhold Martin, Francesca Rogier, Timothy M. Rohan, Felicity Scott, Jean-Louis Violeau, Cornelis Wagenaar, Cherie Wendelken. Canadian Centre for Architecture, Montreal; The MIT Press, Cambridge, Massachusetts, and London, England. © 2000 Centre Canadien d'Architecture/Canadian Centre for Architecture and Massachusetts Institute of Technology. The Canadian Centre for Architecture, 1920 rue Baile, Montréal, Québec, Canada H3H 2S6. ISBN 0-262-07208-4 (MIT). The MIT Press, Five Cambridge Center, Cambridge, MA 02142. All rights reserved. No part of this book may be reproduced in any form by any electronic or mechanical means (including photocopying, recording, or infor, [Photo credits and figure copyright notices] Contents: Preface; Introduction: Critical Themes of Postwar Modernism, Sarah Williams Goldhagen and Réjean Legault; 1 Neorealism in Italian Architecture, Maristella Casciato; 2 An Alternative to Functionalist Universalism: Ecochard, Candilis, and ATBAT-Afrique, Monique Eleb; 3 Freedom's Domiciles: -

“Community” in the Work of Cedric Price

TRANSFORMATIONS Journal of Media & Culture ISSN 1444-3775 2007 Issue No. 14 — Accidental Environments Calculated Uncertainty: Computers, Chance Encounters, and "Community" in the Work of Cedric Price By Rowan Wilken “All great work is preparing yourself for the accident to happen.” —— Sidney Lumet Iconoclastic British architect and theorist Cedric Price is noted for the comparatively early incorporation of computing and other communications technologies into his designs, which he employed as part of an ongoing critique of the conventions of architectural form, and as part of his explorations of questions of mobility. Three key unrealised projects of his are examined here which explore these concerns. These are his “Potteries Thinkbelt” (1964-67), “Generator” (1978-80), and “Fun Palace” (1961-74) projects, with greatest attention given to the last of them. In these projects, Price saw technology as a way of testing non-authoritarian ideas of use (multiplicity of use and undreamt of use), encouraging chance encounters and, it was hoped, chance “community.” These aims also situate Price’s work within an established context of twentieth-century experimental urban critique, a context that includes the investigations of the Dadaists, the Surrealists, Fluxus, and the Situationist International, among others. What distinguishes Price’s work from these other examples is his explicit engagement with communications technologies in tandem with systems, game and cybernetic theory. 
These influences, it will be argued, are interesting for the way that Price employed them in his development of a structurally complex and programmatically rich architectural environment which was, simultaneously and somewhat paradoxically, to be experienced as an “accidental environment.” Much of the existing critical work on Price gives greatest attention to exploring and documenting these theoretical influences and uses of computing and communications equipment, as well as the architectural and wider socio-cultural context in which his work was produced.