Conference Abstracts

Recommended publications

Talk Bank: a Multimodal Database of Communicative Interaction

Talk Bank: A Multimodal Database of Communicative Interaction. 1. Overview. The ongoing growth in computer power and connectivity has led to dramatic changes in the methodology of science and engineering. By stimulating fundamental theoretical discoveries in the analysis of semistructured data, we can extend these methodological advances to the social and behavioral sciences. Specifically, we propose the construction of a major new tool for the social sciences, called TalkBank. The goal of TalkBank is the creation of a distributed, web-based data archiving system for transcribed video and audio data on communicative interactions. We will develop an XML-based annotation framework called Codon to serve as the formal specification for data in TalkBank. Tools will be created for the entry of new and existing data into the Codon format; transcriptions will be linked to speech and video; and there will be extensive support for collaborative commentary from competing perspectives. The TalkBank project will establish a framework that will facilitate the development of a distributed system of allied databases based on a common set of computational tools. Instead of attempting to impose a single uniform standard for coding and annotation, we will promote annotational pluralism within the framework of the abstraction layer provided by Codon. This representation will use labeled acyclic digraphs to support translation between the various annotation systems required for specific sub-disciplines. There will be no attempt to promote any single annotation scheme over others. Instead, by promoting comparison and translation between schemes, we will allow individual users to select the custom annotation scheme most appropriate for their purposes.

A Data-Driven Framework for Assisting Geo-Ontology Engineering Using a Discrepancy Index

University of California Santa Barbara. A Data-Driven Framework for Assisting Geo-Ontology Engineering Using a Discrepancy Index. A Thesis submitted in partial satisfaction of the requirements for the degree Master of Arts in Geography by Bo Yan. Committee in charge: Professor Krzysztof Janowicz, Chair; Professor Werner Kuhn; Professor Emerita Helen Couclelis. June 2016. The Thesis of Bo Yan is approved: Professor Werner Kuhn; Professor Emerita Helen Couclelis; Professor Krzysztof Janowicz, Committee Chair. May 2016. Copyright © 2016 by Bo Yan. Acknowledgements: I would like to thank the members of my committee for their guidance and patience in the face of obstacles over the course of my research. I would like to thank my advisor, Krzysztof Janowicz, for his invaluable input on my work. Without his help and encouragement, I would not have been able to find the light at the end of the tunnel during the last stage of the work, because he provided insight that helped me think out of the box. There is no better advisor. I would like to thank Yingjie Hu, who has offered me extensive feedback, suggestions and inspiration on my thesis topic. I would like to thank all my other intelligent colleagues in the STKO lab and the Geography Department (those who have moved on and started anew, those who are still in the quagmire, and those who have just begun) for their support and friendship. Last, but most importantly, I would like to thank my parents for their unconditional love.

Semi-Automated Ontology Based Question Answering System Open Access

Journal of Advanced Research in Computing and Applications 17, Issue 1 (2019) 1-5. Journal homepage: www.akademiabaru.com/arca.html ISSN: 2462-1927. Open Access. Semi-automated Ontology based Question Answering System. Khairul Nurmazianna Ismail, Faculty of Computer Science, Universiti Teknologi MARA Melaka, Malaysia. ABSTRACT: Question answering systems enable users to retrieve exact answers to questions submitted in natural language. Demand for such systems is increasing because they deliver precise answers instead of a list of links. This study proposes an ontology-based question answering system. The research explains the question answering system architecture. The system uses a semi-automatic ontology development (Ontology Learning) approach to develop its ontology. Keywords: Question answering system; knowledge base; ontology; online learning. Copyright © 2019 PENERBIT AKADEMIA BARU - All rights reserved. 1. Introduction. In the era of the WWW, people need to search for and get information fast, online or offline, which makes them rely on Question Answering Systems (QAS). One common and traditionally used QAS is the Frequently Asked Questions site, which contains common and straightforward answers. Currently, QAS have emerged as a powerful platform using various techniques such as information retrieval, Knowledge Base, Natural Language Processing and Hybrid Based, which enable users to retrieve exact answers to questions posed in natural language using either a pre-structured database or a collection of natural language documents [1,4]. The demand for QAS increases day by day since it delivers short, precise and question-specific answers [10]. QAS using the knowledge base paradigm perform better in restricted-domain QA systems because of their ability to focus [12].

CLIB 2016 Proceedings

The Second International Conference Computational Linguistics in Bulgaria (CLIB 2016) is organised within the Operation for Support for International Scientific Conferences Held in Bulgaria of the National Science Fund, Grant № ДПМНФ 01/9 of 11 Aug 2016. CLIB 2016 is organised by: The Department of Computational Linguistics, Institute for Bulgarian Language, Bulgarian Academy of Sciences. PUBLICATION AND CATALOGUING INFORMATION. Title: Proceedings of the Second International Conference Computational Linguistics in Bulgaria (CLIB 2016). ISSN: 2367-5675 (online). Published and distributed by: The Institute for Bulgarian Language Prof. Lyubomir Andreychin, Bulgarian Academy of Sciences. Editorial address: Institute for Bulgarian Language Prof. Lyubomir Andreychin, Bulgarian Academy of Sciences, 52 Shipchenski prohod blvd., bldg. 17, Sofia 1113, Bulgaria, +359 2/ 872 23 02. Copyright of each paper stays with the respective authors. The works in the Proceedings are licensed under a Creative Commons Attribution 4.0 International Licence (CC BY 4.0). Licence details: http://creativecommons.org/licenses/by/4.0 Proceedings of the Second International Conference Computational Linguistics in Bulgaria, 9 September 2016, Sofia, Bulgaria. PREFACE: We are excited to welcome you to the second edition of the International Conference Computational Linguistics in Bulgaria (CLIB 2016) in Sofia, Bulgaria! CLIB aspires to foster the NLP community in Bulgaria and further the cooperation among researchers working in NLP for Bulgarian around the world. The need for a conference dedicated to NLP research dealing with or applicable to Bulgarian has been felt for quite some time. We believe that building a strong community of researchers and teams who have chosen to work on Bulgarian is a key factor to meeting the challenges and requirements posed to computational linguistics and NLP in Bulgaria.

Collection of Usage Information for Language Resources from Academic Articles

Collection of Usage Information for Language Resources from Academic Articles. Shunsuke Kozawa†, Hitomi Tohyama††, Kiyotaka Uchimoto†††, Shigeki Matsubara†. †Graduate School of Information Science, Nagoya University; ††Information Technology Center, Nagoya University, Furo-cho, Chikusa-ku, Nagoya, 464-8601, Japan; †††National Institute of Information and Communications Technology, 4-2-1 Nukui-Kitamachi, Koganei, Tokyo, 184-8795, Japan. fkozawa,[email protected], [email protected], [email protected] Abstract: Recently, language resources (LRs) are becoming indispensable for linguistic researches. However, existing LRs are often not fully utilized because their variety of usage is not well known, indicating that their intrinsic value is not recognized very well either. Regarding this issue, lists of usage information might improve LR searches and lead to their efficient use. In this research, therefore, we collect a list of usage information for each LR from academic articles to promote the efficient utilization of LRs. This paper proposes to construct a text corpus annotated with usage information (UI corpus). In particular, we automatically extract sentences containing LR names from academic articles. Then, the extracted sentences are annotated with usage information by two annotators in a cascaded manner. We show that the UI corpus contributes to efficient LR searches by combining the UI corpus with a metadata database of LRs and comparing the number of LRs retrieved with and without the UI corpus. 1. Introduction. In recent years, such language resources (LRs) as corpora and dictionaries are being widely used for research in the [...] Thesaurus1. • He also employed Roget’s Thesaurus in 100 words of window to implement WSD.

Student Research Workshop Associated with RANLP 2011, Pages 1–8, Hissar, Bulgaria, 13 September 2011

RANLPStud 2011. Proceedings of the Student Research Workshop associated with The 8th International Conference on Recent Advances in Natural Language Processing (RANLP 2011), 13 September 2011, Hissar, Bulgaria. ISBN 978-954-452-016-8. Designed and Printed by INCOMA Ltd., Shoumen, BULGARIA. Preface: The Recent Advances in Natural Language Processing (RANLP) conference, already in its eighth year and ranked among the most influential NLP conferences, has always been a meeting venue for scientists coming from all over the world. In 2009, we decided to give an arena to the younger and less experienced members of the NLP community to share their results with an international audience. For this reason, further to the first successful and highly competitive Student Research Workshop associated with the conference RANLP 2009, we are pleased to announce the second edition of the workshop, which is held during the main RANLP 2011 conference days on 13 September 2011. The aim of the workshop is to provide an excellent opportunity for students at all levels (Bachelor, Master, and Ph.D.) to present their work in progress or completed projects to an international research audience and receive feedback from senior researchers. We have received 31 high quality submissions, among which 6 papers have been accepted as regular oral papers, and 18 as posters. Each submission has been reviewed by

Child Language

Abstracts. 14th International Congress for the Study of Child Language (IASCL 2017), Lyon, France, July 17th-21st 2017. Summary: Plenary talks (Days 1 to 5); Symposia (Days 2 to 5); Posters (Days 2 to 4). Day 1, Monday 17th, 18:00-19:00, Grand Amphi. Plenary talk: Bottom-up and top-down information in infants’ early language acquisition. Sharon Peperkamp, Laboratoire de Sciences Cognitives et Psycholinguistique, Paris, France. Decades of research have shown that before they pronounce their first words, infants acquire much of the sound structure of their native language, while also developing word segmentation skills and starting to build a lexicon. The rapidity of this acquisition is intriguing, and the underlying learning mechanisms are still largely unknown. Drawing on both experimental and modeling work, I will review recent research in this domain and illustrate specifically how both bottom-up and top-down cues contribute to infants’ acquisition of phonetic categories and phonological rules. Day 2, Tuesday 18th, 9:00-10:00, Grand Amphi. Plenary talk: What do the hands tell us about language development? Insights from development of speech, gesture and sign across languages. Asli Ozyurek, Max Planck Institute for Psycholinguistics, Nijmegen, The Netherlands. Most research and theory on language development focus on children’s spoken utterances. However, language development, starting with the first words of children, is multimodal. Speaking children produce gestures accompanying and complementing their spoken utterances in meaningful ways through pointing or iconic gestures.

Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation Alkhatib, Wael (2020)

Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation. Alkhatib, Wael (2020). DOI (TUprints): https://doi.org/10.25534/tuprints-00011890 License: CC-BY-ND 4.0 International (Creative Commons, Attribution, No Derivatives). Publication type: Dissertation. Department: 18 Department of Electrical Engineering and Information Technology. Original source: https://tuprints.ulb.tu-darmstadt.de/11890 Dissertation approved by the Department of Electrical Engineering and Information Technology of the Technische Universität Darmstadt for the academic degree of Doktor-Ingenieur (Dr.-Ing.), by Wael Alkhatib, M.Sc., born on 15 October 1988 in Hama, Syria. Chair: Prof. Dr.-Ing. Jutta Hanson. Referee: Prof. Dr.-Ing. Ralf Steinmetz. Co-referee: Prof. Dr.-Ing. Steffen Staab. Date of submission: 28 January 2020. Date of defense: 10 June 2020. University code D17, Darmstadt 2020. This document is provided by tuprints, e-publishing service of the Technical University Darmstadt. http://tuprints.ulb.tu-darmstadt.de [email protected] Please cite this document as: URN:nbn:de:tuda-tuprints-118909 URL: https://tuprints.ulb.tu-darmstadt.de/id/eprint/11890 This publication is licensed under the following Creative Commons License: Attribution-No Derivatives 4.0 International https://creativecommons.org/licenses/by-nd/4.0/deed.en Wael Alkhatib, M.Sc.: Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation © 28 January 2020. Supervisors: Prof. Dr.-Ing. Ralf Steinmetz, Prof. Dr.-Ing. Steffen Staab. Location: Darmstadt. Time frame: 28 January 2020. ABSTRACT: The proliferation of deliverable knowledge on the web, along with the rapidly increasing number of accessible research publications, makes researchers, students, and educators overwhelmed.

Download Special Issue

Scientific Programming. Scientific Programming Techniques and Algorithms for Data-Intensive Engineering Environments. Lead Guest Editor: Giner Alor-Hernández. Guest Editors: Jezreel Mejia-Miranda and José María Álvarez-Rodríguez. Copyright © 2018 Hindawi. All rights reserved. This is a special issue published in “Scientific Programming.” All articles are open access articles distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Editorial Board: M. E. Acacio Sanchez, Spain; Marco Aldinucci, Italy; Davide Ancona, Italy; Ferruccio Damiani, Italy; Sergio Di Martino, Italy; Basilio B. Fraguela, Spain; Carmine Gravino, Italy; Gianluigi Greco, Italy; Bormin Huang, USA; Chin-Yu Huang, Taiwan; Christoph Kessler, Sweden; Harald Köstler, Germany; José E. Labra, Spain; Thomas Leich, Germany; Piotr Luszczek, USA; Tomàs Margalef, Spain; Cristian Mateos, Argentina; Roberto Natella, Italy; Francisco Ortin, Spain; Can Özturan, Turkey; Danilo Pianini, Italy; Fabrizio Riguzzi, Italy; Michele Risi, Italy; Damian Rouson, USA; Giuseppe Scanniello, Italy; Ahmet Soylu, Norway; Emiliano Tramontana, Italy; Autilia Vitiello, Italy; Jan Weglarz, Poland.

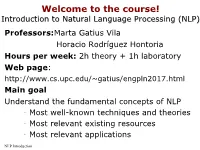

Lexical Ambiguity • Syntactic Ambiguity • Semantic Ambiguity • Pragmatic Ambiguity

Welcome to the course! Introduction to Natural Language Processing (NLP). Professors: Marta Gatius Vila, Horacio Rodríguez Hontoria. Hours per week: 2h theory + 1h laboratory. Web page: http://www.cs.upc.edu/~gatius/engpln2017.html Main goal: understand the fundamental concepts of NLP: the most well-known techniques and theories, the most relevant existing resources, and the most relevant applications. Content: 1. Introduction to Language Processing. 2. Applications. 3. Language models. 4. Morphology and lexicons. 5. Syntactic processing. 6. Semantic and pragmatic processing. 7. Generation. Assessment: • Exams: mid-term exam (November); end-of-term exam (final exams period, covering all the course contents). • Development of 2 programs, in groups of two or three students. Course grade = max(midterm exam × 0.15 + final exam × 0.45, final exam × 0.6) + assignments × 0.4. Related (or the same) disciplines: • Computational Linguistics, CL • Natural Language Processing, NLP • Linguistic Engineering, LE • Human Language Technology, HLT. Linguistic Engineering (LE): • LE consists of the application of linguistic knowledge to the development of computer systems able to recognize, understand, interpret and generate human language in all its forms. • LE includes: formal models (representations of knowledge of language at the different levels); theories and algorithms; techniques and tools; resources (Lingware); applications. Linguistic knowledge levels: Phonetics and phonology (language models). Morphology: meaningful components of words (lexicon), e.g. "doors" is plural. Syntax: structural relationships between words.

Multimedia Corpora (Media Encoding and Annotation) (Thomas Schmidt, Kjell Elenius, Paul Trilsbeek)

Multimedia Corpora (Media encoding and annotation) (Thomas Schmidt, Kjell Elenius, Paul Trilsbeek). Draft submitted to CLARIN WG 5.7. as input to CLARIN deliverable D5.C3 “Interoperability and Standards” [http://www.clarin.eu/system/files/clarindeliverableD5C3_v1_5finaldraft.pdf] Table of Contents: 1 General distinctions / terminology; 1.1 Different types of multimedia corpora: spoken language vs. speech vs. phonetic vs. multimodal corpora vs. sign language corpora; 1.2 Media encoding vs. Media annotation; 1.3 Data models/file formats vs. Transcription systems/conventions; 1.4 Transcription vs. Annotation / Coding vs. Metadata; 2 Media encoding; 2.1 Audio encoding; 2.2

Morphological Doublets in Croatian: a Multi-Methodological Analysis

Morphological Doublets in Croatian: A multi-methodological analysis. By: Dario Lečić. A thesis submitted in partial fulfilment of the requirements for the degree of Doctor of Philosophy. The University of Sheffield, Faculty of Arts and Humanities, Department of Russian and Slavonic Studies. 20 January, 2017. Acknowledgments: Many a PhD student past and present will agree that doing a PhD is a time-consuming process with lots of ups and downs, motivational issues and even a number of nervous breakdowns. Having experienced all of these, I can only say that they were right. However, having reached the end of the tunnel, I have to admit that the feeling is great. I would like to use this opportunity to thank all the people who made this possible and who have helped me during these four years spent researching the intricate world of morphological doublets in Croatian. First of all, I would like to express my immense gratitude to my primary supervisor, Professor Neil Bermel from the Department of Russian and Slavonic Studies at the University of Sheffield, for offering his guidance from day one. Our regular supervisory meetings as well as numerous e-mail exchanges have been eye-opening and I would not have been able to do this without you. I hope this dissertation will justify all the effort you have put into me as your PhD student. Even though the jurisdiction of the second supervisor as defined by the University of Sheffield officially stretches mostly to matters of the Doctoral Development Programme, my second supervisor, Dr Dagmar Divjak, nevertheless played a major role in this research as well, primarily in matters of statistics.