A Data-Driven Framework for Assisting Geo-Ontology Engineering Using a Discrepancy Index

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Semi-Automated Ontology Based Question Answering System Open Access

Journal of Advanced Research in Computing and Applications 17, Issue 1 (2019) 1-5. Journal homepage: www.akademiabaru.com/arca.html. ISSN: 2462-1927. Open Access. Semi-automated Ontology based Question Answering System. Khairul Nurmazianna Ismail, Faculty of Computer Science, Universiti Teknologi MARA Melaka, Malaysia. ABSTRACT Question answering systems enable users to retrieve exact answers to questions submitted in natural language. Demand for such systems is increasing because they deliver a precise answer instead of a list of links. This study proposes an ontology-based question answering system. The research explains the architecture of the question answering system. The system uses a semi-automatic ontology development (Ontology Learning) approach to develop its ontology. Keywords: Question answering system; knowledge base; ontology; online learning. Copyright © 2019 PENERBIT AKADEMIA BARU - All rights reserved. 1. Introduction In the era of the WWW, people need to search for and get information quickly, online or offline, which makes them rely on Question Answering Systems (QAS). One common and traditionally used QAS is the Frequently Asked Questions site, which contains common and straightforward answers. Currently, QAS have emerged as a powerful platform using various techniques, such as information retrieval, knowledge base, natural language processing, and hybrid approaches, which enable users to retrieve exact answers to questions posed in natural language using either a pre-structured database or a collection of natural language documents [1,4]. The demand for QAS increases day by day since it delivers short, precise, and question-specific answers [10]. QAS using the knowledge base paradigm perform better in restricted-domain QA because of their ability to focus [12].

QUERY-DRIVEN TEXT ANALYTICS for KNOWLEDGE EXTRACTION, RESOLUTION, and INFERENCE by CHRISTAN EARL GRANT a DISSERTATION PRESENTED

QUERY-DRIVEN TEXT ANALYTICS FOR KNOWLEDGE EXTRACTION, RESOLUTION, AND INFERENCE By CHRISTAN EARL GRANT A DISSERTATION PRESENTED TO THE GRADUATE SCHOOL OF THE UNIVERSITY OF FLORIDA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY UNIVERSITY OF FLORIDA 2015 © 2015 Christan Earl Grant To Jesus my Savior, Vanisia my wife, my daughter Caliah, soon to be born son and my parents and siblings, whom I strive to impress. Also, to all my brothers and sisters battling injustice while I battled bugs and deadlines. ACKNOWLEDGMENTS I had an opportunity to see my dad, a software engineer from Jamaica, work extremely hard to get a master's degree and work as a software engineer. I even had the privilege of sitting in some of his classes as he taught at a local university. Watching my dad work towards intellectual endeavors made me believe that anything is possible. I am extremely privileged to have someone I could look up to as an example of being a man, father, and scholar. I had my first taste of research when Dr. Joachim Hammer went out of his way to find a task for me on one of his research projects because I was interested in attending graduate school. After working with the team for a few weeks he was willing to give me increased responsibility: he let me attend the 2006 SIGMOD Conference in Chicago. It was at this that my eyes were opened to the world of database research. As an early graduate student Dr. Joseph Wilson exercised superhuman patience with me as I learned to grasp the fundamentals of paper writing.

Usage-Dependent Maintenance of Structured Web Data Sets

Usage-dependent maintenance of structured Web data sets. Dissertation submitted for the academic degree of Doctor of Natural Sciences (Dr. rer. nat.) at the Institute of Computer Science, Department of Mathematics and Computer Science, Freie Universität Berlin, by Dipl. Inform. Markus Luczak-Rösch. Berlin, August 2013. Reviewer: Prof. Dr.-Ing. Robert Tolksdorf (Freie Universität Berlin). First co-reviewer: Natalya F. Noy, PhD (Stanford University). Second co-reviewer: Dr. rer. nat. Elena Simperl (University of Southampton). Date of defense: 13.01.2014. To Johanna. To Levi, Yael and Mili. Thank you for letting me learn, through you, an attitude to life "... that is prepared to listen to critical arguments and to learn from experience. It is fundamentally an attitude that admits that I may be wrong, that you may be right, and that together we may perhaps get on the track of the truth." (Karl Popper) Abstract The Web of Data is the current shape of the Semantic Web that has gained momentum outside of the research community and become publicly visible. It is a matter of fact that the Web of Data does not fully exploit the primarily intended technology stack. Instead, the so-called Linked Data design issues [BL06], which are the basis for the Web of Data, rely on much more lightweight technologies. Openly available structured Web data sets are beginning to be used in real-world applications. The Linked Data research community investigates the overall goal to approach the Web-scale data integration problem in a way that distributes efforts between three contributing stakeholders on the Web of Data: the data publishers, the data consumers, and third parties.

Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation Alkhatib, Wael (2020)

Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation. Alkhatib, Wael (2020). DOI (TUprints): https://doi.org/10.25534/tuprints-00011890 License: CC-BY-ND 4.0 International - Creative Commons, Attribution, No Derivatives. Publication type: Dissertation. Department: 18 Department of Electrical Engineering and Information Technology. Source of the original: https://tuprints.ulb.tu-darmstadt.de/11890 SEMANTICALLY ENHANCED AND MINIMALLY SUPERVISED MODELS for Ontology Construction, Text Classification, and Document Recommendation. Dissertation approved by the Department of Electrical Engineering and Information Technology of Technische Universität Darmstadt for the academic degree of Doktor-Ingenieur (Dr.-Ing.), by Wael Alkhatib, M.Sc., born on 15 October 1988 in Hama, Syria. Chair: Prof. Dr.-Ing. Jutta Hanson. Reviewer: Prof. Dr.-Ing. Ralf Steinmetz. Co-reviewer: Prof. Dr.-Ing. Steffen Staab. Date of submission: 28 January 2020. Date of defense: 10 June 2020. University code D17, Darmstadt 2020. This document is provided by tuprints, e-publishing service of the Technical University Darmstadt. http://tuprints.ulb.tu-darmstadt.de [email protected] Please cite this document as: URN:nbn:de:tuda-tuprints-118909 URL:https://tuprints.ulb.tu-darmstadt.de/id/eprint/11890 This publication is licensed under the following Creative Commons License: Attribution-No Derivatives 4.0 International https://creativecommons.org/licenses/by-nd/4.0/deed.en Wael Alkhatib, M.Sc.: Semantically Enhanced and Minimally Supervised Models for Ontology Construction, Text Classification, and Document Recommendation © 28 January 2020. Supervisors: Prof. Dr.-Ing. Ralf Steinmetz, Prof. Dr.-Ing. Steffen Staab. Location: Darmstadt. Time frame: 28 January 2020. ABSTRACT The proliferation of deliverable knowledge on the web, along with the rapidly increasing number of accessible research publications, leaves researchers, students, and educators overwhelmed.

Download Special Issue

Scientific Programming. Scientific Programming Techniques and Algorithms for Data-Intensive Engineering Environments. Lead Guest Editor: Giner Alor-Hernández. Guest Editors: Jezreel Mejia-Miranda and José María Álvarez-Rodríguez. Copyright © 2018 Hindawi. All rights reserved. This is a special issue published in “Scientific Programming.” All articles are open access articles distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Editorial Board: M. E. Acacio Sanchez, Spain; Marco Aldinucci, Italy; Davide Ancona, Italy; Ferruccio Damiani, Italy; Sergio Di Martino, Italy; Basilio B. Fraguela, Spain; Carmine Gravino, Italy; Gianluigi Greco, Italy; Bormin Huang, USA; Chin-Yu Huang, Taiwan; Christoph Kessler, Sweden; Harald Köstler, Germany; José E. Labra, Spain; Thomas Leich, Germany; Piotr Luszczek, USA; Tomàs Margalef, Spain; Cristian Mateos, Argentina; Roberto Natella, Italy; Francisco Ortin, Spain; Can Özturan, Turkey; Danilo Pianini, Italy; Fabrizio Riguzzi, Italy; Michele Risi, Italy; Damian Rouson, USA; Giuseppe Scanniello, Italy; Ahmet Soylu, Norway; Emiliano Tramontana, Italy; Autilia Vitiello, Italy; Jan Weglarz, Poland

Ontology Learning and Its Application to Automated Terminology Translation

Natural Language Processing. Ontology Learning and Its Application to Automated Terminology Translation. Roberto Navigli and Paola Velardi, Università di Roma La Sapienza; Aldo Gangemi, Institute of Cognitive Sciences and Technology. Summary: The OntoLearn system for automated ontology learning extracts relevant domain terms from a corpus of text, relates them to appropriate concepts in a general-purpose ontology, and detects [...] Although the IT community widely acknowledges the usefulness of domain ontologies, especially in relation to the Semantic Web [1,2], we must overcome several barriers before they become practical and useful tools. Thus far, only a few specific research environments have ontologies. (The “What Is an Ontology?” sidebar on page 24 provides a definition and some background.) Many in the computational-linguistics research community use WordNet [3], but large-scale IT applications based on it require heavy customization. Thus, a critical issue is ontology construction: identifying, defining, and entering concept definitions. In large, complex application domains, this task can be lengthy, costly, and controversial, because people can have different points of view about the same concept. Two main approaches aid large-scale ontology construction. The first one facilitates manual ontology engineering by providing natural language processing tools, including editors, consistency checkers, mediators to support shared decisions, and [...] and is part of a more general ontology engineering architecture [4,5]. Here, we describe the system and an experiment in which we used a machine-learned tourism ontology to automatically translate multiword terms from English to Italian. The method can apply to other domains without manual adaptation. OntoLearn architecture: Figure 1 shows the elements of the architecture. Using the Ariosto language processor [6], OntoLearn extracts terminology from a corpus of domain text, such as specialized Web sites and warehouses or documents exchanged among members of a virtual community.

Ontology-Driven Automation of IoT-Based Human-Machine Interfaces Development

Ontology-Driven Automation of IoT-Based Human-Machine Interfaces Development. Konstantin Ryabinin (ORCID 0000-0002-8353-7641), Svetlana Chuprina (ORCID 0000-0002-2103-3771), and Konstantin Belousov (ORCID 0000-0003-4447-1288), Perm State University, Bukireva Str. 15, 614990, Perm, Russia [email protected], [email protected], [email protected] Abstract. The paper is devoted to the development of high-level tools to automate tangible human-machine interfaces creation bringing together IoT technologies and ontology engineering methods. We propose using ontology-driven approach to enable automatic generation of firmware for the devices and middleware for the applications to design from scratch or transform the existing M2M ecosystem with respect to new human needs and, if necessary, to transform M2M systems into human-centric ones. Thanks to our previous research, we developed the firmware and middleware generator on top of SciVi scientific visualization system that was proven to be a handy tool to integrate different data sources, including software solvers and hardware data providers, for monitoring and steering purposes. The high-level graphical user SciVi interface enables to design human-machine communication in terms of data flow and ontological specifications. Thereby the SciVi platform capabilities are sufficient to automatically generate all the necessary components for IoT ecosystem software. We tested our approach tackling the real-world problems of creating hardware device turning human gestures into semantics of spatiotemporal deixis, which relates to the verbal behavior of people having different psychological types. The device firmware generated by means of SciVi tools enables researchers to understand complex matters and helps them analyze the linguistic behavior of users of social networks with different psychological characteristics, and identify patterns inherent in their communication in social networks.

Semi-Automatic Terminology Ontology Learning Based on Topic Modeling

The paper was accepted at Engineering Applications of Artificial Intelligence on 9 May 2017. This paper can be cited as: Monika Rani, Amit Kumar Dhar, O.P. Vyas, Semi-automatic terminology ontology learning based on topic modeling, Engineering Applications of Artificial Intelligence, Volume 63, August 2017, Pages 108-125, ISSN 0952-1976, https://doi.org/10.1016/j.engappai.2017.05.006 (http://www.sciencedirect.com/science/article/pii/S0952197617300891). Semi-Automatic Terminology Ontology Learning Based on Topic Modeling. Monika Rani*, Amit Kumar Dhar and O. P. Vyas, Department of Information Technology, Indian Institute of Information Technology, Allahabad, India [email protected] Abstract- Ontologies provide features like a common vocabulary, reusability, and machine-readable content, and also allow for semantic search, facilitate agent interaction, and support the ordering and structuring of knowledge for Semantic Web (Web 3.0) applications. However, the challenge in ontology engineering is automatic learning, i.e., there is still a lack of a fully automatic approach that forms an ontology from a text corpus or dataset of various topics using machine learning techniques. In this paper, two topic modeling algorithms are explored, namely LSI & SVD and Mr.LDA, for learning a topic ontology. The objective is to determine the statistical relationship between documents and terms to build a topic ontology and ontology graph with minimum human intervention. Experimental analysis of building a topic ontology and semantically retrieving the corresponding topic ontology for the user's query demonstrates the effectiveness of the proposed approach. Keywords: Ontology Learning (OL), Latent Semantic Indexing (LSI), Singular Value Decomposition (SVD), Probabilistic Latent Semantic Indexing (pLSI), MapReduce Latent Dirichlet Allocation (Mr.LDA), Correlation Topic Modeling (CTM).

A Comparative Analysis for English and Ukrainian Texts Processing Based on Semantics and Syntax Approach

A Comparative Analysis for English and Ukrainian Texts Processing Based on Semantics and Syntax Approach. Victoria Vysotska, Svitlana Holoshchuk and Roman Holoshchuk, Lviv Polytechnic National University, S. Bandera Street, 12, Lviv, 79013, Ukraine. Abstract The article deals with the application of generating grammars in linguistic modelling. Analysis of sentence syntax modelling is used to automate studying and synthesising natural language texts. The complex research of automatic processing of English and Ukrainian texts considering the semantics and syntax of natural languages is conducted. A comparative linguistic approach to the syntax and semantics of English and Ukrainian languages synthesises the corpora of natural language texts. The automatic operation of relevant text data is carried out. Comparative analysis of these languages facilitates the development of the linguistic algorithm for converting natural language texts of Germanic and Slavic type. Keywords: Natural language processing, word order, Ukrainian language, NLP, English language, noun group, verb group, direct word order, grammatical category, base form, structural scheme, information resource processing, natural language text, terminal chain identification, terminal chain production, keywords identification, blocked words, rubrics. 1. Introduction Each language is unique in its structure and in how its linguistic units are constructed to deliver meaningful texts. For automatic processing of coherent data texts, developing the algorithms for analysis and

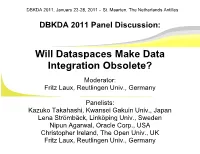

Will Dataspaces Make Data Integration Obsolete?

DBKDA 2011, January 23-28, 2011 – St. Maarten, The Netherlands Antilles. DBKDA 2011 Panel Discussion: Will Dataspaces Make Data Integration Obsolete? Moderator: Fritz Laux, Reutlingen Univ., Germany. Panelists: Kazuko Takahashi, Kwansei Gakuin Univ., Japan; Lena Strömbäck, Linköping Univ., Sweden; Nipun Agarwal, Oracle Corp., USA; Christopher Ireland, The Open Univ., UK; Fritz Laux, Reutlingen Univ., Germany. [Figure: The Dataspace Idea. Space of Data Management; axis labels include Functionality, Semantic Integration (high/low), and Time and Cost; adapted from Franklin, Halevy, Maier, 2005.] Dataspaces (DS) [Franklin, Halevy, Maier, 2005] is a new abstraction for Information Management. DS are [paraphrasing and commenting Franklin, 2009]: Inclusive: deal with all the data of interest, in whatever form => but semantics matters; we need access to the metadata! Derive schema from instances? Discovering new data sources => the Münchhausen bootstrap problem? (Illustration: Theodor Hosemann, 1807-1875.) Co-existence

Exploring Digital Preservation Strategies Using DLT in the Context Of

Forget-me-block: Exploring digital preservation strategies using Distributed Ledger Technology in the context of personal information management. By JAMES DAVID HACKMAN, Department of Computer Science, UNIVERSITY OF BRISTOL. A dissertation submitted to the University of Bristol in accordance with the requirements of the degree of Master of Science by advanced study in Computer Science in the Faculty of Engineering. 15TH SEPTEMBER 2020. arXiv:2011.05759v1 [cs.CY] 2 Nov 2020. EXECUTIVE SUMMARY Received wisdom portrays digital records as guaranteeing perpetuity; as the New York Times wrote a decade ago: “the web means the end of forgetting” [1]. The reality however is that digital records suffer similar risks of access loss as the analogue versions they replaced - but through the mechanisms of software, hardware and organisational change. The first two of these mechanisms are straightforward. Software change relates to how data is encoded - for instance later versions of Microsoft Word often cannot access documents written with earlier versions [2]. Likewise hardware formats obsolesce; even popular technologies such as the floppy disk reach a point where accessing data on these formats becomes increasingly difficult [3]. The third mechanism is however more abstract as it relates to societal structures, and ironically is often generated as a by-product of attempts to escape the first two risks. In our efforts to rid ourselves of hardware and software change these risks are often delegated to specialised external parties. Common use cases are those of conveying information to a future self, e.g. calendars, diaries, tasks, etc. These applications, categorised as Personal Information Management (PIM) [4, p.

Patterns in Ontology Engineering: Classification of Ontology Patterns

PATTERNS IN ONTOLOGY ENGINEERING: CLASSIFICATION OF ONTOLOGY PATTERNS. Eva Blomqvist, School of Engineering, Jönköping University, P.O. Box 1026, SE-551 11 Jönköping, Sweden [email protected] Kurt Sandkuhl, School of Engineering, Jönköping University, P.O. Box 1026, SE-551 11 Jönköping, Sweden [email protected] Keywords: Ontology Engineering, Ontology Patterns, Pattern Classification. Abstract: In Software Engineering, patterns are an accepted way to facilitate and support reuse. This paper focuses on patterns in the field of Ontology Engineering and proposes a classification scheme for ontology patterns. The scheme divides ontology patterns into five levels: Application Patterns, Architecture Patterns, Design Patterns, Semantic Patterns, and Syntactic Patterns. Semantic and Syntactic Patterns are quite well-researched but the higher levels of pattern abstraction are so far almost unexplored. To illustrate the possibilities of patterns on these levels some examples are discussed, together with ideas of future work. 1 INTRODUCTION Recent developments in the area of Ontology Engineering involve semi-automatic ontology construction to reduce time and effort of constructing an ontology. In particular for small-scale application contexts, reduction of effort and expert involvement is an important requirement. Ways of reducing this effort are to further facilitate semi-automatic construction of ontologies, but [...] 2 ONTOLOGY PATTERNS TODAY Ontology is a popular term today, used in many areas and defined in many different ways. In this paper ontology is defined as: An ontology is a hierarchically structured set of concepts describing a specific domain of knowledge that can be used to create a knowledge base. An ontology contains concepts, a subsumption hierarchy, arbitrary relations between concepts, and axioms.