Week 2: Causal Inference

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Causal Inference with Information Fields

Causal Inference with Information Fields Benjamin Heymann Michel De Lara Criteo AI Lab, Paris, France CERMICS, École des Ponts, Marne-la-Vallée, France [email protected] [email protected] Jean-Philippe Chancelier CERMICS, École des Ponts, Marne-la-Vallée, France [email protected] Abstract Inferring the potential consequences of an unobserved event is a fundamental scientific question. To this end, Pearl’s celebrated do-calculus provides a set of inference rules to derive an interventional probability from an observational one. In this framework, the primitive causal relations are encoded as functional dependencies in a Structural Causal Model (SCM), which maps into a Directed Acyclic Graph (DAG) in the absence of cycles. In this paper, by contrast, we capture causality without reference to graphs or functional dependencies, but with information fields. The three rules of do-calculus reduce to a unique sufficient condition for conditional independence: the topological separation, which presents some theoretical and practical advantages over the d-separation. With this unique rule, we can deal with systems that cannot be represented with DAGs, for instance systems with cycles and/or ‘spurious’ edges. We provide an example that cannot be handled – to the extent of our knowledge – with the tools of the current literature. 1 Introduction As the world shifts toward more and more data-driven decision-making, causal inference is taking more space in applied sciences, statistics and machine learning. This is because it allows for better, more robust decision-making, and provides a way to interpret the data that goes beyond correlation Pearl and Mackenzie [2018]. -

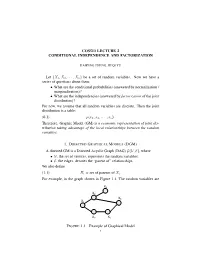

Cos513 Lecture 2 Conditional Independence and Factorization

COS513 LECTURE 2 CONDITIONAL INDEPENDENCE AND FACTORIZATION HAIPENG ZHENG, JIEQI YU Let {X1,X2, ··· ,Xn} be a set of random variables. Now we have a series of questions about them: • What are the conditional probabilities (answered by normalization / marginalization)? • What are the independencies (answered by factorization of the joint distribution)? For now, we assume that all random variables are discrete. Then the joint distribution is a table: (0.1) p(x1, x2, ··· , xn). Therefore, Graphic Model (GM) is a economic representation of joint dis- tribution taking advantage of the local relationships between the random variables. 1. DIRECTED GRAPHICAL MODELS (DGM) A directed GM is a Directed Acyclic Graph (DAG) G(V, E), where •V, the set of vertices, represents the random variables; •E, the edges, denotes the “parent of” relationships. We also define (1.1) Πi = set of parents of Xi. For example, in the graph shown in Figure 1.1, The random variables are X4 X4 X2 X2 X 6 X X1 6 X1 X3 X5 X3 X5 XZY XZY FIGURE 1.1. Example of Graphical Model 1 Y X Y Y X Y XZZ XZZ Y XYY XY XZZ XZZ XZY XZY 2 HAIPENG ZHENG, JIEQI YU {X1,X2 ··· ,X6}, and (1.2) Π6 = {X2,X3}. This DAG represents the following joint distribution: (1.3) p(x1:6) = p(x1)p(x2|x1)p(x3|x1)p(x4|x2)p(x5|x3)p(x6|x2, x5). In general, n Y (1.4) p(x1:n) = p(xi|xπi ) i=1 specifies a particular joint distribution. Note here πi stands for the set of indices of the parents of i. -

Data-Mining Probabilists Or Experimental Determinists?

Gopnik-CH_13.qxd 10/10/2006 1:39 PM Page 208 13 Data-Mining Probabilists or Experimental Determinists? A Dialogue on the Principles Underlying Causal Learning in Children Thomas Richardson, Laura Schulz, & Alison Gopnik This dialogue is a distillation of a real series of conver- Meno: Leaving aside the question of how children sations that took place at that most platonic of acade- learn for a while, can we agree on some basic prin- mies, the Center for Advanced Studies in the ciples for causal inference by anyone—child, Behavioral Sciences, between the first and third adult, or scientist? \edq1\ authors.\edq1\ The second author had determinist leanings to begin with and acted as an intermediary. Laplace: I think so. I think we can both generally agree that, subject to various caveats, two variables will not be dependent in probability unless there is Determinism, Faithfulness, and Causal some kind of causal connection present. Inference Meno: Yes—that’s what I’d call the weak causal Narrator: [Meno and Laplace stand in the corridor Markov condition. I assume that the kinds of of a daycare, observing toddlers at play through a caveats you have in mind are to restrict this princi- window.] ple to situations in which a discussion of cause and effect might be reasonable in the first place? Meno: Do you ever wonder how it is that children manage to learn so many causal relations so suc- Laplace: Absolutely. I don’t want to get involved in cessfully, so quickly? They make it all seem effort- discussions of whether X causes 2X or whether the less. -

Bayesian Networks

Bayesian Networks Read R&N Ch. 14.1-14.2 Next lecture: Read R&N 18.1-18.4 You will be expected to know • Basic concepts and vocabulary of Bayesian networks. – Nodes represent random variables. – Directed arcs represent (informally) direct influences. – Conditional probability tables, P( Xi | Parents(Xi) ). • Given a Bayesian network: – Write down the full joint distribution it represents. • Given a full joint distribution in factored form: – Draw the Bayesian network that represents it. • Given a variable ordering and some background assertions of conditional independence among the variables: – Write down the factored form of the full joint distribution, as simplified by the conditional independence assertions. Computing with Probabilities: Law of Total Probability Law of Total Probability (aka “summing out” or marginalization) P(a) = Σb P(a, b) = Σb P(a | b) P(b) where B is any random variable Why is this useful? given a joint distribution (e.g., P(a,b,c,d)) we can obtain any “marginal” probability (e.g., P(b)) by summing out the other variables, e.g., P(b) = Σa Σc Σd P(a, b, c, d) Less obvious: we can also compute any conditional probability of interest given a joint distribution, e.g., P(c | b) = Σa Σd P(a, c, d | b) = (1 / P(b)) Σa Σd P(a, c, d, b) where (1 / P(b)) is just a normalization constant Thus, the joint distribution contains the information we need to compute any probability of interest. Computing with Probabilities: The Chain Rule or Factoring We can always write P(a, b, c, … z) = P(a | b, c, …. -

(Ω, F,P) Be a Probability Spac

Lecture 9: Independence, conditional independence, conditional distribution Definition 1.7. Let (Ω, F,P ) be a probability space. (i) Let C be a collection of subsets in F. Events in C are said to be independent if and only if for any positive integer n and distinct events A1,...,An in C, P (A1 ∩ A2 ∩···∩ An)= P (A1)P (A2) ··· P (An). (ii) Collections Ci ⊂ F, i ∈ I (an index set that can be uncountable), are said to be independent if and only if events in any collection of the form {Ai ∈ Ci : i ∈ I} are independent. (iii) Random elements Xi, i ∈ I, are said to be independent if and only if σ(Xi), i ∈ I, are independent. A useful result for checking the independence of σ-fields. Lemma 1.3. Let Ci, i ∈ I, be independent collections of events. Suppose that each Ci has the property that if A ∈ Ci and B ∈ Ci, then A∩B ∈ Ci. Then σ(Ci), i ∈ I, are independent. Random variables Xi, i =1, ..., k, are independent according to Definition 1.7 if and only if k F(X1,...,Xk)(x1, ..., xk)= FX1 (x1) ··· FXk (xk), (x1, ..., xk) ∈ R Take Ci = {(a, b] : a ∈ R, b ∈ R}, i =1, ..., k If X and Y are independent random vectors, then so are g(X) and h(Y ) for Borel functions g and h. Two events A and B are independent if and only if P (B|A) = P (B), which means that A provides no information about the probability of the occurrence of B. Proposition 1.11. -

A Bunched Logic for Conditional Independence

A Bunched Logic for Conditional Independence Jialu Bao Simon Docherty University of Wisconsin–Madison University College London Justin Hsu Alexandra Silva University of Wisconsin–Madison University College London Abstract—Independence and conditional independence are fun- For instance, criteria ensuring that algorithms do not discrim- damental concepts for reasoning about groups of random vari- inate based on sensitive characteristics (e.g., gender or race) ables in probabilistic programs. Verification methods for inde- can be formulated using conditional independence [8]. pendence are still nascent, and existing methods cannot handle conditional independence. We extend the logic of bunched impli- Aiming to prove independence in probabilistic programs, cations (BI) with a non-commutative conjunction and provide a Barthe et al. [9] recently introduced Probabilistic Separation model based on Markov kernels; conditional independence can be Logic (PSL) and applied it to formalize security for several directly captured as a logical formula in this model. Noting that well-known constructions from cryptography. The key ingre- Markov kernels are Kleisli arrows for the distribution monad, dient of PSL is a new model of the logic of bunched implica- we then introduce a second model based on the powerset monad and show how it can capture join dependency, a non-probabilistic tions (BI), in which separation is interpreted as probabilistic analogue of conditional independence from database theory. independence. While PSL enables formal reasoning about Finally, we develop a program logic for verifying conditional independence, it does not support conditional independence. independence in probabilistic programs. The core issue is that the model of BI underlying PSL provides no means to describe the distribution of one set of variables I. -

Automating Path Analysis for Building Causal Models from Data

From: AAAI Technical Report WS-93-02. Compilation copyright © 1993, AAAI (www.aaai.org). All rights reserved. AutomatingPath Analysis for Building Causal Models from Data: First Results and Open Problems Paul R. Cohen Lisa BaUesteros AdamCarlson Robert St.Amant Experimental KnowledgeSystems Laboratory Department of Computer Science University of Massachusetts, Amherst [email protected]@cs.umass.edu [email protected] [email protected] Abstract mathematical basis than path analysis; they rely on evidence of nonindependence, a weaker criterion than Path analysis is a generalization of multiple linear regres- path analysis, which relies on evidence of correlation. sion that builds modelswith causal interpretations. It is Thosealgorithms will be discussed in a later section. an exploratory or discovery procedure for finding causal structure in correlational data. Recently, we have applied Wedeveloped the path analysis algorithm to help us path analysis to the problem of building models of AI discover causal explanations of how a complex AI programs, which are generally complex and poorly planning system works. The system, called Phoenix understood. For example, we built by hand a path- [Cohenet al., 89], simulates forest fires and the activities analytic causal model of the behavior of the Phoenix of agents such as bulldozers and helicopters. One agent, planner. Path analysis has a huge search space, however. called the fireboss, plans howthe others, whichare semi- If one measures N parameters of a system, then one can autonomous,should fight the fire; but things inevitably go build O(2N2) causal mbdels relating these parameters. wrong,winds shift, plans fail, bulldozers run out of gas, For this reason, we have developed an algorithm that and the fireboss soon has a crisis on its hands. -

Causal Inference and Data Fusion in Econometrics∗

TECHNICAL REPORT R-51 First version: Dec, 2019 Last revision: March, 2021 Causal Inference and Data Fusion in Econometrics∗ Paul Hunermund¨ Elias Bareinboim Copenhagen Business School Columbia University [email protected] [email protected] Learning about cause and effect is arguably the main goal in applied economet- rics. In practice, the validity of these causal inferences is contingent on a number of critical assumptions regarding the type of data that has been collected and the substantive knowledge that is available about the phenomenon under investiga- tion. For instance, unobserved confounding factors threaten the internal validity of estimates, data availability is often limited to non-random, selection-biased sam- ples, causal effects need to be learned from surrogate experiments with imperfect compliance, and causal knowledge has to be extrapolated across structurally hetero- geneous populations. A powerful causal inference framework is required in order to tackle all of these challenges, which plague essentially any data analysis to varying degrees. Building on the structural approach to causality introduced by Haavelmo (1943) and the graph-theoretic framework proposed by Pearl (1995), the artificial intelligence (AI) literature has developed a wide array of techniques for causal learn- ing that allow to leverage information from various imperfect, heterogeneous, and biased data sources (Bareinboim and Pearl, 2016). In this paper, we discuss recent advances made in this literature that have the potential to contribute to econo- metric methodology along three broad dimensions. First, they provide a unified and comprehensive framework for causal inference, in which the above-mentioned problems can be addressed in full generality. -

Path Analysis in Mplus: a Tutorial Using a Conceptual Model of Psychological and Behavioral Antecedents of Bulimic Symptoms in Young Adults

¦ 2019 Vol. 15 no. 1 Path ANALYSIS IN Mplus: A TUTORIAL USING A CONCEPTUAL MODEL OF PSYCHOLOGICAL AND BEHAVIORAL ANTECEDENTS OF BULIMIC SYMPTOMS IN YOUNG ADULTS Kheana Barbeau a, B, KaYLA Boileau A, FATIMA Sarr A & KEVIN Smith A A School OF Psychology, University OF Ottawa AbstrACT ACTING Editor Path ANALYSIS IS A WIDELY USED MULTIVARIATE TECHNIQUE TO CONSTRUCT CONCEPTUAL MODELS OF psychological, cognitive, AND BEHAVIORAL phenomena. Although CAUSATION CANNOT BE INFERRED BY Roland PfiSTER (Uni- VERSIT¨AT Wurzburg)¨ USING THIS technique, RESEARCHERS UTILIZE PATH ANALYSIS TO PORTRAY POSSIBLE CAUSAL LINKAGES BETWEEN Reviewers OBSERVABLE CONSTRUCTS IN ORDER TO BETTER UNDERSTAND THE PROCESSES AND MECHANISMS BEHIND A GIVEN phenomenon. The OBJECTIVES OF THIS TUTORIAL ARE TO PROVIDE AN OVERVIEW OF PATH analysis, step-by- One ANONYMOUS re- VIEWER STEP INSTRUCTIONS FOR CONDUCTING PATH ANALYSIS IN Mplus, AND GUIDELINES AND TOOLS FOR RESEARCHERS TO CONSTRUCT AND EVALUATE PATH MODELS IN THEIR RESPECTIVE fiELDS OF STUDY. These OBJECTIVES WILL BE MET BY USING DATA TO GENERATE A CONCEPTUAL MODEL OF THE psychological, cognitive, AND BEHAVIORAL ANTECEDENTS OF BULIMIC SYMPTOMS IN A SAMPLE OF YOUNG adults. KEYWORDS TOOLS Path analysis; Tutorial; Eating Disorders. Mplus. B [email protected] KB: 0000-0001-5596-6214; KB: 0000-0002-1132-5789; FS:NA; KS:NA 10.20982/tqmp.15.1.p038 Introduction QUESTIONS AND IS COMMONLY EMPLOYED FOR GENERATING AND EVALUATING CAUSAL models. This TUTORIAL AIMS TO PROVIDE ba- Path ANALYSIS (also KNOWN AS CAUSAL modeling) IS A multi- SIC KNOWLEDGE IN EMPLOYING AND INTERPRETING PATH models, VARIATE STATISTICAL TECHNIQUE THAT IS OFTEN USED TO DETERMINE GUIDELINES FOR CREATING PATH models, AND UTILIZING Mplus TO IF A PARTICULAR A PRIORI CAUSAL MODEL fiTS A RESEARCHER’S DATA CONDUCT PATH analysis. -

STATS 361: Causal Inference

STATS 361: Causal Inference Stefan Wager Stanford University Spring 2020 Contents 1 Randomized Controlled Trials 2 2 Unconfoundedness and the Propensity Score 9 3 Efficient Treatment Effect Estimation via Augmented IPW 18 4 Estimating Treatment Heterogeneity 27 5 Regression Discontinuity Designs 35 6 Finite Sample Inference in RDDs 43 7 Balancing Estimators 52 8 Methods for Panel Data 61 9 Instrumental Variables Regression 68 10 Local Average Treatment Effects 74 11 Policy Learning 83 12 Evaluating Dynamic Policies 91 13 Structural Equation Modeling 99 14 Adaptive Experiments 107 1 Lecture 1 Randomized Controlled Trials Randomized controlled trials (RCTs) form the foundation of statistical causal inference. When available, evidence drawn from RCTs is often considered gold statistical evidence; and even when RCTs cannot be run for ethical or practical reasons, the quality of observational studies is often assessed in terms of how well the observational study approximates an RCT. Today's lecture is about estimation of average treatment effects in RCTs in terms of the potential outcomes model, and discusses the role of regression adjustments for causal effect estimation. The average treatment effect is iden- tified entirely via randomization (or, by design of the experiment). Regression adjustments may be used to decrease variance, but regression modeling plays no role in defining the average treatment effect. The average treatment effect We define the causal effect of a treatment via potential outcomes. For a binary treatment w 2 f0; 1g, we define potential outcomes Yi(1) and Yi(0) corresponding to the outcome the i-th subject would have experienced had they respectively received the treatment or not. -

Conditional Independence and Its Representations*

Kybernetika, Vol. 25:2, 33-44, 1989. TECHNICAL REPORT R-114-S CONDITIONAL INDEPENDENCE AND ITS REPRESENTATIONS* JUDEA PEARL, DAN GEIGER, THOMAS VERMA This paper summarizes recent investigations into the nature of informational dependencies and their representations. Axiomatic and graphical representations are presented which are both sound and complete for specialized types of independence statements. 1. INTRODUCTION A central requirement for managing reasoning systems is to articulate the condi tions under which one item of information is considered relevant to another, given what we already know, and to encode knowledge in structures that display these conditions vividly as the knowledge undergoes changes. Different formalisms give rise to different definitions of relevance. However, the essence of relevance can be captured by a structure common to all formalisms, which can be represented axio- matically or graphically. A powerful formalism for informational relevance is provided by probability theory, where the notion of relevance is identified with dependence or, more specifically, conditional independence. Definition. If X, Y, and Z are three disjoint subsets of variables in a distribution P, then X and Yare said to be conditionally independent given Z, denotedl(X, Z, Y)P, iff P(x, y j z) = P(x | z) P(y \ z) for all possible assignments X = x, Y = y and Z = z for which P(Z — z) > 0.1(X, Z, Y)P is called a (conditional independence) statement. A conditional independence statement a logically follows from a set E of such statements if a holds in every distribution that obeys I. In such case we also say that or is a valid consequence of I. -

Causality, Conditional Independence, and Graphical Separation in Settable Systems

ARTICLE Communicated by Terrence Sejnowski Causality, Conditional Independence, and Graphical Separation in Settable Systems Karim Chalak Downloaded from http://direct.mit.edu/neco/article-pdf/24/7/1611/1065694/neco_a_00295.pdf by guest on 26 September 2021 [email protected] Department of Economics, Boston College, Chestnut Hill, MA 02467, U.S.A. Halbert White [email protected] Department of Economics, University of California, San Diego, La Jolla, CA 92093, U.S.A. We study the connections between causal relations and conditional in- dependence within the settable systems extension of the Pearl causal model (PCM). Our analysis clearly distinguishes between causal notions and probabilistic notions, and it does not formally rely on graphical representations. As a foundation, we provide definitions in terms of suit- able functional dependence for direct causality and for indirect and total causality via and exclusive of a set of variables. Based on these founda- tions, we provide causal and stochastic conditions formally characterizing conditional dependence among random vectors of interest in structural systems by stating and proving the conditional Reichenbach principle of common cause, obtaining the classical Reichenbach principle as a corol- lary. We apply the conditional Reichenbach principle to show that the useful tools of d-separation and D-separation can be employed to estab- lish conditional independence within suitably restricted settable systems analogous to Markovian PCMs. 1 Introduction This article studies the connections between