Tricore Architecture Overview

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Schedule 14A Employee Slides Supertex Sunnyvale

UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 SCHEDULE 14A Proxy Statement Pursuant to Section 14(a) of the Securities Exchange Act of 1934 Filed by the Registrant Filed by a Party other than the Registrant Check the appropriate box: Preliminary Proxy Statement Confidential, for Use of the Commission Only (as permitted by Rule 14a-6(e)(2)) Definitive Proxy Statement Definitive Additional Materials Soliciting Material Pursuant to §240.14a-12 Supertex, Inc. (Name of Registrant as Specified In Its Charter) Microchip Technology Incorporated (Name of Person(s) Filing Proxy Statement, if other than the Registrant) Payment of Filing Fee (Check the appropriate box): No fee required. Fee computed on table below per Exchange Act Rules 14a-6(i)(1) and 0-11. (1) Title of each class of securities to which transaction applies: (2) Aggregate number of securities to which transaction applies: (3) Per unit price or other underlying value of transaction computed pursuant to Exchange Act Rule 0-11 (set forth the amount on which the filing fee is calculated and state how it was determined): (4) Proposed maximum aggregate value of transaction: (5) Total fee paid: Fee paid previously with preliminary materials. Check box if any part of the fee is offset as provided by Exchange Act Rule 0-11(a)(2) and identify the filing for which the offsetting fee was paid previously. Identify the previous filing by registration statement number, or the Form or Schedule and the date of its filing. (1) Amount Previously Paid: (2) Form, Schedule or Registration Statement No.: (3) Filing Party: (4) Date Filed: Filed by Microchip Technology Incorporated Pursuant to Rule 14a-12 of the Securities Exchange Act of 1934 Subject Company: Supertex, Inc. -

LINEAR ALGEBRA METHODS in COMBINATORICS László Babai

LINEAR ALGEBRA METHODS IN COMBINATORICS László Babai and Péter Frankl Version 2.1∗ March 2020 ∗ Slight update of Version 2, 1992. © László Babai and Péter Frankl. 1988, 1992, 2020. Preface Due perhaps to a recognition of the wide applicability of their elementary concepts and techniques, both combinatorics and linear algebra have gained increased representation in college mathematics curricula in recent decades. The combinatorial nature of the determinant expansion (and the related difficulty in teaching it) may hint at the plausibility of some link between the two areas. A more profound connection, the use of determinants in combinatorial enumeration goes back at least to the work of Kirchhoff in the middle of the 19th century on counting spanning trees in an electrical network. It is much less known, however, that quite apart from the theory of determinants, the elements of the theory of linear spaces has found striking applications to the theory of families of finite sets. With a mere knowledge of the concept of linear independence, unexpected connections can be made between algebra and combinatorics, thus greatly enhancing the impact of each subject on the student's perception of beauty and sense of coherence in mathematics. If these adjectives seem inflated, the reader is kindly invited to open the first chapter of the book, read the first page to the point where the first result is stated (“No more than 32 clubs can be formed in Oddtown”), and try to prove it before reading on. (The effect would, of course, be magnified if the title of this volume did not give away where to look for clues.) What we have said so far may suggest that the best place to present this material is a mathematics enhancement program for motivated high school students. -

IBM Z Systems Introduction May 2017

IBM z Systems Introduction May 2017 IBM z13s and IBM z13 Frequently Asked Questions Worldwide ZSQ03076-USEN-15 Table of Contents: z13s Hardware; z13 Hardware; Performance; z13 Warranty; Hardware Management Console (HMC); Power requirements (including High Voltage DC Power option); Overhead Cabling and Power; z13 Water cooling option; Secure Service Container -

UNIT-I Mathematical Logic Statements and Notations

UNIT-I Mathematical Logic Statements and notations: A proposition or statement is a declarative sentence that is either true or false (but not both). For instance, the following are propositions: “Paris is in France” (true), “London is in Denmark” (false), “2 < 4” (true), “4 = 7” (false). However the following are not propositions: “what is your name?” (this is a question), “do your homework” (this is a command), “this sentence is false” (neither true nor false), “x is an even number” (it depends on what x represents), “Socrates” (it is not even a sentence). The truth or falsehood of a proposition is called its truth value. Connectives: Connectives are used for making compound propositions. The main ones are the following (p and q represent given propositions), listed as name, representation, meaning: Negation, ¬p, “not p”; Conjunction, p ∧ q, “p and q”; Disjunction, p ∨ q, “p or q (or both)”; Exclusive Or, p ⊕ q, “either p or q, but not both”; Implication, p → q, “if p then q”; Biconditional, p ↔ q, “p if and only if q”. Truth Tables: Logical identity Logical identity is an operation on one logical value, typically the value of a proposition, that produces a value of true if its operand is true and a value of false if its operand is false. The truth table for the logical identity operator is as follows (operand p, result p): T gives T; F gives F. Logical negation Logical negation is an operation on one logical value, typically the value of a proposition, that produces a value of true if its operand is false and a value of false if its operand is true. -

Micro-Circuits for High Energy Physics*

MICRO-CIRCUITS FOR HIGH ENERGY PHYSICS* Paul F. Kunz Stanford Linear Accelerator Center Stanford University, Stanford, California, U.S.A. ABSTRACT Microprogramming is an inherently elegant method for implementing many digital systems. It is a mixture of hardware and software techniques with the logic subsystems controlled by "instructions" stored in a memory. In the past, designing microprogrammed systems was difficult, tedious, and expensive because the available components were capable of only limited number of functions. Today, however, large blocks of microprogrammed systems have been incorporated into a single I.C., thus microprogramming has become a simple, practical method. 1. INTRODUCTION 1.1 BRIEF HISTORY OF MICROCIRCUITS The first question which arises when one talks about microcircuits is: What is a microcircuit? The answer is simple: a complete circuit within a single integrated-circuit (I.C.) package or chip. The next question one might ask is: What circuits are available? The answer to this question is also simple: it depends. It depends on the economics of the circuit for the semiconductor manufacturer, which depends on the technology he uses, which in turn changes as a function of time. Thus to understand what microcircuits are available today and what makes them different from those of yesterday it is interesting to look into the economics of producing microcircuits. The basic element in a logic circuit is a gate, which is a circuit with a number of inputs and one output and it performs a basic logical function such as AND, OR, or NOT. [Figure 1: Basic TTL Gate.] [Figure 2: Truth Table for NAND Gate. A input, B input, C output: false, false, true; false, true, true; true, false, true; true, true, false.] [Figure 3: Logical NOT Circuit.] -

New Approaches for Memristive Logic Computations

Portland State University PDXScholar Dissertations and Theses Dissertations and Theses 6-6-2018 New Approaches for Memristive Logic Computations Muayad Jaafar Aljafar Portland State University Let us know how access to this document benefits you. Follow this and additional works at: https://pdxscholar.library.pdx.edu/open_access_etds Part of the Electrical and Computer Engineering Commons Recommended Citation Aljafar, Muayad Jaafar, "New Approaches for Memristive Logic Computations" (2018). Dissertations and Theses. Paper 4372. 10.15760/etd.6256 This Dissertation is brought to you for free and open access. It has been accepted for inclusion in Dissertations and Theses by an authorized administrator of PDXScholar. For more information, please contact [email protected]. New Approaches for Memristive Logic Computations by Muayad Jaafar Aljafar A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical and Computer Engineering Dissertation Committee: Marek A. Perkowski, Chair John M. Acken Xiaoyu Song Steven Bleiler Portland State University 2018 © 2018 Muayad Jaafar Aljafar Abstract Over the past five decades, exponential advances in device integration in microelectronics for memory and computation applications have been observed. These advances are closely related to miniaturization in integrated circuit technologies. However, this miniaturization is reaching the physical limit (i.e., the end of Moore’s Law). This miniaturization is also causing a dramatic problem of heat dissipation in integrated circuits. Additionally, approaching the physical limit of semiconductor devices in fabrication process increases the delay of moving data between computing and memory units hence decreasing the performance. The market requirements for faster computers with lower power consumption can be addressed by new emerging technologies such as memristors. -

Download a PDF Version of the 2017 Annual Review

Secure Technology Alliance 191 Clarksville Road Princeton Junction, New Jersey 08550 Annual Review 2017 A Secure Technology Alliance Publication Volume 7 www.securetechalliance.org EXECUTIVE DIRECTOR’S LETTER: A MESSAGE FROM RANDY VANDERHOOF Delivering Value to a Diverse Market Thank you for taking the time to read the 2017 Annual Review. This publication captures the best aspects of the membership experience for 2017 that hundreds of individual members and their organizations helped to provide. This year was especially significant, as the organization expanded its mission beyond smart cards and was re-branded as the Secure Technology Alliance. The new name and scope allows the Alliance to include embedded chip technology, hardware and software, and the future of digital security in all forms. The vast number of deliverables and member-driven activities recorded in the publication illustrates the diversity of the markets we serve and the commitment of all the industry professionals who contribute their knowledge and leadership toward expanding the market for smart card and related secure chip technologies. CHANGE COMES WITH NEW OPPORTUNITIES The decision to expand the mission and rebrand the organization was driven by the changes in the secure chip industry, mostly from mobile technology and the growth of Internet-connected devices. This does not mean the market for smart cards has disappeared. In fact, over the last few years, the U.S. -

Computer Architecture Out-Of-Order Execution

Computer Architecture Out-of-order Execution By Yoav Etsion With acknowledgement to Dan Tsafrir, Avi Mendelson, Lihu Rappoport, and Adi Yoaz Computer Architecture 2013– Out-of-Order Execution The need for speed: Superscalar • Remember our goal: minimize CPU Time CPU Time = duration of clock cycle × CPI × IC • So far we have learned that in order to Minimize clock cycle ⇒ add more pipe stages Minimize CPI ⇒ utilize pipeline Minimize IC ⇒ change/improve the architecture • Why not make the pipeline deeper and deeper? Beyond some point, adding more pipe stages doesn’t help, because Control/data hazards increase, and become costlier • (Recall that in a pipelined CPU, CPI=1 only w/o hazards) • So what can we do next? Reduce the CPI by utilizing ILP (instruction level parallelism) We will need to duplicate HW for this purpose… A simple superscalar CPU • Duplicates the pipeline to accommodate ILP (IPC > 1) ILP=instruction-level parallelism • Note that duplicating HW in just one pipe stage doesn’t help e.g., when having 2 ALUs, the bottleneck moves to other stages [Pipeline diagram: IF ID EXE MEM WB] • Conclusion: Getting IPC > 1 requires to fetch/decode/exe/retire >1 instruction per clock [Pipeline diagram: IF ID EXE MEM WB] Example: Pentium Processor • Pentium fetches & decodes 2 instructions per cycle • Before register file read, decide on pairing Can the two instructions be executed in parallel? (yes/no) [Pipeline diagram: IF ID, then u-pipe and v-pipe] • Pairing decision is based… On data -

Performance of a Computer (Chapter 4) Vishwani D

ELEC 5200-001/6200-001 Computer Architecture and Design Fall 2013 Performance of a Computer (Chapter 4) Vishwani D. Agrawal & Victor P. Nelson Department of Electrical and Computer Engineering Auburn University, Auburn, AL 36849 ELEC 5200-001/6200-001 Performance Fall 2013 . Lecture 1 What is Performance? Response time: the time between the start and completion of a task. Throughput: the total amount of work done in a given time. Some performance measures: MIPS (million instructions per second). MFLOPS (million floating point operations per second), also GFLOPS, TFLOPS (10^12), etc. SPEC (Standard Performance Evaluation Corporation) benchmarks. LINPACK benchmarks, floating point computing, used for supercomputers. Synthetic benchmarks. Small and Large Numbers Small: 10^-3 milli (m), 10^-6 micro (μ), 10^-9 nano (n), 10^-12 pico (p), 10^-15 femto (f), 10^-18 atto, 10^-21 zepto, 10^-24 yocto. Large: 10^3 kilo (k), 10^6 mega (M), 10^9 giga (G), 10^12 tera (T), 10^15 peta (P), 10^18 exa, 10^21 zetta, 10^24 yotta. Computer Memory Size (number: prefix, bits, bytes) 2^10 = 1,024: K, Kb, KB; 2^20 = 1,048,576: M, Mb, MB; 2^30 = 1,073,741,824: G, Gb, GB; 2^40 = 1,099,511,627,776: T, Tb, TB. Units for Measuring Performance Time in seconds (s), microseconds (μs), nanoseconds (ns), or picoseconds (ps). Clock cycle: period of the hardware clock. Example: one clock cycle means 1 nanosecond for a 1GHz clock frequency (or 1GHz clock rate). CPU time = (CPU clock cycles)/(clock rate). Cycles per instruction (CPI): average number of clock cycles used to execute a computer instruction. -

Parallel Computing

Lecture 1: Computer Organization Outline • Overview of parallel computing • Overview of computer organization – Intel 8086 architecture • Implicit parallelism • von Neumann bottleneck • Cache memory – Writing cache-friendly code Why parallel computing • Solving an n × n linear system Ax=b by using Gaussian elimination takes ≈ (1/3)n^3 flops. • On Core i7 975 @ 4.0 GHz, which is capable of about 60-70 Gigaflops (n, flops, time): n = 1000, 3.3×10^8 flops, 0.006 seconds; n = 1000000, 3.3×10^17 flops, 57.9 days What is parallel computing? • Serial computing • Parallel computing https://computing.llnl.gov/tutorials/parallel_comp Milestones in Computer Architecture • Analytic engine (mechanical device), 1833 – Forerunner of modern digital computer, Charles Babbage (1792-1871) at University of Cambridge • Electronic Numerical Integrator and Computer (ENIAC), 1946 – Presper Eckert and John Mauchly at the University of Pennsylvania – The first, completely electronic, operational, general-purpose analytical calculator. 30 tons, 72 square meters, 200KW. – Read in 120 cards per minute, Addition took 200µs, Division took 6 ms. • IAS machine, 1952 – John von Neumann at Princeton’s Institute of Advanced Studies (IAS) – Program could be represented in digit form in the computer memory, along with data. Arithmetic could be implemented using binary numbers – Most current machines use this design • The transistor was invented at Bell Labs in 1948 by J. Bardeen, W. Brattain and W. Shockley. • PDP-1, 1960, DEC – First minicomputer (transistorized computer) • PDP-8, 1965, DEC – A single bus -

Safe and Secure Model-Driven Design for Embedded Systems Letitia Li

Safe and secure model-driven design for embedded systems Letitia Li To cite this version: Letitia Li. Safe and secure model-driven design for embedded systems. Embedded Systems. Université Paris-Saclay, 2018. English. NNT : 2018SACLT002. tel-01894734 HAL Id: tel-01894734 https://pastel.archives-ouvertes.fr/tel-01894734 Submitted on 12 Oct 2018 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Approche Orientée Modèles pour la Sûreté et la Sécurité des Systèmes Embarqués Doctoral thesis of Université Paris-Saclay, prepared at Telecom ParisTech Doctoral school no. 580 (STIC) NNT : 2018SACLT002 Doctoral specialty: Computer Science Thesis presented and defended at Biot, 3 September 2018, by LETITIA W. LI Composition of the Jury: Prof. Philippe Collet, Professor, Université Côte d’Azur, President; Prof. Guy Gogniat, Professor, Université de Bretagne Sud, Reviewer; Prof. Maritta Heisel, Professor, University Duisburg-Essen, Reviewer; Prof. Jean-Luc Danger, Professor, Telecom ParisTech, Examiner; Dr. Patricia Guitton -

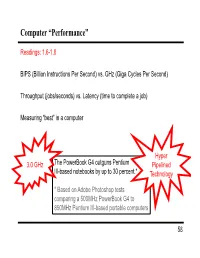

Computer “Performance”

Computer “Performance” Readings: 1.6-1.8 BIPS (Billion Instructions Per Second) vs. GHz (Giga Cycles Per Second) Throughput (jobs/seconds) vs. Latency (time to complete a job) Measuring “best” in a computer [Advertisement excerpts: “3.0 GHz Hyper Pipelined Technology”; “The PowerBook G4 outguns Pentium III-based notebooks by up to 30 percent.* * Based on Adobe Photoshop tests comparing a 500MHz PowerBook G4 to 850MHz Pentium III-based portable computers”] Performance Example: Homebuilders (Builder: Time per House, Houses Per Month, House Options, Dollars Per House) Self-build: 24 months, 1/24, Infinite, $200,000; Contractor: 3 months, 1, 100, $400,000; Prefab: 6 months, 1,000, 1, $250,000. Which is the “best” home builder? Homeowner on a budget? Rebuilding Haiti? Moving to wilds of Alaska? Which is the “speediest” builder? Latency: how fast is one house built? Throughput: how long will it take to build a large number of houses? Computer Performance Primary goal: execution time (time from program start to program completion) \( \text{Performance} = \frac{1}{\text{ExecutionTime}} \) To compare machines, we say “X is n times faster than Y” \( n = \frac{\text{Performance}_x}{\text{Performance}_y} = \frac{\text{ExecutionTime}_y}{\text{ExecutionTime}_x} \) Example: Machine Orange and Grape run a program Orange takes 5 seconds, Grape takes 10 seconds Orange is _____ times faster than Grape Execution Time Elapsed Time counts everything (disk and memory accesses, I/O , etc.) a useful number, but often not good for comparison purposes CPU time doesn't count I/O or time spent running other programs can be broken up into system time, and user time Example: Unix “time” command linux15.ee.washington.edu> time javac CircuitViewer.java 3.370u 0.570s 0:12.44 31.6% Our focus: user CPU time time spent executing the lines of code that are "in" our program CPU Time \( \text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \text{Clock period} \) \( \text{CPU execution time for a program} = \text{CPU clock cycles for a program} \times \frac{1}{\text{Clock rate}} \) Application example: A program takes 10 seconds on computer Orange, with a 400MHz clock.
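
The relations in the last excerpt (CPU execution time equals clock cycles divided by clock rate, and "X is n times faster than Y" meaning n is the ratio of Y's execution time to X's) can be checked with a few lines of Python. This is only a minimal sketch using the numbers quoted in the excerpt; the function names `cpu_time_seconds`, `cycles_for` and `times_faster` are hypothetical and do not come from the slides.

```python
# Minimal sketch of the performance relations quoted in the excerpt above.
# All function names are hypothetical, chosen for illustration only.

def cpu_time_seconds(clock_cycles: float, clock_rate_hz: float) -> float:
    """CPU execution time = CPU clock cycles / clock rate."""
    return clock_cycles / clock_rate_hz


def cycles_for(cpu_time_s: float, clock_rate_hz: float) -> float:
    """Rearranged form: CPU clock cycles = CPU execution time * clock rate."""
    return cpu_time_s * clock_rate_hz


def times_faster(exec_time_x_s: float, exec_time_y_s: float) -> float:
    """n such that X is n times faster than Y: ExecutionTime_y / ExecutionTime_x."""
    return exec_time_y_s / exec_time_x_s


# Orange takes 5 seconds and Grape takes 10 seconds on the same program.
n = times_faster(5.0, 10.0)

# A program that takes 10 seconds on a 400 MHz clock uses this many cycles.
orange_cycles = cycles_for(10.0, 400e6)

print(f"Orange is {n} times faster than Grape")
print(f"Cycles on Orange: {orange_cycles:.3g}")
```

With the quoted numbers, the ratio works out to 2 and the cycle count to 4 × 10^9, which follows directly from the two formulas.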