Multimedia Terminal for Digital Television

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


TV Channel Distribution in Europe: Table of Contents

This report covers 238 international channels/networks across 152 major operators in 34 EMEA countries. Of the total, 67 channels (28%) transmit in high definition (HD). The report shows which international channels are carried by which operator, and which tier or package each channel appears on. It allows for easy comparison between operators, revealing the gaps and showing the different tiers on which a channel appears across operators.

Published in September 2012, this 168-page electronically delivered report comes in two parts:

- A 128-page PDF giving an executive summary, comparison tables and country-by-country detail.
- A 40-page Excel workbook allowing you to manipulate the data between countries and by channel.

Countries and operators covered:

- Albania: Digitalb DTT; Digitalb Satellite; Tring TV DTT; Tring TV Satellite
- Austria: A1/Telekom Austria; Austriasat; Liwest; Salzburg; UPC; Sky
- Belgium: Belgacom; Numericable; Telenet; VOO; Telesat; TV Vlaanderen
- Bulgaria: Blizoo; Bulsatcom; Satellite BG; Vivacom
- Croatia: Bnet Cable; Bnet Satellite Total TV; Digi TV; Max TV/T-HT
- Czech Rep: CS Link; Digi TV; freeSAT (formerly UPC Direct); O2; Skylink; UPC Cable
- Denmark: Boxer; Canal Digital; Stofa; TDC; Viasat; You See
- Estonia: Elion nutitv; Starman; ZUUMtv; Viasat
- Finland: Canal Digital; DNA Welho; Elisa; Plus TV; Sonera; Viasat Satellite
- France: Bouygues Telecom; CanalSat; Numericable; Orange DSL & fiber; SFR; TNT Sat
- Germany: Deutsche Telekom; HD+; Kabel -

Grelha De Canais Pacotes Zap

GRELHA DE CANAIS PACOTES ZAP CANAIS CATEGORIA IDIOMA POSIÇÃO MINI MAX PREMIUM PLUS TPA Internacional Internacional Português 4 x x x TVM 1 Nacional Português 5 x x x STV Nacional Português 6 x x x TIM Nacional Português 7 x x x TVM 2 Nacional Português 8 x x x STV Notícias Informação Português 9 x x x Globo HD Generalista Português 10 x Globo SD Generalista Português 11 x TVI Internacional Generalista Português 12 x x SIC Mulher Lifestyle Português 13 x x RTP África Generalista Português 14 x x x RTP Informação Informação Português 15 x x x TVI 24 Informação Português 16 x x x SIC Notícias Informação Português 17 x x CMTV Informação Português 18 x x x RTP INTERNACIONAL Informação Português 19 x x x SportTV África Desporto Português 20 x SportTV África HD Desporto Português 21 x SportTV África 2 HD Desporto Português 23 x x Benfica TV Desporto Português 24 x NBA TV Desporto Inglês 25 x x FOX Sports Desporto Inglês 26 x x PFC Desporto Português 27 x x Eurosport News Desporto Português 28 x x x Porto Canal Generalista Português 29 x x Abola TV Desporto Português 30 x x x EXTREME SPORTS CHANNEL Desporto Inglês 31 x x x Motorvision Desporto Alemão 32 x x Caça e Pesca Desporto Português 33 x x Fight Network Desporto Inglês 34 x Nautical Channel Lifestyle Inglês 35 x x Outdoor Desporto Francês 36 x Real Madrid TV Desporto Português 37 x x Manchester United TV Desporto Inglês 38 x x Sporting TV Desporto Português 39 x x Panda Infantil Português 40 x x x Panda Biggs Infantil Português 41 x x SIC K Infantil Português 42 x x x Baby First TV Infantil -

Chellomedia Overviewvf.Pdf

Chellomedia Overview, June 2013

Company Overview

- Chellomedia produces and distributes channels in over 125 countries and 27 languages
  - Reaches over 375M TV households in EMEA and Latin America
- Owns 48 channels and has 20 channel JVs with third parties including CBS, Pulsat and Zon Multimedia (1)
  - Includes brands across lifestyle, entertainment, movies, sports and dramas
- Serves as the international content division of Liberty Global ("Liberty"), a public company with approximately $45BN in enterprise value
  - Considers Chellomedia non-core and is starting an auction sales process

[Figure: pie charts of CY 2013E TV Revenue by Geography and CY 2013E TV Revenue by Genre]

Source: Preliminary financials based on estimated or proprietary information provided by investment banks
(1) Channel count and data as of 31-Dec-2012

Business Units

- Operator of global thematic channels
- Largest independent channel operator in Spain & Portugal
- Leading Pay-TV channels provider across the CEE region
- Provider of premium channels in the Netherlands
- Provider of play-out services, TV distribution and content delivery
- Provider of Pay-TV channels in Latin America

Channels and JVs (1): 17 Channels; 22 Channels; 13 Channels; 4 Channels; 12 Channels. JV notes, in extracted order: (of which 8 through JVs); (of which 7 through JVs); (of which 1 through JV); (of which 4 through JVs)

Headquarters: London; Madrid; Budapest; Amsterdam; Amsterdam; Miami/Buenos Aires -

Directory English

DIRECTORY (ENGLISH)

LOCATION

Vila Monte Farm House is located in Moncarapacho, between Olhão and Tavira, only 10 minutes away from the coast. With 55 rooms and 4 different buildings, Vila Monte bewitches you with its timeless simplicity, graceful peacefulness and the perfect reinterpretation of the Algarve spirit, now translated into a laid-back, genuine atmosphere and a boho-chic lifestyle.

Due to its privileged location between the Algarve mountain range and the ocean, Vila Monte Farm House offers a unique opportunity to discover different angles of a region mostly reputed for its sunshine and alluring beaches. One of these "angles" is the town of Olhão: a complete surprise, a town which is mostly a combination of scenery and sounds that reveal a lifestyle centred around the sea. Fishing has always been the typical livelihood of its inhabitants, and its importance is evident everywhere you look: from the harbour, which becomes noisily alive when boats return from their fishing trips, to the amazing diversity of fish and seafood displayed on typical market stalls. Olhão fishermen are mythical figures and are widely reputed for their mastery, both as fishermen and as cooks.

Saturday is a special day: a real pilgrimage takes place when small producers from the Algarve hills arrive at the market to sell their fruits and vegetables. Surrender yourself to Olhão, a lively fishing town which is home to some of the most charming landscapes in the region as well as some of Europe's best beaches. The abundance of seafood, allied to the local talent for transforming it into heavenly dishes, has earned Olhão the reputation of being the Algarve's gastronomic capital.


arXiv:1901.04985v1 [cs.DC] 12 Jan 2019

NNStreamer: Stream Processing Paradigm for Neural Networks, Toward Efficient Development and Execution of On-Device AI Applications

MyungJoo Ham¹, Ji Joong Moon¹, Geunsik Lim¹, Wook Song¹, Jaeyun Jung¹, Hyoungjoo Ahn¹, Sangjung Woo¹, Youngchul Cho¹, Jinhyuck Park², Sewon Oh¹, Hong-Seok Kim¹,³

¹Samsung Research, Samsung Electronics; ²Biotech Academy, Samsung BioLogics; ³Left the affiliation

¹,²{myungjoo.ham, jijoong.moon, geunsik.lim, wook16.song, jy1210.jung, hello.ahn, sangjung.woo, rams.cho, jinhyuck83.park, sewon.oh, hongse.kim}@samsung.com

Abstract

We propose nnstreamer, a software system that handles neural networks as filters of stream pipelines, applying the stream processing paradigm to neural network applications. A new trend with the widespread use of deep neural network applications is on-device AI; i.e., processing neural networks directly on mobile devices or edge/IoT devices instead of cloud servers. Emerging privacy issues, data transmission costs, and operational costs signify the need for on-device AI, especially when a huge number of devices with real-time data processing are deployed.

[From the adjacent column of the first page:]

3. Reduce operating cost by off-loading computation to devices from servers. This cost is often neglected; it is, however, significant if billions of devices are to be deployed.

We do not discuss the potential advantage, distributing workloads across edge devices, because it is not in the scope of this paper, but of future work.

On-device AI achieves such advantages by processing directly in the nodes where data exist so that data transmission is reduced and sensitive data are kept inside. However, on-device AI induces significant challenges. Normally, edge -
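The idea of treating a neural network as one filter in a stream pipeline can be illustrated with a minimal sketch. This is not NNStreamer's API: it uses plain Python generators, and every name in it (`source`, `normalize`, `toy_model`, `run_pipeline`) is hypothetical. The "model" is a stand-in weighted sum, assumed only to show that an inference step can be chained exactly like any other stream filter.

```python
from typing import Callable, Iterator, List

Frame = List[float]
Filter = Callable[[Iterator[Frame]], Iterator[Frame]]


def source(n_frames: int, width: int) -> Iterator[Frame]:
    """Hypothetical stream source: yields small synthetic frames."""
    for i in range(n_frames):
        yield [float(i + j) for j in range(width)]


def normalize(frames: Iterator[Frame]) -> Iterator[Frame]:
    """Pre-processing filter: scale each frame by its peak value."""
    for frame in frames:
        peak = max(frame) or 1.0  # avoid dividing by zero on an all-zero frame
        yield [x / peak for x in frame]


def toy_model(weights: Frame) -> Filter:
    """Stand-in for a neural network: a single weighted sum per frame."""
    def apply(frames: Iterator[Frame]) -> Iterator[Frame]:
        for frame in frames:
            yield [sum(w * x for w, x in zip(weights, frame))]
    return apply


def run_pipeline(src: Iterator[Frame], filters: List[Filter]) -> List[Frame]:
    """Chain the filters lazily over the stream, then drain it."""
    stream = src
    for f in filters:
        stream = f(stream)
    return list(stream)


# The model is just another element in the filter list.
results = run_pipeline(source(3, 4), [normalize, toy_model([0.25] * 4)])
print(results)
```

Because each stage is a generator, frames flow through the chain one at a time rather than being buffered as a whole, which is the property that makes the stream view attractive for continuous on-device input.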

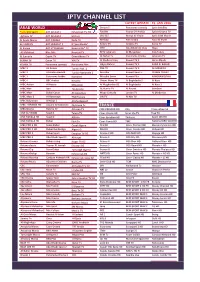

Iptv Channel List Latest Update : 15

IPTV CHANNEL LIST LATEST UPDATE : 15. JAN 2016 ARAB WORLD Dream 2 Panorama comedy Syria Satellite *new Nile Sport ART AFLAM 1 Echourouk TV HD Fatafet France 24 Arabia Syrian Drama TV Aghapy TV ART AFLAM 2 Elsharq LBC SAT Rusiya Al-Yaum Syria 18th March Al Aoula Maroc ART CINEMA Kaifa TV Melody FOX arabia Nour El Sham AL HANEEN ART HEKAYAT 2 N*geo Abuda* Future TV Arabica TV Sama TV Al Karma ART H*ZAMANE Noorsat Sh* TV OTV Abu Dhabi AL Oula Heya Al Malakoot Blue Nile Noorsat TV MTV Lebanon Al Mayadeen Iraq Today Al Sumaria Coptic TV Orient News TV Al Nahar Tv Panorama Drama Lebanon TV BENNA TV Coran TV VIN TV Al Rasheed Iraq Kuwait TV 1 Hona Alquds YEMEN TV Panorama comedy Panorama Film Libya Alahrar Kuwait TV 2 SADA EL BALAD MBC 1 AL Arabia Tunisie Nat. 1 DW-TV Kuwait TV 3 AL MASRIYA MBC 2 Al Arabia Hadath Tunisie Nationale 2 Mazzika Kuwait Sport + YEMEN TODAY MBC 3 Euronews Arabia Hannibal Mazzika Zoom Kuwait Plus KAHERAWELNAS MBC 4 BBC Arabia Nessma Orient News TV Al Baghdadia 2 Al Kass MBC Action Al Resala Ettounisia Al Magharibia DZ Al Baghdadia Al Kass 2 MBC Max Iqra Tounessna AL Hurria TV Al Fourat SemSem MBC Masr Dubai Coran Al Janoubiya Moga Comedy Jordan TV Al Ekhbariya MBC Masr 2 Al Haramayn TNN Tunisia ON TV Al Nas TV MBC Bolywood Al Majd 3 Al Moutawasit MBC + DRAMA HD Assuna Annabaouia Zaytouna Tv FRANCE MBC Drama Rahma TV Al Insen TV CINE PREMIER HD City Trace urban hd OSN AL YAWM Saudi 1 Telvza TV Cine+ Classic HD Sci et Vie TV Trek TV OSN YAHALA HD Saudi 2 Attasia Cine+ Emotion HD Thalassa Sport 365 HD OSN YAHALA HD Dubai -

Grelha-De-Frequencias-Tv-Analogica.Pdf

ANALOG TV FREQUENCY GRID

Left columns: Lisbon and Setúbal districts (Palmela). Right columns: Algarve region (Portimão).

| Prog. | Broadcast | Palmela freq. (MHz) | Palmela channel | Portimão freq. (MHz) | Portimão channel |
|---|---|---|---|---|---|
| 1 | RTP1 | 182.25 | CC6 | 189.25 | CC7 |
| 2 | RTP2 | 196.25 | CC8 | 203.25 | CC9 |
| 3 | SIC | 217.25 | CC11 | 217.25 | CC11 |
| 4 | TVI | 224.25 | CC12 | 224.25 | CC12 |
| 5 | RTP Informação | 231.25 | S11 | 231.25 | S11 |
| 6 | SIC Notícias | 238.25 | S12 | 238.25 | S12 |
| 7 | TVI 24 | 245.25 | S13 | 245.25 | S13 |
| 8 | SIC Mulher | 679.25 | CC47 | 679.25 | CC47 |
| 9 | SIC Radical | 687.25 | CC48 | 687.25 | CC48 |
| 10 | Globo | 703.25 | CC50 | 703.25 | CC50 |
| 11 | RTP África | 695.25 | CC49 | 695.25 | CC49 |
| 12 | RTP Memória | 252.25 | S14 | 252.25 | S14 |
| 13 | Discovery | 119.25 | S3 | 119.25 | S3 |
| 14 | Odisseia | 126.25 | S4 | 126.25 | S4 |
| 15 | História | 133.25 | S5 | 133.25 | S5 |
| 16 | National Geographic Channel | 655.25 | CC44 | 655.25 | CC44 |
| 17 | A&E | 140.25 | S6 | 140.25 | S6 |
| 18 | AXN | 280.25 | S18 | 280.25 | S18 |
| 19 | FOX | 711.25 | CC51 | 711.25 | CC51 |
| 20 | Fox Movies | 175.25 | CC5 | 175.25 | CC5 |
| 21 | Hollywood | 287.25 | S19 | 287.25 | S19 |
| 22 | AMC | 259.25 | S15 | 259.25 | S15 |
| 23 | Disney Channel | 743.25 | CC55 | 743.25 | CC55 |
| 24 | Cartoon Network | 719.25 | CC52 | 719.25 | CC52 |
| 25 | Panda | 266.25 | S16 | 266.25 | S16 |
| 26 | Eurosport 1 | 273.25 | S17 | 273.25 | S17 |
| 27 | Fox Life | 294.25 | S20 | 294.25 | S20 |
| 28 | MTV | 671.25 | CC46 | 671.25 | CC46 |
| 29 | VH1 | 663.25 | CC45 | 663.25 | CC45 |
| 30 | TLC | 735.25 | CC54 | 735.25 | CC54 |
| 31 | 24 Kitchen | 727.25 | CC53 | - | - |

Does That Look Right?

Gregory Helmstetter
Digital Preservation Final Project
December 15, 2017

Does That Look Right? Playback Software in Digital Preservation

With the ubiquity of digital files persisting throughout every aspect of modern life, from cell phone videos to time-based art to video preservation and archiving, one major question is often asked when playing back a digital file: Does that look right? This is an important question for several reasons. First, this is often the first question conservators, preservationists, or archivists ask when playing back video on any platform, whether it be in digital playback software or on analog machines. Second, as of the writing of this paper, I am frankly still questioning whether or not particular digital videos look the way they were intended to look and still questioning how software plays a role in the presentation of videos. And third, the results of this paper and the informal case study I conducted specifically to test playback software may generate more questions that conservators, et al., should ask when playing back digital files.

This paper, therefore, will survey a number of playback software tools that archives rely on in quality control processes (particularly as they pertain to large-scale digital preservation workflows); it will consider the critical role that software plays when displaying digital content for preservation and quality control processes and how software is integrated into these workflows; and it will outline and address issues one might encounter when playing digital video files on different software.

The Case Study

First it will be beneficial to discuss from where this idea originated.

2.5 Gstreamer

BRNO UNIVERSITY OF TECHNOLOGY (VYSOKÉ UČENÍ TECHNICKÉ V BRNĚ)
FACULTY OF INFORMATION TECHNOLOGY
DEPARTMENT OF COMPUTER GRAPHICS AND MULTIMEDIA

COMPARISON OF MULTIMEDIA FRAMEWORKS (SROVNÁNÍ MULTIMEDIÁLNÍCH FRAMEWORKŮ)

BACHELOR'S THESIS
AUTHOR: ZDENKO BRANDEJS
SUPERVISOR: ING. DAVID BAŘINA
BRNO 2016

Abstract

The aim of this work is to compare the best-known multimedia frameworks by supported platforms, formats and usage, and to become familiar with multimedia frameworks and their libraries for working with multimedia: specifically Video for Windows, DirectShow, Media Foundation, FFmpeg, GStreamer, xine and QuickTime. The work describes in detail how the individual technologies operate, including their architecture. It also builds a web tutorial in which the theoretical knowledge is applied to creating players.

Keywords

Multimedia, framework, Video for Windows, DirectShow, GStreamer, FFmpeg, xine, QuickTime, Media Foundation, comparison, tutorial, player, filter graph, pipeline.

Citation

BRANDEJS, Zdenko. Srovnání multimediálních frameworků [Comparison of Multimedia Frameworks]. Brno, 2016. 20 p. Bachelor's thesis. Brno University of Technology, Faculty of Information Technology. Supervisor David Bařina.

Title of the bachelor's thesis in the language of the thesis

Declaration

I declare that I have written this bachelor's thesis independently under the supervision of Ing. -

Australia ########## 7Flix AU 7Mate AU 7Two

########## Australia ########## 7Flix AU 7Mate AU 7Two AU 9Gem AU 9Go! AU 9Life AU ABC AU ABC Comedy/ABC Kids NSW AU ABC Me AU ABC News AU ACCTV AU Al Jazeera AU Channel 9 AU Food Network AU Fox Sports 506 HD AU Fox Sports News AU Māori Television NZ AU NITV AU Nine Adelaide AU Nine Brisbane AU Nine GO Sydney AU Nine Gem Adelaide AU Nine Gem Brisbane AU Nine Gem Melbourne AU Nine Gem Perth AU Nine Gem Sydney AU Nine Go Adelaide AU Nine Go Brisbane AU Nine Go Melbourne AU Nine Go Perth AU Nine Life Adelaide AU Nine Life Brisbane AU Nine Life Melbourne AU Nine Life Perth AU Nine Life Sydney AU Nine Melbourne AU Nine Perth AU Nine Sydney AU One HD AU Pac 12 AU Parliament TV AU Racing.Com AU Redbull TV AU SBS AU SBS Food AU SBS HD AU SBS Viceland AU Seven AU Sky Extreme AU Sky News Extra 1 AU Sky News Extra 2 AU Sky News Extra 3 AU Sky Racing 1 AU Sky Racing 2 AU Sonlife International AU Te Reo AU Ten AU Ten Sports AU Your Money HD AU ########## Crna Gora MNE ########## RTCG 1 MNE RTCG 2 MNE RTCG Sat MNE TV Vijesti MNE Prva TV CG MNE Nova M MNE Pink M MNE Atlas TV MNE Televizija 777 MNE RTS 1 RS RTS 1 (Backup) RS RTS 2 RS RTS 2 (Backup) RS RTS 3 RS RTS 3 (Backup) RS RTS Svet RS RTS Drama RS RTS Muzika RS RTS Trezor RS RTS Zivot RS N1 TV HD Srb RS N1 TV SD Srb RS Nova TV SD RS PRVA Max RS PRVA Plus RS Prva Kick RS Prva RS PRVA World RS FilmBox HD RS Filmbox Extra RS Filmbox Plus RS Film Klub RS Film Klub Extra RS Zadruga Live RS Happy TV RS Happy TV (Backup) RS Pikaboo RS O2.TV RS O2.TV (Backup) RS Studio B RS Nasha TV RS Mag TV RS RTV Vojvodina -

Roberta De Almeida Corrêa Cultura E

ROBERTA DE ALMEIDA CORRÊA

CULTURE AND STANDARDIZATION: Production and new news techniques in the "latest news" sections of Sic Online and Globo.com (G1).

FACULTY OF LETTERS OF THE UNIVERSITY OF COIMBRA
COIMBRA, PORTUGAL
2010

Master's dissertation in Communication and Journalism, specializing in web journalism, presented to the Faculty of Letters of the University of Coimbra, under the supervision of Dr. Sílvio Correia Santos and Dr. Ana Lúcia Prado.

To my faithful God, who granted me fruitful and unforgettable moments in Portugal; to my parents, who invested in my dream as if it were their own, sparing no effort to make it come true; to my boyfriend, for his support during the endless trips to libraries; and to my Brazilian and Portuguese friends, who throughout my academic journey eased the homesickness I felt.

ACKNOWLEDGMENTS

To my supervisor Sílvio Correia Santos, of the University of Coimbra, for his encouragement, patience and persistence in developing quality work;

To my co-supervisor Ana Lúcia Prado, who agreed to continue with me through another academic stage.

To the Rede Globo team, especially Globo Universidade, which gives splendid support to young scientists, and also to the editor-in-chief of the G1 portal, Márcia Menezes, who with great sacrifice fitted me into her schedule for a technical visit.

To the Sic Online journalist Tânia Mateus, to the directors Ricardo Rosa, Etiano Branco and Sandra Varandas, and especially to the director of information, Mr. Carlos Rodrigues, for understanding the need for my visit to the Sic newsroom.

CLIX SMARTV CELEBRATES TV GLOBO's 1St

Lisbon, October 2nd, 2008

CLIX SMARTV CELEBRATES TV GLOBO'S 1st ANNIVERSARY WITH OPEN SIGNAL TRANSMISSION

To celebrate TV Globo Portugal's 1st anniversary, Clix SmarTV is offering the channel with an open signal to its customers on the weekend of October 3rd, 4th and 5th!

• Clix SMARTV offers the TV Globo Portugal transmission for 3 days with open signal, to celebrate the channel's 1st anniversary
• The Premium Channel is available on Clix SMARTV, at grid position 11, for only € 9.92 per month.

With this offer, the major programs bearing TV GLOBO's seal of quality (such as the Jô Program, Mais Você, Mothern, Caldeirão do Hulk or Ivete Sangalo's Concert) can be watched by Clix SMARTV's customers at no additional cost during this period. Variety and entertainment mark a schedule that provides mini-series and the most striking soap operas, exclusive interviews with the most famous stars, children's programs, documentaries, news, sports, music, television backstage scenes and a lot of humor, as well as several programs that have been successful in Brazil. The schedule is a selection of TV Globo's programming and the best highlights from channels such as GNT, Multishow, GloboNews and Futura. This channel is available on Clix SmarTV as a Premium Channel, at grid position 11, for only € 9.92 per month.

About Clix SmarTV

This is the first digital television (IPTV) service to operate in the country. With more than 100 television channels and more than 800 titles in the Home Video service, in areas covered by Sonaecom's latest-generation network, Clix SmarTV is available in 4 packages that fit each customer's profile, ranging from the most complete options, which give full access to all content, to a free option offered by Clix to all Voice and Internet service customers.