Automatic Detection and Removal of Control-Flow Side Channel Attacks



Recommended publications


The Instruction Set Architecture

Lecture 2: The Instruction Set Architecture. COS / ELE 375 Computer Architecture and Organization, Princeton University, Fall 2015, Prof. David August
Quiz 0
CD: 3 Miles of Music
Pits and Lands
• Transition represents a bit state (1/on/red/female/heads)
• No change represents other state (0/off/white/male/tails)
Interpretation of 0 1 1 1 0 1 0 1
• As Music: 01110101₂ = 117/256 position of speaker
• As Number: 01110101₂ = 1 + 4 + 16 + 32 + 64 = 117₁₀ = 75₁₆ (Get comfortable with base 2, 8, 10, and 16.)
• As Text: 01110101₂ = 117th character in the ASCII codes = “u”
Interpretation – ASCII: Princeton Computer Science Building West Wall
Binary Code and Data (Hello World!)
• Programs consist of Code and Data
• Code and Data are Encoded in Bits
IA-64 Binary (objdump): the same bits CAN ALSO BE INTERPRETED AS MACHINE INSTRUCTION!
Interfaces in Computer Systems
Software: Produce Bits Instructing Machine to Manipulate State or Produce I/O
[Figure: layers Applications, Operating System, Compiler, Firmware, Instruction Set Architecture, Instruction Set Processor, I/O System, Datapath & Control, Digital Design, Circuit Design, Layout]
Hardware: Read and Obey Instruction Bits
Instructions (Sequential Circuit!!)
Computers process information
• Input/Output (I/O)
• State (memory)
• Computation (processor)
• Instructions instruct processor to manipulate state
• Instructions instruct processor to produce I/O in the same way
State – Main Memory
Typical modern machine has this architectural state: Main Memory (AKA: RAM – Random Access Memory) 1. -
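The three interpretations of the byte 01110101 listed in the excerpt above can be checked with a few lines of Python. This is a minimal sketch, not part of the lecture; the variable names are illustrative, and the octal value is added only because the slide mentions base 8.

```python
# Interpret the bit pattern 01110101 in the ways the slide lists.
bits = "01110101"
value = int(bits, 2)                 # as a number, parsed from base 2

as_hex = format(value, "x")          # base 16 digits
as_octal = format(value, "o")        # base 8 digits (the slide only names base 8)
as_text = chr(value)                 # as an ASCII character
as_speaker_position = value / 256    # as music: fraction of full scale

# Place values of the bits that are set, least significant first.
place_values = [1 << i for i, b in enumerate(reversed(bits)) if b == "1"]

print(value, as_hex, as_octal, as_text, as_speaker_position, place_values)
```

The list `place_values` reproduces the sum 1 + 4 + 16 + 32 + 64 from the slide, which is why the same eight bits read as 117 in base 10 and 75 in base 16.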

Simple Computer Example Register Structure

Simple Computer Example Register Structure. Read pp. 27-85
Simple Computer
• To illustrate how a computer operates, let us look at the design of a very simple computer
• Specifications
1. Memory words are 16 bits in length
2. 2^12 = 4K words of memory
3. Memory can be accessed in one clock cycle
4. Single Accumulator for ALU (AC)
5. Registers are fully connected
[Figure: 4K x 16 memory, MAR (12 bits), MDR (16 bits), X, PC (12 bits), ALU, IR (16 bits), AC]
Simple Computer Specifications (continued)
6. Control signals
• INCPC – causes PC to increment on clock edge: [PC] + 1 → PC
• ACin – causes output of ALU to be stored in AC
• GMDR2X – get memory data register to X: [MDR] → X
• Read (Write) – Read (Write) contents of memory location whose address is in MAR
To implement instructions, the control unit must break down each instruction into a series of register transfers (just as a compiler must break down a C program into a series of machine-level instructions)
Simple Computer (continued)
• Typical microinstruction for reading memory: State 1; Register Transfer [[MAR]] → MDR; Control Line(s) Read; Next State 2
• Timing: during State 1, Read is set by the control unit
- Data is read from memory
- MDR changes at the beginning of State 2
- Read is completed in one clock cycle
Simple Computer (continued)
• Study: how to write the microinstructions to implement 3 instructions
• ADD address
• ADD (address)
• JMP address
ADD address: add using direct addressing; encoding 0000 address; [AC] + [address] → AC
ADD (address): add using indirect addressing; encoding 0001 address; [AC] + [[address]] → AC
JMP address: encoding 0010 address; address → PC
Instruction Format for Simple Computer: IR = OP (4 bits), AD (12 bits); AD = address
- Two phases to implement instructions: 1. -
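The register transfers for the three instructions above can be sketched as a small simulator. This is a hedged illustration only: the class and method names are hypothetical, the fetch sequence is an assumption (the excerpt is cut off before it describes the two phases), and wrapping AC to 16 bits on overflow is also assumed.

```python
MEM_WORDS = 1 << 12      # 2^12 = 4K words, as in the specification
WORD_MASK = 0xFFFF       # 16-bit memory words
ADDR_MASK = 0x0FFF       # 12-bit addresses (AD field, MAR, PC)

# 4-bit opcodes from the excerpt.
ADD_DIRECT, ADD_INDIRECT, JMP = 0b0000, 0b0001, 0b0010


class SimpleComputer:
    """Hypothetical model of the accumulator machine described above."""

    def __init__(self):
        self.mem = [0] * MEM_WORDS
        self.pc = self.ac = self.mar = self.mdr = self.ir = 0

    def read(self, address):
        # [[MAR]] -> MDR: one memory read through MAR and MDR.
        self.mar = address & ADDR_MASK
        self.mdr = self.mem[self.mar]
        return self.mdr

    def step(self):
        # Assumed fetch phase: [[PC]] -> IR, then [PC] + 1 -> PC.
        self.ir = self.read(self.pc)
        self.pc = (self.pc + 1) & ADDR_MASK
        op, ad = self.ir >> 12, self.ir & ADDR_MASK
        if op == ADD_DIRECT:
            # [AC] + [address] -> AC
            self.ac = (self.ac + self.read(ad)) & WORD_MASK
        elif op == ADD_INDIRECT:
            # [AC] + [[address]] -> AC: the first read yields a pointer.
            pointer = self.read(ad) & ADDR_MASK
            self.ac = (self.ac + self.read(pointer)) & WORD_MASK
        elif op == JMP:
            # address -> PC
            self.pc = ad
        else:
            raise ValueError(f"opcode {op:04b} is not defined in the excerpt")


cpu = SimpleComputer()
cpu.mem[0x000] = 0x0010   # ADD 0x010
cpu.mem[0x001] = 0x1011   # ADD (0x011)
cpu.mem[0x002] = 0x2000   # JMP 0x000
cpu.mem[0x010] = 5        # direct operand
cpu.mem[0x011] = 0x020    # pointer used by the indirect add
cpu.mem[0x020] = 7        # indirect operand
for _ in range(3):
    cpu.step()
print(cpu.ac, cpu.pc)
```

Indirect addressing costs one extra memory read compared with direct addressing, which is the difference the single and double brackets in the register-transfer notation express.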

PIC Family Microcontroller Lesson 02

Chapter 13 PIC Family Microcontroller, Lesson 02: Architecture of PIC 16F877
2011 Microcontrollers-... 2nd Ed. Raj Kamal, Pearson Education
Internal hardware for the operations in a PIC family MCU
[Figure: block diagram showing internal ID, control, sequencing and reset circuits; 14-bit instruction register; register file select; W register (accumulator); status register (Z, C and DC); ALU; flash program memory; 256-byte data EEPROM; 368-byte internal RAM; 13-bit program counter; 8-level stack (13-bit); peripherals; ports A to E; 8-bit data bus]
ALU Features
• Supports 8-bit operations
• Internal data bus is 8 bits wide
• ALU operations between the Working (W) register (accumulator) and a register (or internal RAM) from the register file
• ALU operations can also be between W and an 8-bit operand from the instruction register (IR)
• The operations also use three flags: Z, C and DC/borrow [zero flag, carry flag and digit (nibble) carry flag]
• The destination of the result of an ALU operation can be either W or a register (f) in the file
• The flags are saved in the status register (STATUS)
• PIC CPU is a one-address machine (one operand specified in the instruction for the ALU)
• Two operands are used in an arithmetic or logic operation
• One is the source operand from a register file/RAM (or an operand from the instruction) and the other is the W register
• Advantage: the ALU directly operates on a register or memory, similar to the 8086 CPU -

A Simple Processor

CS 240, Spring 2020, Foundations of Computer Systems, Ben Wood
https://cs.wellesley.edu/~cs240/s20/
A Simple Processor
1. A simple Instruction Set Architecture
2. A simple microarchitecture (implementation): Data Path and Control Logic
Software: Program, Application; Programming Language; Compiler/Interpreter; Operating System
Instruction Set Architecture
Hardware: Microarchitecture; Digital Logic; Devices (transistors, etc.); Solid-State Physics
Instruction Set Architecture (HW/SW Interface)
[Figure: a computer made of a processor (instruction logic, registers) and memory (encoded instructions, data)]
Instructions
• Names, Encodings
• Effects
• Arguments, Results
Local storage
• Names, Size
• How many
Large storage
• Addresses, Locations
Microarchitecture (Implementation of ISA)
[Figure: Instruction Fetch and Decode, Registers, ALU, Memory]
HW ISA (HW = Hardware or Hogwarts?)
An example made-up instruction set architecture
Word size = 16 bits
• Register size = 16 bits.
• ALU computes on 16-bit values.
Memory is byte-addressable, accesses full words (byte pairs).
16 registers: R0 - R15
• R0 always holds hardcoded 0
• R1 always holds hardcoded 1
• R2 – R15: general purpose
Instructions are 1 word in size.
Separate instruction memory.
Program Counter (PC) register
• holds address of next instruction to execute.
Instruction memory layout (Address: Contents)
• 0: First instruction, low-order byte
• 1: First instruction, high-order byte
• 2: Second instruction, low-order byte
• ...
R: Register File. R0 = 0x0000, R1 = 0x0001; R2 through R15 are shown empty.
M: Data Memory. Word addresses 0x0 – 0x1, 0x2 – 0x3, 0x4 – 0x5, 0x6 – 0x7, 0x8 – 0x9, 0xA – 0xB, 0xC – 0xD, …
IM: Instruction Memory. PC points to the word at addresses 0x0 – 0x1.
Processor 1. -
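The register file and instruction-memory layout of the made-up HW ISA above could be modeled roughly as follows. This is a sketch under stated assumptions: the names `RegisterFile` and `fetch_word` are hypothetical, and silently ignoring writes to R0 and R1 is an assumption, since the excerpt only says that those registers always hold 0 and 1.

```python
WORD_MASK = 0xFFFF  # 16-bit words and registers


class RegisterFile:
    """Hypothetical model of R0 - R15 with R0 and R1 hardcoded."""

    def __init__(self):
        self._regs = [0] * 16
        self._regs[1] = 1          # R1 always holds 1; R0 stays 0

    def read(self, index):
        return self._regs[index]

    def write(self, index, value):
        # Assumption: writes to the hardcoded registers are ignored.
        if index >= 2:
            self._regs[index] = value & WORD_MASK


def fetch_word(instruction_memory, pc):
    """Read one 16-bit instruction from byte-addressable memory.

    The low-order byte sits at the lower address, as in the
    instruction memory layout of the excerpt.
    """
    low = instruction_memory[pc]
    high = instruction_memory[pc + 1]
    return (high << 8) | low


registers = RegisterFile()
registers.write(0, 99)            # ignored: R0 stays 0
registers.write(5, 0x12345)       # truncated to 16 bits
program = bytes([0x34, 0x12, 0x78, 0x56])
first = fetch_word(program, 0)
second = fetch_word(program, 2)   # PC advances by 2 bytes per instruction
print(registers.read(0), hex(registers.read(5)), hex(first), hex(second))
```

Because instructions are one word (two bytes) long and memory is byte-addressable, consecutive instructions sit at even addresses 0, 2, 4, and so on.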

MIPS: Design and Implementation

FACULTEIT INGENIEURSWETENSCHAPPEN
MIPS: Design and Implementation
Computerarchitectuur, 1MA Industriële Wetenschappen Elektronica-ICT, 2014-10-20, Laurent Segers
Contents
Introduction
1 From design to VHDL
1.1 The factorial algorithm
1.2 Building modules
1.2.1 A closer look
1.2.2 VHDL
1.3 Design in VHDL
1.3.1 Program Counter
1.3.2 Instruction Memory
1.3.3 Program Counter Adder
1.4 Bringing it all together - towards the MIPS processor
2 Design validation
2.1 Instruction Memory
2.2 Program Counter
2.3 Program Counter Adder
2.4 The MIPS processor
3 Porting to FPGA
3.1 User Constraints File
4 Additional features
4.1 UART module
4.1.1 Connecting the UART-module to the MIPS processor
4.2 Reprogramming the MIPS processor
A Xilinx ISE software
A.1 Creating a new project
A.2 Adding a new VHDL-module
A.3 Creating a User Constraints File
A.4 Testbenches
A.4.1 Creating testbenches
A.4.2 Running testbenches
Introduction
Nowadays, most programmers write their applications in what we call "higher-level programming languages", such as Java, C#, Delphi, etc. These applications are then compiled into machine code. In order to run this machine code, the underlying hardware needs to be able to "understand" the proposed code.
The aim of this practical course is to give insight into the principles of a working system. -

Overview of the MIPS Architecture: Part I

Overview of the MIPS Architecture: Part I. CS 161: Lecture 0, 1/24/17
Looking Behind the Curtain of Software
• The OS sits between hardware and user-level software, providing:
- Isolation (e.g., to give each process a separate memory region)
- Fairness (e.g., via CPU scheduling)
- Higher-level abstractions for low-level resources like IO devices
• To really understand how software works, you have to understand how the hardware works!
• Despite OS abstractions, low-level hardware behavior is often still visible to user-level applications
- Ex: Disk thrashing
Processors: From the View of a Terrible Programmer
[Figure: source code, compilation, machine instructions (add t0, t1, t2; lw t3, 16(t0); slt t0, t1, 0x6eb21), "A HARDWARE MAGIC OCCURS", ANSWERS; with a letter “m” and a drawing of a bird]
Processors: From the View of a Mediocre Programmer
[Figure: registers, PC, RAM, instruction to execute, ALU, devices]
• Program instructions live in RAM
• PC register points to the memory address of the instruction to fetch and execute next
• Arithmetic logic unit (ALU) performs operations on registers, writes new values to registers or memory, generates outputs which determine whether branches should be taken
• Some instructions cause devices to perform actions
Processors: From the View of a Mediocre Programmer
• Registers versus RAM
- Registers are orders of magnitude faster for ALU to access (0.3ns versus 120ns)
- RAM is orders of magnitude larger (a few dozen 32-bit or 64-bit registers versus GBs of RAM)
Instruction Set Architectures (ISAs) -

Reverse Engineering X86 Processor Microcode

Reverse Engineering x86 Processor Microcode
Philipp Koppe, Benjamin Kollenda, Marc Fyrbiak, Christian Kison, Robert Gawlik, Christof Paar, and Thorsten Holz, Ruhr-University Bochum
https://www.usenix.org/conference/usenixsecurity17/technical-sessions/presentation/koppe
This paper is included in the Proceedings of the 26th USENIX Security Symposium, August 16–18, 2017, Vancouver, BC, Canada. ISBN 978-1-931971-40-9. Open access to the Proceedings of the 26th USENIX Security Symposium is sponsored by USENIX.
Abstract
Microcode is an abstraction layer on top of the physical components of a CPU and present in most general-purpose CPUs today. In addition to facilitating complex and vast instruction sets, it also provides an update mechanism that allows CPUs to be patched in-place without requiring any special hardware. While it is well-known that CPUs are regularly updated with this mechanism, very little is known about its inner workings given that microcode and the update mechanism are proprietary and have not been thoroughly analyzed yet.
… hardware modifications [48]. Dedicated hardware units to counter bugs are imperfect [36, 49] and involve non-negligible hardware costs [8]. The infamous Pentium fdiv bug [62] illustrated a clear economic need for field updates after deployment in order to turn off defective parts and patch erroneous behavior. Note that the implementation of a modern processor involves millions of lines of HDL code [55] and verification of functional correctness for such processors is still an unsolved problem [4, 29].
Since the 1970s, x86 processor manufacturers have -

Introduction to Cpu

microprocessors and microcontrollers - sadri
INTRODUCTION TO CPU
Mohammad Sadegh Sadri, Session 2, Microprocessor Course, Isfahan University of Technology, Sep., Oct., 2010
Agenda
• Review of the first session
• A tour of silicon world!
• Basic definition of CPU
• Von Neumann Architecture
• Example: Basic ARM7 Architecture
• A brief detailed explanation of ARM7 Architecture
• Harvard Architecture
• Example: TMS320C25 DSP
Agenda (2)
• History of CPUs
- 4004
- TMS1000
- 8080
- Z80
- Am2901
- 8051
- PIC16
Von Neumann Architecture
• Same Memory
- Program
- Data
• Single Bus
Sample: ARM7T CPU
Harvard Architecture
• Separate memories for program and data
TMS320C25 DSP
Silicon Market

| Rank 2009 | Rank 2008 | Company | Country of origin | Revenue (million $ USD) | 2009/2008 changes | Market share |
|---|---|---|---|---|---|---|
| 1 | 1 | Intel Corporation | USA | 32 410 | -4.0% | 14.1% |
| 2 | 2 | Samsung Electronics | South Korea | 17 496 | +3.5% | 7.6% |
| 3 | 3 | Toshiba Semiconductors | Japan | 10 319 | -6.9% | 4.5% |
| 4 | 4 | Texas Instruments | USA | 9 617 | -12.6% | 4.2% |
| 5 | 5 | STMicroelectronics | France, Italy | 8 510 | -17.6% | 3.7% |
| 6 | 8 | Qualcomm | USA | 6 409 | -1.1% | 2.8% |
| 7 | 9 | Hynix | South Korea | 6 246 | +3.7% | 2.7% |
| 8 | 12 | AMD | USA | 5 207 | -4.6% | 2.3% |
| 9 | 6 | Renesas Technology | Japan | 5 153 | -26.6% | 2.2% |
| 10 | 7 | Sony | Japan | 4 468 | -35.7% | 1.9% |

Assembly Language: IA-X86

Assembly Language for x86 Processors: X86 Processor Architecture. CS 271 Computer Architecture, Purdue University Fort Wayne
Outline
• Basic IA Computer Organization
• IA-32 Registers
• Instruction Execution Cycle
Basic IA Computer Organization
Since the 1940s, Von Neumann computers have contained three key components:
• Processor, also called the CPU (Central Processing Unit)
• Memory and Storage Devices
• I/O Devices
Interconnected with one or more buses:
• Data Bus
• Address Bus
• Control Bus
IA: Intel Architecture, 32-bit (or i386)
[Figure: Processor (CPU) with registers, ALU, CU and clock, connected to Memory, I/O Device #1 and I/O Device #2 by the data bus, control bus and address bus]
Processor
The processor consists of
• Datapath: ALU, Registers
• Control unit
ALU (Arithmetic logic unit): performs arithmetic and logic operations
Control unit (CU): generates the control signals required to execute instructions
Memory Address Space
Address Space is the set of memory locations (bytes) that are addressable
Next ...
• Basic Computer Organization
• IA-32 Registers
• Instruction Execution Cycle
Registers
Registers are high speed memory inside the CPU
• Eight 32-bit general-purpose registers
• Six 16-bit segment registers
• Processor Status Flags (EFLAGS) and Instruction Pointer (EIP)
32-bit General-Purpose Registers: EAX, EBX, ECX, EDX, EBP, ESP, ESI, EDI
16-bit Segment Registers: CS, SS, DS, ES, FS, GS
EFLAGS, EIP
General-Purpose Registers
Used primarily for arithmetic and data movement
mov eax, 10 ;move constant integer 10 into register eax
Specialized uses of Registers
eax – Accumulator register Automatically -

Introduction to Microcoded Implementation of a CPU Architecture

Introduction to Microcoded Implementation of a CPU Architecture
N.S. Matloff, revised by D. Franklin. January 30, 1999, revised March 2004
1 Microcoding
Over the years, microcoding has changed dramatically. The debate over simple computers vs. complex computers once raged within the architecture community. In the end, the most popular microcoded computers survived for three reasons: market share, technological improvements, and the embracing of the principles used in simple computers. So the two eventually merged into one. To truly understand microcoded computers, one must understand why they were built, what they are, why they survived, and, finally, what they look like today.
1.1 Motivation
Strictly speaking, the term architecture for a CPU refers only to "what the assembly language programmer" sees: the instruction set, addressing modes, and register set. For a given target architecture, i.e. the architecture we wish to build, various implementations are possible. We could have many different internal designs of the CPU chip, all of which produce the same effect, namely the same instruction set, addressing modes, etc. The different internal designs could then all be produced for the different models of that CPU, as in the familiar Intel case. The different models would have different speed capabilities, and probably different prices to the consumer. But the same machine language program, say a .EXE file in the Intel/DOS case, would run on any CPU in the family. When designing an instruction set architecture, there is a tradeoff between software and hardware. If you provide very few instructions, it takes more instructions to perform the same task, but the hardware can be very simple. -

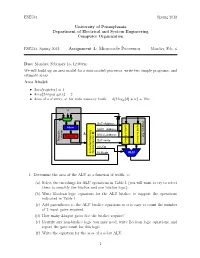

Microcode Processor Monday, Feb

ESE534 Spring 2012, University of Pennsylvania, Department of Electrical and System Engineering, Computer Organization
Assignment 4: Microcode Processor
Monday, Feb. 6. Due: Monday, February 13, 12:00pm
We will build up an area model for a microcoded processor, write two simple programs, and estimate areas.
Area Model:
• Area(register) = 4
• Area(2-input gate) = 2
• Area of a d-entry, w-bit wide memory bank = d(2 log2(d) + w) + 10w +1
[Figure: datapath with labels Register File (memory banks), DST-Address, SRC1-Address, SRC2-Address, DST-write, Adder, Program Counter Register, Instruction Memory (memory bank), ALUOp, ALU, PCMode]
1. Determine the area of the ALU as a function of width, w.
(a) Select the encodings for ALU operations in Table 1 (you will want to try to select them to simplify the bitslice and non-bitslice logic).
(b) Write Boolean logic equations for the ALU bitslice to support the operations indicated in Table 1.
(c) Add parentheses to the ALU bitslice equations so it is easy to count the number of 2-input gates required.
(d) How many 2-input gates does the bitslice require?
(e) Identify any non-bitslice logic you may need, write Boolean logic equations, and report the gate count for this logic.
(f) Write the equation for the area of a w-bit ALU.
2. Determine the total area of the memory banks serving as the "register file" as a function of datapath width, w, and number of data items, r, held in each bank (i.e. the number of registers). -

A Single-Cycle MIPS Processor

Lecture 8
• Assembly language wrap-up
• Single-cycle implementation
Assembly Language Wrap-Up
We’ve introduced MIPS assembly language. Remember these ten facts about it:
1. MIPS is representative of all assembly languages – you should be able to learn any other easily
2. Assembly language is machine language expressed in symbolic form, using decimal and naming
3. R-type instruction op $r1,$r2,$r3 is $r1 = $r2 op $r3
4. I-type instruction op $r1,$r2,imm is $r1 = $r2 op imm
5. I-type is used for arithmetic, branches, load & store, so the roles of the fields change
6. Moving data to/from memory uses imm($rs) for the effective address, ea = imm + $rs, to reference M[ea]
7. Branch and Jump destinations in instructions refer to words (instructions) not bytes
8. Branch offsets are relative to PC+4
9. By convention registers are used in a disciplined way; following it is wise!
10. "Short form" explanation is on the green card, "Long form" is in appendix B
Instruction Format Review
Register-to-register arithmetic instructions are R-type: op (6 bits), rs (5 bits), rt (5 bits), rd (5 bits), shamt (5 bits), func (6 bits)
Load, store, branch, & immediate instructions are I-type: op (6 bits), rs (5 bits), rt (5 bits), address (16 bits)
The jump instruction uses the J-type instruction format: op (6 bits), address (26 bits)
Review
• If three instructions have opcodes 1, 7 and 15 are they all of the same type?
• If we were to add an instruction to MIPS of the form MOD $t1, $t2, $t3, which performs $t1 = $t2 MOD $t3, what would be its opcode?
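The R-type and I-type field layouts reviewed above, together with facts 7 and 8 about branch offsets, can be sketched as bit-packing functions. This is an illustration under stated assumptions: the function names are hypothetical, and the register numbers ($t0 = 8, $t1 = 9, $t2 = 10), the `add` function code 0x20 and the `beq` opcode 4 are the standard MIPS32 values, which the excerpt itself does not list.

```python
def encode_r_type(rs, rt, rd, shamt, funct, op=0):
    """Pack op(6) rs(5) rt(5) rd(5) shamt(5) func(6) into a 32-bit word."""
    return (op << 26) | (rs << 21) | (rt << 16) | (rd << 11) | (shamt << 6) | funct


def encode_i_type(op, rs, rt, imm):
    """Pack op(6) rs(5) rt(5) and a 16-bit immediate/address field."""
    return (op << 26) | (rs << 21) | (rt << 16) | (imm & 0xFFFF)


def branch_offset(branch_pc, target):
    """Offset in words (not bytes), relative to PC + 4."""
    return (target - (branch_pc + 4)) // 4


# Standard MIPS32 values, assumed here because the excerpt omits them.
T0, T1, T2 = 8, 9, 10
ADD_FUNCT = 0x20
BEQ_OP = 0x04

# add $t0, $t1, $t2 means $t0 = $t1 + $t2, so rd = $t0, rs = $t1, rt = $t2.
add_word = encode_r_type(rs=T1, rt=T2, rd=T0, shamt=0, funct=ADD_FUNCT)

# beq $t0, $t1, target, with the branch at 0x1000 and the target at 0x1010.
forward = branch_offset(0x1000, 0x1010)
beq_word = encode_i_type(BEQ_OP, rs=T0, rt=T1, imm=forward)

print(f"{add_word:#010x} {beq_word:#010x} {forward}")
```

Masking the immediate with 0xFFFF lets the same function encode backward branches, whose negative word offsets are stored in 16-bit two's complement.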