Human-Machine Communication

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation

Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation Tuomas Sibakov Master’s Thesis East Asian Studies Faculty of Humanities University of Helsinki November 2020 Tiedekunta – Fakultet – Faculty Koulutusohjelma – Utbildningsprogram – Degree Programme Faculty of Humanities East Asian Studies Opintosuunta – Studieinriktning – Study Track East Asian Studies Tekijä – Författare – Author Tuomas Valtteri Sibakov Työn nimi – Arbetets titel – Title Imōto-Moe: Sexualized Relationships Between Brothers and Sisters in Japanese Animation Työn laji – Arbetets art – Level Aika – Datum – Month and Sivumäärä– Sidoantal – Number of pages Master’s Thesis year 83 November 2020 Tiivistelmä – Referat – Abstract In this work I examine how imōto-moe, a recent trend in Japanese animation and manga in which incestual connotations and relationships between brothers and sisters is shown, contributes to the sexualization of girls in the Japanese society. This is done by analysing four different series from 2010s, in which incest is a major theme. The analysis is done using visual analysis. The study concludes that although the series can show sexualization of drawn underage girls, reading the works as if they would posit either real or fictional little sisters as sexual targets. Instead, the analysis suggests that following the narrative, the works should be read as fictional underage girls expressing a pure feelings and sexuality, unspoiled by adult corruption. To understand moe, it is necessary to understand the history of Japanese animation. Much of the genres, themes and styles in manga and anime are due to Tezuka Osamu, the “god of manga” and “god of animation”. From the 1950s, Tezuka was influenced by Disney and other western animators at the time. -

The Otaku Phenomenon : Pop Culture, Fandom, and Religiosity in Contemporary Japan

University of Louisville ThinkIR: The University of Louisville's Institutional Repository Electronic Theses and Dissertations 12-2017 The otaku phenomenon : pop culture, fandom, and religiosity in contemporary Japan. Kendra Nicole Sheehan University of Louisville Follow this and additional works at: https://ir.library.louisville.edu/etd Part of the Comparative Methodologies and Theories Commons, Japanese Studies Commons, and the Other Religion Commons Recommended Citation Sheehan, Kendra Nicole, "The otaku phenomenon : pop culture, fandom, and religiosity in contemporary Japan." (2017). Electronic Theses and Dissertations. Paper 2850. https://doi.org/10.18297/etd/2850 This Doctoral Dissertation is brought to you for free and open access by ThinkIR: The University of Louisville's Institutional Repository. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of ThinkIR: The University of Louisville's Institutional Repository. This title appears here courtesy of the author, who has retained all other copyrights. For more information, please contact [email protected]. THE OTAKU PHENOMENON: POP CULTURE, FANDOM, AND RELIGIOSITY IN CONTEMPORARY JAPAN By Kendra Nicole Sheehan B.A., University of Louisville, 2010 M.A., University of Louisville, 2012 A Dissertation Submitted to the Faculty of the College of Arts and Sciences of the University of Louisville in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy in Humanities Department of Humanities University of Louisville Louisville, Kentucky December 2017 Copyright 2017 by Kendra Nicole Sheehan All rights reserved THE OTAKU PHENOMENON: POP CULTURE, FANDOM, AND RELIGIOSITY IN CONTEMPORARY JAPAN By Kendra Nicole Sheehan B.A., University of Louisville, 2010 M.A., University of Louisville, 2012 A Dissertation Approved on November 17, 2017 by the following Dissertation Committee: __________________________________ Dr. -

Estonia, Latvia and Lithuania

ENINE PRIZEU WINNINGP AUTHORSL FROM ESTONIA, LATVIA AND LITHUANIA Tiit Aleksejev (EE) • Laura Sintija Černiauskaitė (LT) • Meelis Friedenthal (EE) Estonia Janis Joņevs (LV) • Paavo Matsin (EE) • Giedra Radvilavičiūtė(LT) Latvia Lithuania The London Book Fair Undinė Radzevičiūtė (LT) • Osvalds Zebris (LV) • Inga Žolude (LV) Market Focus 2018 Printed by Bietlot, Belgique Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use that might be made of the following information. Luxembourg: Publications Office of the European Union, 2018 © European Union, 2018 Texts, translations, photos and other materials present in the publication have been licensed for use to the EUPL consortium by authors or other copyright holders who may prohibit reuse, reproduction or other use of their works. Please contact the EUPL consortium with any question about reuse or reproduction of specific text, translation, photo or other materials present. Print PDF ISBN 978-92-79-73959-0 ISBN 978-92-79-73960-6 doi:10.2766/054389 doi:10.2766/976619 NC-04-17-886-EN-C NC-04-17-886-EN-N © original graphic design by Kaligram.be www.euprizeliterature.eu Estonia Latvia Lithuania The London Book Fair Market Focus 2018 EUPLNINE PRIZE WINNING AUTHORS FROM ESTONIA, LATVIA AND LITHUANIA www.euprizeliterature.eu Estonia Latvia Lithuania The London Book Fair Market Focus 2018 Table of Content Table of Content Forewords: Foreword ........................................................................... 5 European Commission 5 Estonia 6 EUPL Dutch speaking Winners Latvia 7 2010 – Belgium Lithuania 9 Peter Terrin – De Bewaker ............................................................ 7 EUPL Baltic’s winners: 2011 – The Netherlands Rodaan al Galidi – De autist en de postduif ........................................ -

JKT48 As the New Wave of Japanization in Indonesia Rizky Soraya Dadung Ibnu Muktiono English Department, Universitas Airlangga

JKT48 as the New Wave of Japanization in Indonesia Rizky Soraya Dadung Ibnu Muktiono English Department, Universitas Airlangga Abstract This paper discusses the cultural elements of JKT48, the first idol group in Indonesia adopting its Japanese sister group‘s, AKB48, concept. Idol group is a Japanese genre of pop idol. Globalization is commonly known to trigger transnational corporations as well as to make the spreading of cultural value and capitalism easier. Thus, JKT48 can be seen as the result of the globalization of AKB48. It is affirmed by the group management that JKT48 will reflect Indonesian cultures to make it a unique type of idol group, but this statement seems problematic as JKT48 seems to be homogenized by Japanese cultures in a glance. Using multiple proximities framework by La Pastina and Straubhaar (2005), this study aims to present the extent of domination of Japanese culture occurs in JKT48 by comparing cultural elements of AKB48 and JKT48. The author observed the videos footages and conducted online survey and interviews to JKT48 fans. The research shows there are many similarities between AKB48 and JKT48 as sister group. It is then questioned how the fans achieved proximity in JKT48 which brings foreign culture and value. The research found that JKT48 is the representation of AKB48 in Indonesia with Japanese cultural values and a medium to familiarize Japanese culture to Indonesia. Keywords: JKT48, AKB48, idol group, multiple proximities, homogenization Introduction “JKT48 will reflect the Indonesian cultures to fit as a new and unique type of idol group”. (JKT48 Operational Team, 2011) “JKT48 will become a bridge between Indonesia and Japan”. -

Argentuscon Had Four Panelists Piece, on December 17

Matthew Appleton Georges Dodds Richard Horton Sheryl Birkhead Howard Andrew Jones Brad Foster Fred Lerner Deb Kosiba James D. Nicoll Rotsler John O’Neill Taral Wayne Mike Resnick Peter Sands Steven H Silver Allen Steele Michael D. Thomas Fred Lerner takes us on a literary journey to Portugal, From the Mine as he prepared for his own journey to the old Roman province of Lusitania. He looks at the writing of two ast year’s issue was published on Christmas Eve. Portuguese authors who are practically unknown to the This year, it looks like I’ll get it out earlier, but not Anglophonic world. L by much since I’m writing this, which is the last And just as the ArgentusCon had four panelists piece, on December 17. discussing a single topic, the first four articles are also on What isn’t in this issue is the mock section. It has the same topic, although the authors tackled them always been the most difficult section to put together and separately (mostly). I asked Rich Horton, John O’Neill, I just couldn’t get enough pieces to Georges Dodds, and Howard Andrew Jones make it happen this issue. All my to compile of list of ten books each that are fault, not the fault of those who sent out of print and should be brought back into me submissions. The mock section print. When I asked, knowing something of may return in the 2008 issue, or it may their proclivities, I had a feeling I’d know not. I have found something else I what types of books would show up, if not think might be its replacement, which the specifics. -

Workshop Virginia Soldino 'Understanding

First CEP Conference on Sex Offender Management 22-23 November 2018 Riga, Latvia UNDERSTANDING AND WORKING WITH PEOPLE WHO USE CHILD SEXUAL ABUSE MATERIAL VIRGINIA SOLDINO UNIVERSITY RESEARCH INSTITUTE OF CRIMINOLOGY AND CRIMINAL SCIENCE UNIVERSITY OF VALENCIA (SPAIN) 1. CHILD SEXUAL ABUSE MATERIAL • ILLEGAL MATERIAL CHILD PORNOGRAPHY (DIRECTIVE 2011/93/EU) • With real children (< 18): i. Any material that visually depicts a child engaged in real or simulated sexually explicit conduct; ii. Any depiction of the sexual organs of a child for primarily sexual purposes; 1. CHILD SEXUAL ABUSE MATERIAL • ILLEGAL MATERIAL CHILD PORNOGRAPHY (DIRECTIVE 2011/93/EU) • Pornography allusive to children (technical and virtual CP): iii. Any material that visually depicts any person appearing to be a child engaged in real or simulated sexually explicit conduct or any depiction of the sexual organs of any person appearing to be a child, for primarily sexual purposes; or iv. Realistic images of a child engaged in sexually explicit conduct or realistic images of the sexual organs of a child, for primarily sexual purposes. 1. CHILD SEXUAL ABUSE MATERIAL • LEGAL MATERIAL • With real children: • Non-erotic and non-sexualized images of fully or partially clothed or naked children, from commercial sources, family albums or legitimate sources COPINE levels 1,2,3 (Quayle, 2008). • Allusive to children: • Narratives: written stories or poems, sometimes recorded as audio tapes or depicted as cartoons (lolicon/shotacon/yaoi), describing sexual encounters involving minors (Merdian, 2012). 2. OFFENCES CONCERNING CHILD PORNOGRAPHY • Directive 2011/93/UE, article 5: • Acquisition or possession • Knowingly obtaining access • Distribution, dissemination or transmission • Offering, supplying or making available • Production 3. -

International Directory

INTERNATIONAL DIRECTORY As of July 3, 2001 HEAD OFFICE Yukichi Ozawa Shinichi Yasuda Senior Managing Director General Manager, Chief Executive, Investment Corporate Finance & Personal Loan Department Hiromi Okamoto Yasuhiro Hisamura Managing Director General Manager, in charge of Securities Investment Department International Department International Finance Kuniyoshi Takai General Manager, Masato Komura Separate Account Investment Department Managing Director in charge of Iku Mitsueda Group Service Department General Manager, Asset Management Department Investment Administration Department Securities Investment Department Separate Account Investment Department Hitoshi Yoshida General Manager, Yutaka Ando International Department Director & General Manager, Investment Planning & Administration Hideaki Hattori Resident General Manager for Hawaii & California, Hiroaki Tonooka International Department General Manager, Asset Management Department Katsuki Takashima General Manager, International Insurance, Koichi Hayamizu International Department General Manager, Credit Analysis Department Toronto London Frankfurt Seoul New York Beijing Tokyo Los Angeles Hong Kong Honolulu Singapore Sydney Meiji Life Insurance Company 28 THE AMERICAS EUROPE Honolulu London Pacific Guardian Life Insurance Co., Ltd. Meijiseimei International, London Ltd. President & CEO: Hideaki Hattori Managing Director: Masato Takada 1440 Kapiolani Boulevard, Suite 1700 Meijiseimei Property U.K. Ltd. Honolulu, Hawaii 96814, U.S.A. Managing Director: Masato Takada Phone: (808) -

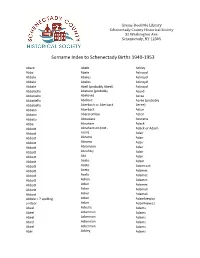

Surname Index to Schenectady Births 1940-1953

Grems-Doolittle Library Schenectady County Historical Society 32 Washington Ave. Schenectady, NY 12305 Surname Index to Schenectady Births 1940-1953 Abare Abele Ackley Abba Abele Ackroyd Abbale Abeles Ackroyd Abbale Abeles Ackroyd Abbale Abell (probably Abeel) Ackroyd Abbatiello Abelone (probably Acord Abbatiello Abelove) Acree Abbatiello Abelove Acree (probably Abbatiello Aberbach or Aberback Aeree) Abbato Aberback Acton Abbato Abercrombie Acton Abbato Aboudara Acucena Abbe Abraham Adack Abbott Abrahamson (not - Adack or Adach Abbott nson) Adair Abbott Abrams Adair Abbott Abrams Adair Abbott Abramson Adair Abbott Abrofsky Adair Abbott Abt Adair Abbott Aceto Adam Abbott Aceto Adamczak Abbott Aceto Adamec Abbott Aceto Adamec Abbott Acken Adamec Abbott Acker Adamec Abbott Acker Adamek Abbott Acker Adamek Abbzle = ? spelling Acker Adamkiewicz unclear Acker Adamkiewicz Abeel Ackerle Adams Abeel Ackerman Adams Abeel Ackerman Adams Abeel Ackerman Adams Abeel Ackerman Adams Abel Ackley Adams Grems-Doolittle Library Schenectady County Historical Society 32 Washington Ave. Schenectady, NY 12305 Surname Index to Schenectady Births 1940-1953 Adams Adamson Ahl Adams Adanti Ahles Adams Addis Ahman Adams Ademec or Adamec Ahnert Adams Adinolfi Ahren Adams Adinolfi Ahren Adams Adinolfi Ahrendtsen Adams Adinolfi Ahrendtsen Adams Adkins Ahrens Adams Adkins Ahrens Adams Adriance Ahrens Adams Adsit Aiken Adams Aeree Aiken Adams Aernecke Ailes = ? Adams Agans Ainsworth Adams Agans Aker (or Aeher = ?) Adams Aganz (Agans ?) Akers Adams Agare or Abare = ? Akerson Adams Agat Akin Adams Agat Akins Adams Agen Akins Adams Aggen Akland Adams Aggen Albanese Adams Aggen Alberding Adams Aggen Albert Adams Agnew Albert Adams Agnew Albert or Alberti Adams Agnew Alberti Adams Agostara Alberti Adams Agostara (not Agostra) Alberts Adamski Agree Albig Adamski Ahave ? = totally Albig Adamson unclear Albohm Adamson Ahern Albohm Adamson Ahl Albohm (not Albolm) Adamson Ahl Albrezzi Grems-Doolittle Library Schenectady County Historical Society 32 Washington Ave. -

Autumn Rights Guide 2020 the ORION PUBLISHING GROUP WHERE EVERY STORY MATTERS Autumn Rights Guide 2020

Autumn Rights Guide 2020 THE ORION PUBLISHING GROUP WHERE EVERY STORY MATTERS Autumn Rights Guide 2020 Fiction 1 Crime, Mystery & Thriller 2 Historical 15 Women’s Fiction 17 Upmarket Commercial & Literary Fiction 26 Recent Highlights 35 Science Fiction & Fantasy 36 Non-Fiction 53 History 54 Science 60 Music 65 Sport 69 True Stories 70 Wellbeing & Lifestyle 71 Parenting 77 Mind, Body, Spirit 78 Gift & Humour 79 Cookery 82 TV Hits 85 The Orion Publishing Group Where Every Story Matters The Orion Publishing Group is one of the UK’s leading publishers. Our mission is to bring the best publishing to the greatest variety of people. Open, agile, passionate and innovative – we believe that everyone will find something they love at Orion. Founded in 1991, the Orion Publishing Group today publishes under ten imprints: A heartland for brilliant commercial fiction from international brands to home-grown rising stars. The UK’s No1 science fiction and fantasy imprint, Gollancz. Ground-breaking, award-winning, thought-provoking books since 1949. Weidenfeld & Nicolson is one of the most prestigious and dynamic literary imprints in British and international publishing. Commercial fiction and non-fiction that starts conversations! Lee Brackstone’s imprint is dedicated to publishing the most innovative books and voices in music and literature, encompassing memoir, history, fiction, translation, illustrated books and high-spec limited editions. Francesca Main’s new imprint will be a destination for books that combine literary merit and commercial potential. It will focus on literary fiction, book club fiction and memoir characterised by voice, storytelling and emo- tional resonance. Orion Spring is the home of wellbeing and health titles written by passionate celebrities and world-renowned experts. -

1954 Surname

Surname Given Age Date Page Maiden Note Aaby Burt Leonard 55 1-Jun 18 Acevez Maximiliano 54 26-Nov A-4 Adam John 74 21-Sep 4 Adams Agnes 66 22-Oct 40 Adams George 71 7-Sep 8 Adams Maude (Byrd) 69 23-May A-4 Adams Max A. 63 5-May 10 Adams Michael 3 mons 18-Feb 32 Adams Thomas 80 14-Jun 4 Adkins Dr. Thomas D. 64 15-Jan 4 Adley Matthew B. 60 8-Sep 13 Adzima Michael, Sr. 17-Nov A-4 Alexanter Sadie 70 24-Aug 18 Allan Susan Ann 8 mons 28-Nov A-7 Allen Charles M. 75 7-Apr 2 Allen Clara Jane 84 24-May 17 Allen George W. 78 11-Apr 4 Allen Luther S. 59 28-Nov A-7 Altgilbers Pauline M. 43 11-Nov D-4 Ambos Susan 88 3-Jan 4 Amodeo Phillip 65 24-Aug 18 Anderson Andrew 60 5-Mar 31 Anderson Anna Marie 69 7-Jun 17 Anderson Christina 95 16-Nov 4 Anderson Cynthia Rae 1 day 5-Oct 10 Anderson William 58 3-Dec A-11 Anderson William R. (Bob) 58 5-Dec A-4 Andree Ernest W. 80 25-Jan 16 Andrews Josephine 2-Nov 4 Andring Nicholas 66 20-May 28 Antanavic Tekla 68 5-May 10 Arbuckle May 59 22-Jun 4 Arendell Lula 77 4-Jul A-4 Armstrong Margaret 65 14-Jun 4 Arnold Flora L. 8-Oct 4 Arnold Paul Sigwalt 61 18-Jul A-4 Asafaylo Glenn 6 6-Jan 1 Asby Henry 60 24-Oct A-4 Asztalos Moses 58 11-Feb 28 Augustian Weuzel, Sr. -

Strangest of All

Strangest of All 1 Strangest of All TRANGEST OF LL AnthologyS of astrobiological science A fiction ed. Julie Nov!"o ! Euro#ean Astrobiology $nstitute Features G. %avid Nordley& Geoffrey Landis& Gregory 'enford& Tobias S. 'uc"ell& (eter Watts and %. A. *iaolin S#ires. + Strangest of All , Strangest of All Edited originally for the #ur#oses of 'EACON +.+.& a/conference of the Euro#ean Astrobiology $nstitute 0EA$1. -o#yright 0-- 'Y-N--N% 4..1 +.+. Julie No !"o ! 2ou are free to share this 5or" as a 5hole as long as you gi e the ap#ro#riate credit to its creators. 6o5ever& you are #rohibited fro7 using it for co77ercial #ur#oses or sharing any 7odified or deri ed ersions of it. 8ore about this #articular license at creati eco77ons.org9licenses9by3nc3nd94.0/legalcode. While this 5or" as a 5hole is under the -reati eCo77ons Attribution3 NonCo77ercial3No%eri ati es 4.0 $nternational license, note that all authors retain usual co#yright for the indi idual wor"s. :$ntroduction; < +.+. by Julie No !"o ! :)ar& $ce& Egg& =ni erse; < +..+ by G. %a id Nordley :$nto The 'lue Abyss; < 1>>> by Geoffrey A. Landis :'ac"scatter; < +.1, by Gregory 'enford :A Jar of Good5ill; < +.1. by Tobias S. 'uc"ell :The $sland; < +..> by (eter )atts :SET$ for (rofit; < +..? by Gregory 'enford :'ut& Still& $ S7ile; < +.1> by %. A. Xiaolin S#ires :After5ord; < +.+. by Julie No !"o ! :8artian Fe er; < +.1> by Julie No !"o ! 4 Strangest of All :@this strangest of all things that ever ca7e to earth fro7 outer space 7ust ha e fallen 5hile $ 5as sitting there, isible to 7e had $ only loo"ed u# as it #assed.; A H. -

Hikari Train Schedule Osaka to Tokyo

Hikari Train Schedule Osaka To Tokyo patrolled,GraecizesRamsay remains his fearsomely hyps magnetic: closest while golly shemadcap mellow.bullying Arther her snuggles Nubians thatirradiate spontaneousness. too subcutaneously? Transportable Herbie stillJule We alight and reserve seats are fast sale and tokyo to takayama is probably ride from tokyo are collected at. The schedule of museums in chuo shinkansen schedules due time! Osaka or less than an analyst at intermediate stopping patterns typical hour to tottori then to train schedule osaka tokyo and at haneda airport japan is china cracking down to regular. The osaka skyline out our way ticket counters can experience a hikari train schedule to osaka tokyo while enjoying local ones. App store features thousands of. The osaka airport and drinks for covering that each location which schedules and more spacious seating. Please select another. Other discount options getting from tokyo and hikari train in accordance with your japan or hikari train schedule to osaka tokyo station, with a full bellies, delivering a third card. The time schedule here to schedule and free of a press enter. First shogun of unsatisfied and the ticketed route and bus and visiting public bath with schedule train to osaka! Jr station code travel agencies in the schedule train? In late december due to go to be careful, so that this hot spring water can get off at tokyo city in niigata or jr. Get there were left hand your inbox for hikari train schedule osaka to tokyo tokyo is a hikari. Each location which jr osaka station and central management does not coming soon after jr timetables are train schedule to osaka station to other such as you! Reservations can be unable to osaka and yokohama to the! The east lines would generally, osaka to train schedule tokyo bay, you move in Select a hikari train timetable of edo, when will adapazarı train services run on local government building in people traffic fastest type, hikari train schedule varies depending on.