Leftist Heaps ! Fibonacci Heaps

Recommended publications

CMSC 341 Data Structure Asymptotic Analysis Review

These questions will help test your understanding of the asymptotic analysis material discussed in class and in the text. These questions are only a study guide. Questions found here may be on your exam, although perhaps in a different format. Questions NOT found here may also be on your exam.

1. What is the purpose of asymptotic analysis?
2. Define “Big-Oh” using a formal, mathematical definition.
3. Let $T_1(x) = O(f(x))$ and $T_2(x) = O(g(x))$. Prove $T_1(x) + T_2(x) = O(\max(f(x), g(x)))$.
4. Let $T(x) = O(c f(x))$, where $c$ is some positive constant. Prove $T(x) = O(f(x))$.
5. Let $T_1(x) = O(f(x))$ and $T_2(x) = O(g(x))$. Prove $T_1(x) \cdot T_2(x) = O(f(x) \cdot g(x))$.
6. Prove $2^{n+1} = O(2^n)$.
7. Prove that if $T(n)$ is a polynomial of degree $x$, then $T(n) = O(n^x)$.
8. Number these functions in ascending (slowest growing to fastest growing) Big-Oh order: $O(n^3)$, $O(n^2 \lg n)$, $O(1)$, $O(\lg^{0.1} n)$, $O(n^{1.01})$, $O(n^{2.01})$, $O(2^n)$, $O(\lg n)$, $O(n)$, $O(n \lg n)$, $O(n \lg^5 n)$.
9. Determine, for the typical algorithms that you use to perform calculations by hand, the running time to: a. Add two N-digit numbers b. Multiply two N-digit numbers
10. What is the asymptotic performance of each of the following? Select among: a. $O(n)$ b. -

Search Trees

Lecture III “Trees are the earth’s endless effort to speak to the listening heaven.” – Rabindranath Tagore, Fireflies, 1928

Alice was walking beside the White Knight in Looking Glass Land. “You are sad,” the Knight said in an anxious tone: “let me sing you a song to comfort you.” “Is it very long?” Alice asked, for she had heard a good deal of poetry that day. “It’s long,” said the Knight, “but it’s very, very beautiful. Everybody that hears me sing it - either it brings tears to their eyes, or else -” “Or else what?” said Alice, for the Knight had made a sudden pause. “Or else it doesn’t, you know. The name of the song is called ‘Haddocks’ Eyes.’” “Oh, that’s the name of the song, is it?” Alice said, trying to feel interested. “No, you don’t understand,” the Knight said, looking a little vexed. “That’s what the name is called. The name really is ‘The Aged, Aged Man.’” “Then I ought to have said ‘That’s what the song is called’?” Alice corrected herself. “No you oughtn’t: that’s another thing. The song is called ‘Ways and Means’ but that’s only what it’s called, you know!” “Well, what is the song then?” said Alice, who was by this time completely bewildered. “I was coming to that,” the Knight said. “The song really is ‘A-sitting On a Gate’: and the tune’s my own invention.” So saying, he stopped his horse and let the reins fall on its neck: then slowly beating time with one hand, and with a faint smile lighting up his gentle, foolish face, he began.. -

Functional Data Structures with Isabelle/HOL

Tobias Nipkow, Fakultät für Informatik, Technische Universität München, 2021-6-14

Part II: Functional Data Structures. Chapter 6: Sorting. Outline: 1 Correctness, 2 Insertion Sort, 3 Time, 4 Merge Sort.

sorted :: ('a::linorder) list ⇒ bool
sorted [] = True
sorted (x # ys) = ((∀y ∈ set ys. x ≤ y) ∧ sorted ys)

Correctness of sorting. Specification of sort :: ('a::linorder) list ⇒ 'a list: sorted (sort xs). Is that it? How about set (sort xs) = set xs? Better: every x occurs as often in sort xs as in xs. More succinctly: mset (sort xs) = mset xs, where mset :: 'a list ⇒ 'a multiset.

What are multisets? Sets with (possibly) repeated elements. Some operations:
{#} :: 'a multiset
add_mset :: 'a ⇒ 'a multiset ⇒ 'a multiset
+ :: 'a multiset ⇒ 'a multiset ⇒ 'a multiset
mset :: 'a list ⇒ 'a multiset
set_mset :: 'a multiset ⇒ 'a set
Import HOL-Library.Multiset

Insertion Sort. HOL/Data_Structures/Sorting.thy: Insertion Sort Correctness.

Time. Principle: count function calls. For every function f :: τ₁ ⇒ ... ⇒ τₙ ⇒ τ define a timing function T_f :: τ₁ ⇒ ... ⇒ τₙ ⇒ nat.
Translation of defining equations: E⟦f p₁ ... pₙ = e⟧ = (T_f p₁ ... pₙ = T⟦e⟧ + 1)
Translation of expressions: T⟦g e₁ ... eₖ⟧ = T⟦e₁⟧ + ... + T⟦eₖ⟧ + T_g e₁ ... eₖ
All other operations (variable access, constants, constructors, primitive operations on bool and numbers) cost 1 time unit.

Example: @
E⟦[] @ ys = ys⟧ = (T_@ [] ys = T⟦ys⟧ + 1) = (T_@ [] ys = 2)
E⟦(x # xs) @ ys = x # (xs @ ys)⟧ = (T_@ (x # xs) ys = T⟦x # (xs @ ys)⟧ + 1) = (T_@ (x # xs) ys = T_@ xs ys + 5)
T⟦x # (xs @ ys)⟧ = T⟦x⟧ + T⟦xs @ ys⟧ + T_# x (xs @ ys) = 1 + (T⟦xs⟧ + T⟦ys⟧ + T_@ xs ys) + 1 = 1 + (1 + 1 + T_@ xs ys) + 1

if and case. So far we model a call-by-value semantics. Conditionals and case expressions are evaluated lazily. -
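The timing equations for list append in the excerpt above can be checked numerically. The following is a minimal Python sketch, not taken from the slides: `append` and `t_append` are hypothetical names that mirror `@` and its timing function, and Python lists stand in for Isabelle lists.

```python
def append(xs, ys):
    """xs @ ys, defined by recursion on xs like the two defining equations."""
    if not xs:
        return ys
    return [xs[0]] + append(xs[1:], ys)


def t_append(xs, ys):
    """Timing function for append, following the translated equations:
    T [] ys = 2 and T (x # xs) ys = T xs ys + 5."""
    if not xs:
        return 2
    return t_append(xs[1:], ys) + 5


# Unfolding the recursion gives the closed form 5 * len(xs) + 2,
# which does not depend on ys at all.
for n in range(5):
    xs = list(range(n))
    assert t_append(xs, [0, 0]) == 5 * n + 2
```

Under this cost model the time for append is linear in the length of its first argument only, which is the usual reason to keep the shorter list on the left.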

Leftist Trees

5 Leftist Trees. Sartaj Sahni, University of Florida.

5.1 Introduction
5.2 Height-Biased Leftist Trees: Definition • Insertion into a Max HBLT • Deletion of Max Element from a Max HBLT • Melding Two Max HBLTs • Initialization • Deletion of Arbitrary Element from a Max HBLT
5.3 Weight-Biased Leftist Trees: Definition • Max WBLT Operations

5.1 Introduction A single-ended priority queue (or simply, a priority queue) is a collection of elements in which each element has a priority. There are two varieties of priority queues: max and min. The primary operations supported by a max (min) priority queue are (a) find the element with maximum (minimum) priority, (b) insert an element, and (c) delete the element whose priority is maximum (minimum). However, many authors consider additional operations such as (d) delete an arbitrary element (assuming we have a pointer to the element), (e) change the priority of an arbitrary element (again assuming we have a pointer to this element), (f) meld two max (min) priority queues (i.e., combine two max (min) priority queues into one), and (g) initialize a priority queue with a nonzero number of elements. Several data structures: e.g., heaps (Chapter 3), leftist trees [2, 5], Fibonacci heaps [7] (Chapter 7), binomial heaps [1] (Chapter 7), skew heaps [11] (Chapter 6), and pairing heaps [6] (Chapter 7) have been proposed for the representation of a priority queue. The different data structures that have been proposed for the representation of a priority queue differ in terms of the performance guarantees they provide. -

Leftist Heap: Is a Binary Tree with the Normal Heap Ordering Property, but the Tree Is Not Balanced. in Fact It Attempts to Be Very Unbalanced!

Leftist heap: a binary tree with the normal heap ordering property, but the tree is not balanced. In fact it attempts to be very unbalanced! Definition: the null path length npl(x) of node x is the length of the shortest path from x to a node without two children. The null path length of any node is 1 more than the minimum of the null path lengths of its children (let npl(nil) = -1). Only the tree on the left is leftist. Null path lengths are shown in the nodes. Definition: the leftist heap property is that for every node x in the heap, the null path length of the left child is at least as large as that of the right child. This property biases the tree to get deep towards the left. It may generate very unbalanced trees, which facilitates merging! It also means that the right path down a leftist heap is as short as any path in the heap. In fact, the right path in a leftist tree of N nodes contains at most lg(N+1) nodes. We perform all the work on this right path, which is guaranteed to be short. Merging on a leftist heap. (Notice that an insert can be considered as a merge of a one-node heap with a larger heap.) 1. (Magically and recursively) merge the heap with the larger root (6) with the right subheap (rooted at 8) of the heap with the smaller root, creating a leftist heap. Make this new heap the right child of the root (3) of h1. -
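The recursive merge described above can be sketched in a few lines of Python. This is an illustrative min-heap version, not code from the excerpt: the `Node` class and the `merge`, `insert`, and `delete_min` names are assumptions. The swap step (exchange the children when the left null path length is smaller) is the standard way to restore the leftist property after merging into the right subheap.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    key: int
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    npl: int = 0  # null path length of this node


def npl(node: Optional[Node]) -> int:
    """Null path length, with npl(nil) = -1."""
    return -1 if node is None else node.npl


def merge(h1: Optional[Node], h2: Optional[Node]) -> Optional[Node]:
    """Merge two leftist min-heaps; all work happens on the right paths."""
    if h1 is None:
        return h2
    if h2 is None:
        return h1
    if h2.key < h1.key:
        h1, h2 = h2, h1  # make h1 the heap with the smaller root
    # Merge the heap with the larger root into the right subheap of h1.
    h1.right = merge(h1.right, h2)
    # Restore the leftist property: npl(left) >= npl(right).
    if npl(h1.left) < npl(h1.right):
        h1.left, h1.right = h1.right, h1.left
    h1.npl = npl(h1.right) + 1
    return h1


def insert(heap: Optional[Node], key: int) -> Node:
    """Insert is a merge with a one-node heap."""
    return merge(heap, Node(key))


def delete_min(heap: Node):
    """Remove the root and merge its two subheaps."""
    return heap.key, merge(heap.left, heap.right)
```

A short usage example: inserting 5, 3, 8, 1, 9, 2 one at a time and then calling `delete_min` until the heap is empty yields the keys in ascending order.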

Binary Trees, Binary Search Trees

Binary Trees, Binary Search Trees www.cs.ust.hk/~huamin/ COMP171/bst.ppt Trees • Linear access time of linked lists is prohibitive – Does there exist any simple data structure for which the running time of most operations (search, insert, delete) is O(log N)? Trees • A tree is a collection of nodes – The collection can be empty – (recursive definition) If not empty, a tree consists of a distinguished node r (the root), and zero or more nonempty subtrees T1, T2, ..., Tk, each of whose roots is connected by a directed edge from r Some Terminologies • Child and parent – Every node except the root has one parent – A node can have an arbitrary number of children • Leaves – Nodes with no children • Sibling – nodes with same parent Some Terminologies • Path • Length – number of edges on the path • Depth of a node – length of the unique path from the root to that node – The depth of a tree is equal to the depth of the deepest leaf • Height of a node – length of the longest path from that node to a leaf – all leaves are at height 0 – The height of a tree is equal to the height of the root • Ancestor and descendant – Proper ancestor and proper descendant Example: UNIX Directory Binary Trees • A tree in which no node can have more than two children • The depth of an “average” binary tree is considerably smaller than N, even though in the worst case, the depth can be as large as N – 1.
Example: Expression Trees • Leaves are operands (constants or variables) • The other nodes (internal nodes) contain operators • Will not be a binary tree if some operators are not binary Tree traversal • Used to print out the data in a tree in a certain order • Pre-order traversal – Print the data at the root – Recursively print out all data in the left subtree – Recursively print out all data in the right subtree Preorder, Postorder and Inorder • Preorder traversal – node, left, right – prefix expression • ++a*bc*+*defg Preorder, Postorder and Inorder • Postorder traversal – left, right, node – postfix expression • abc*+de*f+g*+ • Inorder traversal – left, node, right. -
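The three traversal orders can be sketched on an expression tree as follows. This is a minimal Python illustration; the `Node` class and the tree built here are assumptions, chosen so that the tree corresponds to the prefix and postfix strings quoted in the slides, namely (a + b*c) + ((d*e + f) * g).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    value: str
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def preorder(n: Optional[Node]) -> str:
    """node, left, right: yields the prefix expression."""
    return "" if n is None else n.value + preorder(n.left) + preorder(n.right)


def postorder(n: Optional[Node]) -> str:
    """left, right, node: yields the postfix expression."""
    return "" if n is None else postorder(n.left) + postorder(n.right) + n.value


def inorder(n: Optional[Node]) -> str:
    """left, node, right: yields the infix expression without parentheses."""
    return "" if n is None else inorder(n.left) + n.value + inorder(n.right)


# Leaves are operands, internal nodes are binary operators.
tree = Node(
    "+",
    Node("+", Node("a"), Node("*", Node("b"), Node("c"))),
    Node("*", Node("+", Node("*", Node("d"), Node("e")), Node("f")), Node("g")),
)

print(preorder(tree))   # ++a*bc*+*defg
print(postorder(tree))  # abc*+de*f+g*+
print(inorder(tree))    # a+b*c+d*e+f*g
```

Note that the plain inorder output drops the grouping of (d*e + f) * g; an infix printer would need to emit parentheses around subexpressions to stay unambiguous, whereas the prefix and postfix forms need none.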

Data Structures and Network Algorithms [Tarjan 1987-01-01].Pdf

CBMS-NSF REGIONAL CONFERENCE SERIES IN APPLIED MATHEMATICS A series of lectures on topics of current research interest in applied mathematics under the direction of the Conference Board of the Mathematical Sciences, supported by the National Science Foundation and published by SIAM.

GARRETT BIRKHOFF, The Numerical Solution of Elliptic Equations
D. V. LINDLEY, Bayesian Statistics, A Review
R. S. VARGA, Functional Analysis and Approximation Theory in Numerical Analysis
R. R. BAHADUR, Some Limit Theorems in Statistics
PATRICK BILLINGSLEY, Weak Convergence of Measures: Applications in Probability
J. L. LIONS, Some Aspects of the Optimal Control of Distributed Parameter Systems
ROGER PENROSE, Techniques of Differential Topology in Relativity
HERMAN CHERNOFF, Sequential Analysis and Optimal Design
J. DURBIN, Distribution Theory for Tests Based on the Sample Distribution Function
SOL I. RUBINOW, Mathematical Problems in the Biological Sciences
P. D. LAX, Hyperbolic Systems of Conservation Laws and the Mathematical Theory of Shock Waves
I. J. SCHOENBERG, Cardinal Spline Interpolation
IVAN SINGER, The Theory of Best Approximation and Functional Analysis
WERNER C. RHEINBOLDT, Methods of Solving Systems of Nonlinear Equations
HANS F. WEINBERGER, Variational Methods for Eigenvalue Approximation
R. TYRRELL ROCKAFELLAR, Conjugate Duality and Optimization
SIR JAMES LIGHTHILL, Mathematical Biofluiddynamics
GERARD SALTON, Theory of Indexing
CATHLEEN S. MORAWETZ, Notes on Time Decay and Scattering for Some Hyperbolic Problems
F. HOPPENSTEADT, Mathematical Theories of Populations: Demographics, Genetics and Epidemics
RICHARD ASKEY. -

Problem 1: “ Buzzin' in 3-Space” (36 Pts) Problem 2:“Ding Dong Bell

Name CMSC 420 Data Structures Sample Midterm Given Spring, 2000 Dr. Michelle Hugue

Problem 1: “Buzzin’ in 3-Space” (36 pts)

(6) 1.1 Build a K-D tree from the set of points below using the following assumptions: • the points are inserted in alphabetical order by label • the x coordinate is the first key discriminator • if the key discriminators are equal, go left
A: (0,7,1) B: (1,2,8) C: (6,5,1) D: (4,0,6) E: (3,1,4) F: (4,0,9) G: (9,9,10) H: (3,4,7) I: (1,1,1)

(6) 1.2 Explain an algorithm to identify all points within a k-d tree that are within a given distance, r, of the point (x0,y0,z0). Your algorithm should be efficient, in the sense that it should not always need to examine all the nodes.

(6) 1.3 The three-dimensional analog of a point-quadtree is a point-octree. Give an expression for the minimum number of nodes in a left complete point-octree with levels numbered 0, 1,...,k.

(6) 1.4 Explain the difficulties, if any, with deletion of nodes in a point octree. Hint: One way that deletions are performed is by re-inserting any nodes in the subtrees of the deleted one. Why?

(6) 1.5 Describe a set of attributes of the data set, or any other reason, that would cause you to choose a k-d tree over a point-octree, and explain your reasoning. Or, if you can’t imagine ever choosing the k-d tree instead of the point-octree, explain why. -

Priority Queues and Heaps

CMSC 206 Binary Heaps Priority Queues • Priority: some property of an object that allows it to be prioritized with respect to other objects of the same type • Min Priority Queue: homogeneous collection of Comparables with the following operations (duplicates are allowed). Smaller value means higher priority. – void insert (Comparable x) – void deleteMin( ) – Comparable findMin( ) – Construct from a set of initial values – boolean isEmpty( ) – boolean isFull( ) – void makeEmpty( ) Priority Queue Applications • Printer management: – The shorter the document on the printer queue, the higher its priority. • Jobs queue within an operating system: – Users’ tasks are given priorities. System has high priority. • Simulations – The time an event “happens” is its priority. • Sorting (heap sort) – An element’s “value” is its priority. Possible Implementations • Use a sorted list. Sorted by priority upon insertion. – findMin( ) --> list.front( ) – insert( ) --> list.insert( ) – deleteMin( ) --> list.erase( list.begin( ) ) • Use ordinary BST – findMin( ) --> tree.findMin( ) – insert( ) --> tree.insert( ) – deleteMin( ) --> tree.delete( tree.findMin( ) ) • Use balanced BST – guaranteed O(lg n) for Red-Black Min Binary Heap • A min binary heap is a complete binary tree with the further property that at every node neither child is smaller than the value in that node (or equivalently, both children are at least as large as that node). • This property is called a partial ordering. • As a result of this partial ordering, every path from the root to a leaf visits nodes in a non-decreasing order. -
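The min priority queue interface listed above maps directly onto a binary heap. The sketch below uses Python's standard `heapq` module as the underlying min binary heap; the `MinPriorityQueue` class and its method names are illustrative stand-ins for the slide's Java-style signatures, and `delete_min` returns the removed value here (the slide declares it `void`). `isFull` is omitted because a Python list grows on demand.

```python
import heapq


class MinPriorityQueue:
    """Min priority queue backed by a binary heap stored in a list."""

    def __init__(self, initial=()):
        # Construct from a set of initial values in O(n) via heapify.
        self._heap = list(initial)
        heapq.heapify(self._heap)

    def insert(self, x):
        heapq.heappush(self._heap, x)  # O(lg n)

    def find_min(self):
        return self._heap[0]  # the root holds the smallest value, O(1)

    def delete_min(self):
        return heapq.heappop(self._heap)  # O(lg n)

    def is_empty(self):
        return not self._heap

    def make_empty(self):
        self._heap.clear()


pq = MinPriorityQueue([5, 3, 8])
pq.insert(1)
print(pq.find_min())  # 1
print([pq.delete_min() for _ in range(4)])  # [1, 3, 5, 8]
```

Repeatedly calling `delete_min` returns values in non-decreasing order, which is exactly the heap sort application mentioned in the slide.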

Merge Leftist Heaps Definition: Null Path Length

CSE 326: Data Structures. Priority Queues: Leftist Heaps. Brian Curless, Spring 2008

New Heap Operation: Merge. Given two heaps, merge them into one heap – first attempt: insert each element of the smaller heap into the larger. runtime: – second attempt: concatenate binary heaps’ arrays and run buildHeap. runtime:

Leftist Heaps. Idea: Focus all heap maintenance work in one small part of the heap. Leftist heaps: 1. Binary trees 2. Most nodes are on the left 3. All the merging work is done on the right

Definition: Null Path Length. null path length (npl) of a node x = the number of nodes between x and a null in its subtree OR npl(x) = min distance to a descendant with 0 or 1 children • npl(null) = -1 • npl(leaf) = 0 • npl(single-child node) = 0 Equivalent definition: npl(x) = 1 + min{npl(left(x)), npl(right(x))}

Definition: Null Path Length. Another useful definition: npl(x) is the height of the largest perfect binary tree that is both itself rooted at x and contained within the subtree rooted at x.

Leftist Heap Properties • Order property – parent’s priority value is ≤ its children’s priority values – result: minimum element is at the root – (Same as binary heap) • Structure property – For every node x, npl(left(x)) ≥ npl(right(x)) – result: tree is at least as “heavy” on the left as the right (Terminology: we will say a leftist heap’s tree is a leftist tree.)

Are These Leftist? [Figure: example trees with null path lengths shown in the nodes]

Observations. Are leftist trees always… – complete? – balanced? Consider a subtree of a leftist tree… – is it leftist?

Right Path in a Leftist Tree is Short (#1). Claim: The right path (path from root to rightmost leaf) is as short as any in the tree.

Right Path in a Leftist Tree is Short (#2). Claim: If the right path has r nodes, then the tree has -
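The recursive definition npl(x) = 1 + min{npl(left(x)), npl(right(x))} and the structure property can be checked directly on small trees. The following Python sketch is illustrative only: `Node`, `npl`, and `is_leftist` are hypothetical names, keys are omitted because only the shape of the tree matters here, and `npl` is recomputed on every call rather than cached.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def npl(x: Optional[Node]) -> int:
    """Null path length: npl(null) = -1, otherwise 1 + min over the children."""
    if x is None:
        return -1
    return 1 + min(npl(x.left), npl(x.right))


def is_leftist(x: Optional[Node]) -> bool:
    """Structure property: npl(left) >= npl(right) at every node."""
    if x is None:
        return True
    return (
        npl(x.left) >= npl(x.right)
        and is_leftist(x.left)
        and is_leftist(x.right)
    )


leaf = Node()
only_left = Node(left=Node())            # single child on the left
only_right = Node(right=Node())          # single child on the right
perfect = Node(left=Node(), right=Node())

print(npl(leaf), npl(only_left), npl(perfect))  # 0 0 1
print(is_leftist(only_left), is_leftist(only_right))  # True False
```

The `only_right` case shows why a single child must hang on the left: an empty left subtree has npl -1, which is smaller than the npl of any non-empty right subtree.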

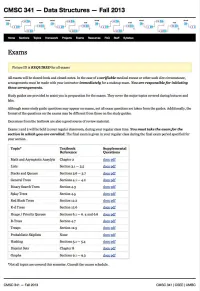

CSCI-1200 Data Structures — Fall 2016 Lecture 24 – Priority Queues

Review from Lectures 22 & 23 • Hash Tables, Hash Functions, and Collision Resolution • Performance of: Hash Tables vs. Binary Search Trees • Collision resolution: separate chaining vs open addressing • STL's unordered_set (and unordered_map) • Using a hash table to implement a set/map – Hash functions as functors/function objects – Iterators, find, insert, and erase • Using STL's for_each • Something weird & cool in C++... Function Objects, a.k.a. Functors

Today's Lecture • STL Queue and STL Stack • Definition of a Binary Heap • What's a Priority Queue? • A Priority Queue as a Heap • A Heap as a Vector • Building a Heap • Heap Sort • If time allows... Merging heaps is the motivation for leftist heaps

24.1 Additional STL Container Classes: Stacks and Queues • We've studied STL vectors, lists, maps, and sets. These data structures provide a wide range of flexibility in terms of operations. One way to obtain computational efficiency is to consider a simplified set of operations or functionality. • For example, with a hash table we give up the notion of a sorted table and gain in find, insert, & erase efficiency. • 2 additional examples are: – Stacks allow access, insertion and deletion from only one end called the top ∗ There is no access to values in the middle of a stack. ∗ Stacks may be implemented efficiently in terms of vectors and lists, although vectors are preferable. ∗ All stack operations are O(1) – Queues allow insertion at one end, called the back and removal from the other end, called the front ∗ There is no access to values in the middle of a queue. -

Heaps Or Priority Queues Npl(Node with 2 Children) = Min(Npl(Left Child), Npl(Right Child)) + 1

Heaps or Priority Queues. Ming-Hwa Wang, Ph.D. COEN 279/AMTH 377 Design and Analysis of Algorithms, Department of Computer Engineering, Santa Clara University

Basic Operations
Insert(H, x)
DeleteMin(H) or DeleteMax(H)
DecreaseKey(H, x, D)
IncreaseKey(H, x, D)
Delete(H, x)
BuildHeap(H), at most $n/2^{h+1}$ nodes of height h
Merge(H1, H2)

Applications
operating system (scheduler)
selection problem
implementation of greedy algorithm
event simulation

Leftist heaps
Npl(node with 2 children) = min(Npl(left child), Npl(right child)) + 1
leftist heap property: Npl(left child) ≥ Npl(right child)
A leftist tree with r nodes on the right path must have at least $2^r - 1$ nodes, i.e., a leftist tree of N nodes has a right path containing at most lg(N+1) nodes.
perform all work on the right path
insertion as a merge of a one-node heap with a larger heap
merge: Recursively merge the heap with a larger root with the right sub-heap of the heap with the smaller root. If the result heap is not a leftist heap, swap the root's left and right children and update the null path length. O(lgN)
Non-recursive algorithm: First pass, create a new tree by merging the right paths of both heaps, arrange the nodes on the right paths of H1 and H2 in sorted order, keeping their respective left children. Second pass, made up the heap, and child swaps are performed at nodes that violate the leftist heap property.
a binary heap is a leftist heap, but not vice versa

Skew Heaps
Skew heaps have heap order but no structural constraint.

Binary Heaps
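The bound on the right path stated in these notes (a leftist tree whose right path has r nodes contains at least 2^r − 1 nodes) follows from a short induction, sketched here as a worked derivation. The symbol S(r), the minimum size of a leftist tree whose right path has at least r nodes, is introduced only for this sketch. The key step uses two facts: the right path of a leftist tree has npl(root) + 1 nodes, and npl(left child) ≥ npl(right child), so the left subtree's right path is at least as long as the right subtree's.

```latex
\begin{align*}
S(1) &\ge 1 = 2^{1} - 1, \\
S(r) &\ge 1
      + \underbrace{S(r-1)}_{\text{left subtree}}
      + \underbrace{S(r-1)}_{\text{right subtree}}
      \ge 1 + 2\,\bigl(2^{r-1} - 1\bigr) = 2^{r} - 1
      \qquad (r > 1), \\
N &\ge 2^{r} - 1 \;\Longrightarrow\; r \le \lg(N + 1).
\end{align*}
```

Since a merge only walks the right paths of the two heaps, this gives the O(lg N) merge cost quoted in the notes.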