Practical Set Partitioning and Column Generation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

1997 Sundance Film Festival Awards Jurors

1997 SUNDANCE FILM FESTIVAL The 1997 Sundance Film Festival continued to attract crowds, international attention and an appreciative group of alumni filmmakers. Many of the Premiere filmmakers were returning directors (Errol Morris, Tom DiCillo, Victor Nunez, Gregg Araki, Kevin Smith), whose earlier, sometimes unknown, work had received a warm reception at Sundance. The Piper-Heidsieck tribute to independent vision went to actor/director Tim Robbins, and a major retrospective of the works of German New-Wave giant Rainer Werner Fassbinder was staged, with many of his original actors flown in for forums. It was a fitting tribute to both Fassbinder and the Festival and the ways that American independent cinema was indeed becoming international. AWARDS GRAND JURY PRIZE JURY PRIZE IN LATIN AMERICAN CINEMA Documentary—GIRLS LIKE US, directed by Jane C. Wagner and LANDSCAPES OF MEMORY (O SERTÃO DAS MEMÓRIAS), directed by José Araújo Tina DiFeliciantonio SPECIAL JURY AWARD IN LATIN AMERICAN CINEMA Dramatic—SUNDAY, directed by Jonathan Nossiter DEEP CRIMSON, directed by Arturo Ripstein AUDIENCE AWARD JURY PRIZE IN SHORT FILMMAKING Documentary—Paul Monette: THE BRINK OF SUMMER’S END, directed by MAN ABOUT TOWN, directed by Kris Isacsson Monte Bramer Dramatic—HURRICANE, directed by Morgan J. Freeman; and LOVE JONES, HONORABLE MENTIONS IN SHORT FILMMAKING directed by Theodore Witcher (shared) BIRDHOUSE, directed by Richard C. Zimmerman; and SYPHON-GUN, directed by KC Amos FILMMAKERS TROPHY Documentary—LICENSED TO KILL, directed by Arthur Dong Dramatic—IN THE COMPANY OF MEN, directed by Neil LaBute DIRECTING AWARD Documentary—ARTHUR DONG, director of Licensed To Kill Dramatic—MORGAN J. -

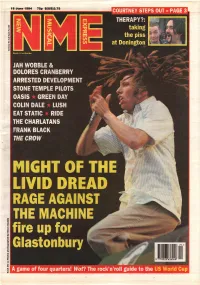

1994.06.18-NME.Pdf

INDIE 45s US 45s PICTURE: PENNIE SMITH PENNIE PICTURE: 1 I SWEAR........................ ................. AII-4-One (Blitzz) 2 I’LL REMEMBER............................. Madonna (Maverick) 3 ANYTIME, ANYPLACE...................... Janet Jackson (Virgin) 4 REGULATE....................... Warren G & Nate Dogg (Outburst) 5 THE SIGN.......... Ace Of Base (Arista) 6 DON’TTURN AROUND......................... Ace Of Base (Arista) 7 BABY I LOVE YOUR WAY....................... Big Mountain (RCA) 8 THE MOST BEAUTIFUL GIRL IN THE WORLD......... Prince(NPG) 9 YOUMEANTHEWORLDTOME.............. Toni Braxton (UFace) NETWORK UK TOP SO 4Ss 10 BACK AND FORTH......................... Aaliyah (Jive) 11 RETURN TO INNOCENCE.......................... Enigma (Virgin) 1 1 LOVE IS ALL AROUND......... ...Wet Wet Wet (Precious) 37 (—) JAILBIRD............................. Primal Scream (Creation) 12 IFYOUGO ............... ....................... JonSecada(SBK) 38 38 PATIENCE OF ANGELS. Eddi Reader (Blanco Y Negro) 13 YOUR BODY’S CALLING. R Kelly (Jive) 2 5 BABYI LOVE YOUR WAY. Big Mountain (RCA) 14 I’M READY. Tevin Campbell (Qwest) 3 11 YOU DON’T LOVE ME (NO, NO, NO).... Dawn Penn (Atlantic) 39 23 JUST A STEP FROM HEAVEN .. Eternal (EMI) 15 BUMP’N’ GRIND......................................R Kelly (Jive) 4 4 GET-A-WAY. Maxx(Pulse8) 40 31 MMMMMMMMMMMM....... Crash Test Dummies (RCA) 5 7 NO GOOD (STARTTHE DANCE)........... The Prodigy (XL) 41 37 DIE LAUGHING........ ................. Therapy? (A&M) 6 6 ABSOLUTELY FABULOUS.. Absolutely Fabulous (Spaghetti) 42 26 TAKE IT BACK ............................ Pink Floyd (EMI) 7 ( - ) ANYTIME YOU NEED A FRIEND... Mariah Carey (Columbia) 43 ( - ) HARMONICAMAN....................... Bravado (Peach) USLPs 8 3 AROUNDTHEWORLD............... East 17 (London) 44 ( - ) EASETHEPRESSURE................... 2woThird3(Epic) 9 2 COME ON YOU REDS 45 30 THEREAL THING.............. Tony Di Bart (Cleveland City) 3 THESIGN.,. 
Ace Of Base (Arista) 46 33 THE MOST BEAUTIFUL GIRL IN THE WORLD. -

Digital Music Sampling and Copyright

DIGITAL MUSIC SAMPLING AND COPYRIGHT POLICY-A BITTERSWEET SYMPHONY?' ASSESSING THE CONTINUED LEGALITY OF MUSIC SAMPLING IN THE UNITED KINGDOM, THE NETHERLANDS, AND THE UNITED STATES Melissa Hahn* TABLE OF CONTENTS I. INTRODUCTION: ABORIGINAL VOICES AND THE 1996 OLYMPICS-ASSESSING THE GLOBAL SCOPE OF DIGITAL MUSIC SAMPLING ....................................... 715 II. BACKGROUND: THE RISE OF DIGITAL MUSIC SAMPLING AND ITS LEGAL RAMIFICATIONS ................... 719 A. The Emergence of Digital Music Sampling in Popular Music and in Copyright Litigation ................ 719 B. The Berne Convention and Moral Rights .................. 721 III. ANALYSIS: A GLOBAL CONSIDERATION OF THE CURRENT LEGALITY OF DIGITAL MUSIC SAMPLING ............ 723 A. The Legality of Sampling Under U.K. Law ................ 723 B. The Legality of Sampling Under Dutch Law ............... 726 C. The Legality of Sampling Under U.S. Law ................. 730 * J.D., University of Georgia School of Law, 2006; B.A., University of Southern California, 2001; MSc, London School of Economics, 2002. In the late 1990s, a British court ordered rock group the Verve to pay all of the royalties stemming from their 1997 hit "Bittersweet Symphony" to the owners of the Rolling Stones' song "The Last Time" for failing to clear the use of a Rolling Stones' sample in the song. See Ben Challis, The Song Remains the Same: Music Sampling in the Digital Age, MONDAQ, Dec. 23, 2003, http://www.mondaq.com/article.asp?articleid=23823&latestnews.html. The Verve sampled a symphonic remake of the Stones' hit, which was released on the "Rolling Stones Songbook" album under the moniker, the Andrew Oldham Orchestra. Christopher O'Connor, The Verve Sued Again over 'BitterSweet Symphony,'VH .coM, Jan. -

Feat. Eminen) (4:48) 77

01. 50 Cent - Intro (0:06) 75. Ace Of Base - Life Is A Flower (3:44) 02. 50 Cent - What Up Gangsta? (2:59) 76. Ace Of Base - C'est La Vie (3:27) 03. 50 Cent - Patiently Waiting (feat. Eminen) (4:48) 77. Ace Of Base - Lucky Love (Frankie Knuckles Mix) 04. 50 Cent - Many Men (Wish Death) (4:16) (3:42) 05. 50 Cent - In Da Club (3:13) 78. Ace Of Base - Beautiful Life (Junior Vasquez Mix) 06. 50 Cent - High All the Time (4:29) (8:24) 07. 50 Cent - Heat (4:14) 79. Acoustic Guitars - 5 Eiffel (5:12) 08. 50 Cent - If I Can't (3:16) 80. Acoustic Guitars - Stafet (4:22) 09. 50 Cent - Blood Hound (feat. Young Buc) (4:00) 81. Acoustic Guitars - Palosanto (5:16) 10. 50 Cent - Back Down (4:03) 82. Acoustic Guitars - Straits Of Gibraltar (5:11) 11. 50 Cent - P.I.M.P. (4:09) 83. Acoustic Guitars - Guinga (3:21) 12. 50 Cent - Like My Style (feat. Tony Yayo (3:13) 84. Acoustic Guitars - Arabesque (4:42) 13. 50 Cent - Poor Lil' Rich (3:19) 85. Acoustic Guitars - Radiator (2:37) 14. 50 Cent - 21 Questions (feat. Nate Dogg) (3:44) 86. Acoustic Guitars - Through The Mist (5:02) 15. 50 Cent - Don't Push Me (feat. Eminem) (4:08) 87. Acoustic Guitars - Lines Of Cause (5:57) 16. 50 Cent - Gotta Get (4:00) 88. Acoustic Guitars - Time Flourish (6:02) 17. 50 Cent - Wanksta (Bonus) (3:39) 89. Aerosmith - Walk on Water (4:55) 18. -

234 MOTION for Permanent Injunction.. Document Filed by Capitol

Arista Records LLC et al v. Lime Wire LLC et al Doc. 237 Att. 12 EXHIBIT 12 Dockets.Justia.com CRAVATH, SWAINE & MOORE LLP WORLDWIDE PLAZA ROBERT O. JOFFE JAMES C. VARDELL, ID WILLIAM J. WHELAN, ffl DAVIDS. FINKELSTEIN ALLEN FIN KELSON ROBERT H. BARON 825 EIGHTH AVENUE SCOTT A. BARSHAY DAVID GREENWALD RONALD S. ROLFE KEVIN J. GREHAN PHILIP J. BOECKMAN RACHEL G. SKAIST1S PAULC. SAUNOERS STEPHEN S. MADSEN NEW YORK, NY IOOI9-7475 ROGER G. BROOKS PAUL H. ZUMBRO DOUGLAS D. BROADWATER C. ALLEN PARKER WILLIAM V. FOGG JOEL F. HEROLD ALAN C. STEPHENSON MARC S. ROSENBERG TELEPHONE: (212)474-1000 FAIZA J. SAEED ERIC W. HILFERS MAX R. SHULMAN SUSAN WEBSTER FACSIMILE: (212)474-3700 RICHARD J. STARK GEORGE F. SCHOEN STUART W. GOLD TIMOTHY G. MASSAD THOMAS E. DUNN ERIK R. TAVZEL JOHN E. BEERBOWER DAVID MERCADO JULIE SPELLMAN SWEET CRAIG F. ARCELLA TEENA-ANN V, SANKOORIKAL EVAN R. CHESLER ROWAN D. WILSON CITYPOINT RONALD CAM I MICHAEL L. SCHLER PETER T. BARBUR ONE ROPEMAKER STREET MARK I. GREENE ANDREW R. THOMPSON RICHARD LEVIN SANDRA C. GOLDSTEIN LONDON EC2Y 9HR SARKIS JEBEJtAN DAMIEN R. ZOUBEK KRIS F. HEINZELMAN PAUL MICHALSKI JAMES C, WOOLERY LAUREN ANGELILLI TELEPHONE: 44-20-7453-1000 TATIANA LAPUSHCHIK B. ROBBINS Kl ESS LING THOMAS G. RAFFERTY FACSIMILE: 44-20-7860-1 IBO DAVID R. MARRIOTT ROGER D. TURNER MICHAELS. GOLDMAN MICHAEL A. PASKIN ERIC L. SCHIELE PHILIP A. GELSTON RICHARD HALL ANDREW J. PITTS RORYO. MILLSON ELIZABETH L. GRAYER WRITER'S DIRECT DIAL NUMBER MICHAEL T. REYNOLDS FRANCIS P. BARRON JULIE A. -

Enigma MCMXC Ad Mp3, Flac

Enigma MCMXC a.D. mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Electronic / Folk, World, & Country Album: MCMXC a.D. Country: Europe Released: 1990 Style: Abstract, Ambient, New Age MP3 version RAR size: 1479 mb FLAC version RAR size: 1873 mb WMA version RAR size: 1617 mb Rating: 4.7 Votes: 824 Other Formats: MIDI MP4 RA MP2 VOX DMF MOD Tracklist I. The Voice Of Enigma 2:21 Principles Of Lust (11:43) II.A: Sadeness II.B: Find Love II.C: Sadeness (Reprise) III. Callas Went Away 4:29 IV. Mea Culpa 5:01 V. The Voice & The Snake 1:41 VI. Knocking On Forbidden Doors 4:22 Back To The Rivers Of Belief (10:36) VII.A: Way To Eternity VII.B: Hallelujah VII.C: The Rivers Of Belief Companies, etc. Licensed From – EMI Music Copyright (c) – Virgin Published By – S.B.A./GALA RECORDS, INC. Distributed By – S.B.A./GALA RECORDS, INC. Pressed By – ООО "Си Ди Клуб" Credits Producer – Enigma Notes ℗ 1990 Virgin © 1990 Virgin The booklet has only 4 color pages. Packaged in a standard jewel case with black tray. Barcode and Other Identifiers Barcode (Text): 7 24356 32922 5 Barcode (String): 724356329225 Matrix / Runout: 563292 2 Mastering SID Code: IFPI LU66 Mould SID Code: IFPI ACH03 Other versions Category Artist Title (Format) Label Category Country Year 261 209 Enigma MCMXC a.D. (CD, Album) Virgin 261 209 Europe 1990 MCMXC a.D. (Ltd, Sli + 7243 8 47871 2 7243 8 47871 2 Enigma CD, Album, RE + CD, EP, Virgin Taiwan 1999 7 7 Comp, Promo) MCMXC a.D. -

List: 44 When Your Heart Stops Beating ...And You Will Know Us By

List: 44 When Your Heart Stops Beating ...And You Will Know Us By The Trail Of Dead ...And You Will Know Us By The Trail Of Dead ...And You Will Know Us By The Trail Of Dead So Divided ...And You Will Know Us By The Trail Of Dead Worlds Apart 10,000 Maniacs In My Tribe 10,000 Maniacs Our Time In Eden 10,000 Maniacs The Earth Pressed Flat 100% Funk 100% Funk 3 Doors Down Away From The Sun 3 Doors Down The Better Life 30 Seconds To Mars 30 Seconds To Mars 30 Seconds To Mars A Beautiful Lie 311 311 311 Evolver 311 Greatest Hits '93-'03 311 Soundsystem 504 Plan Treehouse Talk 7:22 Band 7:22 Live 80's New Wave 80's New Wave (Disc1) 80's New Wave 80's New Wave (Disc2) A Day Away Touch M, Tease Me, Take Me For Granted A Day To Remember And Their Name Was Treason A New Found Glory Catalyst A New Found Glory Coming Home A New Found Glory From The Screen To Your Stereo A New Found Glory Nothing Gold Can Stay A New Found Glory Sticks And Stones Aaron Spiro Sing Abba Gold Aberfeldy Young Forever AC/DC AC/DC Live: Collector's Edition (Disc 1) AC/DC AC/DC Live: Collector's Edition (Disc 2) AC/DC Back In Black AC/DC Ballbreaker AC/DC Blow Up Your Video AC/DC Dirty Deeds Done Dirt Cheap AC/DC Fly On The Wall AC/DC For Those About To Rock We Salute You AC/DC High Voltage AC/DC Highway To Hell AC/DC If You Want Blood You've Got I AC/DC Let There Be Rock AC/DC Powerage AC/DC Stiff Upper Lip AC/DC T.N.T. -

Music & Websites

Resources for SoulCollagers: Music & Websites from KaleidoSoul.com KaleidoSoul Recommended Resources For SoulCollagers Volume 2: MUSIC & WEBSITES Compiled by Anne Marie Bennett, SoulCollage® Facilitator & Facilitator Trainer, Massachusetts, New England, the Internet, & Beyond 1 Resources for SoulCollagers: Music & Websites from KaleidoSoul.com KaleidoSoul Resources for SoulCollagers- Volume 2: Music & Websites , copyright 2010 by Anne Marie Bennett. All rights reserved. Please do not copy, forward, give away or reproduce this material in any manner without written permission from the author. Hundreds of hours (and much creative energy) were devoted to the creation of this e-book. Your purchase supports the continuing efforts of KaleidoSoul.com in its mission to make SoulCollage available to people around the world. To give feedback or to contact the author, please email [email protected] . SoulCollage® is a trademarked process, created by Seena Frost. For more information about Seena, SoulCollage®, and Facilitator Trainings, please visit www.SoulCollage.com . This e-book is brought to you by ….......... www.KaleidoSoul.com Spinning the fragments of your world into wholeness and beauty through SoulCollage ® 2 Resources for SoulCollagers: Music & Websites from KaleidoSoul.com Introduction I really love my dual roles as Editor and Writer of Soul Treasures , the KaleidoSoul Kindred Spirits Members’ Newsletter. And one of my favorite jobs in those roles is seeking out resources for SoulCollagers to enhance their practice. It’s a pleasure to stumble across a website or CD that I know will resonate with you. I often wander around the web searching for sites that have potential to enhance our inner wisdom and outer knowledge. -

Strange Sounds Strange Sounds This Page Intentionally Left Blank Strange Sounds

Strange Sounds Strange Sounds This page intentionally left blank Strange Sounds Music, Technology, & Culture TIMOTHY D. TAYLOR ROUTLEDGE NEW YORK LONDON Published in 2001 by Routledge 270 Madison Ave, New York NY 10016 Published in Great Britain by Routledge 2 Park Square, Milton Park, Abingdon, Oxon, OX14 4RN Routledge is an imprint of the Taylor & Francis Group. Transferred to Digital Printing 2010 Copyright © 2001 by Routledge All rights reserved. No part of this book may be printed or reproduced or utilized in any form or by electronic, mechanical, or other means, now known or hereafter invented, including photocopying and recording or in any information storage or retrieval system, without permission in writing from the publisher. The publisher and author gratefully acknowledge permission to reprint the following: An earlier version of chapter 7 appeared as "Music at Home, Politics Afar" in Decomposition: Post-Disciplinary Performance, edited by Sue-Ellen Case, Philip Brett, and Susan Leigh Foster, © 2000 by Indiana University Press. Example 4.1, an excerpt from "Moon Moods," music by Harry Revel, © 1946, reprinted by permission of Michael H. Goldsen, Inc., and William C. Schulman. Figure 4.5 used courtesy of Capitol Records, Inc. Figure 7.2 and figure 7.3 reprinted by permission of Geert-Jan Hobijn. Figure 8.1 and figure 8.2 reprinted by permission of Synthetic Sa:dhus. Figure 8.3, figure 8.4, and figure 8.5 reprinted by permission of Tsunami Enterprises, Inc. Cataloguing-in-Publication Data is available from the Library of Congress. ISBN 0-415-93683-7 (hbk.) - ISBN 0-415-93684-5 (pbk.) Publisher's Note The publisher has gone to great lengths to ensure the quality of this reprint but points out that some imperfections in the original may be apparent. -

Motivational and Uplifting Songs for New Year's & Beyond

Motivational and Uplifting Songs for New Year's & Beyond Song Name Time Artist Album Theme A Change Will Do You Good 3:50 Sheryl Crow Sheryl Crow change A Quiet Revolution (Phatjack Remix) 7:40 Lustral Deeper Darker Secrets empowerment All the Above 5:11 Maino [feat. T-Pain] Blag Flag City living the dream All Things (Keep Getting Better) 3:14 Widelife (Queer Eye Theme) Life is good Andromeda 6:37 Chicane Behind the Sun reflection Anything's Possible 3:47 Johnny Lang Turn Around empowerment Applause 3:32 Lady Gaga Artpop celebration Auld Lang Syne 2:04 Red Hot Chilli Pipers Bagrock to the Masses celebration Auld Lang Syne (The New Years Anthem) 6:11 Mariah Carey Auld Lang Syne (The Remixes) new beginnings Beautiful Day 4:08 U2 All That You Can't Leave Behind empowerment, Life is good Believe 3:46 The Bravery The Sun and the Moon belief Better Days 3:36 Better Days Goo Goo Dolls Change; reflection Better Than Today 3:36 Kylie Minogue Better Than Today take a chance Brand New Book 3:47 Train Brand New Book new beginnings Bulletproof 3:28 La Roux The Gold EP personal growth Celebrate Love 4:54 Pick Me Off the Dirt Klangstrahler Projekt celebration Celebration 3:43 Celebrate! Kool & The Gang celebration Copyright © 2012 Indoor Cycling Association www.indoorcyclingassociation.com Celebration 3:35 Madonna Celebration - Single celebration Change 3:17 John Waite Ignition change Change 4:40 Taylor Swift Taylor Swift new beginnings Change 3:59 Tears For Fears Shout: The Very Best of Tears For Fears change Change is Good 4:11 Rick Danko Times Like -

First Amended Complaint for Federal Copyright

Arista Records LLC et al v. Lime Wire LLC et al Doc. 45 UNITED STATES DISTRICT COURT SOUTHERN DISTRICT OF NEW YORK ARISTA RECORDS LLC; ATLANTIC RECORDING CORPORATION; BMG MUSIC; CAPITOL RECORDS, INC.; ELEKTRA ENTERTAINMENT GROUP INC.; INTERSCOPE RECORDS; LAFACE RECORDS LLC; ECF CASE MOTOWN RECORD COMPANY, L.P.; PRIORITY RECORDS LLC; SONY BMG MUSIC FIRST AMENDED COMPLAINT FOR ENTERTAINMENT; UMG RECORDINGS, INC.; FEDERAL COPYRIGHT VIRGIN RECORDS AMERICA, INC.; and INFRINGEMENT, COMMON LAW WARNER BROS. RECORDS INC., COPYRIGHT INFRINGEMENT, Plaintiffs, UNFAIR COMPETITION, CONVEYANCE MADE WITH v. INTENT TO DEFRAUD AND UNJUST ENRICHMENT LIME WIRE LLC; LIME GROUP LLC; MARK GORTON; GREG BILDSON, and M.J.G. LIME 06 Civ. 05936 (GEL) WIRE FAMILY LIMITED PARTNERSHIP, Defendants. Plaintiffs hereby allege on personal knowledge as to allegations concerning themselves, and on information and belief as to all other allegations, as follows: NATURE OF THE ACTION 1. Plaintiffs are record companies that produce, manufacture, distribute, sell, and license the vast majority of commercial sound recordings in this country. Defendants' business, operated under the trade name "LimeWire" and variations thereof, is devoted essentially to the Internet piracy of Plaintiffs' sound recordings. Defendants designed, promote, distribute, support and maintain the LimeWire software, system/network, and related services to consumers for the well-known and overarching purpose of making and distributing unlimited copies of Plaintiffs' sound recordings REDACTED VERSION - COMPLETE VERSION FILED UNDER SEAL Dockets.Justia.com without paying Plaintiffs anything. Plaintiffs bring this action to stop Defendants' massive and daily infringement of Plaintiffs' copyrights. 2. The scope of infringement caused by Defendants is staggering. 
Millions of infringing copies of Plaintiffs' sound recordings have been made and distributed through LimeWire — copies that can be and are permanently stored, played, and further distributed by LimeWire's users. -

236 Valentine's Day Songs (2015)

236 Valentine's Day Songs (2015) Song Name Time Artist Album Theme Lay All Your Love On Me 3:02 ABBA The Definitive Collection passion Take a Chance on Me 4:05 Abba Gold romance Love in an Elevator 5:21 Aerosmith Big Ones fun Sweet emotion 5:10 Aerosmith Armageddon passion The Age of Love (Jam & Spoon Watch Out For Stella Mix) 4:08 Age of Love The Age of Love adventure Akon, Colby O'Donis, Kardinal Beautiful 5:13 Offishall Freedom Love Do You Love Me 2:16 Amanda Jenssen Killing My Darlings Love Sweet Pea 2:09 Amos Lee Supply and Demand passion Let Love Fly (Joe Claussell's Sacred Rhythm LP Version) 5:46 Ananda Project Night Blossom adventure True Love 6:05 Angels and Airwaves I-Empire love Reckless (With Your Love) 5:42 Azari & iii Defected in the House Ibiza passion Romeo 3:26 Basement Jaxx The Singles love Love Can Do You No Harm 3:04 Beangrowers Not In A Million Lovers adventure Able to Love (Sfaction Mix) 3:27 Benny Benassi & The Biz No Matter What You Do / Able to Love adventure Crazy in Love 3:56 Beyonce Dangerously In Love Love Cradle of love 4:38 Billy Idol Billy Idol: Greatest Hits Love Where is the Love 4:33 Black Eyed Peas Elephunk World needs love Heart of Glass 4:35 Blondie The Best of Blondie relationship I Feel Love 3:13 Blue Man Group & Venus Hum The Complex romance One Love/People Get Ready 3:02 Bob Marley & The Wailers Exodus romance Thank You For Loving Me 5:09 Bon Jovi Crush relationship Somebody to Love (UK Club Mix) 131/65bpm 4:02 Boogie Pimps Somebody to Love CDM romance Copyright © 2012 Indoor Cycling Association