Physics in Our Lives

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

Igor Tamm 1895 - 1971 Awarded the Nobel Prize for Physics in 1958

The famous Russian physicist Igor Evgenievich Tamm is best known for his theoretical explanation of the origin of Cherenkov radiation. But his work covered various fields of physics: nuclear physics, elementary particles, solid-state physics and so on. In about 1935, he and his colleague, Ilya Frank, concluded that although objects can’t travel faster than light in a vacuum, they can do so in other media. Cherenkov radiation is emitted if charged particles pass through a medium faster than the speed of light in that medium. For this research Tamm, together with his Russian colleagues, was awarded the 1958 Nobel Prize in Physics. Igor Tamm was born in 1895 in Vladivostok when Russia was still ruled by the Tsar. His father was a civil engineer who worked on the electricity and sewage systems. When Tamm was six years old his family moved to Elizavetgrad, in the Ukraine. He graduated from the local Gymnasium in 1913. Young Tamm dreamed of becoming a revolutionary, but his father disapproved. However, only his mother was able to convince him to change his plans. She told him that his father’s weak heart could not take it if something happened to him. And so, in 1913, Tamm decided to leave Russia for a year and continue his studies in Edinburgh. In 1923 Tamm was offered a teaching post at the Second Moscow University and later he was awarded a professorship at Moscow State University. Tamm led an expedition to search for treasure in the Pamirs. -

Semiconductor Heterostructures and Their Application

Zhores Alferov. The History of Semiconductor Heterostructures Research: from Early Double Heterostructure Concept to Modern Quantum Dot Structures. St Petersburg Academic University, Nanotechnology Research and Education Centre RAS. • Introduction • Transistor discovery • Discovery of laser-maser principle and birth of optoelectronics • Heterostructure early proposals • Double heterostructure concept: classical, quantum well and superlattice heterostructure. “God-made” and “Man-made” crystals • Heterostructure electronics • Quantum dot heterostructures and development of quantum dot lasers • Future trends in heterostructure technology • Summary. The Nobel Prize in Physics 1956 "for their researches on semiconductors and their discovery of the transistor effect": William Bradford Shockley (1910–1989), John Bardeen (1908–1991), Walter Houser Brattain (1902–1987). W. Shockley and A. Ioffe, Prague, 1960. The Nobel Prize in Physics 1964 "for fundamental work in the field of quantum electronics, which has led to the construction of oscillators and amplifiers based on the maser-laser principle": Charles Hard Townes (b. 1915), Nicolay Basov (1922–2001), Aleksandr Prokhorov (1916–2002). Proposals of semiconductor injection lasers • N. Basov, O. Krochin and Yu. Popov (Lebedev Institute, USSR Academy of Sciences, Moscow) JETP, 40, 1879 (1961) • M.G.A. Bernard and G. Duraffourg (Centre National d’Etudes des Telecommunications, Issy-les-Moulineaux, Seine) Physica Status Solidi, 1, 699 (1961). Lasers and LEDs on p–n junctions • January 1962: observations -

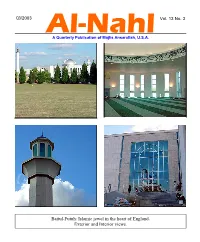

Al-Nahl Volume 13 Number 3

Q3/2003 Vol. 13 No. 3 Al-Nahl A Quarterly Publication of Majlis Ansarullah, U.S.A. Baitul-Futuh: Islamic jewel in the heart of England. Exterior and Interior views. Special Issue of the Al-Nahl on the Life of Hadrat Dr. Mufti Muhammad Sadiq, radiyallahu ‘anhu. 60 pages, $2. Special Issue on Dr. Abdus Salam. 220 pages, 42 color and B&W pictures, $3. Ansar (Ansarullah News) is published monthly by Majlis Ansarullah U.S.A. and is sent free of charge to all Ansar in the U.S. Ordering Information: Send a check or money order in the indicated amount along with your order to Chaudhary Mushtaq Ahmad, 15000 Good Hope Rd, Silver Spring, MD 20905. Price includes shipping and handling within the continental U.S. Conditions of Bai‘at, Pocket-Size Edition: Majlis Ansarullah, U.S.A. has published the ten conditions of initiation into the Ahmadiyya Muslim Community in a pocket-size brochure. Contact your local officials for a free copy or write to Ansar Publications, 15000 Good Hope Rd, Silver Spring MD 20905. Razzaq and Farida: A story for children written by Dr. Yusef A. Lateef. Children and new Muslims, all can read and enjoy this story. It makes a great gift for the children of Ahmadi, Non-Ahmadi and Non-Muslim relatives, friends and acquaintances. The book contains colorful drawings. Please send $1.50 per copy to Chaudhary Mushtaq Ahmad, 15000 Good Hope Rd, Silver Spring, MD 20905 with your mailing address and phone number. Majlis Ansarullah U.S.A. will pay the postage and handling within the continental U.S. -

State of the Nation 2008 Canada’s Science, Technology and Innovation System

Science, Technology and Innovation Council. Permission to Reproduce: Except as otherwise specifically noted, the information in this publication may be reproduced, in part or in whole and by any means, without charge or further permission from the Science, Technology and Innovation Council (STIC), provided that due diligence is exercised in ensuring the accuracy of the information, that STIC is identified as the source institution, and that the reproduction is not represented as an official version of the information reproduced, nor as having been made in affiliation with, or with the endorsement of, STIC. © 2009, Government of Canada (Science, Technology and Innovation Council). Canada’s Science, Technology and Innovation System: State of the Nation 2008. All rights reserved. Aussi disponible en français sous le titre Le système des sciences, de la technologie et de l’innovation au Canada : l’état des lieux en 2008. This publication is also available online at www.stic-csti.ca. This publication is available upon request in accessible formats. Contact the Science, Technology and Innovation Council Secretariat at the number listed below. For additional copies of this publication, please contact: Science, Technology and Innovation Council Secretariat, 235 Queen Street, 9th Floor, Ottawa ON K1A 0H5. Tel.: 613-952-0998 Fax: 613-952-0459 Web: www.stic-csti.ca Email: [email protected] Cat. No. Iu4-142/2009E ISBN 978-1-100-12165-9 60579 50% recycled fiber. Context and Executive Summary -

Print Version

Strategic Partners and Leaders of World Innovation Peter the Great St. Petersburg Polytechnic University and Tsinghua University Presentation of the Rector of Peter the Great St. Petersburg Polytechnic University, Academician of the RAS A.I. Rudskoi within the frame of the Tsinghua Global Vision Lectures at Tsinghua University (China); April 15, 2019 Dear Chairman of the University Council Professor Chen Xu, distinguished First Secretary of the Embassy of the Russian Federation, representative of the Ministry of Education and Science of the Russian Federation in the People's Republic of China Igor Pozdnyakov, distinguished academicians of the Chinese Academy of Sciences and professors of Tsinghua University, dear students and graduates, dear colleagues! It is a great honor for me to be here today in this Hall of Tsinghua University, a strategic partner of Peter the Great St. Petersburg Polytechnic University, as a speaker of the Tsinghua Global Vision Lectures, earlier attended by rectors of global world universities, leading experts and prominent political figures. Tsinghua University is the leader of China's global education. You hold with confidence the first place in the national ranking and are among the top-rank universities in the world. Peter the Great St. Petersburg Polytechnic University is one of the top 10 Russian universities and the largest technical one, the leader in engineering education in the Russian Federation. Our universities, as leaders of global education, are facing the essential challenge of ensuring the sustainable development of society, creating and introducing innovations. This year, Peter the Great St. Petersburg Polytechnic University ranked 85th and got in the top-100 world universities in the Times Higher Education (THE) University Impact Rankings. -

2005 CERN–CLAF School of High-Energy Physics

CERN–2006–015, 19 December 2006. ORGANISATION EUROPÉENNE POUR LA RECHERCHE NUCLÉAIRE, CERN, EUROPEAN ORGANIZATION FOR NUCLEAR RESEARCH. 2005 CERN–CLAF School of High-Energy Physics, Malargüe, Argentina, 27 February–12 March 2005. Proceedings. Editors: N. Ellis, M.T. Dova. GENEVA 2006. CERN–290 copies printed–December 2006. Abstract: The CERN–CLAF School of High-Energy Physics is intended to give young physicists an introduction to the theoretical aspects of recent advances in elementary particle physics. These proceedings contain lectures on field theory and the Standard Model, quantum chromodynamics, CP violation and flavour physics, as well as reports on cosmic rays, the Pierre Auger Project, instrumentation, and trigger and data-acquisition systems. Preface: The third in the new series of Latin American Schools of High-Energy Physics took place in Malargüe, located in the south-east of the Province of Mendoza in Argentina, from 27 February to 12 March 2005. It was organized jointly by CERN and CLAF (Centro Latino Americano de Física), and with the strong support of CONICET (Consejo Nacional de Investigaciones Científicas y Técnicas). Fifty-four students coming from eleven different countries attended the School. While most of the students stayed in Hotel Rio Grande, a few students and the Staff stayed at Microtel situated close by. However, all the participants ate their meals together at Hotel Rio Grande. According to the tradition of the School the students shared twin rooms mixing nationalities and in particular Europeans together with Latin Americans. María Teresa Dova from La Plata University was the local director for the School. -

Real Time Density Functional Simulations of Quantum Scale

Real Time Density Functional Simulations of Quantum Scale Conductance by Jeremy Scott Evans, B.A., Franklin & Marshall College (2003). Submitted to the Department of Chemistry in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY, June 2009. © Massachusetts Institute of Technology 2009. All rights reserved. Author: Department of Chemistry, February 2, 2009. Certified by: Troy Van Voorhis, Associate Professor of Chemistry, Thesis Supervisor. Accepted by: Robert W. Field, Chairman, Department Committee on Graduate Theses. This doctoral thesis has been examined by a Committee of the Department of Chemistry as follows: Professor Robert J. Silbey, Chairman, Thesis Committee, Class of 1942 Professor of Chemistry; Professor Troy Van Voorhis, Thesis Supervisor, Associate Professor of Chemistry; Professor Jianshu Cao, Member, Thesis Committee, Associate Professor of Chemistry. Abstract: We study electronic conductance through single molecules by subjecting -

Urdu Syllabus

TUMKUR UNIVERSITY DEPARTMENT OF URDU. SYLLABUS AND TEXT BOOKS UNDER CBCS SCHEME LANGUAGE URDU 1st Semester B.A./B.Sc./B.Com/BBM/BCA Effect From 2016-17 1st Semester B.A. Syllabus: Texts: 1. Collection of Prose and Poetry Urdu Language Text Book for First Semester B.A.: Edited by: URDU BOS (UG) (Printed and Published by Prasaranga, Bangalore University, Bangalore) 2. Non-detail: Selected 4 Chapters From Text Book Reference Books: 1. Yadgaray Hali Saleha Aabid Hussain 2. Iqbal Ka Narang QopiChandt 'i Page 1 z' i!. .F}*$T g_€.9f.*g.,,,E B'A BE$BEE CBU R$E Eenlcprqrerlh'ed:.Ufifi9 TFXT B €KeCn e,A I SEMESTER, : ,1 1;5:. -ll-=-- -i- - 1. padiye Gar Bcemar. 'M,tr*hf ag:A.hmgd-$tib.uf i 1.,gglrEdnre:a E*yl{arsfrt$ay Khwaja Hasan Nizarni 3" M_ugalrnanen Ki GurashthaTaleem Shibll Nomani +. lfilopatra N+y,Ek Moti €hola Sclence Ki Duniya : 5. g,€land:|4i$ ..- Manarir,Aashiq flarganvi PelfTR.Y i X., Hazrathfsmail Ki Viladat .FJafeez,J*lan*ari Naath 2. Hsli Mir.*e6halib 3. lqbal 4. T*j &Iahat 5*-e-ubipe.t{i Saher Ludhianawi ,,, lqbal, Amjad, Akbar {Z Eaehf 6g'**e€{F} i ': 1.. 6azaf W*& 2;1 ' 66;*; JaB:Flis,qf'*kfiit" 4., : €*itrl $hmed Fara:, 4. €azgl Firaq ,5; *- ,Elajrooh 6, Gqzal Shahqr..Y.aar' V. Gazal tiiarnsp{.4i1sruu ' 8. Gaal Narir Kqgrnt NG$I.SE.f*IL.: 1- : .*akF*!h*s ,&ri*an Ch*lrdar; 3. $alartrf,;oat &jendar.Sixgir.Ee t 3-, llfar*€,Ffate Tariq.€-hil*ari 4',,&alandar t'- €hig*lrl*tn:Ftyder' Ah*|.,9 . -

50 31-01-2018 Time: 60 Min

8th AHMED BIN ABOOD MEMORIAL State Level Physics-Maths Knowledge Test-2018. Organized by Department of Physics and Department of Mathematics, Milliya Arts, Science and Management Science College, BEED. Max. Marks: 50 Date: 31-01-2018 Time: 60 min. Instructions: All the questions carry equal marks. Mobiles and calculators are not allowed. The student must write his/her name, college name and allotted seat number on the response sheet provided. The student must mark the answer on the prescribed response sheet by completely blackening the oval with a black/blue pen only. Section A 1) If the earth completely loses its gravity, then for any body… (a) both mass and weight become zero (b) neither mass nor weight becomes zero (c) weight becomes zero but not the mass (d) mass becomes zero but not the weight 2) The angular speed of a flywheel is 3π rad/s. It is rotating at… (a) 3 rpm (b) 6 rpm (c) 90 rpm (d) 60 rpm 3) Resonance occurs when… (a) a body vibrates at a frequency lower than its normal frequency. (b) a body vibrates at a frequency higher than its normal frequency. (c) a body is set into vibration at its natural frequency by another body vibrating with the same frequency. (d) a body is made of the same material as the sound source. 4) The SI unit of magnetic flux density is… (a) tesla (b) henry (c) volt (d) volt-second 5) Ampere’s circuital law is the integral form of… (a) Lenz’s law (b) Faraday’s law (c) Biot-Savart’s law (d) Coulomb’s law 6) The energy band gap is highest in the case of… -

Institute of Physics

Electron Spectroscopy for Atoms, Molecules and Condensed Matter. Nobel Lecture, December 8, 1981, by Kai Siegbahn, Institute of Physics, University of Uppsala, Box 530, S-751 21 Uppsala, Sweden. UUIP-1052, December 1981. UPPSALA UNIVERSITY INSTITUTE OF PHYSICS. In my thesis /1/, which was presented in 1944, I described some work which I had done to study β decay and internal conversion in radioactive decay by means of two different principles. One of these was based on the semi-circular focusing of electrons in a homogeneous magnetic field, while the other used a big magnetic lens. The first principle could give good resolution but low intensity, and the other just the reverse. I was then looking for a possibility of combining the two good properties into one instrument. The idea was to shape the previously homogeneous magnetic field in such a way that focusing should occur in two directions, instead of only one as in the semi-circular case. It was known that in betatrons the electrons performed oscillatory motions both in the radial and the axial directions. By putting the angles of period equal for the two oscillations Nils Svartholm and I /2,3/ found a simple condition for the magnetic field form required to give a real electron optical image i.e. -

Photon Mapping Superluminal Particles

EUROGRAPHICS 2020 / F. Banterle and A. Wilkie, Short Paper. Photon Mapping Superluminal Particles. G. Waldemarson¹,² and M. Doggett². ¹Arm Ltd, Sweden ²Lund University, Sweden. Abstract: One type of light source that remains largely unexplored in the field of light transport rendering is the light generated by superluminal particles, a phenomenon more commonly known as Cherenkov radiation [Č37]. By re-purposing the Frank-Tamm equation [FT91] for rendering, the energy output of these particles can be estimated and consequently mapped to photons, making it possible to visualize the brilliant blue light characteristic of the effect. In this paper we extend a stochastic progressive photon mapper and simulate the emission of superluminal particles from a source object close to a medium with a high index of refraction. In practice, the source is treated as a new kind of light source, allowing us to efficiently reuse existing photon mapping methods. CCS Concepts • Computing methodologies → Ray tracing; 1. Introduction: In the late 19th and early 20th century, the phenomenon today known as Cherenkov radiation was predicted and observed a number of times [Wat11] but it was first properly investigated in 1934 by Pavel Cherenkov under the supervision of Sergey Vavilov at the Lebedev Institute [Č37]. … in the direction of the particle but will not otherwise cross one another, as depicted in figure 1. However, if the particle travels faster than these spheres the waves will constructively interfere, generating coherent photons at an angle proportional to the velocity of the particle, similar to the propagation of a sonic boom from supersonic aircraft. Formally, this occurs when the criterion c′ = c/n < v < c -
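The emission condition in this extract can be illustrated with a short sketch. It assumes the standard textbook relations rather than anything taken from the paper's implementation: a charged particle with speed v = βc in a medium of refractive index n radiates only when nβ > 1 (that is, c/n < v < c), and the emission angle θ then satisfies cos θ = 1/(nβ). The function name `cherenkov_angle` and its parameters `beta` and `n` are hypothetical names chosen for illustration.

```python
import math
from typing import Optional


def cherenkov_angle(beta: float, n: float) -> Optional[float]:
    """Return the Cherenkov emission angle in radians, or None below threshold.

    beta: particle speed as a fraction of the vacuum speed of light (0 < beta < 1).
    n:    refractive index of the medium (assumed constant, no dispersion).
    """
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must lie strictly between 0 and 1")
    if n <= 0.0:
        raise ValueError("refractive index must be positive")
    # Threshold: the particle must outrun light in the medium, v > c/n.
    if n * beta <= 1.0:
        return None
    # Standard relation for the cone half-angle: cos(theta) = 1 / (n * beta).
    return math.acos(1.0 / (n * beta))


if __name__ == "__main__":
    # Illustrative values only: n = 1.33 is a common approximation for water.
    for beta in (0.5, 0.8, 0.99):
        angle = cherenkov_angle(beta, 1.33)
        if angle is None:
            print(f"beta={beta}: below threshold, no emission")
        else:
            print(f"beta={beta}: angle = {math.degrees(angle):.2f} degrees")
```

Treating n as a single constant is a simplification; in a real medium n varies with wavelength, so the threshold and angle would be evaluated per wavelength.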

Date: To: September 22, 1997 Mr Ian Johnstone

22-SEP-1997 16:36 NOBELSTIFTELSEN 46 8 6603847 SID 01. NOBELSTIFTELSEN The Nobel Foundation TELEFAX. Date: September 22, 1997. To: Mr Ian Johnstone. Company: Executive Office of the Secretary-General. Fax no: 0091-2129633511. From: The Nobel Foundation. Total number of pages: 10. MESSAGE: Dear Mr Johnstone, With reference to your fax and to our telephone conversation, I am enclosing the address list of all Nobel Prize laureates. Yours sincerely, Ingr Bergström. Mailing address: Box 5232, S-102 45 Stockholm, Sweden. Street address: Sturegatan 14. Telephone: (+46 8) 663 09 20. Fax: (+46 8) 660 38 47. Professor Willis E. Lamb Jr, 848 North Norris Avenue, TUCSON, AZ 85719, USA; Prof. Aleksandr M. Prokhorov, Russian Academy of Sciences, Leninskii Prospect 14, MOSCOW V-71, Russia; Dr. Leo Esaki, University of Tsukuba, Tsukuba, Ibaraki 305, Japan; Dr. Tsung Dao Lee, Department of Physics, Columbia University, 538 West 120th Street, NEW YORK, NY 10027, USA; Professor Hans A. Bethe, Cornell University, ITHACA, NY 14853, USA; Professor Antony Hewish, Cavendish Laboratory, University of Cambridge, CAMBRIDGE CB3 0HE, England; Dr. Chen Ning Yang, The Institute for Theoretical Physics, State University of New York, STONY BROOK, NY 11794, USA; Professor Murray Gell-Mann, Department of Physics, California Institute of Technology, PASADENA, CA 91125, USA; Professor Aage Bohr, Niels Bohr Institutet, Blegdamsvej 17, DK-2100 KÖPENHAMN Ø, Danmark; Professor Owen Chamberlain, 6068 Margarido Drive, OAKLAND, CA 94618, USA; Professor Louis Néel, Membre de l'Institut, 15 Rue Marcel-Allégot, F-92190 MEUDON-BELLEVUE, Frankrike; Professor Ben Mottelson, Nordita, Blegdamsvej 17, DK-2100 KÖPENHAMN Ø, Danmark; Professor Donald A.