Hierarchical Organization and Description of Music Collections at the Artist Level

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Hot Rod Circuit Discography Download

Hot rod circuit discography download Complete your Hot Rod Circuit record collection. Discover Hot Rod Circuit's full discography. Shop new and used Vinyl and CDs. Hot Rod Circuit by Hot Rod Circuit, released 01 November 1. Forgive Me 2. Into The Digital Album. Streaming + Download. Includes unlimited streaming via the free Bandcamp app, plus high-quality download in MP3, FLAC and more. Find Hot Rod Circuit discography, albums and singles on AllMusic. Find Hot Rod Circuit bio, music, credits, awards, & streaming links on AllMusic - Originally from Auburn, AL, Hot Rod Circuit. Hot Rod Circuit is an American emo band from Auburn, Alabama established in The next album released by HRC hit record stores in September ; it was entitled "If It's Cool .. History · Early years · Sorry About Tomorrow · Post Break Up. tan marble albums are available from the band on the upcoming tour. Street Find tickets for Hot Rod Circuit showing at The Brighton Bar - Long Branch on. Switch browsers or download Spotify for your desktop. Originally from Auburn, AL, Hot Rod Circuit comprised Andy Jackson (vocal, In their earlier days, Hot Rod Circuit was known as Antidote, under which name they released the album. Listen to songs and albums by Hot Rod Circuit, including "Gin and Juice," "The Pharmacist," "Forgive Me," and many more. Free with Apple Music. Hot Rod Circuit is another perfect example of a band working their asses off to succeed. Having toured relentlessly since releasing their debut album almost five. Hot Rod Circuit discography and songs: Music profile for Hot Rod Circuit, formed Genres: Indie Rock, Emo-Pop, Emo. -

The Dealership of Tomorrow 2.0: America’s Car Dealers Prepare for Change

The Dealership of Tomorrow 2.0: America’s Car Dealers Prepare for Change. February 2020. An independent study by Glenn Mercer, prepared for the National Automobile Dealers Association. Introduction: This report is a sequel to the original Dealership of Tomorrow: 2025 (DOT) report, issued by NADA in January 2017. The original report was commissioned by NADA in order to provide its dealer members (the franchised new-car dealers of America) perspectives on the changing automotive retailing environment. The 2017 report was intended to offer “thought starters” to assist dealers in engaging in strategic planning, looking ahead to roughly 2025. In early 2019 NADA determined it was time to update the report, as the environment was continuing to shift. The present document is that update: It represents the findings of new work conducted between May and December of 2019. As about two and a half years have passed since the original DOT, focused on 2025, was issued, this update looks somewhat further out, to the late 2020s. Disclaimers: As before, we need to make a few things clear at the outset: 1. In every case we have tried to link our forecast to specific implications for dealers. There is much to be said about the impact of things like electric vehicles and connected cars on society, congestion, the economy, etc. But these impacts lie far beyond the scope of this report, which in its focus on dealerships is already significant in size. Readers are encouraged to turn to academic, consulting, governmental and NGO reports for discussion of these broader issues. -

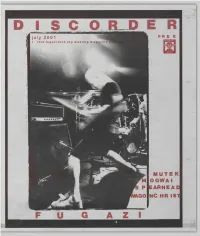

I S C O R D E R Free

I S C O R D E R FREE IUTE K OGWAI ARHEAD NC HR IS1 © "DiSCORDER" 2001 by the Student Radio Society of the University of British Columbia. All rights reserved. Circulation 17,500. Subscriptions, payable in advance, to Canadian residents are $15 for one year, to residents of the USA are $15 US; $24 CDN elsewhere. Single copies are $2 (to cover postage, of course). Please make cheques or money orders payable to DiSCORDER Magazine. DEADLINES: Copy deadline for the August issue is July 14th. Ad space is available until July 21st and can be booked by calling Maren at 604.822.3017 ext. 3. Our rates are available upon request. DiSCORDER is not responsible for loss, damage, or any other injury to unsolicited manuscripts, unsolicited artwork (including but not limited to drawings, photographs and transparencies), or any other unsolicited material. Material can be submitted on disc or in type. As always, English is preferred. Send e-mail to DiSCORDER at [email protected]. From UBC to Langley and Squamish to Bellingham, CiTR can be heard at 101.9 fM as well as through all major cable systems in the Lower Mainland, except Shaw in White Rock. Call the CiTR DJ line at 822.2487, our office at 822.3017 ext. 0, or our news and sports lines at 822.3017 ext. 2. Fax us at 822.9364, e-mail us at: [email protected], visit our web site at http://www.ams.ubc.ca/media/citr or just pick up a goddamn pen and write #233-6138 SUB Blvd., Vancouver, BC. -

Steve Waksman [email protected]

Journal of the International Association for the Study of Popular Music doi:10.5429/2079-3871(2010)v1i1.9en Live Recollections: Uses of the Past in U.S. Concert Life Steve Waksman [email protected] Smith College Abstract As an institution, the concert has long been one of the central mechanisms through which a sense of musical history is constructed and conveyed to a contemporary listening audience. Examining concert programs and critical reviews, this paper will briefly survey U.S. concert life at three distinct moments: in the 1840s, when a conflict arose between virtuoso performance and an emerging classical canon; in the 1910s through 1930s, when early jazz concerts referenced the past to highlight the music’s progress over time; and in the late twentieth century, when rock festivals sought to reclaim a sense of liveness in an increasingly mediatized cultural landscape. keywords: concerts, canons, jazz, rock, virtuosity, history. 1 During the nineteenth century, a conflict arose regarding whether concert repertories should dwell more on the presentation of works from the past, or should concentrate on works of a more contemporary character. The notion that works of the past rather than the present should be the focus of concert life gained hold only gradually over the course of the nineteenth century; as it did, concerts in Europe and the U.S. assumed a more curatorial function, acting almost as a living museum of musical artifacts. While this emphasis on the musical past took hold most sharply in the sphere of “high” or classical music, it has become increasingly common in the popular sphere as well, although whether it fulfills the same function in each realm of musical life remains an open question. -

Computational Models of Music Similarity and Their Applications In

The approved original version of this thesis is available at the main library of the Vienna University of Technology (http://www.ub.tuwien.ac.at/englweb/). DISSERTATION: Computational Models of Music Similarity and their Application in Music Information Retrieval, carried out for the purpose of obtaining the academic degree of Doktor der technischen Wissenschaften (Doctor of Technical Sciences) under the supervision of Univ. Prof. Dipl.-Ing. Dr. techn. Gerhard Widmer, Institut für Computational Perception, Johannes Kepler Universität Linz, submitted to the Technische Universität Wien, Fakultät für Informatik, by Elias Pampalk, matriculation number 9625173, Eglseegasse 10A, 1120 Wien. Vienna, March 2006. Abstract (Kurzfassung): The aim of this dissertation is to develop methods that support users in accessing and discovering music. The main part consists of two chapters. Chapter 2 gives an introduction to computational models of music similarity. It also describes how the combination of different approaches was optimized and presents the largest evaluation of music similarity measures published to date. The best combination performs significantly better than the baseline method in most evaluation categories. Special precautions were taken to avoid overfitting. To cross-check the results of the evaluation based on genre classification, a listening test was conducted. The results of the test confirm that genre-based evaluations are appropriate for efficiently evaluating large parameter spaces. Chapter 2 ends with recommendations on the use of similarity measures. Chapter 3 describes three applications of such similarity measures. The first application demonstrates how music collections can be organized and visualized so that users can control the aspect of similarity they are interested in. -

Chapter One Introduction

CHAPTER ONE INTRODUCTION 1.1 BACKGROUND OF THE STUDY We use language every day to communicate with others. For some of us, we are not aware of the importance of language in our daily life. Language has meaning to us and it is really important for our communication. The explanations below will prove how important language is. According to Oxford Advanced Learner’s Dictionary, the definitions of language are “1) system of communication in speech and writing that is used by people of particular country, 2) a way of expressing ideas and feelings using movements, symbols, and sound” (Hornby, 752). From the definition above we can conclude that language is the most significant thing for us to communicate as we can deliver our feelings and thoughts using language. Chandler supports this idea when he says “language is…the most powerful communication system by far” (Chandler 9). As communication grows, language can be represented through any media. The media that I would like to analyze in this thesis is an album cover. An album cover is included as the language used by the artists to convey their music or their feelings. 1 Maranatha Christian University Generally, an album cover contains the band’s or artist’s name and the title of the music album. Another important thing in an album cover is the picture. The picture can have some important roles. First, it may be used by the artist to show his/her identity. For example, the artist may use his/her portrait. Second, it can show the identity of the music. -

Music Styles, Alphabetically Ordered by MukerBude

Music styles. Source: www.recordsale.de. Alphabetically ordered by MukerBude - 2-Step/BritishGarage - AcidHouse - AcidJazz - AcidRock - AcidTechno - Acappella - AcousticBlues - AcousticChicagoBlues - AdultAlternative - AdultAlternativePop/Rock - AdultContemporary - Africa - AfricanJazz - Afro - Afro-Pop - AlbumRock - Alternative - AlternativeCountry - AlternativeDance - AlternativeFolk - AlternativeMetal - AlternativePop/Rock - AlternativeRap - Ambient - AmbientBreakbeat - AmbientDub - AmbientHouse - AmbientPop - AmbientTechno - Americana - AmericanPopularSong - AmericanPunk - AmericanTradRock - AmericanUnderground - AMPop Orchestral - ArenaRock - Argentina - Asia - AussieRock - Australia - Avant - Avant-Garde - Avntg - Ballads - Baroque - BaroquePop - BassMusic - Beach - BeatPoetry - BigBand - BigBeat - BlackGospel - Blaxploitation - Blue-EyedSoul - Blues - Blues-Rock - BluesRevival - Blues - Spain - Boogie Woogie - Bop - Bolero - Boogaloo - BoogieRock - BossaNova - Brazil - BrazilianJazz - BrazilianPop - BrillBuildingPop - Britain - BritishBlues - BritishDanceBands - BritishFolk - BritishFolk Rock - BritishInvasion - BritishMetal - BritishPsychedelia - BritishPunk - BritishRap - BritishTradRock - Britpop - BrokenBeat - Bubblegum - C-86 - Cabaret - Cajun - Calypso - Canada - CanterburyScene - Caribbean - CaribbeanFolk - CastRecordings - CCM - Celebrity - Celtic - CelticFolk - CelticFusion - CelticPop - CelticRock - ChamberJazz - ChamberMusic - ChamberPop - Chile - Choral - ChicagoBlues - ChicagoSoul - Child - Children'sFolk - Christmas -

“The Dark Side of the Moon”—Pink Floyd (1973) Added to the National Registry: 2012 Essay By: Daniel Levitin (Guest Post)*

“The Dark Side of the Moon” (Pink Floyd, 1973). Added to the National Registry: 2012. Essay by: Daniel Levitin (guest post)* Angst. Greed. Alienation. Questioning one's own sanity. Weird time signatures. Experimental sounds. In 1973, Pink Floyd was a somewhat known progressive rock band, but it was this, their ninth album, that catapulted them into world class rock-star status. “The Dark Side of the Moon” spent an astonishing 14 years on the “Billboard” album charts, and sold an estimated 45 million copies. It is a work of outstanding artistry, skill, and craftsmanship that is popular in its reach and experimental in its grasp. An engineering masterpiece, the album received a Grammy nomination for best engineered non-classical recording, based on beautifully captured instrumental tones and a warm, lush soundscape. Engineer Alan Parsons and Mixing Supervisor Chris Thomas, who had worked extensively with The Beatles (the LP was mastered by engineer Wally Traugott), introduced a level of sonic beauty and clarity to the album that propelled the music off of any sound system to become an all-encompassing, immersive experience. In his 1973 review, Lloyd Grossman wrote in “Rolling Stone” magazine that Pink Floyd’s members comprised “preeminent techno-rockers: four musicians with a command of electronic instruments who wield an arsenal of sound effects with authority and finesse.” They used their command to create a work that introduced several generations of listeners to art-rock and to elements of 1950s cool jazz. Some reharmonization of chords (as on “Breathe”) was inspired by Miles Davis, explained keyboardist Rick Wright. -

Masculinity in Emo Music a Thesis Submitted to the Faculty of Arts and Social Science in Candi

CARLETON UNIVERSITY FLUID BODIES: MASCULINITY IN EMO MUSIC A THESIS SUBMITTED TO THE FACULTY OF ARTS AND SOCIAL SCIENCE IN CANDIDACY FOR THE DEGREE OF MASTER OF ARTS DEPARTMENT OF MUSIC BY RYAN MACK OTTAWA, ONTARIO MAY 2014 ABSTRACT Emo is a genre of music that typically involves male performers, which evolved out of the punk and hardcore movements in Washington DC during the mid-80s. Scholarly literature on emo has explored its cultural and social contexts in relation to the “crisis” of masculinity: the challenging of the legitimacy of patriarchy through “alternative” forms of masculinity. This thesis builds upon this pioneering work but departs from its perpetuation of strict masculine binaries by conflating hegemonic and subordinate/alternative masculinities into a single subject position, which I call synergistic masculinity. In doing so, I use emo to explicate this vis-à-vis an intertextual analysis that explores the dominant themes in 1) lyrics; 2) the sites of vocal production (head, throat, chest) in conjunction with pitch and timbre; 3) the extensional and intensional intervallic relationships between notes and chords, and the use of dynamics in the musical syntax; 4) the use of public and private spaces, as well as the performative masculine body in music video. I posit that masculine emo performers dissolve these hierarchically organized masculinities, which allows for a deeper musical meaning and the extramusical signification of masculinity. Keywords: emo, synergistic masculinity, performativity, music video, masculinities, lyrics, vocal production, musical syntax, dynamics. ABSTRAIT [French-language abstract] Emo is a musical genre that typically involves male musicians and that grew out of the punk and hardcore movement originating in Washington DC during the 1980s. -

Mediated Music Makers. Constructing Author Images in Popular Music

Laura Ahonen Mediated music makers Constructing author images in popular music Academic dissertation to be publicly discussed, by due permission of the Faculty of Arts at the University of Helsinki in auditorium XII, on the 10th of November, 2007 at 10 o’clock. Laura Ahonen Mediated music makers Constructing author images in popular music Finnish Society for Ethnomusicology Publ. 16. © Laura Ahonen Layout: Tiina Kaarela, Federation of Finnish Learned Societies ISBN 978-952-99945-0-2 (paperback) ISBN 978-952-10-4117-4 (PDF) Finnish Society for Ethnomusicology Publ. 16. ISSN 0785-2746. Contents Acknowledgements. 9 INTRODUCTION – UNRAVELLING MUSICAL AUTHORSHIP. 11 Background – On authorship in popular music. 13 Underlying themes and leading ideas – The author and the work. 15 Theoretical framework – Constructing the image. 17 Specifying the image types – Presented, mediated, compiled. 18 Research material – Media texts and online sources . 22 Methodology – Social constructions and discursive readings. 24 Context and focus – Defining the object of study. 26 Research questions, aims and execution – On the work at hand. 28 I STARRING THE AUTHOR – IN THE SPOTLIGHT AND UNDERGROUND . 31 1. The author effect – Tracking down the source. .32 The author as the point of origin. 32 Authoring identities and celebrity signs. 33 Tracing back the Romantic impact . 35 Leading the way – The case of Björk . 37 Media texts and present-day myths. .39 Pieces of stardom. .40 Single authors with distinct features . 42 Between nature and technology . 45 The taskmaster and her crew. -

California State University, Northridge Where's The

CALIFORNIA STATE UNIVERSITY, NORTHRIDGE. WHERE’S THE ROCK? AN EXAMINATION OF ROCK MUSIC’S LONG-TERM SUCCESS THROUGH THE GEOGRAPHY OF ITS ARTISTS’ ORIGINS AND ITS STATUS IN THE MUSIC INDUSTRY. A thesis submitted in partial fulfilment of the requirements for the Degree of Master of Arts in Geography, Geographic Information Science. By Mark T. Ulmer. May 2015. The thesis of Mark Ulmer is approved: Dr. James Craine; Dr. Ronald Davidson; Dr. Steven Graves, Chair. California State University, Northridge. Acknowledgements: I would like to thank my committee members and all of my professors at California State University, Northridge, for helping me to broaden my geographic horizons. Dr. Boroushaki, Dr. Cox, Dr. Craine, Dr. Davidson, Dr. Graves, Dr. Jackiewicz, Dr. Maas, Dr. Sun, and David Deis, thank you! TABLE OF CONTENTS: Signature Page; Acknowledgements; LIST OF FIGURES AND TABLES; ABSTRACT; Chapter 1 – Introduction -

Sooloos Collections: Advanced Guide

Sooloos Collections: Advanced Guide. Contents: Introduction; Organising and Using a Sooloos Collection; Working with Sets; Organising through Naming; Album Detail; Finding Content (Explore, Search, Focus)