The End of the Intel Age

Recommended publications

Intel® StrongARM® SA-1110 High-Performance, Low-Power Processor for Portable Applied Computing Devices

Advance Copy. Intel® StrongARM® SA-1110 High-Performance, Low-Power Processor For Portable Applied Computing Devices

As businesses and individuals rely increasingly on portable applied computing devices to simplify their lives and boost their productivity, these devices have to perform more complex functions quickly and efficiently. To satisfy ever-increasing customer demands to communicate and access information ‘anytime, anywhere’, manufacturers need technologies that deliver high-performance, robust functionality and versatility while meeting the small-size and low-power restrictions of portable, battery-operated products. Intel designed the SA-1110 processor with all of these requirements in mind.

The Intel® SA-1110 is a highly integrated 32-bit StrongARM® processor that incorporates Intel design and process technology along with the power efficiency of the ARM* architecture. The SA-1110 is software compatible with the ARM V4 architecture while utilizing a high-performance micro-architecture that is optimized to take advantage of Intel process technology. The Intel SA-1110 provides the performance, low power, integration and cost benefits of the Intel SA-1100 processor plus a high speed memory bus, flexible memory controller and the ability to handle variable-latency I/O devices.

PRODUCT HIGHLIGHTS
■ Innovative Application Specific Standard Product (ASSP) delivers leadership performance, integration and low power for palm-size devices, PC companions, smart phones and other emerging portable applied computing devices
■ High-speed 100 MHz memory bus and a flexible memory controller that adds support for SDRAM, SMROM, and variable-latency I/O devices; provides design flexibility, scalability and high memory bandwidth
■ Rich development environment enables leading edge products while reducing time-to-market

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference

Intel® Architecture Instruction Set Extensions and Future Features Programming Reference. 319433-037, May 2019.

Intel technologies' features and benefits depend on system configuration and may require enabled hardware, software, or service activation. Learn more at intel.com, or from the OEM or retailer. No computer system can be absolutely secure. Intel does not assume any liability for lost or stolen data or systems or any damages resulting from such losses. You may not use or facilitate the use of this document in connection with any infringement or other legal analysis concerning Intel products described herein. You agree to grant Intel a non-exclusive, royalty-free license to any patent claim thereafter drafted which includes subject matter disclosed herein. No license (express or implied, by estoppel or otherwise) to any intellectual property rights is granted by this document. The products described may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. This document contains information on products, services and/or processes in development. All information provided here is subject to change without notice. Intel does not guarantee the availability of these interfaces in any future product. Contact your Intel representative to obtain the latest Intel product specifications and roadmaps. Copies of documents which have an order number and are referenced in this document, or other Intel literature, may be obtained by calling 1-800-548-4725, or by visiting http://www.intel.com/design/literature.htm. Intel, the Intel logo, Intel Deep Learning Boost, Intel DL Boost, Intel Atom, Intel Core, Intel SpeedStep, MMX, Pentium, VTune, and Xeon are trademarks of Intel Corporation in the U.S.

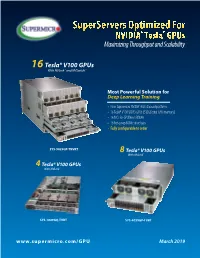

Supermicro GPU Solutions Optimized for NVIDIA NVLink

SuperServers Optimized For NVIDIA® Tesla® GPUs: Maximizing Throughput and Scalability. www.supermicro.com/GPU, March 2019.

16 Tesla® V100 GPUs With NVLink™ and NVSwitch™: Most Powerful Solution for Deep Learning Training
• New Supermicro NVIDIA® HGX-2 based platform
• 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory)
• 16 NICs for GPUDirect RDMA
• 16 hot-swap NVMe drive bays
• Fully configurable to order

Models: SYS-9029GP-TNVRT; SYS-1029GQ-TVRT; SYS-4029GP-TVRT. Configurations: 16, 8, or 4 Tesla® V100 GPUs With NVLink™.

Maximum Acceleration for AI/DL Training Workloads
• PERFORMANCE: Highest parallel peak performance with NVIDIA Tesla V100 GPUs
• THROUGHPUT: Best in class GPU-to-GPU bandwidth with a maximum speed of 300GB/s
• SCALABILITY: Designed for direct interconnections between multiple GPU nodes
• FLEXIBILITY: PCI-E 3.0 x16 for low latency I/O expansion capacity & GPU Direct RDMA support
• DESIGN: Optimized GPU cooling for highest sustained parallel computing performance
• EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control

Model comparison: SYS-1029GQ-TVRT and SYS-4029GP-TVRT
• CPU Support (both models): Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s; supports up to 205W TDP CPU
• GPU Support: 8 or 4 NVIDIA® Tesla® V100 GPUs, depending on model; NVIDIA® NVLink™ GPU Interconnect up to 300GB/s; optimized for GPUDirect RDMA
• Independent CPU and GPU thermal zones

Field Programmable Gate Arrays with Hardwired Networks on Chip

Field Programmable Gate Arrays with Hardwired Networks on Chip

DISSERTATION for the degree of doctor at the Technische Universiteit Delft, by authority of the Rector Magnificus prof. ir. K.C.A.M. Luyben, chairman of the Board for Doctorates, to be defended in public on Tuesday 6 November 2012 at 15:00 by MUHAMMAD AQEEL WAHLAH, Master of Science in Information Technology, Pakistan Institute of Engineering and Applied Sciences (PIEAS), born in Lahore, Pakistan.

This dissertation has been approved by the promotor: Prof. dr. K.G.W. Goossens. Copromotor: Dr. ir. J.S.S.M. Wong.

Composition of the doctoral committee:
Rector Magnificus, chairman
Prof. dr. K.G.W. Goossens, Technische Universiteit Eindhoven, promotor
Dr. ir. J.S.S.M. Wong, Technische Universiteit Delft, copromotor
Prof. dr. S. Pillement, Technical University of Nantes, France
Prof. dr.-Ing. M. Hubner, Ruhr-Universitat-Bochum, Germany
Prof. dr. D. Stroobandt, University of Gent, Belgium
Prof. dr. K.L.M. Bertels, Technische Universiteit Delft
Prof. dr.ir. A.J. van der Veen, Technische Universiteit Delft, reserve member

ISBN: 978-94-6186-066-8

Keywords: Field Programmable Gate Arrays, Hardwired, Networks on Chip

Copyright © 2012 Muhammad Aqeel Wahlah. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without permission of the author. Printed in The Netherlands.

Acknowledgments

Today when I look back, I find it a very interesting journey filled with different emotions, i.e., joy and frustration, hope and despair, and laughter and sadness.

Comparison of Contemporary Real Time Operating Systems

International Journal of Advanced Research in Computer and Communication Engineering (IJARCCE), Vol. 4, Issue 11, November 2015. ISSN (Online) 2278-1021, ISSN (Print) 2319-5940.

Comparison of Contemporary Real Time Operating Systems

Mr. Sagar Jape (1), Mr. Mihir Kulkarni (2), Prof. Dipti Pawade (3)
(1, 2) Student, Bachelors of Engineering, Department of Information Technology, K J Somaiya College of Engineering, Mumbai
(3) Assistant Professor, Department of Information Technology, K J Somaiya College of Engineering, Mumbai

Abstract: With the advancement in the embedded area, the importance of real time operating systems (RTOS) has increased to a great extent. Nowadays, for every embedded application, low latency, efficient memory utilization and effective scheduling techniques are the basic requirements. Thus in this paper we have attempted to compare some of the real time operating systems. The systems (viz. VxWorks, QNX, eCos, RTLinux, Windows CE and FreeRTOS) have been selected according to the highest user base criterion. We list the peculiar features of the systems with respect to parameters like scheduling policies, licensing, memory management techniques, etc. and further compare the selected systems over these parameters. Our effort to formulate the often confused, complex and contradictory pieces of information on contemporary RTOSs into a simple, analytical, organized structure will provide decisive insights to the reader on the selection process of an RTOS as per his requirements.

Keywords: RTOS, VxWorks, QNX, eCos, RTLinux, Windows CE, FreeRTOS

I. INTRODUCTION

An operating system (OS) is a set of software that handles computer hardware. Basically it acts as an interface between user program and computer hardware. … designed known as Real Time Operating System (RTOS). The motive behind RTOS development is to process data …

AIX® Version 6.1 Performance Toolbox Guide Limiting Access to Data Suppliers

AIX Version 6.1 Performance Toolbox Version 3 Guide and Reference

Note: Before using this information and the product it supports, read the information in Appendix G, “Notices,” on page 431.

This edition applies to AIX Version 6.1 and to all subsequent releases and modifications until otherwise indicated in new editions.

(c) Copyright AT&T, 1984, 1985, 1986, 1987, 1988, 1989. All rights reserved. Copyright Sun Microsystems, Inc., 1985, 1986, 1987, 1988. All rights reserved. The Network File System (NFS) was developed by Sun Microsystems, Inc. This software and documentation is based in part on the Fourth Berkeley Software Distribution under license from The Regents of the University of California. We acknowledge the following institutions for their role in its development: the Electrical Engineering and Computer Sciences Department at the Berkeley Campus. The Rand MH Message Handling System was developed by the Rand Corporation and the University of California. Portions of the code and documentation described in this book were derived from code and documentation developed under the auspices of the Regents of the University of California and have been acquired and modified under the provisions that the following copyright notice and permission notice appear: Copyright Regents of the University of California, 1986, 1987, 1988, 1989. All rights reserved. Redistribution and use in source and binary forms are permitted provided that this notice is preserved and that due credit is given to the University of California at Berkeley. The name of the University may not be used to endorse or promote products derived from this software without specific prior written permission.

Connecticut DEEP's List of Compliant Electronics Manufacturers: Notice to Connecticut Retailers

Connecticut DEEP's List of Compliant Electronics Manufacturers

Notice to Connecticut Retailers: The list below identifies electronics manufacturers that are in compliance with the registration and payment obligations under Connecticut's State-wide Electronics Program. Retailers must check this list before selling Covered Electronic Devices (“CEDs”) in Connecticut. If a brand of CED is not listed below, including for retail over the internet, the retailer must not sell the CED to Connecticut consumers pursuant to section 22a-634 of the Connecticut General Statutes.

| Manufacturer | Brand | CED Type |
| --- | --- | --- |
| Acer America Corp. | Acer | Computer, Monitor, Television, Printer |
| | eMachines | Computer, Monitor |
| | Gateway | Computer, Monitor, Television |
| | ALR | Computer, Monitor |
| | Gateway 2000 | Computer, Monitor |
| AG Neovo Technology Corporation | AG Neovo | Monitor |
| Amazon Fulfillment Service, Inc. | Kindle, Amazon Kindle, Kindle Fire, Fire | Computers |
| American Future Technology Corporation dba iBuypower | iBuypower | Computer |
| Apple, Inc. | Apple | Computer, Monitor, Printer |
| | NeXT | Computer, Monitor |
| | iMac | Computer |
| | Mac Pro | Computer |
| | Mac Mini | Computer |
| | Thunder Bolt Display | Monitor |
| Archos, Inc. | Archos | Computer |
| ASUS Computer International | ASUS | Computer, Monitor |
| | Eee | Computer |
| | Nexus ASUS | Computer |
| | EEE PC | Computer |
| Atico International USA, Inc. | Digital Prism | Television |
| ATYME CORPORATION, INC. | ATYME | Television |
| Bang & Olufsen Operations A/S | Bang & Olufsen | Television |
| BenQ America Corp. | BenQ | Monitor |
| Best Buy | Insignia | Television |
| | Dynex | Television |
| | UB | Computer |
| | Toshiba | Television |
| | VPP Matrix | Computer, Monitor |
| Blackberry Limited | Blackberry PlayBook | Computer |
| Bose Corp. | Bose Videowave | Television |
| Brother International Corp. | Brother | Monitor, Printer |
| Canon USA, Inc. | Canon | Computer, Monitor, Printer |
| | Oce | Printer |
| | Imagistics | Printer |
| Cellco Partnership | Verizon Ellipsis | Computer |
| Changhong Trading Corp. USA (Former Guangdong Changhong Electronics Co. LTD) | Changhong | Television |
| Craig Electronics | Craig | Computer, Television |
| Creative Labs, Inc. | | |

The Intro to GPGPU CPU Vs

The Intro to GPGPU. Dr. Chokchai (Box) Leangsuksun, PhD, Louisiana Tech University, Ruston, LA. 12/12/11.

CPU vs. GPU
• CPU
  – Fast caches
  – Branching adaptability
  – High performance
• GPU
  – Multiple ALUs
  – Fast onboard memory
  – High throughput on parallel tasks
• Executes program on each fragment/vertex
• CPUs are great for task parallelism
• GPUs are great for data parallelism
(Supercomputing 2008 Education Program)

CPU vs. GPU - Hardware
• More transistors devoted to data processing
(CUDA programming guide 3.1)

CPU vs. GPU – Computation Power (CUDA programming guide 3.1)

CPU vs. GPU – Memory Bandwidth (CUDA programming guide 3.1)

What is GPGPU?
• General Purpose computation using GPU in applications other than 3D graphics
  – GPU accelerates critical path of application
• Data parallel algorithms leverage GPU attributes
  – Large data arrays, streaming throughput
  – Fine-grain SIMD parallelism
  – Low-latency floating point (FP) computation
(© David Kirk/NVIDIA and Wen-mei W. Hwu, 2007, ECE 498AL, University of Illinois, Urbana-Champaign)

Why is GPGPU?
• Large number of cores
  – 100-1000 cores in a single card
• Low cost
  – less than $100-$1500
• Green computing
  – Low power consumption: 135 watts/card
  – 135 W vs 30000 W (300 watts * 100)
• 1 card can perform > 100 desktops
  – $750 vs $50000 ($500 * 100)

Two major players

Parallel Computing on a GPU
• NVIDIA GPU Computing Architecture
  – Via a HW device interface
  – In laptops, desktops, workstations, servers
• Tesla T10 1070 from 1-4 TFLOPS
• AMD/ATI 5970 x2 3200 cores
• NVIDIA Tegra is an all-in-one (system-on-a-chip) processor architecture derived from the ARM family
• GPU parallelism is better than Moore’s law, more doubling every year
• GPGPU is a GPU that allows user to process both graphics and non-graphics applications.

In re Graphics Processing Units Antitrust Litigation: Third Consolidated and Amended Class Action Complaint

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34

BOIES, SCHILLER & FLEXNER LLP
WILLIAM A. ISAACSON (pro hac vice)
5301 Wisconsin Ave. NW, Suite 800, Washington, D.C. 20015
Telephone: (202) 237-2727
Facsimile: (202) 237-6131
Email: [email protected]

BOIES, SCHILLER & FLEXNER LLP
JOHN F. COVE, JR. (CA Bar No. 212213)
DAVID W. SHAPIRO (CA Bar No. 219265)
KEVIN J. BARRY (CA Bar No. 229748)
1999 Harrison St., Suite 900, Oakland, CA 94612
Telephone: (510) 874-1000
Facsimile: (510) 874-1460
Email: [email protected] [email protected] [email protected]

BOIES, SCHILLER & FLEXNER LLP
PHILIP J. IOVIENO (pro hac vice)
ANNE M. NARDACCI (pro hac vice)
10 North Pearl Street, 4th Floor, Albany, NY 12207
Telephone: (518) 434-0600
Facsimile: (518) 434-0665
Email: [email protected] [email protected]

Attorneys for Plaintiff Jordan Walker
Interim Class Counsel for Direct Purchaser Plaintiffs

UNITED STATES DISTRICT COURT
NORTHERN DISTRICT OF CALIFORNIA

IN RE GRAPHICS PROCESSING UNITS ANTITRUST LITIGATION
Case No.: M:07-CV-01826-WHA
MDL No. 1826
This Document Relates to: ALL DIRECT PURCHASER ACTIONS

THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT FOR VIOLATION OF SECTION 1 OF THE SHERMAN ACT, 15 U.S.C. § 1

JURY TRIAL DEMANDED

THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT BY DIRECT PURCHASERS M:07-CV-01826-WHA

Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the United States, bring this action for damages and injunctive relief under the federal antitrust laws against Defendants named herein, demanding trial by jury, and complaining and alleging as follows:

NATURE OF THE CASE

1.

Over View of Microprocessor 8085 and Its Application

IOSR Journal of Electronics and Communication Engineering (IOSR-JECE), e-ISSN: 2278-2834, p-ISSN: 2278-8735. Volume 10, Issue 6, Ver. II (Nov - Dec 2015), PP 09-14. www.iosrjournals.org

Over view of Microprocessor 8085 and its application

Kimasha Borah, Assistant Professor, Centre for Computer Studies, Dibrugarh University, Dibrugarh, Assam, India

Abstract: A microprocessor is a program-controlled semiconductor device (IC) which fetches, decodes and executes instructions. It is versatile in application and is flexible to some extent. Nowadays, modern microprocessors can perform extremely sophisticated operations in areas such as meteorology, aviation, nuclear physics and engineering, and take up much less space as well as delivering superior performance. Here is a brief review of the microprocessor and its various applications.

Key words: Semiconductor, Integrated Circuits, CPU, NMOS, PMOS, VLSI

I. Introduction: Microprocessor is derived from two words, micro and processor. Micro means small or tiny, and processor means something which processes. It is a single Very Large Scale Integration (VLSI) chip that incorporates all functions of a central processing unit (CPU) fabricated on a single Integrated Circuit (IC) (1). Some other units like caches, pipelining, floating point processing arithmetic and superscaling units are additionally present in the microprocessor, and that results in increased speed of operation. 8085, 8086 and 8088 are some examples of microprocessors (2). The technology used for the microprocessor is N-type metal oxide semiconductor (NMOS) (3). The basic operations of a microprocessor are fetching instructions from memory, decoding and executing them, i.e., it takes data or an operand from an input device, performs arithmetic and logical operations, and stores results in the required location or sends the result to output devices (1). Word size identifies the microprocessor. E.g.

System-on-a-Chip (SoC) & ARM Architecture

System-on-a-Chip (SoC) & ARM Architecture. EE2222 Computer Interfacing and Microprocessors, 2020. Partially based on System-on-Chip Design by Hao Zheng.

Overview
• A system-on-a-chip (SoC):
  • a computing system on a single silicon substrate that integrates both hardware and software.
  • Hardware packages all necessary electronics for a particular application,
  • which is implemented by SW running on HW.
• Aim for low power and low cost.
• Also more reliable than multi-component systems.

Driven by semiconductor advances

Basic SoC Model

SoC vs Processors

| | System on a chip | Processors on a chip |
| --- | --- | --- |
| processor | multiple, simple, heterogeneous | few, complex, homogeneous |
| cache | one level, small | 2-3 levels, extensive |
| memory | embedded, on chip | very large, off chip |
| functionality | special purpose | general purpose |
| interconnect | wide, high bandwidth | often through cache |
| power, cost | both low | both high |
| operation | largely stand-alone | need other chips |

Embedded Systems
• 98% of processors sold annually are used in embedded applications.

Embedded Systems: Design Challenges
• Power/energy efficient:
  • mobile & battery powered
• Highly reliable:
  • Extreme environment (e.g. temperature)
• Real-time operations:
  • predictable performance
• Highly complex
  • A modern automobile with 55 electronic control units
• Tightly coupled Software & Hardware
• Rapid development at low price

EECS222A: SoC Description and Modeling, Lecture 1: Design Complexity Challenge

Appendix D: An Alternative to RISC: The Intel 80x86

D.1 Introduction
D.2 80x86 Registers and Data Addressing Modes
D.3 80x86 Integer Operations
D.4 80x86 Floating-Point Operations
D.5 80x86 Instruction Encoding
D.6 Putting It All Together: Measurements of Instruction Set Usage
D.7 Concluding Remarks
D.8 Historical Perspective and References

D An Alternative to RISC: The Intel 80x86

The x86 isn’t all that complex; it just doesn’t make a lot of sense.
Mike Johnson, Leader of 80x86 Design at AMD, Microprocessor Report (1994)

© 2003 Elsevier Science (USA). All rights reserved.

D.1 Introduction

MIPS was the vision of a single architect. The pieces of this architecture fit nicely together and the whole architecture can be described succinctly. Such is not the case of the 80x86: It is the product of several independent groups who evolved the architecture over 20 years, adding new features to the original instruction set as you might add clothing to a packed bag. Here are important 80x86 milestones:

■ 1978: The Intel 8086 architecture was announced as an assembly-language-compatible extension of the then-successful Intel 8080, an 8-bit microprocessor. The 8086 is a 16-bit architecture, with all internal registers 16 bits wide. Whereas the 8080 was a straightforward accumulator machine, the 8086 extended the architecture with additional registers. Because nearly every register has a dedicated use, the 8086 falls somewhere between an accumulator machine and a general-purpose register machine, and can fairly be called an extended accumulator machine.