Machine Learning-Based Orchestration of Containers: a Taxonomy and Future Directions

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications

-

Mareşalul Alexandru Averescu, Un Om Pentru Istorie

Magazin al Fundaţiei “Mareşal Alexandru Averescu” Străjer în calea Cadranfurtunilor militar buzoian Anul III, nr. 5, 9 martie 2009 Mareşalul 150 de ani de la naştere Alexandru Averescu, un om pentru istorie http://www.jointophq.ro ------------------- Străjer în calea furtunilor Magazin trimestrial Numai generalii care fac jertfe folositoare pătrund în Adresa: Buzău, str. Independenţei nr. 24 sufletul maselor. Tel. 0238.717.113 www.jointophq.ro Director : gl. bg. Dan Ghica-Radu COLECTIVUL DE REDACŢIE Redactor-şef: Redactor-şef adjunct: mr. Romeo Feraru Secretar de redacţie: col. (r) Constantin Dinu Redactori: - col (r) Mihai Goia - col. (r) Mihail Pîrlog - preot militar Alexandru Tudose - Emil Niculescu - Viorel Frîncu Departament economie: lt. col. (r) Gherghina Oprişan Departament difuzare: plt. adj. Dan Tinca Tipar: ISSN: 1843-4045 Responsabilitatea pentru conţinutul materialelor publicate aparţine exclusiv autorilor, conform art. 205- 206 Cod penal. Reproducerea textelor şi fotografiilor este permisă numai în condiţiile prevăzute de lege. Manuscrisele nu se înapoiază. Revista pune la dispoziţia celor interesaţi spaţii de publicitate. Numărul curent al revistei se găseşte pe site-ul fundaţiei, în format pdf. Revista se difuzează- 2 la - toate structurile militare din judeţul Buzău, la asociaţiile şi fundaţiile militare locale, precum şi la instituţiile civile interesate de conţinutul său. ------------------- Străjer în calea furtunilor Pro domo Numai generalii Evocarea unor personalităţi ale istoriei naţionale, rescrierea biografiei lor, radiografierea epocii şi, mai ales, a faptelor săvârşite de ei, precum care fac jertfe şi consemnarea acestor întâmplări în documente, jurnale, memorii şi iconografie, contribuie, esenţial, la o mai atentă evaluare a ceea ce am folositoare reprezentat şi, încă, mai reprezentăm în această parte a Europei. -

Names in Multi-Lingual, -Cultural and -Ethic Contact

Oliviu Felecan, Romania 399 Romanian-Ukrainian Connections in the Anthroponymy of the Northwestern Part of Romania Oliviu Felecan Romania Abstract The first contacts between Romance speakers and the Slavic people took place between the 7th and the 11th centuries both to the North and to the South of the Danube. These contacts continued through the centuries till now. This paper approaches the Romanian – Ukrainian connection from the perspective of the contemporary names given in the Northwestern part of Romania. The linguistic contact is very significant in regions like Maramureş and Bukovina. We have chosen to study the Maramureş area, as its ethnic composition is a very appropriate starting point for our research. The unity or the coherence in the field of anthroponymy in any of the pilot localities may be the result of the multiculturalism that is typical for the Central European area, a phenomenon that is fairly reflected at the linguistic and onomastic level. Several languages are used simultaneously, and people sometimes mix words so that speakers of different ethnic origins can send a message and make themselves understood in a better way. At the same time, there are common first names (Adrian, Ana, Daniel, Florin, Gheorghe, Maria, Mihai, Ştefan) and others borrowed from English (Brian Ronald, Johny, Nicolas, Richard, Ray), Romance languages (Alessandro, Daniele, Anne, Marie, Carlos, Miguel, Joao), German (Adolf, Michaela), and other languages. *** The first contacts between the Romance natives and the Slavic people took place between the 7th and the 11th centuries both to the North and to the South of the Danube. As a result, some words from all the fields of onomasiology were borrowed, and the phonological system was changed, once the consonants h, j and z entered the language. -

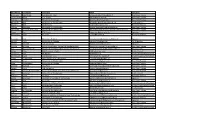

Diplomatic List

United States Department of State Diplomatic List Spring 2020 Preface This publication contains the names of the members of the diplomatic staffs of all missions and their spouses. Members of the diplomatic staff are those mission members who have diplomatic rank. These persons, with the exception of those identified by asterisks, enjoy full immunity under provisions of the Vienna Convention on Diplomatic Relations. Pertinent provisions of the Convention include the following: Article 29 The person of a diplomatic agent shall be inviolable. He shall not be liable to any form of arrest or detention. The receiving State shall treat him with due respect and shall take all appropriate steps to prevent any attack on his person, freedom, or dignity. Article 31 A diplomatic agent shall enjoy immunity from the criminal jurisdiction of the receiving State. He shall also enjoy immunity from its civil and administrative jurisdiction, except in the case of: (a) a real action relating to private immovable property situated in the territory of the receiving State, unless he holds it on behalf of the sending State for the purposes of the mission; (b) an action relating to succession in which the diplomatic agent is involved as an executor, administrator, heir or legatee as a private person and not on behalf of the sending State; (c) an action relating to any professional or commercial activity exercised by the diplomatic agent in the receiving State outside of his official functions. -- A diplomatic agent’s family members are entitled to the same immunities unless they are United States Nationals. ASTERISKS (*) IDENTIFY UNITED STATES NATIONALS. -

Family Name Given Name National Paralympic Committee Amchi Mohamed Nadjib ALG Anseur Zakarya ALG Bachir Mourad ALG Bahlaz Lahoua

Family name Given name National Paralympic Committee Amchi Mohamed Nadjib ALG Anseur Zakarya ALG Bachir Mourad ALG Bahlaz Lahouari ALG Benallou Bakhta ALG Benoumessad Louadjeda ALG Betina Karim ALG Boudjadar Asmahane ALG Boukoufa Achoura ALG Bousaid Rabah ALG Bouzit Brahim Khalil ALG Family name Given name National Paralympic Committee Bouzourine Sid Ali ALG Djemai Madjid ALG Gasmi Mounia ALG Hamoumou Mohamed Fouad ALG Hamri Lynda ALG Harkat Hamza ALG Kais Hamza ALG Medjmedj Nadia ALG Mehdi Fatiha ALG Meslem Mohamed Fethi ALG Ougour Kheir-Eddine ALG Sahli Nacir ALG Saifi Nassima ALG Almada Mariela ARG Almada Soledad ARG Arroyo Nahuel ARG Barreto Hernan ARG Castro Victoria Lujan ARG Gonzalez Diego Martin ARG Ibanez Aldana Isabel ARG Impellizzeri Brian Lionel ARG Martinez Yanina Andrea ARG Romero Florencia Belen ARG Tassi Sanchez Karen Melanie ARG Urra Hernan Emanuel ARG Anderson Corey AUS Ault-Connell Eliza AUS Ballard Angela AUS Blake Torita AUS Carter Samuel AUS Chatman Aaron AUS Clarke Rhiannon AUS Cleaver Erin AUS Clifford Jaryd AUS Colley Tamsin AUS Colman Richard AUS Coop Brianna AUS Family name Given name National Paralympic Committee Crees Dayna AUS Crombie Cameron AUS de Rozario Madison AUS Domrow Kyra AUS Donovan Kobie AUS Doyle Taylor AUS Edmiston Sarah AUS Felton Matthew AUS Foote Lachlan AUS Gesini Ari AUS Harding Sam AUS Henly Guy AUS Hodgetts Todd AUS Holt Isis AUS Hum Nicholas AUS Jackson Martin AUS Jarrett Craig AUS Joseph Kailyn AUS Keefer Claire AUS Kirk Daniel AUS Lambird Robyn AUS McCracken Rheed AUS McInerney Jamie -

First Name Last Name Company Email Category Emanuele

First Name Last Name Company Email Category Emanuele Albertinale Quantronics - CEA [email protected] Post Doc/Trainee Jean-Claude Besse ETH Zurich [email protected] Post Doc/Trainee Audrey Bienfait University of Chicago [email protected] Post Doc/Trainee Alexandre Blais Université de Sherbrooke [email protected] Co-Organizer Machiel Blok UC Berkeley [email protected] Post Doc/Trainee Jérôme Bourassa Université de Sherbrooke [email protected] Post Doc/Trainee Alexandre Choquette-Poitevin Université de Sherbrooke [email protected] Post Doc/Trainee Yiwen Chu ETH Zurich [email protected] Speaker Alessandro Ciani TU Delft [email protected] Post Doc/Trainee Cristiano Ciuti Univ. Paris Diderot [email protected] Speaker Aashish Clerk University of Chicago [email protected] Co-Organizer Michel Devoret Yale University [email protected] Speaker Agustin Di Paolo Institut quantique - Université de Sherbrooke [email protected] Post Doc/Trainee Eva Dupont-Ferrier Université de Sherbrooke - Institut Quantique [email protected] Invited Guest Stefan Filipp IBM Research - Zurich [email protected] Invited Guest Johannes Fink IST Austria [email protected] Invited Guest with Student Emmanuel Flurin Quantronics - CEA [email protected] Invited Guest with Student Simon Groeblacher Delft University of Technology [email protected] Speaker Jonathan Gross Université de Sherbrooke [email protected] Post Doc/Trainee -

Iacp New Members

44 Canal Center Plaza, Suite 200 | Alexandria, VA 22314, USA | 703.836.6767 or 1.800.THEIACP | www.theIACP.org IACP NEW MEMBERS New member applications are published pursuant to the provisions of the IACP Constitution. If any active member in good standing objects to an applicant, written notice of the objection must be submitted to the Executive Director within 60 days of publication. The full membership listing can be found in the online member directory under the Participate tab of the IACP website. Associate members are indicated with an asterisk (*). All other listings are active members. Published December 1, 2020. Argentina Buenos Aires *Benitez, Marisa Edith, Agente, Traffic Agents Corps *Cordone, Nancy, Agente, Traffic Agents Corps *Gonzalez, Maria Eugenia, Jefa de Base Cochabamba, Traffic Agents Corps Australia Australian Capital Territory Canberra *Jones, Warwick, Chief Learning Officer, Australian Federal Police Queensland Brisbane *Burke, Louise, Officer, Queensland Police Service Brazil Ponte Pequena Sao Paulo Machado Junior, Mario, 1º Tenente CPRv, Military Police of Sao Paulo Recife *Andrade, Alessandro, Coordenador de Orientadores de Trânsito, Municipal Traffic Agency *Cunha, Nilton, Gerente Geral de Fiscalizacao e Operacoes, Municipal Traffic Agency *Sales, Gustavo, Gerente Geral de Engenharia de Trafego, Municipal Traffic Agency Salvador *Cardoso e Silva, Jose Hage, Coordenador, TRANSALVADOR *dos Reis, Marcos Nascimento, Supervisor, TRANSALVADOR *dos Santos, Antonio Neri, Gerente de Trânsito, TRANSALVADOR Sao Paulo -

Alexandru Vaida-Voevod

Cluj 28 Februarie 19 i 3 Lei exemplarul mssmasasaESB&ä « ABONAMENT ANUAL REDACŢIA ŞI ADMINISTRAŢIA: Pe un an Lei 200. ANUNŢURI DUPĂ TARIF SE PklMESC Pe un jumătate an Lei 100. LA ADMINISTRAŢIA ZIARULUI Cluj, Strada Regina Maria No. 36. Autorităţi şi inştituţiuni Lei 500. Telefon 7-60. In streinătate dublu. CLUJ, STRADA REGINA MARIA No. 36 J mului românesc a fost un întruchipă- Alexandru 27 F©feFuart@s 1872 I tor al instinctului naţional, el a fost ; în ce priveşte politica socială a popo- Vaida-Voevod ! rului românesc din Ardeal şi Banat un de: IULIU MANIU adevărat reformator. Studiile sale, Nume trecut în ! lectura sa şi simţul său social l-au iâ- istorie. Multe se vor • eut să fie primul propagator al unei scrie despre el. In biopolitici naţionale la Români. In perspectiva istorică oficina planurilor politice, unde se a oameuilor şi Jap- făureau programe, manifeste şi de- telor se va cerne I claraţii, Aurel Vlad reprezenta ideîa prin sita vremii ca democraţiei integrale şi lupta înver lomnia şi răutatea şunată contra latifundiilor boiereşti, şi va rămânea la su- pe care a trecut-o şi în ţinuturile din- prajaţă adevărul. I colo de hotarele etnice ale poporului Se va constata de acord cu noi con I românesc din Ungaria, iar Alexandru timporanii nobleţă sufletului său, ca ; Vaida era propagatorul ideilor so racterul său antic, îmbrăcat uneori ciale progresiste cu votul pentru fe într'un umor sănătos, fascinator în mei şi cu îngrijirea sanitară şi socia conversaţie, care adesea dă lovituri lă a poporului cu toate consecinţele dureroase, destinate adversarilor săi. -

University Microfilms 300 North Zeeb Road Ann Arbor, Michigan 46106 a Xarox Education Company WALTERS, Everett Garrison 1944- ION C

INFORMATION TO USERS This dissertation was produced from a microfilm copy of the original document. While the most advanced technological means to photograph and reproduce this document have been used, the quality is heavily dependent upon the quality of the original submitted. The following explanation of techniques is provided to help you understand markings or patterns which may appear on this reproduction. 1. The sign or "target" for pages apparently lacking from the document photographed is "Missing Page(s)". If it was possible to obtain the missing page(s) or section, they are spliced into the film along with adjacent pages. This may have necessitated cutting thru an image and duplicating adjacent pages to insure you complete continuity. 2. When an image on the film is obliterated with a large round black mark, it is an indication that the photographer suspected that the copy may have moved during exposure and thus cause a blurred image. You will find a good image of the page in the adjacent frame. 3. When a map, drawing or chart, etc., was part of the material being photographed the photographer followed a definite method in "sectioning" the material. It is customary to begin photoing at the upper left hand corner of a large sheet and to continue photoing from left to right in equal sections with a small overlap. If necessary, sectioning is continued again — beginning below the first row and continuing on until complete. 4. The majority of users indicate that the textual content is of greatest value, however, a somewhat higher quality reproduction could be made from "photographs" if essential to the understanding of the dissertation. -

Alexandru Averescu - 150 De Ani De La Naştere

studii/documente ALEXANDRU AVERESCU - 150 DE ANI DE LA NAŞTERE Dr. PETRE OTU PREćEDINTELE COMISIEI ROMÂNE DE ISTORIE MILITARÕ S-au împlinit în aceastĆ primĆvarĆ un secol üi jumĆtate de la naüterea uneia dintre cele mai importante personalitĆĦi ale istoriei naĦionale- mareüalul Alexandru Averescu. Aniversarea nu a reprezentat un eveniment mediatic de prim plan, societatea româneascĆ în post-tranziĦie üi în post-modernism fi ind ocupatĆ, am spune fi resc, de cu totul alte probleme. Cu toate acestea, ea nu a trecut neobservatĆ în mediile ütiinĦifi ce üi culturale. Biblioteca NaĦionalĆ a României a organizat, în ziua de 5 martie, la sediul sĆu de la Serviciul ColecĦii Speciale (AüezĆmintele „Ion I.C. BrĆtianu”), un simpozion în cadrul cĆruia a fost evocatĆ personalitatea lui Alexandru Averescu. Cu acest prilej s-a vernisat üi o expoziĦie cu interesante fotografi i üi documente referitoare la viaĦa üi activitatea mareüalului. De asemenea, FundaĦia „ Mareüal Alexandru Averescu”, înfi inĦatĆ de cadre active üi de rezervĆ din municipiul BuzĆu üi care funcĦioneazĆ pe lângĆ Comandamentul 2 OperaĦional, „Mareüal Alexandru Averescu” din aceiaüi localitate, a organizat, pe 9 martie, o conferinĦĆ consacratĆ acestui eveniment. Reuniunea a fost onoratĆ de academicianul ûerban Papacostea, care l-a cunoscut pe Alexandru Averescu, tatĆl domniei sale, Petre Papacostea, fi ind secretarul particular al mareüalului üi unul dintre cei mai fi deli colaboratori ai acestuia. ComunicĆrile prezentate au fost publicate în numĆrul special al revistei fundaĦiei mai sus amintite - „StrĆjer în calea furtunilor”. Dr. Petre Otu IatĆ, cĆüi Serviciul Istoric al Armatei vine cu o iniĦiativĆ lĆudabilĆ, dedicând un numĆr al revistei „Document” personalitĆĦii mareüalului üi epocii în care el üi-a desfĆüurat activitatea. -

Vestnik Za Tuje Jezike 2015 FINAL.Indd 71 18.12.2015 12:35:59 72 VESTNIK ZA TUJE JEZIKE

Ioana Jieanu: ONOMASTIC INTERFERENCES IN THE LANGUAGE OF MIGRATION 71 Ioana Jieanu UDK 811.135.1’373.23:81’282.8(450+460) Faculty od Arts DOI: 10.4312/vestnik.7.71-85 University of Ljubljana University of Craiova University of Ploiești (UPG) [email protected] ONOMASTIC INTERFERENCES IN THE LANGUAGE OF MIGRATION 1 INTRODUCTION Migration is currently one of the most discussed social phenomena, since it has a very high impact on countries from which emigration occurs as well as on immigrant receiving coun- tries. It has been estimated that there are between 125 and 130 million immigrants around the world, taking into account only first generation immigrants and not their descendants, born in the destination countries (Aguinaga Roustan 1998: 34). This figure does not include migration waves from countries involved in armed conflicts. According to OECD1 (World Migration in Figures. A joint contribution by UN-DESA and the OECD to the United Na- tions High-Level Dialogue on Migration and Development 2013), in 20132, the number of international migrants was 232 million, having increased by 77 million since 1990. 1990 2000 2010 2013 154.2 174.5 220.7 231.5 Table 1. Evolution of international migration according to data published by OECD. Since December 19893, many Romanian people have chosen to emigrate, in search of a better life. If at first Romanian migration was directed, particularly, towards Germany and France, after the year 2000 most Romanian people chose to immigrate to Spain and Italy. In recent years Romanian emigrants have chosen Great Britain and the Nordic countries as their most popular host countries. -

In Alphabetical Order

Table 4: Full list of first forenames given, Scotland, 2015 (final) in alphabetical order NB: * indicates that, sadly, a baby who was given that first forename has since died. Number of Number of Boys' names NB Girls' names NB babies babies A-Jay 2 Aadhira 1 Aadam 4 * Aadhya 1 Aadhish 1 Aadya 2 Aadi 1 Aaeryn-Leigh 1 Aadil 2 Aahana 1 Aadishiv 1 Aailah 1 Aaditiya 1 Aaima 1 Aadm 1 Aaira 1 Aadvik 1 Aairah 2 Aahil 8 Aaisha 1 Aaiden 1 Aaleeyah 1 Aalyan 1 Aalia 1 Aamar 1 Aalin 1 Aamir 1 Aaliya-Bell 1 Aaraiz 1 Aaliyah 27 Aaran 3 Aaliyah-Lily 1 Aarav 6 Aaliyah-Rose 1 Aaravjit 1 Aaminah 4 Aarian 1 Aaniyah 1 Aaron 242 Aanya 2 Aaron-James 2 Aara 1 Aaronlee 1 Aarathya 1 Aarran 1 Aari 1 Aarron 3 Aaria 1 Aarush 2 Aariah 1 Aaryan 1 Aariya 1 Aaryn 2 Aarlea 1 Aarzish 1 Aarmeen 1 Aayam 1 Aarti 1 Aayan 1 Aarya 4 Aayden 1 Aaryanna 1 Abdalla 1 Aashirya 1 Abdallah 1 Aashna 1 Abdel-Rahman 1 Aashriya 1 Abdou 2 Aashvi 1 Abdualmalik 1 Aasiyah 1 Abdul 6 Aayah 1 * Abdul'malik 1 Aayat 1 Abdul-Hakeem 1 Abal 1 Abdul-Kareem 1 Abber 1 Abdul-Raheem 1 Abbey 14 Abdul-Raqib 1 Abbey-Rose 1 Abdulaziz 2 Abbeygail 1 Abdulelah 1 Abbi 7 Abdulhadi 1 Abbie 76 Abdulhamid 1 Abbie-Leigh 1 Abdullah 18 Abbie-May 1 Abdulrahman 1 Abbie-Rose 2 Abdulwahab 1 Abbiegail 1 Abdurrahman 2 Abbiegayle 1 Abdussalam 1 Abbigail 4 Abel 11 * Abby 17 Abhay 1 Abby-Rose 1 © Crown Copyright 2016 Table 4 (continued) NB: * indicates that, sadly, a baby who was given that first forename has since died Number of Number of Boys' names NB Girls' names NB babies babies Abheer 1 Abbygail 1 Abraham 5 Abeeha 1 Abu 2 -

Current List of Covid-19 Coordinators (By Country and Mission) 23 September 2021

CURRENT LIST OF COVID-19 COORDINATORS (BY COUNTRY AND MISSION) 23 SEPTEMBER 2021 COUNTRY Duty Station COVID-19 Coordinator email Denise Wilman 12129632668 ext: 6377 Alternate: Mr. Alhagi Marong [email protected] Afghanistan Kabul 93728302768 [email protected] Ms Fioralba Shkodra Albania Tirana 355692090254 [email protected] Ms. Lylia Oubraham 213 660 205 191 Alternate: Mr. Adel Zeddam [email protected] Algeria Algiers 213 661 413 527 [email protected] Dr. Fernanda Alves 244 923879311 Alternate: Javier Aramburu [email protected] Angola Luanda 244 931624569 [email protected] Jessica Braver 5491153162070 Alternate: Tamara Mancero 5491144382758 [email protected] Paula Mortola [email protected] Argentina Buenos Aires 5491150129990 [email protected] Ms. Denise Sumpf Armenia Yerevan 37444321140 [email protected] Mr. Farid Babayev Azerbaijan Baku 994503182933 [email protected] Mr. Sherlin Lance Brown Bahamas and 242 422-4441 Turks and Caicos Dr. Gabriel Vivas Francesconi [email protected] Islands Nassau 242 422-0243 [email protected] Dr. Ibrahim El-Ziq Bahrain Manama Mobile: +966 50006149 [email protected] Sudipto Mukerjee Cell: +8801730056188 and +8801700703910 Alternate: Alpha Bah [email protected] Bangladesh Dhaka 8801730317224 [email protected] Dr. Karen Polson 1-246-836-8988 Barbados and Alternate: Kenroy Roach [email protected] the OECS Bridgetown 1 246 832 6103 [email protected] Mr. Jahor Novikau ; +37529706378 [email protected] Belarus Minsk Mr. Egor Basalyga [email protected] Dr. Noreen Jack [email protected] Belize Belmopan 5016100188 [email protected] 1 Alternate: Edwin Bolastig 5016061033 Dr Daniel Yota Benin Cotonou +229 90 00 21 81 [email protected] Dr.