Emotion Recognition in Immersive Virtual Reality: from Statistics to Affective Computing

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Vuzix Corporation Annual Report 2021

Vuzix Corporation Annual Report 2021 Form 10-K (NASDAQ:VUZI) Published: March 15th, 2021 PDF generated by stocklight.com UNITED STATES SECURITIES AND EXCHANGE COMMISSION Washington, D.C. 20549 FORM 10-K ☑ ANNUAL REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 For the fiscal year ended December 31, 2020 ☐ TRANSITION REPORT PURSUANT TO SECTION 13 OR 15(d) OF THE SECURITIES EXCHANGE ACT OF 1934 Commission file number: 001-35955 Vuzix Corporation (Exact name of registrant as specified in its charter) Delaware (State of incorporation) 04-3392453 (I.R.S. employer identification no.) 25 Hendrix Road, West Henrietta, New York 14586 (Address of principal executive office) (Zip code) (585) 359-5900 (Registrant’s telephone number including area code) Securities registered pursuant to Section 12(b) of the Act: Title of each class: Common Stock, par value $0.001; Trading Symbol(s): VUZI; Name of each exchange on which registered: Nasdaq Capital Market. Securities registered pursuant to Section 12(g) of the Act: None. Indicate by check mark if the registrant is a well-known seasoned issuer, as defined in Rule 405 of the Securities Act. Yes ☐ No ☑ Indicate by check mark if the registrant is not required to file reports pursuant to Section 13 or Section 15(d) of the Exchange Act. Yes ☐ No ☑ Indicate by check mark whether the registrant: (1) has filed all reports required to be filed by Section 13 or 15(d) of the Securities Exchange Act of 1934 during the preceding 12 months (or for such shorter period that the registrant was required to file such reports), and (2) has been subject to such filing requirements for the past 90 days.

View the Future® Vuzix Developer Newsletter

Game Developer Conference - Special Edition - March, 2010. View the Future® Vuzix Developer Newsletter. Stereoscopic 3D Eyewear – Goes From Geek to Chic. Stereoscopic 3D is the latest trend in movies and games – which format(s) do I support? This is a question many game developers are asking themselves, as there is no single clear winner in the format wars. Until recently, Frame Sequential appeared to be the leading format for PC games, but recently Side-by-Side has made great strides. Vuzix offers end-users two options, Frame Sequential through the iWear® VR920 video eyewear or Side-by-Side through the Wrap line of video eyewear. The sunglass style Wrap™ eyewear line is the latest addition to the Vuzix eyewear line. When equipped with an optional Wrap VGA Adapter, it can be connected to any Windows based PC running XP, Vista or 7 - 32 or 64-bit versions. Royalty Free SDK Update: Beta Release SDK Supports Side-by-Side Stereoscopic 3D Video and 6-DoF Tracker. Vuzix has been providing a royalty free SDK for its PC compatible video eyewear since 2006. A new release of the Vuzix SDK (beta release) will be available for download from the Vuzix website (www.vuzix.com) in late March, 2010. This new release expands the depth of supported stereoscopic formats and adds support for the soon to be released Wrap 6-DoF (Degree of Freedom) Tracker. The Side-by-Side stereoscopic 3D format has become popular of late and is expected to show up in other titles moving forward. This format is supported in this new SDK release and Vuzix encourages software developers to sup…

1 Automated Face Analysis for Affective Computing Jeffrey F. Cohn & Fernando De La Torre Abstract Facial Expression

Please do not quote. In press, Handbook of affective computing. New York, NY: Oxford Automated Face Analysis for Affective Computing Jeffrey F. Cohn & Fernando De la Torre Abstract Facial expression communicates emotion, intention, and physical state, and regulates interpersonal behavior. Automated Face Analysis (AFA) for detection, synthesis, and understanding of facial expression is a vital focus of basic research. While open research questions remain, the field has become sufficiently mature to support initial applications in a variety of areas. We review 1) human-observer based approaches to measurement that inform AFA; 2) advances in face detection and tracking, feature extraction, registration, and supervised learning; and 3) applications in action unit and intensity detection, physical pain, psychological distress and depression, detection of deception, interpersonal coordination, expression transfer, and other applications. We consider user-in-the-loop as well as fully automated systems and discuss open questions in basic and applied research. Keywords Automated Face Analysis and Synthesis, Facial Action Coding System (FACS), Continuous Measurement, Emotion, Nonverbal Communication, Synchrony 1. Introduction The face conveys information about a person’s age, sex, background, and identity, what they are feeling, or thinking (Darwin, 1872/1998; Ekman & Rosenberg, 2005). Facial expression regulates face-to-face interactions, indicates reciprocity and interpersonal attraction or repulsion, and enables inter-subjectivity between members of different cultures (Bråten, 2006; Fridlund, 1994; Tronick, 1989). Facial expression reveals comparative evolution, social and emotional development, neurological and psychiatric functioning, and personality processes (Burrows & Cohn, In press; Campos, Barrett, Lamb, Goldsmith, & Stenberg, 1983; Girard, Cohn, Mahoor, Mavadati, & Rosenwald, 2013; Schmidt & Cohn, 2001). 
Not surprisingly, the face has been of keen interest to behavioral scientists.

Whitepaper Head Mounted Displays & Data Glasses Applications and Systems

Whitepaper Head Mounted Displays & Data Glasses: Applications and Systems. Dr.-Ing. Dipl.-Kfm. Christoph Runde, Virtual Dimension Center (VDC) Fellbach, Auberlenstr. 13, 70736 Fellbach, www.vdc-fellbach.de. © Competence Centre for Virtual Reality and Cooperative Engineering w. V. – Virtual Dimension Center (VDC). System classes | Application fields | Directions of development | Summary. Content: • System classes: Head Mounted Display (HMD) – Video glasses – Data glasses • Simulator disease / Cyber Sickness • Application fields HMDs: interior inspections, training, virtual hedging engineering / ergonomics • Application fields data glasses: process support, teleservice, consistency checks, collaboration • Directions of development: technical specifications, (eye) tracking, retinal displays, light field technology, imaging depth sensors • Application preconditions information & integration (human, IT, processes) • Final remark. Head Mounted Displays (HMDs) – Overview: • 1961: first HMD on market • 1965: 3D-tracked HMD by Ivan Sutherland • Since the 1970s a significant number of HMDs have been applied in the military sector (training, additional display). Table: Important HMD projects since the 1970s [Source: Li, Hua et al.: Review and analysis of avionic helmet-mounted displays. In: Optical Engineering 52(11), 110901, November 2013]. Classification HMD – Video glasses – Data glasses: Head Mounted Display

Emotion Classification Based on Biophysical Signals and Machine Learning Techniques

Symmetry, Article. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Oana Bălan 1,*, Gabriela Moise 2, Livia Petrescu 3, Alin Moldoveanu 1, Marius Leordeanu 1 and Florica Moldoveanu 1. 1 Faculty of Automatic Control and Computers, University POLITEHNICA of Bucharest, Bucharest 060042, Romania. 2 Department of Computer Science, Information Technology, Mathematics and Physics (ITIMF), Petroleum-Gas University of Ploiesti, Ploiesti 100680, Romania. 3 Faculty of Biology, University of Bucharest, Bucharest 030014, Romania. * Correspondence: Tel.: +40722276571. Received: 12 November 2019; Accepted: 18 December 2019; Published: 20 December 2019. Abstract: Emotions constitute an indispensable component of our everyday life. They consist of conscious mental reactions towards objects or situations and are associated with various physiological, behavioral, and cognitive changes. In this paper, we propose a comparative analysis between different machine learning and deep learning techniques, with and without feature selection, for binarily classifying the six basic emotions, namely anger, disgust, fear, joy, sadness, and surprise, into two symmetrical categorical classes (emotion and no emotion), using the physiological recordings and subjective ratings of valence, arousal, and dominance from the DEAP (Dataset for Emotion Analysis using EEG, Physiological and Video Signals) database. The results showed that the maximum classification accuracies for each emotion were: anger: 98.02%, joy: 100%, surprise: 96%, disgust: 95%, fear: 90.75%, and sadness: 90.08%. In the case of four emotions (anger, disgust, fear, and sadness), the classification accuracies were higher without feature selection.

STAR 1200 & 1200XL User Guide

STAR 1200 & 1200XL Augmented Reality Systems User Guide. VUZIX CORPORATION. © 2012 Vuzix Corporation, 2166 Brighton Henrietta Town Line Road, Rochester, New York 14623. Phone: 585.359.5900, Fax: 585.359.4172, www.vuzix.com. Contents: 1. STAR 1200 & 1200XL Overview: System Requirements & Compatibility. 2. Installation & Setup: Step 1: Accessory Installation; Step 2: Hardware Connections; Step 3: Adjustment; Step 4: Software Installation; Step 5: Tracker Calibration. 3. Control Button & On Screen Display: VGA Controller; PowerPak+ Controller; OSD Display Options. 4. STAR Hardware: STAR Display; Nose Bridge; Focus Adjustment; Eye Separation

Conceptual Framework for Quantum Affective Computing and Its Use in Fusion of Multi-Robot Emotions

Electronics, Article. Conceptual Framework for Quantum Affective Computing and Its Use in Fusion of Multi-Robot Emotions. Fei Yan 1, Abdullah M. Iliyasu 2,3,* and Kaoru Hirota 3,4. 1 School of Computer Science and Technology, Changchun University of Science and Technology, Changchun 130022, China. 2 College of Engineering, Prince Sattam Bin Abdulaziz University, Al-Kharj 11942, Saudi Arabia. 3 School of Computing, Tokyo Institute of Technology, Yokohama 226-8502, Japan. 4 School of Automation, Beijing Institute of Technology, Beijing 100081, China. * Corresponding author. Abstract: This study presents a modest attempt to interpret, formulate, and manipulate the emotion of robots within the precepts of quantum mechanics. Our proposed framework encodes emotion information as a superposition state, whilst unitary operators are used to manipulate the transition of emotion states which are subsequently recovered via appropriate quantum measurement operations. The framework described provides essential steps towards exploiting the potency of quantum mechanics in a quantum affective computing paradigm. Further, the emotions of multi-robots in a specified communication scenario are fused using quantum entanglement, thereby reducing the number of qubits required to capture the emotion states of all the robots in the environment, and therefore fewer quantum gates are needed to transform the emotion of all or part of the robots from one state to another. In addition to the mathematical rigours expected of the proposed framework, we present a few simulation-based demonstrations to illustrate its feasibility and effectiveness. This exposition is an important step in the transition of formulations of emotional intelligence to the quantum era.

Augmented and Virtual Reality in Operations

Augmented and Virtual Reality in Operations: A guide for investment. Introduction: Augmented Reality and Virtual Reality (AR/VR) are not new, but recent advances in computational power, storage, graphics processing, and high-resolution displays have helped overcome some of the constraints that have stood in the way of the widespread use of these immersive technologies. Global spending on AR/VR is now expected to reach $17.8 billion in 2018, an increase of nearly 95% over the $9.1 billion estimate for 2017.¹ Given this impetus, we wanted to understand more about the current state of play with AR/VR in the enterprise. We surveyed executives at 700+ companies, including 600 companies who are either experimenting with or implementing AR/VR; spoke with AR/VR-focused leadership at global companies, start-ups, and vendors; and analyzed over 35 use cases, with a particular focus on: • The use of AR/VR for internal industrial company operations, including, but not limited to, design, engineering, and field services • The automotive, utility, and discrete manufacturing sectors. This research focuses on organizations that have initiated their AR/VR journey, whether by experimentation or implementation, and aims to answer a number of questions: • How is AR/VR being used today? • Where should organizations invest – what are the use cases that generate the most value? • How can companies either begin or evolve their initiatives? Outside of this report, terms you will commonly find when reading about AR/VR are mixed reality, hybrid reality, or merged reality in which both the physical and the virtual or digital realities coexist or even merge.

Optimal Arousal Identification and Classification for Affective Computing Using Physiological Signals: Virtual Reality Stroop Task

IEEE TRANSACTIONS ON AFFECTIVE COMPUTING, VOL. 1, NO. 2, JULY-DECEMBER 2010, p. 109. Optimal Arousal Identification and Classification for Affective Computing Using Physiological Signals: Virtual Reality Stroop Task. Dongrui Wu, Member, IEEE, Christopher G. Courtney, Brent J. Lance, Member, IEEE, Shrikanth S. Narayanan, Fellow, IEEE, Michael E. Dawson, Kelvin S. Oie, and Thomas D. Parsons. Abstract: A closed-loop system that offers real-time assessment and manipulation of a user’s affective and cognitive states is very useful in developing adaptive environments which respond in a rational and strategic fashion to real-time changes in user affect, cognition, and motivation. The goal is to progress the user from suboptimal cognitive and affective states toward an optimal state that enhances user performance. In order to achieve this, there is need for assessment of both 1) the optimal affective/cognitive state and 2) the observed user state. This paper presents approaches for assessing these two states. Arousal, an important dimension of affect, is focused upon because of its close relation to a user’s cognitive performance, as indicated by the Yerkes-Dodson Law. Herein, we make use of a Virtual Reality Stroop Task (VRST) from the Virtual Reality Cognitive Performance Assessment Test (VRCPAT) to identify the optimal arousal level that can serve as the affective/cognitive state goal. Three stimuli presentations (with distinct arousal levels) in the VRST are selected. We demonstrate that when reaction time is used as the performance measure, one of the three stimuli presentations can elicit the optimal level of arousal for most subjects. Further, results suggest that high classification rates can be achieved when a support vector machine is used to classify the psychophysiological responses (skin conductance level, respiration, ECG, and EEG) in these three stimuli presentations into three arousal levels.

A Fuzzy Modelling Approach of Emotion for Affective Computing Systems

A Fuzzy Modelling Approach of Emotion for Affective Computing Systems. Charalampos Karyotis 1, Faiyaz Doctor 1, Rahat Iqbal 1, Anne James 1 and Victor Chang 2. 1 Faculty of Engineering, Environment and Computing, Coventry University, Priory Street, CV1 5FB, Coventry, U.K. 2 School of Computing, Creative Technologies & Engineering, Leeds Beckett University, City Campus, Leeds, LS1 3HE, U.K. Keywords: Adaptive Fuzzy Systems, Emotion Modelling, Affective Trajectories, Arousal Valence, Affective Computing, Personalised Learning. Abstract: In this paper we present a novel affective modelling approach to be utilised by Affective Computing systems. This approach is a combination of the well-known Arousal Valence model of emotion and the newly introduced Affective Trajectories Hypothesis. An adaptive data driven fuzzy method is proposed in order to extract personalized emotion models, and successfully visualise the associations of these models’ basic elements, to different emotional labels, using easily interpretable fuzzy rules. Namely we explore how the combinations of arousal, valence, prediction of the future, and the experienced outcome after this prediction, enable us to differentiate between different emotional labels. We use the results obtained from a user study consisting of an online survey, to demonstrate the potential applicability of this affective modelling approach, and test the effectiveness and stability of its adaptive element, which accounts for individual differences between the users. We also propose a basic architecture in order for this approach to be used effectively by AC systems, and finally we present an implementation of a personalised learning system which utilises the suggested framework. This implementation is tested through a pilot experimental session consisting of a tutorial on fuzzy logic which was conducted under an activity-led and problem based learning context.

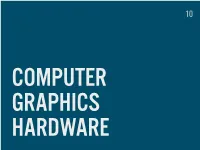

GRAPHICS HARDWARE WHAT’S in STORE GRAPHICS CARDS GRAPHICS CARDS DEDICATED (EXTERNAL) High Performance Power Consumption Heat Emission

10 COMPUTER GRAPHICS HARDWARE. WHAT’S IN STORE. GRAPHICS CARDS. DEDICATED (EXTERNAL): High performance; Power consumption; Heat emission. INTEGRATED (INTERNAL): Low power, low heat; Mobile devices, on board; Integrated with CPU. ARCHITECTURE: VERTEX SHADERS (TRANSFORM GEOMETRY); GEOMETRY SHADERS (CREATE NEW GEOMETRY); PIXEL SHADERS (COLORS, SHADOWS…). UNIFIED SHADERS: One type of processors for all operations; GTX 680 = 1536 unified shader cores. CUDA, GPGPU: General (not only graphics) performed on GPU; Parallelism (HW video encoding, numerical computations). CONNECTORS: VGA: Analog; DVI: Digital + Analog; HDMI: Digital; miniHDMI, microHDMI; DISPLAYPORT: Digital, Analog; Mini DisplayPort (Apple). MANUFACTURERS: NVIDIA: GeForce, Quadro, Tesla; AMD (FORMERLY ATI): Radeon, FirePro; INTEL: Integrated in Core CPUs; POWERVR; ARM: Mali. DISPLAYS. CATHODE RAY TUBE (CRT): ANALOG TVS; OLD COMPUTER MONITORS; MEDIUM SIZE DISPLAYS; LIGHT EMISSION: Black is black; FLICKER: 75-100 Hz for work. http://www.bthompson.net/ PLASMA (PDP, NEO-PDP): NOT SUITABLE FOR COMPUTER DISPLAYS; LARGE SCREENS (30”+); LIGHT EMISSION: Black is black; HIGH ENERGY DEMANDS; VIEW ANGLE DOESN’T MATTER; IMAGE RETENTION: Reduced for Neo-PDP. LIQUID CRYSTAL DISPLAY (LCD): CRYSTALS BLOCK LIGHT FROM BACK SOURCE: Black is hard to achieve; DIFFERENT TECHNOLOGIES (TN, IPS, MVA, …); LOW ENERGY CONSUMPTION; LARGE SCREENS; VIEW ANGLES ISSUE; LCD LED CONFUSION. OLED (ORGANIC LIGHT-EMITTING DIODE): LIGHT EMISSION; GOOD CONTRAST AND COLORS; EXPENSIVE PRODUCTION: Small screens so far; LOW ENERGY; POTENTIALLY FLEXIBLE; VIEW ANGLE

Laval Virtual's Missions Are to Gather, Inspire and Valorize Involved in This Study

The VR/AR special edition #4: health. Clinical VR, Medicine, Well Being. #EDITORIAL: How VR is changing the way women's breast cancer is diagnosed, treated and managed. LAURENT CHRÉTIEN, DIRECTOR / LAVAL VIRTUAL. Cancer cells live in complex communities. Just like houses in a city, each cell in a tumour is different from its neighbour, and relies on infrastructure to support its existence. And we know that there are different neighbourhoods, some worse than others. Where we have roads, tumours contain blood vessels that deliver nutrients, and act as highways for different cell types to move around. And when a tumour spreads, the cancer cells themselves use these blood ‘roads’ to migrate. What the healthcare experts need is a Google Earth-like view of a tumour. If they could make a 3D map, they would find new targets for treatment and, eventually, could use this view to track what’s going on in real time, such as in response to treatment. They will then take all the information they collect about the cells in a tumour and use it to construct a 3D version that can be studied using virtual reality. Using virtual reality will allow scientists to immerse themselves in a tumour, meaning they can study patterns and other characteristics within it, in entirely new ways that aren’t possible in 2D. It will also allow multiple doctors and scientists to look at a tumour at the same time, meaning people at opposite ends of a country, and with different areas of expertise, can work together to help diagnose and treat patients better. And with the Covid19 crisis, the use of virtual reality to cooperate remotely is even more obvious!