Math Co-Processor 8087

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

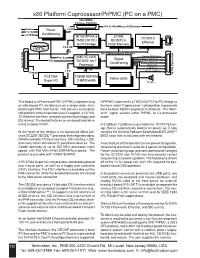

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

I386-Engine™ Technical Manual

i386-Engine™ C/C++ Programmable, 32-bit Microprocessor Module Based on the Intel386EX Technical Manual 1950 5 th Street, Davis, CA 95616, USA Tel: 530-758-0180 Fax: 530-758-0181 Email: [email protected] http://www.tern.com Internet Email: [email protected] http://www.tern.com COPYRIGHT i386-Engine, VE232, A-Engine, A-Core, C-Engine, V25-Engine, MotionC, BirdBox, PowerDrive, SensorWatch, Pc-Co, LittleDrive, MemCard, ACTF, and NT-Kit are trademarks of TERN, Inc. Intel386EX and Intel386SX are trademarks of Intel Coporation. Borland C/C++ are trademarks of Borland International. Microsoft, MS-DOS, Windows, and Windows 95 are trademarks of Microsoft Corporation. IBM is a trademark of International Business Machines Corporation. Version 2.00 October 28, 2010 No part of this document may be copied or reproduced in any form or by any means without the prior written consent of TERN, Inc. © 1998-2010 1950 5 th Street, Davis, CA 95616, USA Tel: 530-758-0180 Fax: 530-758-0181 Email: [email protected] http://www.tern.com Important Notice TERN is developing complex, high technology integration systems. These systems are integrated with software and hardware that are not 100% defect free. TERN products are not designed, intended, authorized, or warranted to be suitable for use in life-support applications, devices, or systems, or in other critical applications. TERN and the Buyer agree that TERN will not be liable for incidental or consequential damages arising from the use of TERN products. It is the Buyer's responsibility to protect life and property against incidental failure. TERN reserves the right to make changes and improvements to its products without providing notice. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel’s SCC to State-of-the-Art CPUs and GPUs Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA E-mail: ftotoni2, bbehza2, ghike2, [email protected] Abstract—Power dissipation and energy consumption are be- A key architectural challenge now is how to support in- coming increasingly important architectural design constraints in creasing parallelism and scale performance, while being power different types of computers, from embedded systems to large- and energy efficient. There are multiple options on the table, scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the namely “heavy-weight” multi-cores (such as general purpose best use of exponentially increasing numbers of transistors within processors), “light-weight” many-cores (such as Intel’s Single- the power and energy budgets. Intel SCC is an appealing option Chip Cloud Computer (SCC) [1]), low-power processors (such for future many-core architectures. In this paper, we use various as embedded processors), and SIMD-like highly-parallel archi- scalable applications to quantitatively compare and analyze tectures (such as General-Purpose Graphics Processing Units the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. (GPGPUs)). These platforms include the Intel Single-Chip Cloud Computer The Intel SCC [1] is a research chip made by Intel Labs (SCC) many-core, the Intel Core i7 general-purpose multi-core, to explore future many-core architectures. It has 48 Pentium the Intel Atom low-power processor, and the Nvidia ION2 (P54C) cores in 24 tiles of two cores each. -

Xv6 Booting: Transitioning from 16 to 32 Bit Mode

238P Operating Systems, Fall 2018 xv6 Boot Recap: Transitioning from 16 bit mode to 32 bit mode 3 November 2018 Aftab Hussain University of California, Irvine BIOS xv6 Boot loader what it does Sets up the hardware. Transfers control to the Boot Loader. BIOS xv6 Boot loader what it does Sets up the hardware. Transfers control to the Boot Loader. how it transfers control to the Boot Loader Boot loader is loaded from the 1st 512-byte sector of the boot disk. This 512-byte sector is known as the boot sector. Boot loader is loaded at 0x7c00. Sets processor’s ip register to 0x7c00. BIOS xv6 Boot loader 2 source source files bootasm.S - 16 and 32 bit assembly code. bootmain.c - C code. BIOS xv6 Boot loader 2 source source files bootasm.S - 16 and 32 bit assembly code. bootmain.c - C code. executing bootasm.S 1. Disable interrupts using cli instruction. (Code). > Done in case BIOS has initialized any of its interrupt handlers while setting up the hardware. Also, BIOS is not running anymore, so better to disable them. > Clear segment registers. Use xor for %ax, and copy it to the rest (Code). 2. Switch from real mode to protected mode. (References: a, b). > Note the difference between processor modes and kernel privilege modes > We do the above switch to increase the size of the memory we can address. BIOS xv6 Boot loader 2 source source file executing bootasm.S m. Let’s 2. Switch from real mode to protected mode. expand on this a little bit Addressing in Real Mode In real mode, the processor sends 20-bit addresses to the memory. -

World's First High- Performance X86 With

World’s First High- Performance x86 with Integrated AI Coprocessor Linley Spring Processor Conference 2020 April 8, 2020 G Glenn Henry Dr. Parviz Palangpour Chief AI Architect AI Software Deep Dive into Centaur’s New x86 AI Coprocessor (Ncore) • Background • Motivations • Constraints • Architecture • Software • Benchmarks • Conclusion Demonstrated Working Silicon For Video-Analytics Edge Server Nov, 2019 ©2020 Centaur Technology. All Rights Reserved Centaur Technology Background • 25-year-old startup in Austin, owned by Via Technologies • We design, from scratch, low-cost x86 processors • Everything to produce a custom x86 SoC with ~100 people • Architecture, logic design and microcode • Design, verification, and layout • Physical build, fab interface, and tape-out • Shipped by IBM, HP, Dell, Samsung, Lenovo… ©2020 Centaur Technology. All Rights Reserved Genesis of the AI Coprocessor (Ncore) • Centaur was developing SoC (CHA) with new x86 cores • Targeted at edge/cloud server market (high-end x86 features) • Huge inference markets beyond hyperscale cloud, IOT and mobile • Video analytics, edge computing, on-premise servers • However, x86 isn’t efficient at inference • High-performance inference requires external accelerator • CHA has 44x PCIe to support GPUs, etc. • But adds cost, power, another point of failure, etc. ©2020 Centaur Technology. All Rights Reserved Why not integrate a coprocessor? • Very low cost • Many components already on SoC (“free” to Ncore) Caches, memory, clock, power, package, pins, busses, etc. • There often is “free” space on complex SOCs due to I/O & pins • Having many high-performance x86 cores allows flexibility • The x86 cores can do some of the work, in parallel • Didn’t have to implement all strange/new functions • Allows fast prototyping of new things • For customer: nothing extra to buy ©2020 Centaur Technology. -

Protected Mode - Wikipedia

2/12/2019 Protected mode - Wikipedia Protected mode In computing, protected mode, also called protected virtual address mode,[1] is an operational mode of x86- compatible central processing units (CPUs). It allows system software to use features such as virtual memory, paging and safe multi-tasking designed to increase an operating system's control over application software.[2][3] When a processor that supports x86 protected mode is powered on, it begins executing instructions in real mode, in order to maintain backward compatibility with earlier x86 processors.[4] Protected mode may only be entered after the system software sets up one descriptor table and enables the Protection Enable (PE) bit in the control register 0 (CR0).[5] Protected mode was first added to the x86 architecture in 1982,[6] with the release of Intel's 80286 (286) processor, and later extended with the release of the 80386 (386) in 1985.[7] Due to the enhancements added by protected mode, it has become widely adopted and has become the foundation for all subsequent enhancements to the x86 architecture,[8] although many of those enhancements, such as added instructions and new registers, also brought benefits to the real mode. Contents History The 286 The 386 386 additions to protected mode Entering and exiting protected mode Features Privilege levels Real mode application compatibility Virtual 8086 mode Segment addressing Protected mode 286 386 Structure of segment descriptor entry Paging Multitasking Operating systems See also References External links History https://en.wikipedia.org/wiki/Protected_mode -

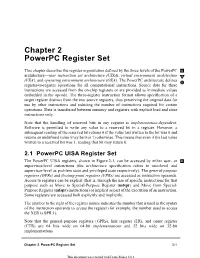

Chapter 2 Powerpc Register Set

Chapter 2 PowerPC Register Set 20 20 This chapter describes the register organization defined by the three levels of the PowerPC U architecture—user instruction set architecture (UISA), virtual environment architecture V (VEA), and operating environment architecture (OEA). The PowerPC architecture defines O register-to-register operations for all computational instructions. Source data for these instructions are accessed from the on-chip registers or are provided as immediate values embedded in the opcode. The three-register instruction format allows specification of a target register distinct from the two source registers, thus preserving the original data for use by other instructions and reducing the number of instructions required for certain operations. Data is transferred between memory and registers with explicit load and store instructions only. Note that the handling of reserved bits in any register is implementation-dependent. Software is permitted to write any value to a reserved bit in a register. However, a subsequent reading of the reserved bit returns 0 if the value last written to the bit was 0 and returns an undefined value (may be 0 or 1) otherwise. This means that even if the last value written to a reserved bit was 1, reading that bit may return 0. 2.1 PowerPC UISA Register Set The PowerPC UISA registers, shown in Figure 2-1, can be accessed by either user- or U supervisor-level instructions (the architecture specification refers to user-level and supervisor-level as problem state and privileged state respectively). The general-purpose registers (GPRs) and floating-point registers (FPRs) are accessed as instruction operands. -

A Comparison of Protection Lookaside Buffers and the PA-RISC

rI3 HEWLETT ~~PACKARD A Comparison ofProtection Lookaside Buffers and the PA·RISC Protection Architecture John Wilkes, Bart Sears Computer Systems Laboratory HPL-92-55 March 1992 processor architecture, Eric Koldinger and others at the University of single address space, 64 Washington Department of Computer Science have bitaddressing, protection, proposed a new model for memory protection in PA-RISe, protection single-address-space architectures. This paper lookaside buffers, PLB, compares the Washington proposal with what translation lookaside already exists in PA-RISC, and suggests some buffers,TLB, cache incremental improvements to the latter that would indexing, virtually provide most of the benefits of the former. addressed caches Internal Accession Date Only © Copyright Hewlett-Packard Company 1992 1 Introduction A recent technical report by Eric Koldinger and others [Koldinger91] proposes a new model for managing protectioninformationinsingle address space processors. This paper is one outcome of discussions withthe authors about the real differences betweentheir proposal and the scheme usedinPA-RISC[Lee89,HPPA90]:it offers a restatement of the newproposal, a comparisonwith the existing PA-RISCarchitecture, and some thoughts onhow the PA-RISCarchitecture mightbe extended in the future to provide some of the benefits that the PLBidea was trying to achieve. The reader is assumed to be familiar withmodem TLB,cache and processor architectures; with luck, sufficient information is provided in this paper that detailed prior knowledge about either the Washington proposal or PA-RISCisn't required. Example To help illustrate some of the finer points of the different protectionmodels, anexample based on the LRPC work of Brian Bershad is used [Bershad90] (see Figure 1). -

Intel® 64 and IA-32 Architectures Software Developer's Manual

Intel® 64 and IA-32 Architectures Software Developer’s Manual Volume 3B: System Programming Guide, Part 2 NOTE: The Intel® 64 and IA-32 Architectures Software Developer's Manual consists of five volumes: Basic Architecture, Order Number 253665; Instruction Set Reference A-M, Order Number 253666; Instruction Set Reference N-Z, Order Number 253667; System Programming Guide, Part 1, Order Number 253668; System Programming Guide, Part 2, Order Number 253669. Refer to all five volumes when evaluating your design needs. Order Number: 253669-029US November 2008 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANT- ED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. UNLESS OTHERWISE AGREED IN WRITING BY INTEL, THE INTEL PRODUCTS ARE NOT DESIGNED NOR IN- TENDED FOR ANY APPLICATION IN WHICH THE FAILURE OF THE INTEL PRODUCT COULD CREATE A SITUA- TION WHERE PERSONAL INJURY OR DEATH MAY OCCUR. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked "reserved" or "unde- fined." Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them.