ILONANI a Table-Top Robot for Remote Interaction

Recommended publications

Mixbus V4 1 — Last Update: 2017/12/19 Harrison Consoles

Mixbus v4 1 (last update: 2017/12/19), Harrison Consoles. Copyright Information 2017: No part of this publication may be copied, reproduced, transmitted, stored on a retrieval system, or translated into any language, in any form or by any means without the prior written consent of an authorized officer of Harrison Consoles, 1024 Firestone Parkway, La Vergne, TN 37086.

Table of Contents
• Introduction
• About This Manual (online version and PDF download)
• Features & Specifications
• What’s Different About Mixbus?
• Operational Differences from Other DAWs
• Installation
• Installation – Windows
• Installation – OS X

VLC User Guide

VLC user guide, by Henri Fallon, Alexis de Lattre, Johan Bilien, Anil Daoud, Mathieu Gautier, and Clément Stenac. Copyright © 2002-2004 the VideoLAN project. This document is the complete user guide of VLC. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU General Public License as published by the Free Software Foundation; either version 2 of the License, or (at your option) any later version. The text of the license can be found in the appendix, GNU General Public License.

Table of Contents
1. Introduction
• What is the VideoLAN project?
• What is a codec?
• How can I use VideoLAN?
• Command line usage
2. Modules and options for VLC

Symantec Web Security Service Policy Guide

Web Security Service Policy Guide, Version 6.10.4.1/OCT.12.2018. Copyrights: Copyright © 2018 Symantec Corp. All rights reserved. Symantec, the Symantec Logo, the Checkmark Logo, Blue Coat, and the Blue Coat logo are trademarks or registered trademarks of Symantec Corp. or its affiliates in the U.S. and other countries. Other names may be trademarks of their respective owners. This document is provided for informational purposes only and is not intended as advertising. All warranties relating to the information in this document, either express or implied, are disclaimed to the maximum extent allowed by law. The information in this document is subject to change without notice. THE DOCUMENTATION IS PROVIDED "AS IS" AND ALL EXPRESS OR IMPLIED CONDITIONS, REPRESENTATIONS AND WARRANTIES, INCLUDING ANY IMPLIED WARRANTY OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE OR NON-INFRINGEMENT, ARE DISCLAIMED, EXCEPT TO THE EXTENT THAT SUCH DISCLAIMERS ARE HELD TO BE LEGALLY INVALID. SYMANTEC CORPORATION SHALL NOT BE LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES IN CONNECTION WITH THE FURNISHING, PERFORMANCE, OR USE OF THIS DOCUMENTATION. THE INFORMATION CONTAINED IN THIS DOCUMENTATION IS SUBJECT TO CHANGE WITHOUT NOTICE. Symantec Corporation, 350 Ellis Street, Mountain View, CA 94043, www.symantec.com

Symantec Web Security Service Policy Guide: The Symantec Web Security Service solutions provide real-time protection against web-borne threats. As a cloud-based product, the Web Security Service leverages Symantec's proven security technology as well as the WebPulse™ cloud community of over 75 million users.

Symantec Web Security Service Policy Guide

Web Security Service Policy Guide, Revision: NOV.07.2020. Copyrights: Broadcom, the pulse logo, Connecting everything, and Symantec are among the trademarks of Broadcom. The term “Broadcom” refers to Broadcom Inc. and/or its subsidiaries. Copyright © 2020 Broadcom. All Rights Reserved. For more information, please visit www.broadcom.com. Broadcom reserves the right to make changes without further notice to any products or data herein to improve reliability, function, or design. Information furnished by Broadcom is believed to be accurate and reliable. However, Broadcom does not assume any liability arising out of the application or use of this information, nor the application or use of any product or circuit described herein, neither does it convey any license under its patent rights nor the rights of others.

Symantec WSS Policy Guide: The Symantec Web Security Service solutions provide real-time protection against web-borne threats. As a cloud-based product, the Web Security Service leverages Symantec's proven security technology, including the WebPulse™ cloud community. With extensive web application controls and detailed reporting features, IT administrators can use the Web Security Service to create and enforce granular policies that are applied to all covered users, including fixed locations and roaming users. If the WSS is the body, then the policy engine is the brain. While the WSS by default provides malware protection (blocks four categories: Phishing, Proxy Avoidance, Spyware Effects/Privacy Concerns, and Spyware/Malware Sources), the additional policy rules and options you create dictate exactly what content your employees can and cannot access, from global allows/denials to individual users at specific times from specific locations.

Isma Internet Streaming Media Alliance

ISMA INTERNET STREAMING MEDIA ALLIANCE. Output Document Number TD00105, November 2007. TITLE: ISMA Ultravox Part 2: Ultravox 3.0 Protocol Specification. Status: Provisional Specification. © 2007 ISMA (12/14/2007).

Table of Contents
Abstract
1 Ultravox Protocol
1.1 Overview
1.2 Ultravox Frames
1.3 Encrypted Messages (Payload for any classes 3-15)
2 Ultravox Broadcaster Protocol
2.1 POST Headers
2.1.1 Required Headers
2.1.2 Optional Arguments
2.2 Response Codes
2.3 Response Headers

Metadefender Core V4.19.0

MetaDefender Core v4.19.0. © 2019 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents
About This Guide
Key Features of MetaDefender Core
1. Quick Start with MetaDefender Core
1.1. Installation
• Basic setup
1.1.1. Configuration wizard
1.2. License Activation
1.3. Process Files with MetaDefender Core
2. Installing or Upgrading MetaDefender Core
2.1. Recommended System Configuration
• Microsoft Windows Deployments
• Unix Based Deployments
• Data Retention
• Custom Engines
• Browser Requirements for the Metadefender Core Management Console
2.2. Installing MetaDefender
• Installation
• Installation notes
2.2.1. MetaDefender Core 4.18.0 or older
2.2.2. MetaDefender Core 4.19.0 or newer
2.3. Upgrading MetaDefender Core
• Upgrading from MetaDefender Core 3.x to 4.x
• Upgrading from MetaDefender Core older version to 4.18.0 (SQLite)
• Upgrading from MetaDefender Core 4.18.0 or older (SQLite) to 4.19.0 or newer (PostgreSQL)
• Upgrading from MetaDefender Core 4.19.0 to newer (PostgreSQL)
2.4. MetaDefender Core Licensing
2.4.1. Activating Metadefender Licenses
2.4.2. Checking Your Metadefender Core License
2.5. Performance and Load Estimation
• What to know before reading the results: Some factors that affect performance
• How test results are calculated
• Test Reports
2.5.1.

Archive and Compressed

Archive and compressed
Main article: List of archive formats
• .?Q? – files compressed by the SQ program
• 7z – 7-Zip compressed file
• AAC – Advanced Audio Coding
• ace – ACE compressed file
• ALZ – ALZip compressed file
• APK – Applications installable on Android
• AT3 – Sony's UMD Data compression
• .bke – BackupEarth.com Data compression
• ARC
• ARJ – ARJ compressed file
• BA – Scifer Archive (.ba), Scifer External Archive Type
• big – Special file compression format used by Electronic Arts for compressing the data for many of EA's games
• BIK (.bik) – Bink Video file. A video compression system developed by RAD Game Tools
• BKF (.bkf) – Microsoft backup created by NTBACKUP.EXE
• bzip2 – (.bz2)
• bld – Skyscraper Simulator Building
• c4 – JEDMICS image files, a DOD system
• cab – Microsoft Cabinet
• cals – JEDMICS image files, a DOD system
• cpt/sea – Compact Pro (Macintosh)
• DAA – Closed-format, Windows-only compressed disk image
• deb – Debian Linux install package
• DMG – an Apple compressed/encrypted format
• DDZ – a file which can only be used by the "daydreamer engine" created by "fever-dreamer", a program similar to RAGS, it's mainly used to make somewhat short games.
• DPE – Package of AVE documents made with Aquafadas digital publishing tools.
• EEA – An encrypted CAB, ostensibly for protecting email attachments
• .egg – Alzip Egg Edition compressed file
• EGT (.egt) – EGT Universal Document also used to create compressed cabinet files replaces .ecab
• ECAB (.ECAB, .ezip) – EGT Compressed Folder used in advanced systems to compress entire system folders, replaced by EGT Universal Document
• ESS (.ess) – EGT SmartSense File, detects files compressed using the EGT compression system.
• GHO (.gho, .ghs) – Norton Ghost
• gzip (.gz) – Compressed file
• IPG (.ipg) – Format in which Apple Inc.

Metadefender Core V4.17.3

MetaDefender Core v4.17.3. © 2020 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners.

Table of Contents
About This Guide
Key Features of MetaDefender Core
1. Quick Start with MetaDefender Core
1.1. Installation
• Operating system invariant initial steps
• Basic setup
1.1.1. Configuration wizard
1.2. License Activation
1.3. Process Files with MetaDefender Core
2. Installing or Upgrading MetaDefender Core
2.1. Recommended System Configuration
• Microsoft Windows Deployments
• Unix Based Deployments
• Data Retention
• Custom Engines
• Browser Requirements for the Metadefender Core Management Console
2.2. Installing MetaDefender
• Installation
• Installation notes
2.2.1. Installing Metadefender Core using command line
2.2.2. Installing Metadefender Core using the Install Wizard
2.3. Upgrading MetaDefender Core
• Upgrading from MetaDefender Core 3.x
• Upgrading from MetaDefender Core 4.x
2.4. MetaDefender Core Licensing
2.4.1. Activating Metadefender Licenses
2.4.2. Checking Your Metadefender Core License
2.5. Performance and Load Estimation
• What to know before reading the results: Some factors that affect performance
• How test results are calculated
• Test Reports
• Performance Report - Multi-Scanning On Linux
• Performance Report - Multi-Scanning On Windows
2.6. Special installation options
• Use RAMDISK for the tempdirectory
3.

Scape D10.1 Keeps V1.0

Identification and selection of large-scale migration tools and services. Authors: Rui Castro, Luís Faria (KEEP Solutions), Christoph Becker, Markus Hamm (Vienna University of Technology). June 2011. This work was partially supported by the SCAPE Project. The SCAPE project is co-funded by the European Union under FP7 ICT-2009.4.1 (Grant Agreement number 270137). This work is licensed under a CC-BY-SA International License.

Table of Contents
1 Introduction
1.1 Scope of this document
2 Related work
2.1 Preservation action tools
2.1.1 PLANETS
2.1.2 RODA
2.1.3 CRiB
2.2 Software quality models
2.2.1 ISO standard 25010
2.2.2 Decision criteria in digital preservation
3 Criteria for evaluating action tools
3.1 Functional suitability
3.2 Performance efficiency
3.3 Compatibility
3.4 Usability
3.5 Reliability
3.6 Security
3.7 Maintainability
3.8 Portability
4 Methodology
4.1 Analysis of requirements
4.2 Definition of the evaluation framework
4.3 Identification, evaluation and selection of action tools
5 Analysis of requirements
5.1 Requirements for the SCAPE platform
5.2 Requirements of the testbed scenarios
5.2.1 Scenario 1: Normalize document formats contained in the web archive
5.2.2 Scenario 2: Deep characterisation of huge media files
5.2.3 Scenario 3: Migrate digitised TIFFs to JPEG2000
5.2.4 Scenario 4: Migrate archive to new archiving system?
5.2.5 Scenario 5: RAW to NEXUS migration
6 Evaluation framework
6.1 Suitability for testbeds
6.2 Suitability for platform
6.3 Technical installability
6.4 Legal constraints
6.5 Summary
7 Results
7.1 Identification of candidate tools
7.2 Evaluation and selection of tools
8 Conclusions
9 References
10 Appendix
10.1 List of identified action tools

1 Introduction
A preservation action is a concrete action, usually implemented by a software tool, that is performed on digital content in order to achieve some preservation goal.

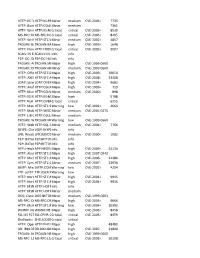

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind" Remote DoS; HTTP:IIS:PROPFIND; Minor; medium; CVE-2003-0226; 7735
HTTP: Ikonboard Illegal Cookie Language; HTTP:CGI:IKONBOARD-BADCOOKIE; Minor; medium; 7361
HTTP: Windows Media Services NSIISlog.DLL Buffer Overflow; HTTP:IIS:NSIISLOG-OF; Critical; critical; CVE-2003-0349; 8035
MS-RPC: DCOM Exploit; MS-RPC:DCOM:EXPLOIT; Critical; critical; CVE-2003-0352; 8205
HTTP: WinHelp32.exe Remote Buffer Overrun; HTTP:STC:WINHELP32-OF2; Minor; medium; CVE-2002-0823(2); 4857
TROJAN: Back Orifice 2000 Client Connection; TROJAN:BACKORIFICE:BO2K-CONNECT; Major; high; CVE-1999-0660; 1648
HTTP: Frontpage fp30reg.dll Overflow; HTTP:FRONTPAGE:FP30REG.DLL-OF; Critical; critical; CVE-2003-0822; 9007
SCAN: IIS Enumeration; SCAN:II:IIS-ISAPI-ENUM; Info; info
P2P: DC: Direct Connect Plus Plus Client Hub Login; P2P:DC:HUB-LOGIN; Info; info
TROJAN: AOL Admin Server Response; TROJAN:MISC:AOLADMIN-SRV-RESP; Major; high; CVE-1999-0660
TROJAN: Digital Rootbeer Client Connect; TROJAN:MISC:ROOTBEER-CLIENT; Minor; medium; CVE-1999-0660
HTTP: Office Art Property Table Buffer Overflow; HTTP:STC:DL:OFFICEART-PROP; Major; high; CVE-2009-2528; 36650
HTTP: AXIS Communications Camera Control (AxisCamControl.ocx) Unsafe ActiveX Control; HTTP:STC:ACTIVEX:AXIS-CAMERA; Major; high; CVE-2008-5260; 33408
LDAP: Ipswitch IMail LDAP Daemon Remote Buffer Overflow; LDAP:OVERFLOW:IMAIL-ASN1; Major; high; CVE-2004-0297; 9682
HTTP: Anyform Semicolon; HTTP:CGI:ANYFORM-SEMICOLON; Major; high; CVE-1999-0066; 719
HTTP: Mini SQL w3-msql File View Disclosure; HTTP:CGI:W3-MSQL-FILE-DISCLSR; Minor; medium; CVE-2000-0012; 898
HTTP: IIS MFC ISAPI Framework Overflow (via; HTTP:IIS:MFC-EXT-OF; Major; high

List of File Formats - Wikipedia, the Free Encyclopedia

List of file formats. From Wikipedia, the free encyclopedia. See also: List of file formats (alphabetical).

This is a list of file formats organized by type, as can be found on computers. Filename extensions are usually noted in parentheses if they differ from the format name or abbreviation. In theory, using the basic Latin alphabet (A–Z) and an extension of up to three single-cased letters, 18,279 combinations can be made (26³+26²+26¹+26⁰). When other acceptable characters are accepted, the maximum number is increased (very possibly to a number consisting of at least six digits). Many operating systems do not limit filenames to a single extension shorter than 4 characters, like what was common with some operating systems that supported the FAT file system. Examples of operating systems that don't have such a small limit include Unix-like systems. Also, Microsoft Windows NT, 95, 98, and Me don't have a three character limit on extensions for 32-bit or 64-bit applications on file systems other than pre-Windows 95/Windows NT 3.5 versions of the FAT file system. Some filenames are given extensions longer than three characters.

Contents
1 Archive and compressed
1.1 Physical recordable media archiving
2 Computer-aided
2.1 Computer-aided design (CAD)
2.2 Electronic design automation (EDA)
2.3 Test technology
3 Database
4 Desktop publishing
5 Document
6 Font file
7 Geographic information system
8 Graphical information organizers

Broadband Video Networking

IEEE Globecom 2009, November 30, 2009. Broadband Video Networking. Benny Bing, Georgia Institute of Technology. http://users.ece.gatech.edu/~benny [email protected] © 2009 Benny Bing

Outline
• Introduction to broadband Internet
• HD wireless interfaces
– 802.15
– 802.11
– Wireless HD
• Broadband video
– Switched digital video
– Wireless video
– H.264 and VC-1 compression standards
– Loss resilience and error containment
– Video quality assessment
– Video transport protocols
– Video bandwidth conservation and management
– Next-generation video
• Summary

Broadband Internet
• Economic driver for 21st century
– Globally, over 300 million households subscribe to broadband Internet
– Expected to increase to 525 million in 2011
• Broadband Internet can bring significant economic/social benefits
– Improved healthcare and education to enhanced public-safety programs
• Improved healthcare through telemedicine and electronic healthcare records
– Can also bring efficiencies by ushering smart grids, smart homes, and smart transportation
• Broadband in the U.S.
– FCC task force estimates total cost of broadband deployments in the U.S. between $20 billion and $350 billion
• Assumes services provided 100 Mbit/s or faster
– Actual broadband speeds lag advertised speeds by at least 50%
• Possibly more during busy hours
• Peak usage hours (e.g., 7 to 10 pm) create network congestion and speed degradation
• About 1% of users drive 20% of traffic while 20% of users drive up to 80% of traffic
– Much more wireless spectrum needed
• Smartphone sales to make up majority of wireless device