Cooperation Across Timescales Between Hebbian and Homeostatic Plasticity

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Rab3A as a Modulator of Homeostatic Synaptic Plasticity

Wright State University CORE Scholar Browse all Theses and Dissertations Theses and Dissertations 2014 Rab3A as a Modulator of Homeostatic Synaptic Plasticity Andrew G. Koesters Wright State University Follow this and additional works at: https://corescholar.libraries.wright.edu/etd_all Part of the Biomedical Engineering and Bioengineering Commons Repository Citation Koesters, Andrew G., "Rab3A as a Modulator of Homeostatic Synaptic Plasticity" (2014). Browse all Theses and Dissertations. 1246. https://corescholar.libraries.wright.edu/etd_all/1246 This Dissertation is brought to you for free and open access by the Theses and Dissertations at CORE Scholar. It has been accepted for inclusion in Browse all Theses and Dissertations by an authorized administrator of CORE Scholar. For more information, please contact [email protected]. RAB3A AS A MODULATOR OF HOMEOSTATIC SYNAPTIC PLASTICITY A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy By ANDREW G. KOESTERS B.A., Miami University, 2004 2014 Wright State University WRIGHT STATE UNIVERSITY GRADUATE SCHOOL August 22, 2014 I HEREBY RECOMMEND THAT THE DISSERTATION PREPARED UNDER MY SUPERVISION BY Andrew G. Koesters ENTITLED Rab3A as a Modulator of Homeostatic Synaptic Plasticity BE ACCEPTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF Doctor of Philosophy. Kathrin Engisch, Ph.D. Dissertation Director Mill W. Miller, Ph.D. Director, Biomedical Sciences Ph.D. Program Committee on Final Examination Robert E. W. Fyffe, Ph.D. Vice President for Research Dean of the Graduate School Mark Rich, M.D./Ph.D. David Ladle, Ph.D. F. Javier Alvarez-Leefmans, M.D./Ph.D. Lynn Hartzler, Ph.D.

Disruption of NMDA Receptor Function Prevents Normal Experience

Research Articles: Development/Plasticity/Repair. Disruption of NMDA receptor function prevents normal experience-dependent homeostatic synaptic plasticity in mouse primary visual cortex. https://doi.org/10.1523/JNEUROSCI.2117-18.2019 Cite as: J. Neurosci 2019; 10.1523/JNEUROSCI.2117-18.2019. Received: 16 August 2018. Revised: 7 August 2019. Accepted: 8 August 2019. This Early Release article has been peer-reviewed and accepted, but has not been through the composition and copyediting processes. The final version may differ slightly in style or formatting and will contain links to any extended data. Alerts: Sign up at www.jneurosci.org/alerts to receive customized email alerts when the fully formatted version of this article is published. Copyright © 2019 the authors. Rodriguez et al. Gabriela Rodriguez (1,4), Lukas Mesik (2,3), Ming Gao (2,5), Samuel Parkins (1), Rinki Saha (2,6), and Hey-Kyoung Lee (1,2,3). 1. Cell Molecular Developmental Biology and Biophysics (CMDB) Graduate Program, Johns Hopkins University, Baltimore, MD 21218. 2. Department of Neuroscience, Mind/Brain Institute, Johns Hopkins University, Baltimore, MD 21218. 3. Kavli Neuroscience Discovery Institute, Johns Hopkins University, Baltimore, MD 21218. 4. Current address: Max Planck Florida Institute for Neuroscience, Jupiter, FL 33458. 5. Current address: Division of Neurology, Barrow Neurological Institute, Phoenix, AZ 85013. 6. Current address: Department of Psychiatry, Columbia University, New York, NY 10032. Abbreviated title: NMDARs in homeostatic synaptic plasticity of V1. Corresponding Author: Hey-Kyoung Lee, Ph.D.

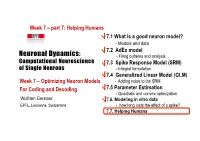

Neuronal Dynamics

Neuronal Dynamics: Computational Neuroscience of Single Neurons. Week 7 – Optimizing Neuron Models For Coding and Decoding. Wulfram Gerstner, EPFL, Lausanne, Switzerland. Week 7 – part 7: Helping Humans. 7.1 What is a good neuron model? - Models and data. 7.2 AdEx model - Firing patterns and analysis. 7.3 Spike Response Model (SRM) - Integral formulation. 7.4 Generalized Linear Model (GLM) - Adding noise to the SRM. 7.5 Parameter Estimation - Quadratic and convex optimization. 7.6 Modeling in vitro data - How long does the effect of a spike last? 7.7 Helping Humans. Neuronal Dynamics – Review: Models and Data: predict spike times; predict subthreshold voltage; easy to interpret (not a ‘black box’); variety of phenomena; systematic: ‘optimize’ parameters. BUT so far limited to in vitro. Neuronal Dynamics – 7.7 Systems neuroscience, in vivo. Now: extracellular recordings, visual cortex. Model of ‘Encoding’: A) Predict spike times, given stimulus; B) Predict subthreshold voltage; C) Easy to interpret (not a ‘black box’); D) Flexible enough to account for a variety of phenomena; E) Systematic procedure to ‘optimize’ parameters. Neuronal Dynamics – 7.7 Estimation of receptive fields. Estimation of spatial (and temporal) receptive fields, LNP: $u(t) = \sum_k k_k I_{K-k} + u_{\mathrm{rest}}$, with firing intensity $\rho(t) = f(u(t) - \vartheta(t))$. Neuronal Dynamics – 7.7 Estimation of Receptive Fields. Visual stimulus. LNP = Linear-Nonlinear-Poisson, a special case of GLM = Generalized Linear Model. GLM for prediction of retinal ganglion ON cell activity, Pillow et al.
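The Linear-Nonlinear-Poisson cascade named in the slides above can be sketched in a few lines. This is only an illustrative sketch, not code from the lecture: the function names `lnp_rate` and `lnp_spikes`, the exponential choice for the nonlinearity f, the causal indexing `stimulus[t - k]`, and the Bernoulli-per-bin approximation of the Poisson stage are all assumptions.

```python
import math
import random


def lnp_rate(stimulus, kernel, u_rest=0.0, theta=0.0):
    """Linear filter followed by a static nonlinearity.

    Computes u(t) = sum_k kernel[k] * stimulus[t - k] + u_rest and returns
    rho(t) = f(u(t) - theta), with f = exp (an assumed choice of f).
    """
    rates = []
    for t in range(len(stimulus)):
        u = u_rest
        for k, weight in enumerate(kernel):
            if t - k >= 0:  # causal filter: only past and present input
                u += weight * stimulus[t - k]
        rates.append(math.exp(u - theta))
    return rates


def lnp_spikes(rates, dt=0.001, seed=0):
    """Poisson stage, approximated by one Bernoulli draw per time bin."""
    rng = random.Random(seed)
    return [1 if rng.random() < min(1.0, r * dt) else 0 for r in rates]


rates = lnp_rate([0.0, 1.0, 0.0], kernel=[1.0, 0.5])
spikes = lnp_spikes(rates)
```

With a short bin width `dt`, the probability of a spike in a bin is roughly `rate * dt`, which is why the Bernoulli draw is a reasonable stand-in for a Poisson process here.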

Hebbian Learning and Spiking Neurons

PHYSICAL REVIEW E, VOLUME 59, NUMBER 4, APRIL 1999. Hebbian learning and spiking neurons. Richard Kempter*, Physik Department, Technische Universität München, D-85747 Garching bei München, Germany. Wulfram Gerstner†, Swiss Federal Institute of Technology, Center of Neuromimetic Systems, EPFL-DI, CH-1015 Lausanne, Switzerland. J. Leo van Hemmen‡, Physik Department, Technische Universität München, D-85747 Garching bei München, Germany. (Received 6 August 1998; revised manuscript received 23 November 1998) A correlation-based (“Hebbian”) learning rule at a spike level with millisecond resolution is formulated, mathematically analyzed, and compared with learning in a firing-rate description. The relative timing of presynaptic and postsynaptic spikes influences synaptic weights via an asymmetric “learning window.” A differential equation for the learning dynamics is derived under the assumption that the time scales of learning and neuronal spike dynamics can be separated. The differential equation is solved for a Poissonian neuron model with stochastic spike arrival. It is shown that correlations between input and output spikes tend to stabilize structure formation. With an appropriate choice of parameters, learning leads to an intrinsic normalization of the average weight and the output firing rate. Noise generates diffusion-like spreading of synaptic weights. [S1063-651X(99)02804-4] PACS number(s): 87.19.La, 87.19.La, 05.65.+b, 87.18.Sn. I. INTRODUCTION. Correlation-based or “Hebbian” learning [1] is thought to be an important mechanism for the tuning of neuronal [...] In contrast to the standard rate models of Hebbian learning, we introduce and analyze a learning rule where synaptic modifications are driven by the temporal correlations between presynaptic and postsynaptic spikes.
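The idea of an asymmetric learning window, where the sign of the weight change depends on the relative timing of presynaptic and postsynaptic spikes, can be illustrated with a toy pair-based rule. This is a sketch under stated assumptions and not the rule analyzed in the paper: the double-exponential window shape, the parameter values, and the names `learning_window` and `weight_change` are all hypothetical.

```python
import math


def learning_window(delta_t, a_plus=1.0, a_minus=0.5, tau_plus=0.02, tau_minus=0.02):
    """Assumed double-exponential window; delta_t = t_post - t_pre in seconds."""
    if delta_t > 0:  # pre before post: potentiation
        return a_plus * math.exp(-delta_t / tau_plus)
    if delta_t < 0:  # post before pre: depression
        return -a_minus * math.exp(delta_t / tau_minus)
    return 0.0


def weight_change(pre_spikes, post_spikes, eta=0.01):
    """Sum the window over all pre/post spike pairs, scaled by a learning rate."""
    total = sum(
        learning_window(t_post - t_pre)
        for t_pre in pre_spikes
        for t_post in post_spikes
    )
    return eta * total


causal = weight_change(pre_spikes=[0.000], post_spikes=[0.010])
acausal = weight_change(pre_spikes=[0.010], post_spikes=[0.000])
```

In this toy version a presynaptic spike that precedes the postsynaptic spike strengthens the synapse, and the reverse order weakens it.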

Connectivity Reflects Coding: a Model of Voltage-Based Spike-Timing-Dependent-P

Connectivity reflects Coding: A Model of Voltage-based Spike-Timing-Dependent-Plasticity with Homeostasis. Claudia Clopath, Lars Büsing*, Eleni Vasilaki, Wulfram Gerstner. Laboratory of Computational Neuroscience, Brain-Mind Institute and School of Computer and Communication Sciences, Ecole Polytechnique Fédérale de Lausanne, 1015 Lausanne EPFL, Switzerland. * Permanent address: Institut für Grundlagen der Informationsverarbeitung, TU Graz, Austria. Abstract: Electrophysiological connectivity patterns in cortex often show a few strong connections, sometimes bidirectional, in a sea of weak connections. In order to explain these connectivity patterns, we use a model of Spike-Timing-Dependent Plasticity where synaptic changes depend on presynaptic spike arrival and the postsynaptic membrane potential, filtered with two different time constants. The model describes several nonlinear effects in STDP experiments, as well as the voltage dependence of plasticity. We show that in a simulated recurrent network of spiking neurons our plasticity rule leads not only to development of localized receptive fields, but also to connectivity patterns that reflect the neural code: for temporal coding paradigms with spatio-temporal input correlations, strong connections are predominantly unidirectional, whereas they are bidirectional under rate coded input with spatial correlations only. Thus variable connectivity patterns in the brain could reflect different coding principles across brain areas; moreover simulations suggest that plasticity is surprisingly fast. Keywords: Synaptic plasticity, STDP, LTP, LTD, Voltage, Spike. Introduction: Experience-dependent changes in receptive fields [1-2] or in learned behavior relate to changes in synaptic strength. Electrophysiological measurements of functional connectivity patterns in slices of neural tissue [3-4] or anatomical connectivity measures [5] can only present a snapshot of the momentary connectivity, which may change over time [6].

Homeostatic Synaptic Plasticity: Local and Global Mechanisms for Stabilizing Neuronal Function

Downloaded from http://cshperspectives.cshlp.org/ on September 25, 2021 - Published by Cold Spring Harbor Laboratory Press. Homeostatic Synaptic Plasticity: Local and Global Mechanisms for Stabilizing Neuronal Function. Gina Turrigiano, Department of Biology and Center for Behavioral Genomics, Brandeis University, Waltham, Massachusetts 02493. Correspondence: [email protected] Neural circuits must maintain stable function in the face of many plastic challenges, including changes in synapse number and strength, during learning and development. Recent work has shown that these destabilizing influences are counterbalanced by homeostatic plasticity mechanisms that act to stabilize neuronal and circuit activity. One such mechanism is synaptic scaling, which allows neurons to detect changes in their own firing rates through a set of calcium-dependent sensors that then regulate receptor trafficking to increase or decrease the accumulation of glutamate receptors at synaptic sites. Additional homeostatic mechanisms may allow local changes in synaptic activation to generate local synaptic adaptations, and network-wide changes in activity to generate network-wide adjustments in the balance between excitation and inhibition. The signaling pathways underlying these various forms of homeostatic plasticity are currently under intense scrutiny, and although dozens of molecular pathways have now been implicated in homeostatic plasticity, a clear picture of how homeostatic feedback is structured at the molecular level has not yet emerged. On a functional level, neuronal networks likely use this complex set of regulatory mechanisms to achieve homeostasis over a wide range of temporal and spatial scales.
More than 50 years ago, Walter Cannon marveled that “somehow the unstable stuff of which we are composed has learned the trick of maintaining stability” (Cannon 1932). [...]eter that is subject to homeostatic regulation. During development billions of neurons wire themselves up into complex networks and manage to reach a state where they can generate [...]
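The feedback loop described in the abstract, in which a neuron compares its own firing rate with a set point and scales its synapses up or down, can be caricatured as a multiplicative update. The following is a toy sketch only, not a model of the calcium-dependent signaling: the linear rate model, the name `scale_synapses`, and the values of `tau`, `dt`, and `steps` are assumptions chosen for illustration.

```python
def scale_synapses(weights, inputs, target_rate, tau=100.0, dt=1.0, steps=2000):
    """Multiplicatively scale all weights until the rate reaches the target.

    The firing rate is modeled (as an assumption) as a weighted sum of inputs.
    Every weight is multiplied by the same factor, so relative synaptic
    strengths are preserved while overall activity is stabilized.
    """
    w = list(weights)
    for _ in range(steps):
        rate = sum(wi * xi for wi, xi in zip(w, inputs))
        # Factor > 1 when the rate is below target, < 1 when above.
        factor = 1.0 + dt * (target_rate - rate) / (tau * target_rate)
        w = [wi * factor for wi in w]
    return w


scaled = scale_synapses([0.2, 0.4, 0.8], inputs=[5.0, 5.0, 5.0], target_rate=5.0)
final_rate = sum(wi * xi for wi, xi in zip(scaled, [5.0, 5.0, 5.0]))
```

Because the update is multiplicative, the ratio between any two weights stays the same, which is the property usually associated with synaptic scaling.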

Neural Coding and Integrate-And-Fire Model

Introduction to computational neuroscience. Doctoral school, Oct-Nov 2006. Wulfram Gerstner, http://diwww.epfl.ch/w3mantra/. Swiss Federal Institute of Technology Lausanne (Ecole Polytechnique Fédérale de Lausanne), EPFL, Laboratory of Computational Neuroscience, LCN, CH 1015 Lausanne. [Figure: cortex schematic labeled visual cortex, association cortex, motor cortex, to motor output; labels: 10 000 neurons, 3 km wires, 1mm; signal: action potential (spike).] [Figure: Computational Neuroscience, levels from behavior through neurons and signals (computational model) to molecules and ion channels.] Hodgkin-Huxley type models: action potential, -70mV, Na+, K+, Ca2+, ions/proteins. Integrate-and-fire type models: spike emission, spike reception (indices i, j); spikes are events; threshold; spike/reset/refractoriness. Models of synaptic plasticity: pre, post, synapse (indices i, j); levels from behavior through neurons and synapses (computational model) to molecules and ion channels. Introduction to computational neuroscience. Background: What is brain-style computation? Brain vs. computer. Lecture 1: Passive membrane and Integrate-and-Fire model. Lecture 2: Hodgkin-Huxley models (detailed models). Lecture 3: Two-dimensional models (FitzHugh Nagumo). Lecture 4: synaptic plasticity. Lecture 5: noise, network dynamics, associative memory. Systems for computing and information processing: brain vs. computer. [Slide labels: memory, CPU, input; tasks: slow, mathematical, fast, $5\cos(7\pi/5)$; distributed architecture.]

Eligibility Traces and Three-Factor Rules of Synaptic Plasticity

Technische Universität München, ENB Elite Master Program Neuroengineering (MSNE). Invited Presentation: Prof. Dr. Wulfram Gerstner, Ecole polytechnique fédérale de Lausanne (EPFL). Eligibility traces and three-factor rules of synaptic plasticity. Abstract: Hebbian plasticity combines two factors: presynaptic activity must occur together with some postsynaptic variable (spikes, voltage deflection, calcium elevation ...). In three-factor learning rules the combination of the two Hebbian factors is not sufficient, but leaves a trace at the synapses (eligibility trace) which decays over a few seconds; only if a third factor (neuromodulator signal) is present, either simultaneously or within a short delay, is the actual change of the synapse via long-term plasticity triggered. After a review of classic theories and recent evidence of plasticity traces from plasticity experiments in rodents, I will discuss two studies from my own lab: the first one is a modeling study of reward-based learning with spiking neurons using an actor-critic architecture; the second one is a joint theory-experimental study showing evidence for eligibility traces in human behavior and pupillometry. Extensions from reward-based learning to surprise-based learning will be indicated. Biography: Wulfram Gerstner is Director of the Laboratory of Computational Neuroscience LCN at the EPFL. He studied physics at the universities of Tübingen and Munich and received a PhD from the Technical University of Munich. His research in computational neuroscience concentrates on models of spiking neurons and spike-timing dependent plasticity, on the problem of neuronal coding in single neurons and populations, as well as on the role of spatial representation for navigation of rat-like autonomous agents.
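The three-factor scheme in the abstract (Hebbian coincidence sets a decaying eligibility trace, and a later neuromodulator signal converts the trace into a weight change) can be sketched as a discrete-time loop. This is a minimal illustration under assumptions, not the model from the talk: the name `three_factor_update`, the exponential trace with time constant `tau_e` of 2 s, the step size `dt`, and the learning rate `lr` are all hypothetical choices.

```python
import math


def three_factor_update(pre, post, modulator, dt=0.1, tau_e=2.0, lr=0.5):
    """Return the total weight change for three aligned activity sequences.

    pre, post: presynaptic and postsynaptic activity per time step.
    modulator: third factor (e.g. a reward-like signal) per time step.
    """
    trace = 0.0
    weight_change = 0.0
    decay = math.exp(-dt / tau_e)  # trace fades over a few seconds
    for x, y, m in zip(pre, post, modulator):
        trace = trace * decay + x * y      # Hebbian coincidence tags the synapse
        weight_change += lr * m * trace    # no change unless the modulator is present
    return weight_change


steps = 20
pre = [1.0] + [0.0] * (steps - 1)    # coincidence at t = 0
post = [1.0] + [0.0] * (steps - 1)
late_reward = [0.0] * steps
late_reward[10] = 1.0                # modulator arrives 1 s later
dw = three_factor_update(pre, post, late_reward)
```

In this sketch a coincidence with no modulator leaves the weight unchanged, and a modulator that arrives before any coincidence has nothing to act on.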

Burst-Dependent Synaptic Plasticity Can Coordinate Learning in Hierarchical Circuits

bioRxiv preprint doi: https://doi.org/10.1101/2020.03.30.015511; this version posted September 14, 2020. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Burst-dependent synaptic plasticity can coordinate learning in hierarchical circuits. Alexandre Payeur (1,•), Jordan Guerguiev (2,3,•), Friedemann Zenke (4), Blake A. Richards (5,6,7,8,*,•), and Richard Naud (1,9,*,•). 1. uOttawa Brain and Mind Institute, Centre for Neural Dynamics, Department of Cellular and Molecular Medicine, University of Ottawa, Ottawa, ON, Canada. 2. Department of Biological Sciences, University of Toronto Scarborough, Toronto, ON, Canada. 3. Department of Cell and Systems Biology, University of Toronto, Toronto, ON, Canada. 4. Friedrich Miescher Institute for Biomedical Research, Basel, Switzerland. 5. Mila, Montréal, QC, Canada. 6. Department of Neurology and Neurosurgery, McGill University, Montréal, QC, Canada. 7. School of Computer Science, McGill University, Montréal, QC, Canada. 8. Learning in Machines and Brains Program, Canadian Institute for Advanced Research, Toronto, ON, Canada. 9. Department of Physics, University of Ottawa, Ottawa, ON, Canada. * Corresponding authors, email: [email protected], [email protected]. • Equal contributions. Abstract: Synaptic plasticity is believed to be a key physiological mechanism for learning. It is well-established that it depends on pre- and postsynaptic activity.

Tumor Necrosis Factor (TNF)-Receptor 1 and 2 Mediate Homeostatic Synaptic Plasticity of Denervated Mouse Dentate Granule Cells

www.nature.com/scientificreports OPEN. Tumor necrosis factor (TNF)-receptor 1 and 2 mediate homeostatic synaptic plasticity of denervated mouse dentate granule cells. Received: 04 March 2015. Accepted: 06 July 2015. Published: 06 August 2015. Denise Becker†, Thomas Deller & Andreas Vlachos. Neurological diseases are often accompanied by neuronal cell death and subsequent deafferentation of connected brain regions. To study functional changes after denervation we generated entorhino-hippocampal slice cultures, transected the entorhinal pathway, and denervated dentate granule cells in vitro. Our previous work revealed that partially denervated neurons respond to the loss of input with a compensatory, i.e., homeostatic, increase in their excitatory synaptic strength. TNFα maintains this denervation-induced homeostatic strengthening of excitatory synapses. Here, we used pharmacological approaches and mouse genetics to assess the role of TNF-receptor 1 and 2 in lesion-induced excitatory synaptic strengthening. Our experiments disclose that both TNF-receptors are involved in the regulation of denervation-induced synaptic plasticity. In line with this result TNF-receptor 1 and 2 mRNA-levels were upregulated after deafferentation in vitro. These findings implicate TNF-receptor signaling cascades in the regulation of homeostatic plasticity of denervated networks and suggest an important role for TNFα-signaling in the course of neurological diseases accompanied by deafferentation. Homeostatic control of cortical excitability, connectivity and plasticity is considered essential for normal brain function [1–3]. Work from the past years has unraveled a wealth of information on compensatory mechanisms acting in the brain to keep the activity in neuronal networks within a physiological range [4]. Among the best studied mechanisms is homeostatic synaptic plasticity [5–8], which adjusts synaptic strength to changes in network activity in a compensatory manner.

Robust Rhythmogenesis Via Spike Timing Dependent Plasticity

bioRxiv preprint doi: https://doi.org/10.1101/2020.07.23.217026; this version posted March 31, 2021. The copyright holder for this preprint (which was not certified by peer review) is the author/funder, who has granted bioRxiv a license to display the preprint in perpetuity. It is made available under a CC-BY-NC-ND 4.0 International license. Robust Rhythmogenesis via Spike Timing Dependent Plasticity. Gabi Socolovsky (1,2,*) and Maoz Shamir (1,2,3). 1. Department of Physics, Faculty of Natural Sciences; 2. Zlotowski Center for Neuroscience; 3. Department of Physiology and Cell Biology, Faculty of Health Sciences; Ben-Gurion University of the Negev, Be'er-Sheva, Israel. (Dated: March 31, 2021) Rhythmic activity has been observed in numerous animal species ranging from insects to humans, and in relation to a wide range of cognitive tasks. Various experimental and theoretical studies have investigated rhythmic activity. The theoretical efforts have mainly been focused on the neuronal dynamics, under the assumption that network connectivity satisfies certain fine-tuning conditions required to generate oscillations. However, it remains unclear how this fine tuning is achieved. Here we investigated the hypothesis that spike timing dependent plasticity (STDP) can provide the underlying mechanism for tuning synaptic connectivity to generate rhythmic activity. We addressed this question in a modeling study. We examined STDP dynamics in the framework of a network of excitatory and inhibitory neuronal populations that has been suggested to underlie the generation of oscillations in the gamma range. Mean field Fokker Planck equations for the synaptic weights dynamics are derived in the limit of slow learning. We drew on this approximation to determine which types of STDP rules drive the system to exhibit rhythmic activity, and demonstrate how the parameters that characterize the plasticity rule govern the rhythmic activity.

Towards Biologically Plausible Gradient Descent by Jordan

Towards biologically plausible gradient descent, by Jordan Guerguiev. A thesis submitted in conformity with the requirements for the degree of Doctor of Philosophy, Graduate Department of Cell and Systems Biology, University of Toronto. © Copyright 2021 by Jordan Guerguiev. Abstract: Synaptic plasticity is the primary physiological mechanism underlying learning in the brain. It is dependent on pre- and post-synaptic neuronal activities, and can be mediated by neuromodulatory signals. However, to date, computational models of learning that are based on pre- and post-synaptic activity and/or global neuromodulatory reward signals for plasticity have not been able to learn complex tasks that animals are capable of. In the machine learning field, neural network models with many layers of computations trained using gradient descent have been highly successful in learning difficult tasks with near-human level performance. To date, it remains unclear how gradient descent could be implemented in neural circuits with many layers of synaptic connections. The overarching goal of this thesis is to develop theories for how the unique properties of neurons can be leveraged to enable gradient descent in deep circuits and allow them to learn complex tasks. The work in this thesis is divided into three projects. The first project demonstrates that networks of cortical pyramidal neurons, which have segregated apical dendrites and exhibit bursting behavior driven by dendritic plateau potentials, can in theory leverage these physiological properties to approximate gradient descent through multiple layers of synaptic connections.