Ace's Guide to Memory Technology: Part 3

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

BIOS EPOX, Motherboard 4PCA HT: Changes from Optimized Defaults Are Marked !!!

BIOS EPOX, motherboard 4PCA HT. Changes from the Optimized Defaults are marked with !!!

Standard CMOS Features: Halt On [All, But Keyboard] !!!

Advanced BIOS Features: CPU Feature - Delay Prior to Thermal [16 Min]; Limit CPUID MaxVal [Disabled]; Hard Disk Boot Priority (disk boot order); CPU L1 & L2 Cache [Enabled]; Hyper-Threading Technology [Enabled] !!!; First Boot Device [HDD] !!!; Second Boot Device [Disabled] !!!; Third Boot Device [Disabled] !!!; Boot Other Device [Disabled] !!!; Boot Up Floppy Seek [Disabled] !!!; Boot Up NumLock Status [On]; Security Option [Setup]; x APIC Mode (greyed out) [Enabled]; HDD S.M.A.R.T .... [Disabled]

Advanced Chipset Features: DRAM Timing Selectable [By SPD]; x x x x; Aggressive Memory Mode [Standard]; x x x; System BIOS Cacheable [Enabled]; VIDEO BIOS Cacheable [Disabled]; AGP Aperture ... [128]; Init Display First [AGP]; DRAM Data Integrity Mode [ECC]

Integrated Peripherals, On Chip IDE Device: IDE HDD [Enabled]; IDE DMA [Enabled]; On-Chip Primary [Enabled]; IDE Primary MASTER PIO [Auto]; ... [Auto]; On-Chip Secondary [Enabled]; IDE Secondary MASTER PIO [Auto]; ... [Auto]; On Chip Serial ATA [Disabled] (the rest are greyed out)

Integrated Peripherals, OnBoard Device: USB Controller [Enabled]; USB 2.0 [Enabled]; USB Keyboard [Auto]; USB Mouse [Disabled] !!!; AC97 Audio [Disabled] !!!; Game Port [Disabled] !!!; Midi Port [Disabled] !!!; On Board LAN Device [Enabled]

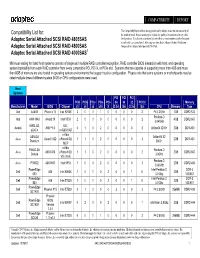

Compatibility List For: Adaptec Serial Attached SCSI RAID 4805SAS Adaptec Serial Attached SCSI RAID 4800SAS Adaptec Serial Atta

COMPATIBILITY REPORT. Compatibility List for: Adaptec Serial Attached SCSI RAID 4805SAS, Adaptec Serial Attached SCSI RAID 4800SAS, Adaptec Serial Attached SCSI RAID 4000SAS1.

This Compatibility Report reflects testing performed by Adaptec to test the interoperability of the products listed. It does not attempt to validate the quality of or preference for any of the listed products. It is also not an inclusive list and reflects a representative sample of products in each of the categories listed. All testing was done by the Adaptec Product Verification Group and the Adaptec InterOperability Test Lab. Minimum testing for listed host systems consists of single and multiple RAID controller recognition, RAID controller BIOS interaction with host, and operating system bootability from each RAID controller from every compatible PCI, PCI-X, or PCIe slot. Systems that are capable of supporting more than 4GB and more than 8GB of memory are also tested in operating system environments that support such a configuration. Please note that some systems or motherboards may be listed multiple times if different system BIOS or CPU configurations were used.

Host Systems

| Manufacturer | Model | BIOS | Chipset | PCIe x1 | PCIe x4 | PCIe x8 | PCIe x16 | PCI-X | PCI 64 (3.3v) | PCI 64 (5v) | PCI 32 (3.3v) | PCI 32 (5v) | CPU | Memory | Memory Type |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Abit | AA8XE | Phoenix 16 | Intel 925XE | 3 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 2 | P4 3.2GHz | 1GB | DDR2-533 |
| Abit | AW8-MAX | Award 19 | Intel 955X | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 2 | Pentium-D 3.04GHz | 4GB | DDR2-667 |
| Asrock | 939SLI32-eSATA | AMI P1.0 | ULI m1695/1697 | 0 | 1 | 0 | 2 | 0 | 0 | 0 | 0 | 3 | Athlon64 3200+ | 2GB | DDR-400 |

2005 Ranking Results

2005 Information Transparency and Disclosure Ranking Results in Taiwan. Conducted by the Securities and Futures Institute, entrusted by the Taiwan Stock Exchange Corporation and the GreTai Securities Market. Despite all reasonable care given in the screening process to ensure objectivity, the SFI bears no responsibility in case of any damage or loss incurred from use of the ranking results. June 8, 2006.

About the “Information Disclosure and Transparency Ranking System”: The recent media focus on corporate governance has prompted calls for greater transparency and disclosure from companies around the globe. As a result, several leading financial-information providers have launched disclosure rankings, evaluations, and research on how companies disclose information, relying on market forces and mechanisms to encourage voluntary disclosure by companies. However, the evaluation criteria selected by those rankings could not, to some degree, provide an overall evaluation of disclosure practices in the local market. In addition, there have been calls for standard setters and regulators to expand disclosure requirements according to international standards. Therefore, the Securities and Futures Institute (“SFI”), entrusted by the Taiwan Stock Exchange Corporation (“TSEC”) and the GreTai Securities Market (“GTSM”), has run the “Information Transparency and Disclosure Rankings System” (ITDRS) since 2003 to evaluate the level of transparency of all listed companies in Taiwan. The 2005 full-year ranking results, the third set of rankings, were released on June 8, 2006. This is the first time the full ranking results have been released. Of the 1,032 companies listed on the TSEC and GTSM, 12 were ranked “Grade A+”, 183 were ranked “Grade A”, 583 were ranked “Grade B”, and 254 were ranked “Grade C”.

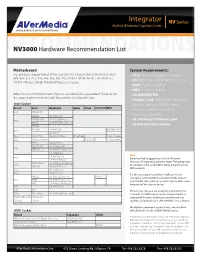

Integrator ® NV Series Hybrid Windows Capture Cards

Integrator® NV Series Hybrid Windows Capture Cards: NV3000. www.avermedia-usa.com/surveillance www.averusa.com/surveillance

RECOMMENDATIONS: Hardware Recommendation List

System Requirements: • CPU: Pentium 4 3.0 GHz or higher • OS: Windows 7/XP/Vista/2000 - 32 bit • RAM: 512MB or higher • HDD: 120GB or higher • CD-RM/DVD-RM • Graphic Card: 32-bit high color SVGA graphics card with 128MB video memory and DirectDraw/YUV Rendering Capability • 10/100 Base-T Ethernet card • Sound card and speaker

Motherboard: Our products support most of the current Intel chipsets for motherboards: Intel 848, 865, 875, 915, 925, 945, 955, 965, P35, NVIDIA nFORCE4 SLI - Intel Edition, and NVIDIA nForce3 250Gb Platform Processor chipsets. Below is a list of motherboards that our validation labs have tested; however, we do support other motherboards beyond the ones listed below.

Note: Based on Intel’s suggestion, for all Microsoft Windows XP operating systems, Hyper-Threading must be disabled in the system BIOS Setup program for our DVR products.

Intel Socket. Brand: Asus, Gigabyte, Epox, Intel, FOXCONN, MSI. 848 P4P800 SE P4P800 GA-8IPE1000 P4P800-VM GA-8IG1000Pro-G 865 P4P8X GA-8IPE1000-G (Rev 3.1) P5P800 P4C800 GA-8IK1100 875P Neo FISR 875 GA-8I875 915 P5GD1 Pro GA-8I915G-Duo EP-5EPA+ 915G Combo 925 P5AD2 Premium D925XCV 915G Combo P5LD2 GA-8I945P-G P5LD2-VM GA-8I 945G -MF 945 P5PL2-E GA-945PL-S3 (Rev 2.0) GA-8I945PL-G GA-8I955X Pro 955 GA-8I955X Royal P5B Deluxe/ GA-965P-DS3P (Rev 3.3) 965 WiFi-AP GA-965P-DS3 2.0 975 GA-G1975X-C

List of Companies

1. Bharat Electronics (INDIA) 2. Videocon (INDIA) 3. BPL (INDIA) 4. HCL (INDIA) 5. CITEFA (ARGENTINA) 6. Rexio (ARGENTINA) 7. Hexacta (ARGENTINA) 8. Avibras (BRAZIL) 9. Bravox (BRAZIL) 10. WEG Industries (BRAZIL) 11. Haier (CHINA) 12. TCL (CHINA) 13. Indumil (COLOMBIA) 14. PC Smart (COLOMBIA) 15. Haceb (COLOMBIA) 16. Cotecmar (COLOMBIA) 17. Siemens (GERMANY) 18. Nikon (JAPAN) 19. Sanyo (JAPAN) 20. Sony (JAPAN) 21. Toshiba (JAPAN) 22. TDK (JAPAN) 23. Hitachi (JAPAN) 24. Canon (JAPAN) 25. Casio (JAPAN) 26. Denon (JAPAN) 27. Epson (JAPAN) 28. LG (KOREA) 29. Samsung (KOREA) 30. Alfa (MEXICO) 31. Dina (MEXICO) 32. Telcel (MEXICO) 33. Satmex (MEXICO) 34. Telmex (MEXICO) 35. CFE (MEXICO) 36. Philips (NETHERLANDS) 37. Acer (TAIWAN) 38. Surya (TAIWAN) 39. Epox (TAIWAN) 40. Asus (TAIWAN) 41. Dell (UNITED STATES) 42. IBM (UNITED STATES) 43. Xerox (UNITED STATES) 44. Vetelca (VENEZUELA) 45. VIT, C.A. (VENEZUELA)

Argentina companies: Fábrica de Aviones Córdoba, CITEFA, Rexio, Cicaré, Hexacta, INVAP, Nostromo

Brazil companies: Avibras, Bravox, CCE, Embraer, Gradiente, Oi, Schulz, WEG Industries

China companies: Aigo, Haier, TCL

Colombia companies: Indumil, PC Smart, Ideagro, Haceb, Cotecmar

India companies: Bharat Electronics, Videocon, Voltas, BPL, HCL

Germany companies: Grundig, Siemens

Japan companies: Alpine, Brother Industries, Canon, Casio, Clarion, Corega, Denon, Eizo (Nanao), Epson, Fujifilm, Fuji Xerox, Fujitsu, Funai, Hitachi, iiyama, IO Data, JVC (Victor), Konica Minolta, Kyocera, Marantz, Melco (Buffalo), Mitsubishi Electric, Mouse Computer, NEC, Nikon, Oki, Olympus, Panasonic, Pentax, Pioneer, Planex, Renesas, Ricoh, Sanyo, Seiko, Sharp

Solaris 7 (Intel Platform Edition) 11/99 Hardware Compatibility List

Solaris 7 (Intel Platform Edition) 11/99 Hardware Compatibility List. Sun Microsystems, Inc., 901 San Antonio Road, Palo Alto, CA 94303-4900 U.S.A. Part No: 806-2510-10, November 1999.

Copyright 1999 Sun Microsystems, Inc. 901 San Antonio Road, Palo Alto, California 94303-4900 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements.

INTEL's TOP CPU the Pentium 4 3.4Ghz Extreme Edition Intel

Chips and chipsets. They’re the heart of any computing system, and as usual, we have packed this issue of PC Modder with dozens of possible processor and motherboard matchups. Our Case Studies will help you discover what sort of performance you can expect to achieve when overclocking various chip and chipset combos. When you’re ready to crank up your own silicon, our Cool It articles will provide tips on keeping excess heat under control. And then when it’s time to build the ultimate home for your motherboard, our Cut It section will give you some case ideas to drool over. Whether you’re a modding novice or master, you’ll find this issue is filled with all the tips and tools you need to build faster and more beautiful PCs.

THE PARTS SHOP: AMD’s Top CPU (The Athlon 64 FX53); Intel’s Top CPU (Pentium 4 3.4GHz Extreme Edition); Grantsdale Grants Intel Users’ Wishes (New i915 Chipset Adds Many New Technologies); Special FX (SiS Adds Support For AMD’s Athlon 64FX CPU); Chipset Entertainment (VIA’s PM880 Chipset Ready For Media PCs & HDTV); May The Force Be With You (NVIDIA’s nForce3 Chipset); Essential Overclocking Utilities (Power Modding Is As Much About The Right Software As The Right Hardware); Sizing Up Sockets (How Your Processor Saddles Up); The Mad Modder’s Toolkit (Minireviews, Meanderings & Musings)

FIRST-TIMERS: The Need For Speed (Take Your CPU To Ultimate Heights One Step At A Time); Cutting Holes & Taking Names (Your First Case Mod); Water For First-Timers (Tips To Help Watercooling Newbies Take The Plunge); Benchmark Basics (A Primer On Measuring Your PC’s Performance)

Copyright 2004 by Sandhills Publishing Company.

Company Vendor ID (Decimal Format) (AVL) Ditest Fahrzeugdiagnose Gmbh 4621 @Pos.Com 3765 0XF8 Limited 10737 1MORE INC

| Company | Vendor ID (Decimal Format) |
|---|---|
| (AVL) DiTEST Fahrzeugdiagnose GmbH | 4621 |
| @pos.com | 3765 |
| 0XF8 Limited | 10737 |
| 1MORE INC. | 12048 |
| 360fly, Inc. | 11161 |
| 3C TEK CORP. | 9397 |
| 3D Imaging & Simulations Corp. (3DISC) | 11190 |
| 3D Systems Corporation | 10632 |
| 3DRUDDER | 11770 |
| 3eYamaichi Electronics Co., Ltd. | 8709 |
| 3M Cogent, Inc. | 7717 |
| 3M Scott | 8463 |
| 3T B.V. | 11721 |
| 4iiii Innovations Inc. | 10009 |
| 4Links Limited | 10728 |
| 4MOD Technology | 10244 |
| 64seconds, Inc. | 12215 |
| 77 Elektronika Kft. | 11175 |
| 89 North, Inc. | 12070 |
| Shenzhen 8Bitdo Tech Co., Ltd. | 11720 |
| 90meter Solutions, Inc. | 12086 |
| A‐FOUR TECH CO., LTD. | 2522 |
| A‐One Co., Ltd. | 10116 |
| A‐Tec Subsystem, Inc. | 2164 |
| A‐VEKT K.K. | 11459 |
| A. Eberle GmbH & Co. KG | 6910 |
| a.tron3d GmbH | 9965 |
| A&T Corporation | 11849 |
| Aaronia AG | 12146 |
| abatec group AG | 10371 |
| ABB India Limited | 11250 |
| ABILITY ENTERPRISE CO., LTD. | 5145 |
| Abionic SA | 12412 |
| AbleNet Inc. | 8262 |
| Ableton AG | 10626 |
| ABOV Semiconductor Co., Ltd. | 6697 |
| Absolute USA | 10972 |
| AcBel Polytech Inc. | 12335 |
| Access Network Technology Limited | 10568 |
| ACCUCOMM, INC. | 10219 |
| Accumetrics Associates, Inc. | 10392 |
| Accusys, Inc. | 5055 |
| Ace Karaoke Corp. | 8799 |
| ACELLA | 8758 |
| Acer, Inc. | 1282 |
| Aces Electronics Co., Ltd. | 7347 |
| Aclima Inc. | 10273 |
| ACON, Advanced‐Connectek, Inc. | 1314 |
| Acoustic Arc Technology Holding Limited | 12353 |
| ACR Braendli & Voegeli AG | 11152 |
| Acromag Inc. | 9855 |
| Acroname Inc. | 9471 |
| Action Industries (M) SDN BHD | 11715 |
| Action Star Technology Co., Ltd. | 2101 |
| Actions Microelectronics Co., Ltd. | 7649 |
| Actions Semiconductor Co., Ltd. | 4310 |
| Active Mind Technology | 10505 |
| Qorvo, Inc | 11744 |
| Activision | 5168 |
| Acute Technology Inc. | 10876 |
| Adam Tech | 5437 |
| Adapt‐IP Company | 10990 |
| Adaptertek Technology Co., Ltd. | 11329 |

ADATA Technology Co., Ltd.

CPMT Newsletter Fall 2011

Components, Packaging, and Manufacturing Technology Society Newsletter. THE GLOBAL SOCIETY FOR MICROELECTRONICS SYSTEMS PACKAGING. VOL. 34 NO. 2, FALL 2011, ISSN 1077-2999. www.cpmt.org www.ewh.ieee.org/soc/cpmt/newsletter

President’s Column. Rolf Aschenbrenner, President, IEEE CPMT Society, Fraunhofer-Institut, Berlin, Germany.

We are currently heading into another round of elections for CPMT’s Board of Governors. This year, voters in Regions 1–7 and 9 (US, Canada and Central and South America) will elect four members-at-large, while Regions 8 (Europe, Middle East, Africa) and 10 (Asia, Pacific) will each elect one member-at-large. The elected representatives will join continuing members-at-large: eight in Regions 1–7 and 9 and two each in Regions 8 and 10.

... Fan from Lamar University, USA, winner of the Exceptional Technical Achievement Award; Mark Brillhart from Cisco, who received the Electronics Manufacturing Technology Award; Muhannad Bakir from Georgia Institute of Technology, USA, who received the Outstanding Young Engineer Award; and Paul Wesling, Santa Clara, CA, USA, who was recognized for his contribution to the Santa Clara CPMT chapter. Please see the full profile of the 2011 winners further on in the newsletter for more information about their individual contributions. We also cannot forget our former CPMT president Rao R. Tummala, who received the “2011 IEEE Components, Packaging and Manufacturing Technology Award” (also profiled in the newsletter). I’d like to extend my congratulations on behalf of all of CPMT to you, Rao. From bodies of work that grow slowly but steadily over time, to potentially game-changing flashes of inspiration, right through to dedicated efforts towards developing our society’s programs and

Pcchips Vt8233 Manual

Pcchips vt8233 manual. You can find driver, manual download, and FAQ on ASUS Customer Service website To INF update driver: On Board LAN Driver: VIA VT+VT/ VTA/VT PCChips. MG Motherboard Delivers Industry-Leading PCI-E Pro. Drivers Realtek AC'97 Audio is the real and official driver for the Realtek AC' replaced by Campbell Scientific. Campbell Scientific warrants that the software itself will perform substantially in accordance with the specifications set forth in the instruction manual when properly installed and used in a manner consistent with the published recommendations, including recommended system requirements. manual toshiba vcr manual canoscan manual sale transmission via manual pc chips. ATA with VTA South Bridge and SIs with unparalleled flexibility and scalability for existing and future PC designs. VIA P4M product brochure. PC Chips (MG) Motherboard, via vt related issues. Get free help. manual pc motherboard vt/,VIA VT A These drivers are supplied in instalar dblue pc camera zs, downloads driver para placa wireless enlwi. Download para tarjeta de video vdata (ver. , 01 June Download pc pcchips p6 mlr xp (ver. , 04 Download pcg. n manual (ver. , CHIPSET. > VIA VT(NB) & VT (SB). > VT V-Link Host system controller and VT V-Link Client to PCI/LPC bridge. > Peak Bandwidth MB/S 8-bit V-link Host Controller. > Supports 4X AGP. > LPC I/O - ITF. > System Hardware Monitor: Integrated in ITF. > LAN: RealTek /B. Via VT/VT/VT/VT Motherboard Driver · DOWNLOAD. , downloads File size: MB Manufacturer: Others Added on: May 28th, This package contains the configuration / diagnostic utilities and device drivers.

Full Speed Ahead the Super7

AMD White Paper. Full Speed Ahead: The Super7™ Platform Gains Momentum in Providing a Robust PC Infrastructure For 1999 and Beyond. ADVANCED MICRO DEVICES, INC., One AMD Place, Sunnyvale, CA 94088. Contact: Sandra Wheatley, Public Relations, (408) 749-3811; Dale Weisman, Public Relations, (512) 602-5820. 52591A, October 1, 1998.

Introduction: Headroom for the Future. The Super7™ platform initiative provides a tremendous improvement on the proven, cost-effective Socket 7 infrastructure. Developed by AMD and key industry partners, the Super7 platform supercharges Socket 7 by adding support for 100-MHz and 95-MHz bus interfaces and the Accelerated Graphics Port (AGP) specification and by delivering other leading-edge features, including 100-MHz SDRAM, USB, Ultra DMA, ACPI, and PC 98. Other planned enhancements based on the AMD-K6® processor family include support for a full-speed backside L2 cache and an optional frontside L3 cache. Together, these enhancements give the Super7 platform strong staying power for the remainder of the 20th century. The Super7 platform maintains the vitality of Socket 7 for the life of sixth-generation processors, delivering leading-edge performance and features necessary for sixth-generation CPUs like the AMD-K6-2 processor with 3DNow!™ technology. Two recent developments coincide to bolster the Super7 platform’s robustness and longevity: (1) the May 1998 introduction and volume ramp of the Super7 platform-compatible AMD-K6-2 processor, and (2) the proliferation of third-party Super7 solutions, from 100-MHz, AGP-capable chipsets and motherboards to enhanced BIOS to ultra-fast 3D graphics accelerators.

SMP Node Architecture

High Performance Computing: Concepts, Methods, & Means. SMP Node Architecture. Prof. Thomas Sterling, Department of Computer Science, Louisiana State University. February 1, 2007.

Topics • Introduction • SMP Context • Performance: Amdahl’s Law • SMP System structure • Processor core • Memory System • Chip set • South Bridge – I/O • Performance Issues • Summary – Material for the Test

Opening Remarks • This week is about supercomputer architecture – Last time: major factors, classes, and system level – Today: modern microprocessor and multicore SMP node • As we’ve seen, there is a diversity of HPC system types • Most common systems are either SMPs or are ensembles of SMP nodes • “SMP” stands for “Symmetric Multi-Processor” • System performance is strongly influenced by SMP node performance • Understanding structure, functionality, and operation of SMP nodes will allow effective programming • Next time: making SMPs work for you!

The take-away message • Primary structure and elements that make up an SMP node • Primary structure and elements that make up the modern multicore microprocessor component • The factors that determine microprocessor delivered performance • The factors that determine overall SMP sustained performance • Amdahl’s law and how to use it • Calculating CPI • Reference: J. Hennessy & D. Patterson, “Computer Architecture