Cognitive Robotics and HRI

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Current Advances in Cognitive Robotics Amir Aly, Sascha Griffiths, Francesca Stramandinoli

Towards Intelligent Social Robots: Current Advances in Cognitive Robotics
Amir Aly, Sascha Griffiths, Francesca Stramandinoli

To cite this version: Amir Aly, Sascha Griffiths, Francesca Stramandinoli. Towards Intelligent Social Robots: Current Advances in Cognitive Robotics. France. 2015. hal-01673866

HAL Id: hal-01673866
https://hal.archives-ouvertes.fr/hal-01673866
Submitted on 1 Jan 2018

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Proceedings of the Full Day Workshop "Towards Intelligent Social Robots: Current Advances in Cognitive Robotics", in conjunction with Humanoids 2015, South Korea, November 3, 2015
Amir Aly (ENSTA ParisTech, France), Sascha Griffiths (Queen Mary University, England), Francesca Stramandinoli (Italian Institute of Technology, Italy)

Towards Emerging Multimodal Cognitive Representations from Neural Self-Organization
German I. Parisi, Cornelius Weber and Stefan Wermter
Knowledge Technology Institute, Department of Informatics, University of Hamburg, Germany
fparisi,weber,[email protected]
http://www.informatik.uni-hamburg.de/WTM/

Abstract: The integration of multisensory information plays a crucial role in autonomous robotics. In this work, we investigate how robust multimodal representations can naturally develop in a self-organized manner from co-occurring multisensory inputs.

[...] processing of a huge amount of visual information to learn inherent spatiotemporal dependencies in the data. To tackle this issue, learning-based mechanisms have been typically used [...]

Psychological Aspect of Cognitive Robotics

Part VI: Psychological Aspect of Cognitive Robotics

Chapter 9. Robotic Action Control: On the Crossroads of Cognitive Psychology and Cognitive Robotics

Roy de Kleijn, George Kachergis, Bernhard Hommel
Leiden Institute for Brain and Cognition, Leiden University, The Netherlands

Contents
9.1 Early history of the fields
  9.1.1 History of cognitive psychology
  9.1.2 The computer analogy
  9.1.3 Early cognitive robots
9.2 Action control
  9.2.1 Introduction
  9.2.2 Feedforward and feedback control in humans
  9.2.3 Feedforward and feedback control in robots
  9.2.4 Robotic action planning
9.3 Acquisition of action control
  9.3.1 Introduction
  9.3.2 Human action-effect learning
    9.3.2.1 Traditional action-effect learning research
    9.3.2.2 Motor babbling
  9.3.3 Robotic action-effect learning
9.4 Directions for the future
  9.4.1 Introduction

(Narrow) Artificial Intelligence vs (General) Artificial Intelligence

Revista ESPACIOS, ISSN 0798 1015
Vol. 40 (Number 4) Year 2019. Page 3

Will machines ever rule the world? (¿Las máquinas dominarán el mundo?)

PEDROZA, Mauricio; VILLAMIZAR, Gustavo; MENDEZ, James
Received: 11/08/2018 • Approved: 16/12/2018 • Published 04/02/2019

Contents
1. Introduction
2. (Narrow) Artificial Intelligence vs (General) Artificial Intelligence
3. Machines designed as tools of man
4. Machines thought as similar to man
5. Conclusions
Acknowledgments
Bibliographic references

ABSTRACT: From ancient automatons to the latest technologies of robotics and supercomputing, the continued progress of mankind has led man to even question his future status as a dominant species. "Will machines ever rule the world?" or, more precisely: do we want machines to rule the world? Theoretical and technological challenges involved in this choice will be considered both under the conservative approach of "machines designed as tools of man" and in the scenario of "machines thought as similar to man".

Keywords: Artificial Intelligence, Cognitive Machines, Narrow Artificial Intelligence, Strong Artificial Intelligence, Artificial General Intelligence.

Cognitive Robotics

Chapter 24
Cognitive Robotics
Hector Levesque and Gerhard Lakemeyer
© 2006 Elsevier. All rights reserved.

This chapter is dedicated to the memory of Ray Reiter. It is also an overview of cognitive robotics, as we understand it to have been envisaged by him.¹ Of course, nobody can control the use of a term or the direction of research. We apologize in advance to those who feel that other approaches to cognitive robotics and related problems are inadequately represented here.

24.1 Introduction

In its most general form, we take cognitive robotics to be the study of the knowledge representation and reasoning problems faced by an autonomous robot (or agent) in a dynamic and incompletely known world. To quote from a manifesto by Levesque and Reiter [42]:

“Central to this effort is to develop an understanding of the relationship between the knowledge, the perception, and the action of such a robot. The sorts of questions we want to be able to answer are
• to execute a program, what information does a robot need to have at the outset vs. the information that it can acquire en route by perceptual means?
• what does the robot need to know about its environment vs. what need only be known by the designer?
• when should a robot use perception to find out if something is true as opposed to reasoning about what it knows was true in the past?
• when should the inner workings of an action be available to the robot for reasoning and when should the action be considered primitive or atomic?

¹ To the best of our knowledge, the term was first used publicly by Reiter at his lecture on receiving the IJCAI Award for Research Excellence in 1993.

Cognitive Robotics

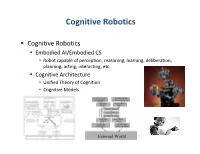

Cognitive Robotics
• Embodied AI / Embodied CS
• Robot capable of perception, reasoning, learning, deliberation, planning, acting, interacting, etc.
• Cognitive Architecture
• Unified Theory of Cognition
• Cognitive Models

Cognitive Systems and Robotics
• Since 2001: Cognitive Systems intensely funded by the EU
"Robots need to be more robust, context-aware and easy-to-use. Endowing them with advanced learning, cognitive and reasoning capabilities will help them adapt to changing situations, and to carry out tasks intelligently with people"

Research Areas
• Biorobotics: bio-inspired, biomimetic, etc.
• Enactive Robotics: dynamic interaction with the environment
• Developmental Robotics (Epigenetic): robot learns as a baby; incremental sensorimotor and cognitive ability [Piaget]
• Neuro-Robotics: models from cognitive neuroscience; prosthesis, wearable systems, BCI, etc.

Developmental Robotics
• iCub Platform
• Italian Institute of Technology
• EU project RobotCub: open source cognitive humanoid platf.

Developmental Robotics
• Cognitive Architecture: The procedural memory is a network of associations between action events and perception events. When an image is presented to the memory, if a previously stored image matches, the stored image is recalled; otherwise, the presented image is stored.

Affordance
• Affordance: property of an object or a feature of the immediate environment that indicates how to interface with that object or feature ("action possibilities" latent in the environment [Gibson 1966])
• “The affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill. The verb to afford is found in the dictionary, but the noun affordance is not.
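The recall-or-store rule stated in the cognitive architecture slide above (recall the stored image if the presented one matches, otherwise store it) can be sketched in a few lines. This is only an illustration under stated assumptions: the slides do not say how images are represented or matched, so the flat feature vectors, the cosine-similarity test, the `threshold` value, and the names `ImageMemory` and `present` are all hypothetical choices made here, not part of the iCub software.

```python
import math
from dataclasses import dataclass, field
from typing import List, Sequence, Tuple

# Assumption: an "image" is a flat tuple of floats (e.g. a feature vector).
Image = Tuple[float, ...]


def cosine_similarity(a: Image, b: Image) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("images must have the same length")
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)


@dataclass
class ImageMemory:
    """Hypothetical recall-or-store memory; the matching rule is an assumption."""
    threshold: float = 0.95  # assumed similarity needed to count as a match
    stored: List[Image] = field(default_factory=list)

    def present(self, image: Sequence[float]) -> Tuple[Image, bool]:
        """Return (image, recalled): the best stored match if one exists,
        otherwise store the presented image and return it."""
        candidate = tuple(float(x) for x in image)
        best = max(
            self.stored,
            key=lambda s: cosine_similarity(s, candidate),
            default=None,
        )
        if best is not None and cosine_similarity(best, candidate) >= self.threshold:
            return best, True  # a previously stored image matches: recall it
        self.stored.append(candidate)  # no match: store the presented image
        return candidate, False


if __name__ == "__main__":
    memory = ImageMemory()
    print(memory.present([1.0, 0.0]))    # first presentation: nothing to recall
    print(memory.present([0.99, 0.01]))  # near-duplicate of the first image
    print(memory.present([0.0, 1.0]))    # dissimilar image
```

The threshold controls how readily a new image is treated as a repeat of an old one; a real system would likely use a learned or perceptual similarity measure rather than raw cosine similarity.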

Report of Comest on Robotics Ethics

SHS/YES/COMEST-10/17/2 REV.
Paris, 14 September 2017
Original: English

REPORT OF COMEST ON ROBOTICS ETHICS

Within the framework of its work programme for 2016-2017, COMEST decided to address the topic of robotics ethics building on its previous reflection on ethical issues related to modern robotics, as well as the ethics of nanotechnologies and converging technologies.

At the 9th (Ordinary) Session of COMEST in September 2015, the Commission established a Working Group to develop an initial reflection on this topic. The COMEST Working Group met in Paris in May 2016 to define the structure and content of a preliminary draft report, which was discussed during the 9th Extraordinary Session of COMEST in September 2016. At that session, the content of the preliminary draft report was further refined and expanded, and the Working Group continued its work through email exchanges. The COMEST Working Group then met in Quebec in March 2017 to further develop its text. A revised text in the form of a draft report was submitted to COMEST and the IBC in June 2017 for comments. The draft report was then revised based on the comments received. The final draft of the report was further discussed and revised during the 10th (Ordinary) Session of COMEST, and was adopted by the Commission on 14 September 2017.

This document does not pretend to be exhaustive and does not necessarily represent the views of the Member States of UNESCO.

EXECUTIVE SUMMARY
I. INTRODUCTION
II. WHAT IS A ROBOT?
II.1. The complexity of defining a robot
II.2.

Applications of Cognitive Robotics in Disassembly of Products

Applications of Cognitive Robotics in Disassembly of Products
By Supachai Vongbunyong, B. Eng., M. Eng.

A thesis in fulfilment of the requirements for the degree of Doctor of Philosophy
School of Mechanical and Manufacturing Engineering
The University of New South Wales
July 2013

THE UNIVERSITY OF NEW SOUTH WALES
Thesis/Dissertation Sheet
Surname or Family name: Vongbunyong
First name: Supachai
Other name/s: -
Abbreviation for degree as given in the University calendar: PhD
School: Mechanical and Manufacturing Engineering
Faculty: Engineering
Title: Applications of cognitive robotics in disassembly of products

Abstract (350 words maximum):
Disassembly automation has encountered difficulties in the disassembly process due to the variability in the planning and operation levels that results from uncertainties in the quality and quantity of the products returned. Thus, the concept of cognitive robotics is implemented in the vision-based and (semi-)destructive disassembly automation for end-of-life electronic products to handle this variability. The system consists of three operating modules, i.e. cognitive robotic module, vision system module, and disassembly operation module. First, the cognitive robotic module controls the system according to its behaviour, influenced by four cognitive functions: reasoning, execution monitoring, learning, and revision. The cognitive robotic agent uses rule-based reasoning to schedule the actions according to the existing knowledge and sensed information from the physical world in regard to the disassembly state. Execution monitoring is used to determine accomplishment of the process. The significant information of the current process is learned and will be implemented in subsequent processes. Learning also occurs in the form of demonstration conducted by the expert user via the graphic user interface to overcome unresolved complex problems.

Cognitive Robotics and Control

Electronics, Editorial
Cognitive Robotics and Control
Cecilio Angulo
IDEAI-UPC, Universitat Politècnica de Catalunya, 08034 Barcelona, Spain; [email protected]; Tel.: +34-9341-34021
Received: 29 April 2020; Accepted: 1 May 2020; Published: 6 May 2020

1. Introduction

Robotics and control are both research and application domains that have been frequently engineered with the use of interdisciplinary approaches like cybernetics [1]. Cognition is a particular concept of this approach, abstracted from the context of living organisms to that of artificial devices, about knowledge acquisition and understanding through thought, experience, and the senses [2]. Cognitive robotics and control refer to knowledge processing as much as knowledge generation from problem understanding, leading to special forms of architectures enabling systems to behave in an autonomous way [3–5].

The main aim of this special issue is to highlight emerging applications and address recent breakthroughs in the domain of cognitive robotics and control and related areas. Procedures, algorithms, architectures and implementations for reasoning, problem solving or decision making in the domain of robotics and control are elements under consideration.

2. The Present Issue

This special issue consists of eight papers covering important topics in the field of cognitive robotics and control, including robotic platforms in interactive scenarios such as operating rooms, trajectories learning and optimisation from nature-inspired and computational cognition approaches, and hardware developments for motor control. The contents of these papers are introduced here.

Robotic platforms are taking their place in the operating room, providing stability and accuracy during surgery. Most of these platforms are tele-operated, as in Reference [6], where the learning from demonstration (LfD) approach is extended for object tele-manipulation.

Social Robots Social and Family Companion Robots

Advanced Manufacturing Technology (TechVision)
Social Robots: Social and Family Companion Robots
D718-TV, March 04, 2016

Contents
• Innovation–Social Robots
• Social Home Robot
• Family Companion Robot
• Leonardo–A Socially Interactive Robot
• Keepon® Pro
• Strategic Insights
• Key Patents
• Industry Interactions

Innovations in Social Robots

Social Home Robot: Jibo Inc.–Jibo

Tech. Profile: Jibo is a social interactive home robot for family and personal assistance. Jibo is a static robot but is capable of seeing, hearing, speaking, helping, learning and engaging in various household chores and roles such as delivering information, controlling the environment, capturing pictures, providing an educative medium for children, browsing the Web and providing relevant information, and managing and co-ordinating family activities.

Wide-scale Adoption: Pre-orders for Jibo have been very active from early 2015. The company is planning on shipping from April 2016. This novel innovation is expected to be commercialized over the relative near term.

Market Opportunity:
• Robotics
• Sensors
• Social/Commercial robots
• Space
• Defence and military
• Hospitality and tourism

Technology Convergence: Robotics is actually a convergence of major technologies such as sensors, microelectronics and so on. This novel technology development has opportunities to be widely adopted for different applications in various industries mentioned in market opportunities. Robotics products such as Jibo have potential to impact on the commercial electronics market.

Competing Aspects: Jibo has a touch sensitive aluminum body which has the capability to rotate in all three axes and face the person who is addressing it. The system runs on a Linux platform and ARM processor and the data collected by the system is directly stored in the Jibo

[Figure: Technology Readiness Level chart, scale 1 to 9]

Towards Cognitive Robotics : Robotics, Biology and Developmental

Towards cognitive robotics: robotics, biology and developmental psychology
LEE, Mark, NEHMZOW, Ulrich and RODRIGUES, Marcos <http://orcid.org/0000-0002-6083-1303>

Available from Sheffield Hallam University Research Archive (SHURA) at: http://shura.shu.ac.uk/5282/

This document is the author deposited version. You are advised to consult the publisher's version if you wish to cite from it.

Published version: LEE, Mark, NEHMZOW, Ulrich and RODRIGUES, Marcos (2012). Towards cognitive robotics: robotics, biology and developmental psychology. In: MCFARLAND, David, STENNING, Keith, MCGONIGLE, Maggie and HENDRY, Derek, (eds.) The complex mind: an interdisciplinary approach. Basingstoke, Palgrave Macmillan, 103-126.

Copyright and re-use policy: see http://shura.shu.ac.uk/information.html
Sheffield Hallam University Research Archive, http://shura.shu.ac.uk

Towards Cognitive Robotics: Robotics, Biology and Developmental Psychology
Mark Lee, Ulrich Nehmzow and Marcos Rodrigues

1 Introduction: Early Robotics Research

The question of how “intelligent” controllers can be designed for machines has always attracted much interest and research activity. The idea of reproducing some facets of human cognition in a designed artifact has fascinated scientists, philosophers and charlatans throughout history. But despite the enormous efforts that have been directed at this issue in the twentieth century, only very recently has any significant progress been made. The robots of the last century typically were brittle, they failed in even simple tasks as soon as these tasks deviated even slightly from the original specifications; they were slow; and they needed constant attention from software engineers. Up to the late 1980s and early 1990s robotics research was dominated by work originating from the control theory and cybernetics communities, which meant that the fundamental assumptions of how intelligent behaviour could be achieved were very similar if not identical.

Cognitive Robotics to Understand Human Beings

QUARTERLY REVIEW No.20 / July 2006

Cognitive Robotics to Understand Human Beings
KAYOKO ISHII, Life Science Unit

1 Introduction

The question of what human beings (self and others), the mind, and the world are has always been of great interest to humankind. Most academic disciplines originated to answer these questions. As neuroscience has advanced, the notion that brain function is closely related to the mind has become more widely accepted, increasing the expectation that unknown aspects of the mind could be explored by neuroscience. However, a long-standing question regarding the mind is that one’s mind seems to be associated with oneself as a physical existence, yet its content seems not to be expressed physically. Simply accumulating knowledge acquired through external analysis and observation of the brain as a physical entity is not sufficient to elucidate the essential functions of the brain and the nature of the mind.

[...] taking place at that time. In Japan, research on the computational theory of the brain[1] and research combining theory and physiological experiments[2] has been carried out. One of the major themes in Japanese brain science in the 1990s was “creating the brain” beside analytical experimental sciences (“understanding the brain”) and research oriented to medical applications (“protecting the brain”). This theme was significant in that it not only expressed the concept of understanding brain functions through “cycles of creation of models of brain, computational theory and neural networks, their verification through experimental science, and improvement of theories and models,” but also expressed the unconventional orientation of creating new systems inspired by the brain. Furthermore, computational neuroscience was defined as “to investigate information processing of the brain to the extent that artificial machines, [...]

Cognitive Robotics: the Science of Building Intelligent Autonomous Robots and Software Agents

Cognitive Robotics: The science of building intelligent autonomous robots and software agents
Giuseppe De Giacomo
University of Roma “La Sapienza”, Italy
Miegunyah Fellow, University of Melbourne, Australia

INTRODUCTION

Introduction
• Cognitive Robotics is the science of building autonomous robots/agents that operate in changing, incompletely known, unpredictable environments.
• Cognitive robots are “smart”: have autonomy, including autonomous reasoning and planning capabilities.
• Cognitive robots are already a reality.

Example: “Curiosity” the Latest NASA Mars Rover
• “Cognitive robotics” in a NASA cartoon!

Example: “Curiosity” the Latest NASA Mars Rover
• The previous video is referring to Curiosity, the latest NASA Mars Rover (a large, mobile laboratory), which started its mission a year ago (landed on Mars on Aug. 6, 2012)
• Within the first 8 months of a planned 23-month primary mission, Curiosity met its major objective of finding evidence of a past environment well suited to supporting microbial life.

Robots Come in a Variety of Shapes
• Nowadays through increasing use of software (and hence CS) robots are increasingly acquiring forms of autonomy

RHex: power- and computation-autonomous hexapod robot with compliant legs and only one actuator per leg, developed by a consortium of American universities, including University of Michigan, McGill University, Carnegie Mellon University, University of California, Berkeley, Princeton University, Cornell University, University of Pennsylvania, within the RHex DARPA project.