Concrete Data Structures and Functional Parallel Programming

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

High Performance Computing Through Parallel and Distributed Processing

Yadav S. et al., J. Harmoniz. Res. Eng., 2013, 1(2), 54-64 Journal Of Harmonized Research (JOHR) Journal Of Harmonized Research in Engineering 1(2), 2013, 54-64 ISSN 2347 – 7393 Original Research Article High Performance Computing through Parallel and Distributed Processing Shikha Yadav, Preeti Dhanda, Nisha Yadav Department of Computer Science and Engineering, Dronacharya College of Engineering, Khentawas, Farukhnagar, Gurgaon, India Abstract : There is a very high need of High Performance Computing (HPC) in many applications like space science to Artificial Intelligence. HPC shall be attained through Parallel and Distributed Computing. In this paper, Parallel and Distributed algorithms are discussed based on Parallel and Distributed Processors to achieve HPC. The Programming concepts like threads, fork and sockets are discussed with some simple examples for HPC. Keywords: High Performance Computing, Parallel and Distributed processing, Computer Architecture Introduction time to solve large problems like weather Computer Architecture and Programming play forecasting, Tsunami, Remote Sensing, a significant role for High Performance National calamities, Defence, Mineral computing (HPC) in large applications Space exploration, Finite-element, Cloud science to Artificial Intelligence. The Computing, and Expert Systems etc. The Algorithms are problem solving procedures Algorithms are Non-Recursive Algorithms, and later these algorithms transform in to Recursive Algorithms, Parallel Algorithms particular Programming language for HPC. and Distributed Algorithms. There is need to study algorithms for High The Algorithms must be supported the Performance Computing. These Algorithms Computer Architecture. The Computer are to be designed to computer in reasonable Architecture is characterized with Flynn’s Classification SISD, SIMD, MIMD, and For Correspondence: MISD. Most of the Computer Architectures preeti.dhanda01ATgmail.com are supported with SIMD (Single Instruction Received on: October 2013 Multiple Data Streams). -

Data Structure

EDUSAT LEARNING RESOURCE MATERIAL ON DATA STRUCTURE (For 3rd Semester CSE & IT) Contributors : 1. Er. Subhanga Kishore Das, Sr. Lect CSE 2. Mrs. Pranati Pattanaik, Lect CSE 3. Mrs. Swetalina Das, Lect CA 4. Mrs Manisha Rath, Lect CA 5. Er. Dillip Kumar Mishra, Lect 6. Ms. Supriti Mohapatra, Lect 7. Ms Soma Paikaray, Lect Copy Right DTE&T,Odisha Page 1 Data Structure (Syllabus) Semester & Branch: 3rd sem CSE/IT Teachers Assessment : 10 Marks Theory: 4 Periods per Week Class Test : 20 Marks Total Periods: 60 Periods per Semester End Semester Exam : 70 Marks Examination: 3 Hours TOTAL MARKS : 100 Marks Objective : The effectiveness of implementation of any application in computer mainly depends on the that how effectively its information can be stored in the computer. For this purpose various -structures are used. This paper will expose the students to various fundamentals structures arrays, stacks, queues, trees etc. It will also expose the students to some fundamental, I/0 manipulation techniques like sorting, searching etc 1.0 INTRODUCTION: 04 1.1 Explain Data, Information, data types 1.2 Define data structure & Explain different operations 1.3 Explain Abstract data types 1.4 Discuss Algorithm & its complexity 1.5 Explain Time, space tradeoff 2.0 STRING PROCESSING 03 2.1 Explain Basic Terminology, Storing Strings 2.2 State Character Data Type, 2.3 Discuss String Operations 3.0 ARRAYS 07 3.1 Give Introduction about array, 3.2 Discuss Linear arrays, representation of linear array In memory 3.3 Explain traversing linear arrays, inserting & deleting elements 3.4 Discuss multidimensional arrays, representation of two dimensional arrays in memory (row major order & column major order), and pointers 3.5 Explain sparse matrices. -

Data Structure Invariants

Lecture 8 Notes Data Structures 15-122: Principles of Imperative Computation (Spring 2016) Frank Pfenning, André Platzer, Rob Simmons 1 Introduction In this lecture we introduce the idea of imperative data structures. So far, the only interfaces we’ve used carefully are pixels and string bundles. Both of these interfaces had the property that, once we created a pixel or a string bundle, we weren’t interested in changing its contents. In this lecture, we’ll talk about an interface that mimics the arrays that are primitively available in C0. To implement this interface, we’ll need to round out our discussion of types in C0 by discussing pointers and structs, two great tastes that go great together. We will discuss using contracts to ensure that pointer accesses are safe. Relating this to our learning goals, we have Computational Thinking: We illustrate the power of abstraction by con- sidering both the client-side and library-side of the interface to a data structure. Algorithms and Data Structures: The abstract arrays will be one of our first examples of abstract datatypes. Programming: Introduction of structs and pointers, use and design of in- terfaces. 2 Structs So far in this course, we’ve worked with five different C0 types — int, bool, char, string, and arrays t[] (there is a array type t[] for every type t). The character, Boolean and integer values that we manipulate, store LECTURE NOTES Data Structures L8.2 locally, and pass to functions are just the values themselves. For arrays (and strings), the things we store in assignable variables or pass to functions are addresses, references to the place where the data stored in the array can be accessed. -

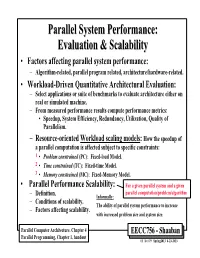

Parallel System Performance: Evaluation & Scalability

ParallelParallel SystemSystem Performance:Performance: EvaluationEvaluation && ScalabilityScalability • Factors affecting parallel system performance: – Algorithm-related, parallel program related, architecture/hardware-related. • Workload-Driven Quantitative Architectural Evaluation: – Select applications or suite of benchmarks to evaluate architecture either on real or simulated machine. – From measured performance results compute performance metrics: • Speedup, System Efficiency, Redundancy, Utilization, Quality of Parallelism. – Resource-oriented Workload scaling models: How the speedup of a parallel computation is affected subject to specific constraints: 1 • Problem constrained (PC): Fixed-load Model. 2 • Time constrained (TC): Fixed-time Model. 3 • Memory constrained (MC): Fixed-Memory Model. • Parallel Performance Scalability: For a given parallel system and a given parallel computation/problem/algorithm – Definition. Informally: – Conditions of scalability. The ability of parallel system performance to increase – Factors affecting scalability. with increased problem size and system size. Parallel Computer Architecture, Chapter 4 EECC756 - Shaaban Parallel Programming, Chapter 1, handout #1 lec # 9 Spring2013 4-23-2013 Parallel Program Performance • Parallel processing goal is to maximize speedup: Time(1) Sequential Work Speedup = < Time(p) Max (Work + Synch Wait Time + Comm Cost + Extra Work) Fixed Problem Size Speedup Max for any processor Parallelizing Overheads • By: 1 – Balancing computations/overheads (workload) on processors -

Scalable Task Parallel Programming in the Partitioned Global Address Space

Scalable Task Parallel Programming in the Partitioned Global Address Space DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By James Scott Dinan, M.S. Graduate Program in Computer Science and Engineering The Ohio State University 2010 Dissertation Committee: P. Sadayappan, Advisor Atanas Rountev Paolo Sivilotti c Copyright by James Scott Dinan 2010 ABSTRACT Applications that exhibit irregular, dynamic, and unbalanced parallelism are grow- ing in number and importance in the computational science and engineering commu- nities. These applications span many domains including computational chemistry, physics, biology, and data mining. In such applications, the units of computation are often irregular in size and the availability of work may be depend on the dynamic, often recursive, behavior of the program. Because of these properties, it is challenging for these programs to achieve high levels of performance and scalability on modern high performance clusters. A new family of programming models, called the Partitioned Global Address Space (PGAS) family, provides the programmer with a global view of shared data and allows for asynchronous, one-sided access to data regardless of where it is physically stored. In this model, the global address space is distributed across the memories of multiple nodes and, for any given node, is partitioned into local patches that have high affinity and low access cost and remote patches that have a high access cost due to communication. The PGAS data model relaxes conventional two-sided communication semantics and allows the programmer to access remote data without the cooperation of the remote processor. -

Using Machine Learning to Improve Dense and Sparse Matrix Multiplication Kernels

Iowa State University Capstones, Theses and Graduate Theses and Dissertations Dissertations 2019 Using machine learning to improve dense and sparse matrix multiplication kernels Brandon Groth Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/etd Part of the Applied Mathematics Commons, and the Computer Sciences Commons Recommended Citation Groth, Brandon, "Using machine learning to improve dense and sparse matrix multiplication kernels" (2019). Graduate Theses and Dissertations. 17688. https://lib.dr.iastate.edu/etd/17688 This Dissertation is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Using machine learning to improve dense and sparse matrix multiplication kernels by Brandon Micheal Groth A dissertation submitted to the graduate faculty in partial fulfillment of the requirements for the degree of DOCTOR OF PHILOSOPHY Major: Applied Mathematics Program of Study Committee: Glenn R. Luecke, Major Professor James Rossmanith Zhijun Wu Jin Tian Kris De Brabanter The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this dissertation. The Graduate College will ensure this dissertation is globally accessible and will not permit alterations after a degree is conferred. Iowa State University Ames, Iowa 2019 Copyright c Brandon Micheal Groth, 2019. All rights reserved. ii DEDICATION I would like to dedicate this thesis to my wife Maria and our puppy Tiger. -

Verification-Aware Opencl Based Read Mapper for Heterogeneous

JOURNAL OF LATEX CLASS FILES, VOL. 14, NO. 8, AUGUST 2015 1 CORAL: Verification-aware OpenCL based Read Mapper for Heterogeneous Systems Sidharth Maheshwari, Venkateshwarlu Y. Gudur, Rishad Shafik, Senion Member, IEEE, Ian Wilson, Alex Yakovlev, Fellow, IEEE, and Amit Acharyya, Member, IEEE Abstract—Genomics has the potential to transform medicine from reactive to a personalized, predictive, preventive and participatory (P4) form. Being a Big Data application with continuously increasing rate of data production, the computational costs of genomics have become a daunting challenge. Most modern computing systems are heterogeneous consisting of various combinations of computing resources, such as CPUs, GPUs and FPGAs. They require platform-specific software and languages to program making their simultaneous operation challenging. Existing read mappers and analysis tools in the whole genome sequencing (WGS) pipeline do not scale for such heterogeneity. Additionally, the computational cost of mapping reads is high due to expensive dynamic programming based verification, where optimized implementations are already available. Thus, improvement in filtration techniques is needed to reduce verification overhead. To address the aforementioned limitations with regards to the mapping element of the WGS pipeline, we propose a Cross-platfOrm Read mApper using opencL (CORAL). CORAL is capable of executing on heterogeneous devices/platforms simultaneously. It can reduce computational time by suitably distributing the workload without any additional programming effort. We showcase this on a quadcore Intel CPU along with two Nvidia GTX 590 GPUs, distributing the workload judiciously to achieve up to 2× speedup compared to when only CPUs are used. To reduce the verification overhead, CORAL dynamically adapts k-mer length during filtration. -

Applying Front End Compiler Process to Parse Polynomials in Parallel

Western University Scholarship@Western Electronic Thesis and Dissertation Repository 12-16-2020 10:30 AM Applying Front End Compiler Process to Parse Polynomials in Parallel Amha W. Tsegaye, The University of Western Ontario Supervisor: Dr. Marc Moreno Maza, The University of Western Ontario A thesis submitted in partial fulfillment of the equirr ements for the Master of Science degree in Computer Science © Amha W. Tsegaye 2020 Follow this and additional works at: https://ir.lib.uwo.ca/etd Part of the Computer Sciences Commons, and the Mathematics Commons Recommended Citation Tsegaye, Amha W., "Applying Front End Compiler Process to Parse Polynomials in Parallel" (2020). Electronic Thesis and Dissertation Repository. 7592. https://ir.lib.uwo.ca/etd/7592 This Dissertation/Thesis is brought to you for free and open access by Scholarship@Western. It has been accepted for inclusion in Electronic Thesis and Dissertation Repository by an authorized administrator of Scholarship@Western. For more information, please contact [email protected]. Abstract Parsing large expressions, in particular large polynomial expressions, is an important task for computer algebra systems. Despite of the apparent simplicity of the problem, its efficient software implementation brings various challenges. Among them is the fact that this is a memory bound application for which a multi-threaded implementation is necessarily limited by the characteristics of the memory organization of supporting hardware. In this thesis, we design, implement and experiment with a multi-threaded parser for large polynomial expressions. We extract parallelism by splitting the input character string, into meaningful sub-strings that can be parsed concurrently before being merged into a single polynomial. -

Exploratory Large Scale Graph Analytics in Arkouda 59 2 60 3 61 4 Zhihui Du,Oliver Alvarado Rodriguez and Michael Merrill and William Reus 62 5 David A

1 Exploratory Large Scale Graph Analytics in Arkouda 59 2 60 3 61 4 Zhihui Du,Oliver Alvarado Rodriguez and Michael Merrill and William Reus 62 5 David A. Bader [email protected] 63 6 {zhihui.du,oaa9,bader}@njit.edu [email protected] 64 7 New Jersey Institute of Technology Department of Defense 65 8 Newark, New Jersey, USA USA 66 9 67 10 ABSTRACT 1 INTRODUCTION 68 11 Exploratory graph analytics helps maximize the informational value A graph is a well defined mathematical model to formulate the rela- 69 12 for a graph. However, the increasing graph size makes it impossi- tionship between different objects and is widely used in numerous 70 13 ble for existing popular exploratory data analysis tools to handle domains such as social sciences, biological systems, and informa- 71 14 dozens-of-terabytes or even larger data sets in the memory of a tion systems. The edge distributions of many large scale real world 72 15 common laptop/personal computer. Arkouda is a framework un- problems tend to follow a power-law distribution [1, 11, 26]. Dense 73 16 der early-development that brings together the productivity of graph data structures and algorithms will consume much more 74 17 Python at the user side with the high-performance of Chapel at memory and cannot analyze very large sparse graphs efficiently. 75 18 the server side. In this paper, the preliminary work on overcoming Therefore, parallel algorithms for sparse graphs [23] have become 76 19 the memory limit and high performance computing coding road- an important research topic to efficiently analyze the large and 77 20 block for high level Python users to perform large graph analysis sparse graphs from different real-world problems. -

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness

Oblivious Network RAM and Leveraging Parallelism to Achieve Obliviousness Dana Dachman-Soled1,3 ∗ Chang Liu2 y Charalampos Papamanthou1,3 z Elaine Shi4 x Uzi Vishkin1,3 { 1: University of Maryland, Department of Electrical and Computer Engineering 2: University of Maryland, Department of Computer Science 3: University of Maryland Institute for Advanced Computer Studies (UMIACS) 4: Cornell University January 12, 2017 Abstract Oblivious RAM (ORAM) is a cryptographic primitive that allows a trusted CPU to securely access untrusted memory, such that the access patterns reveal nothing about sensitive data. ORAM is known to have broad applications in secure processor design and secure multi-party computation for big data. Unfortunately, due to a logarithmic lower bound by Goldreich and Ostrovsky (Journal of the ACM, '96), ORAM is bound to incur a moderate cost in practice. In particular, with the latest developments in ORAM constructions, we are quickly approaching this limit, and the room for performance improvement is small. In this paper, we consider new models of computation in which the cost of obliviousness can be fundamentally reduced in comparison with the standard ORAM model. We propose the Oblivious Network RAM model of computation, where a CPU communicates with multiple memory banks, such that the adversary observes only which bank the CPU is communicating with, but not the address offset within each memory bank. In other words, obliviousness within each bank comes for free|either because the architecture prevents a malicious party from ob- serving the address accessed within a bank, or because another solution is used to obfuscate memory accesses within each bank|and hence we only need to obfuscate communication pat- terns between the CPU and the memory banks. -

Compiling for a Multithreaded Dataflow Architecture : Algorithms, Tools, and Experience Feng Li

Compiling for a multithreaded dataflow architecture : algorithms, tools, and experience Feng Li To cite this version: Feng Li. Compiling for a multithreaded dataflow architecture : algorithms, tools, and experience. Other [cs.OH]. Université Pierre et Marie Curie - Paris VI, 2014. English. NNT : 2014PA066102. tel-00992753v2 HAL Id: tel-00992753 https://tel.archives-ouvertes.fr/tel-00992753v2 Submitted on 10 Sep 2014 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Universit´ePierre et Marie Curie Ecole´ Doctorale Informatique, T´el´ecommunications et Electronique´ Compiling for a multithreaded dataflow architecture: algorithms, tools, and experience par Feng LI Th`esede doctorat d'Informatique Dirig´eepar Albert COHEN Pr´esent´ee et soutenue publiquement le 20 mai, 2014 Devant un jury compos´ede: , Pr´esident , Rapporteur , Rapporteur , Examinateur , Examinateur , Examinateur I would like to dedicate this thesis to my mother Shuxia Li, for her love Acknowledgements I would like to thank my supervisor Albert Cohen, there's nothing more exciting than working with him. Albert, you are an extraordi- nary person, full of ideas and motivation. The discussions with you always enlightens me, helps me. I am so lucky to have you as my supervisor when I first come to research as a PhD student. -

Massively Parallel Computers: Why Not Prirallel Computers for the Masses?

Maslsively Parallel Computers: Why Not Prwallel Computers for the Masses? Gordon Bell Abstract In 1989 I described the situation in high performance computers including several parallel architectures that During the 1980s the computers engineering and could deliver teraflop power by 1995, but with no price science research community generally ignored parallel constraint. I felt SIMDs and multicomputers could processing. With a focus on high performance computing achieve this goal. A shared memory multiprocessor embodied in the massive 1990s High Performance looked infeasible then. Traditional, multiple vector Computing and Communications (HPCC) program that processor supercomputers such as Crays would simply not has the short-term, teraflop peak performanc~:goal using a evolve to a teraflop until 2000. Here's what happened. network of thousands of computers, everyone with even passing interest in parallelism is involted with the 1. During the first half of 1992, NEC's four processor massive parallelism "gold rush". Funding-wise the SX3 is the fastest computer, delivering 90% of its situation is bright; applications-wise massit e parallelism peak 22 Glops for the Linpeak benchmark, and Cray's is microscopic. While there are several programming 16 processor YMP C90 has the greatest throughput. models, the mainline is data parallel Fortran. However, new algorithms are required, negating th~: decades of 2. The SIMD hardware approach of Thinking Machines progress in algorithms. Thus, utility will no doubt be the was abandoned because it was only suitable for a few, Achilles Heal of massive parallelism. very large scale problems, barely multiprogrammed, and uneconomical for workloads. It's unclear whether The Teraflop: 1992 large SIMDs are "generation" scalable, and they are clearly not "size" scalable.