Human Complex Exploration Strategies Are Enriched by Noradrenaline-Modulated Heuristics


Recommended publications


8123 Songs, 21 Days, 63.83 GB

Page 1 of 247 Music 8123 songs, 21 days, 63.83 GB Name Artist The A Team Ed Sheeran A-List (Radio Edit) XMIXR Sisqo feat. Waka Flocka Flame A.D.I.D.A.S. (Clean Edit) Killer Mike ft Big Boi Aaroma (Bonus Version) Pru About A Girl The Academy Is... About The Money (Radio Edit) XMIXR T.I. feat. Young Thug About The Money (Remix) (Radio Edit) XMIXR T.I. feat. Young Thug, Lil Wayne & Jeezy About Us [Pop Edit] Brooke Hogan ft. Paul Wall Absolute Zero (Radio Edit) XMIXR Stone Sour Absolutely (Story Of A Girl) Ninedays Absolution Calling (Radio Edit) XMIXR Incubus Acapella Karmin Acapella Kelis Acapella (Radio Edit) XMIXR Karmin Accidentally in Love Counting Crows According To You (Top 40 Edit) Orianthi Act Right (Promo Only Clean Edit) Yo Gotti Feat. Young Jeezy & YG Act Right (Radio Edit) XMIXR Yo Gotti ft Jeezy & YG Actin Crazy (Radio Edit) XMIXR Action Bronson Actin' Up (Clean) Wale & Meek Mill f./French Montana Actin' Up (Radio Edit) XMIXR Wale & Meek Mill ft French Montana Action Man Hafdís Huld Addicted Ace Young Addicted Enrique Iglsias Addicted Saving abel Addicted Simple Plan Addicted To Bass Puretone Addicted To Pain (Radio Edit) XMIXR Alter Bridge Addicted To You (Radio Edit) XMIXR Avicii Addiction Ryan Leslie Feat. Cassie & Fabolous Music Page 2 of 247 Name Artist Addresses (Radio Edit) XMIXR T.I. Adore You (Radio Edit) XMIXR Miley Cyrus Adorn Miguel Adorn Miguel Adorn (Radio Edit) XMIXR Miguel Adorn (Remix) Miguel f./Wiz Khalifa Adorn (Remix) (Radio Edit) XMIXR Miguel ft Wiz Khalifa Adrenaline (Radio Edit) XMIXR Shinedown Adrienne Calling, The Adult Swim (Radio Edit) XMIXR DJ Spinking feat. -

Jay Brown Rihanna, Lil Mo, Tweet, Ne-Yo, LL Cool J ISLAND/DEF JAM, 825 8Th Ave 28Th Floor, NY 10019 New York, USA Phone +1 212 333 8000 / Fax +1 212 333 7255

Jay Brown Rihanna, Lil Mo, Tweet, Ne-Yo, LL Cool J ISLAND/DEF JAM, 825 8th Ave 28th Floor, NY 10019 New York, USA Phone +1 212 333 8000 / Fax +1 212 333 7255 14. Jerome Foster a.k.a. Knobody Akon STREET RECORDS CORPORATION, 1755 Broadway 6th , NY 10019 New York, USA Phone +1 212 331 2628 / Fax +1 212 331 2620 16. Rob Walker Clipse, N.E.R.D, Kelis, Robin Thicke STAR TRAK ENTERTAINMENT, 1755 Broadway , NY 10019 New York, USA Phone +1 212 841 8040 / Fax +1 212 841 8099 Ken Bailey A&R Urban Breakthrough credits: Chingy DISTURBING THA PEACE 1867 7th Avenue Suite 4C NY 10026 New York USA tel +1 404 351 7387 fax +1 212 665 5197 William Engram A&R Urban Breakthrough credits: Bobby Valentino Chingy DISTURBING THA PEACE 1867 7th Avenue Suite 4C NY 10026 New York USA tel +1 404 351 7387 fax +1 212 665 5197 Ron Fair President, A&R Pop / Rock / Urban Accepts only solicited material Breakthrough credits: The Calling Christina Aguilera Keyshia Cole Lit Pussycat Dolls Vanessa Carlton Other credits: Mya Vanessa Carlton Current artists: Black Eyed Peas Keyshia Cole Pussycat Dolls Sheryl Crow A&M RECORDS 2220 Colorado Avenue Suite 1230 CA 90404 Santa Monica USA tel +1 310 865 1000 fax +1 310 865 6270 Marcus Heisser A&R Urban Accepts unsolicited material Breakthrough credits: G-Unit Lloyd Banks Tony Yayo Young Buck Current artists: 50 Cent G-Unit Lloyd Banks Mobb Depp Tony Yayo Young Buck INTERSCOPE RECORDS 2220 Colorado Avenue CA 90404 Santa Monica USA tel +1 310 865 1000 fax +1 310 865 7908 www.interscope.com Record Company A&R Teresa La Barbera-Whites -

Decoding Dubstep: A Rhetorical Investigation of Dubstep's Development from the Late 1990s to the Early 2010s

Florida State University Libraries Honors Theses The Division of Undergraduate Studies 2013 Decoding Dubstep: A Rhetorical Investigation of Dubstep's Development from the Late 1990s to the Early 2010s Laura Bradley Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] 4-25-2013 Decoding Dubstep: A Rhetorical Investigation of Dubstep’s Development from the Late 1990s to the Early 2010s Laura Bradley Florida State University, [email protected] Bradley | 1 THE FLORIDA STATE UNIVERSITY COLLEGE OF ARTS AND SCIENCES DECODING DUBSTEP: A RHETORICAL INVESTIGATION OF DUBSTEP’S DEVELOPMENT FROM THE LATE 1990S TO THE EARLY 2010S By LAURA BRADLEY A Thesis submitted to the Department of English in partial fulfillment of the requirements for graduation with Honors in the Major Degree Awarded: Spring 2013 Bradley | 2 The members of the Defense Committee approve the thesis of Laura Bradley defended on April 16, 2012. ______________________________ Dr. Barry Faulk Thesis Director ______________________________ Dr. Michael Buchler Outside Committee Member ______________________________ Dr. Michael Neal Committee Member Bradley | 3 ACKNOWLEDGEMENTS Many people have shaped and improved this project, through all of its incarnations and revisions. First, I must thank my thesis director, Dr. Barry Faulk, for his constant and extremely constructive guidance through multiple drafts of this project—and also his tolerance of receiving drafts and seeing me in his office less than a week later. Dr. Michael Buchler’s extensive knowledge of music theory, and his willingness to try out a new genre, have led to stimulating and thought provoking discussions, which have shaped this paper in many ways. -

Love & Hip Hop: Hollywood

Love & Hip Hop: Hollywood (Series 4) 14 x 60’ EPISODIC BREAKDOWN 1. Girl Fight Keyshia Cole confronts unresolved issues with her estranged husband Booby. Chaos erupts when Hazel-E squares off against her haters at a women's empowerment event. A sexy new arrival threatens to turn Masika's world upside-down. Teairra's lunch date with an old frenemy goes all the way left. A shocking revelation derails Brooke and Marcus' talk of marriage. Moniece's love life moves in an unexpected direction. 2. Make It Count Ray J.'s struggle to start a family leads to an outrageous bet with A1 and Safaree. Keyshia gets advice from Too Short on a major life decision. Nikki Baby connects Chanel West Coast with a producer who can take her rap career to the next level. Brooke goes on a mission to uncover the truth about Marcus. A friend's betrayal sends Masika into a tailspin. 3. New Bae Teairra's new man clashes with her social circle. Chanel leaps to her friend's defense at Safaree's party. Ray J.'s secret weighs on his conscience. Lyrica issues a startling demand to A1. Alexis and Solo's relationship hits a snag. Brooke forces Marcus to make a decision about their future. 4. Got Swag? Masika hires Misster Ray to plan an event, only to get caught in the crossfire of his beef with Zell. Concerns mount over Teairra's self-destructive behavior. A1 considers a collaboration with Keyshia Cole. Brooke and Booby hatch a plan to teach Marcus a lesson. -

Music Review: Brandy

Music Review: Brandy http://elsegundousd.com/eshs/bayeagle/entertainment/april/brandy.htm Music Review: Brandy Brandy's highly anticipated album, Full Moon, was released on March 5. It sold more than 155,000 copies in the first week, which landed it at the #2 spot on the Billboard 200 chart. Because it has been four years since her last album, the success comes as no shock. Considering the popularity of her last album, surely it left her fans wanting more. The recent news of her secret marriage to music producer Robert Smith and surprise pregnancy have also added to the anticipation of this album. Brandy Norwood was born on February 11, 1979, in McComb, Mississippi. At a very young age, she moved to California with her family. She grew up singing in the choir of her father's church and in youth groups. She was drawn to pop music when she heard Whitney Houston on the radio. Darryl Williams signed her to Atlantic in 1993. Before releasing her debut album, she had a role on the short-lived ABC television sitcom, "Thea." On September 27, 1994, she released Brandy. The album's success was due to hits such as "Baby," "I Wanna Be Down," and "Brokenhearted." By the end of the year, it had been certified four times platinum. This album earned her two Billboard Awards and two Soul Train Awards. She won the Best New Artist, R&B and Best R&B Female Billboard Awards. She also won the Soul Train Award for Best New Artist and Best R&B/Soul Artist Female. -

Acclaimed Singer, Songwriter, Actor and Hollywood 'Bad Boy,' Ray J Teams up with VH1 to Find a True Leading Lady

Acclaimed Singer, Songwriter, Actor and Hollywood 'Bad Boy,' Ray J Teams Up With VH1 to Find a True Leading Lady "For the Love of Ray J" Premieres on VH1 on Monday, February 2 at 10:30 PM* All Following New Episodes Will Air at 10:00 PM VH1.com to Sneak Peek the First Episode Beginning Tuesday, January 27 SANTA MONICA, Calif., Jan. 29 -- Ray J's success in the entertainment business has brought him fame, fortune, and a never-ending supply of gorgeous girls. After years of hookups and rocky relationships he's ready to break away from the gold-diggers and groupies and find a unique lady capable of restoring his faith in women. VH1 is teaming up with Ray J in hopes of finding him a true "ride or die chick" among a group of 14 beautiful women from across the country. "For the Love of Ray J" premieres on VH1 on Monday, February 2 at 10:30 PM* "For the Love of Ray J" will catch every exciting moment as Ray J follows in the footsteps of his boys Flavor Flav and Bret Michaels. Fourteen lovely ladies will move into Ray J's bachelor mansion and go through a series of challenges, dates, and heart-breaking eliminations until he finds the one woman he's been waiting for. Not only will the ladies have to prove their love for Ray J, but they must also show him they can keep up with his fast-paced lifestyle. "For the Love of Ray J" follows Ray J and his entourage of female suitors to the hottest hangouts in Los Angeles, to Sin City where they'll party with his famous friends, and to Ray's childhood home where they'll meet his family and big sister Brandy. -

Seg. T Local Break 2:00 K32 "REALLZE'

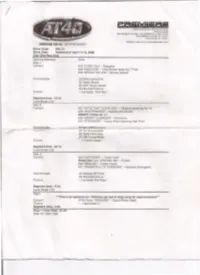

I SJTnR.MAN OAKS. CA.L.lrORNIA.~ 9;~;;;~~~ TliLRPHONK(.$18) 371•$300 ,AX (AIM) 377·~'-'33 w-.~ btl.p:/,_. P"miaencl'o..com Show Code: #08-15 Show Date: Weekend of Aprll12·13, 2008 Disc One/Hour One Opening BiUboard None Seg. t Contont: !140 "OVER You·- Daughtry #39 "KISS KISS"- Chris Brown featuring T-Pain #38 "BREAK THE ICE"- Brltney Spears Commercoats: :30 McOonald's!Doll :30 Radio Shack :30 CMT Music Award :30 Wal MarVHanna Outcue: •.. Jive bettor, Wal-Matt.' Segment time: 15:16 locol Break 2:00 Seg.2 Content: K37 "HATE THAT I LOVE YOU'"- Roha1na '='10 Ne-Yo ~ 'INDEPENDe..-- -Webbe' u 8oosle INSERT LOCAL 10 :11 #35 'MISERY BUSINESS'- Paramore 1134 ' STRONGER' - Kanyo West featuring Oaf! Punk :30 Sony/BMG!leona :30 Fox 6rooocastin ·30 State Farm tnsu :30 GM Onstar/Midsi Outcue • ...in certa1n areas.~ Segment lime: 20:14 Local Break 2:00 Seg.3 Content #33 "OUR SONG'- Taylor Swrlt Break Out: ' All AROUND ME" - Ry1ca' K32 "REALLZE' - Colboe Caiuat ;31 "POCKETFUL OF SUNSHINE"- Natasha Bedingf•otd Commerclllls: :30 Subway SS Footl :30 W~'Hanna • ...live bet:er Wal-H.art: Segment time: 17:31 Local Break 1:00 Seg 4 "'This is an optional cut ·Stations can opt to drop song for local inventory".. Content: AT40 Extra "GGODIES'-Clara !/Petey Pablo Outouc • ... 1 appreoiate rt.' Segment time: 3:26 Hour 1 Total Time: 61:27 END OF DISC ONE 15260 VL.....,..IKA BOVU.VA.i\D .sntt'LOO.R Sl.fEIL"fAN OAKS, CAJ..J?' IL''IA ~noiO)-,J;J. Tl"rl.~ 4.Alf)}7'1·Sl00 '\A ( 1'1) l77·»JJ AMERICAJI TOP 40 /WITH RYAN S!ACIIEST Show Code: #08-15 Show Date: Weekend of April 12-13, 2008 Disc Two/Hour Two Opening Billboard :05 Fox Seg. -

Asher Roth, Bow Wow, Brandy, Diddy, DJ Kid Capri, Drake

Asher Roth, Bow Wow, Brandy, Diddy, DJ Kid Capri, Drake, Fabolous, Geto Boys, Jonah Hill, Keri Hilson, Khujo Goodie, Lauren London, Romeo, Ray J, Silkk the Shocker and T.I. Join the 7th Annual '2010 VH1 Hip Hop Honors: The Dirty South' Celebration Premier 2010 Honorees Include Jermaine Dupri, J Prince of Rap-A-Lot Records, Luther "Luke" Campbell, The 2 Live Crew, Master P and Organized Noize Actor/Comedian Craig Robinson ("The Office"/"Last Comic Standing") Will Host NEW YORK, June 2, 2010 /PRNewswire via COMTEX/ --Back for its 7th year, the "2010 VH1 Hip Hop Honors" will celebrate the achievements of hip hop with a little southern twist. This year, the show will celebrate the music, the influence and the artists from The South. Honorees Jermaine Dupri, J Prince of Rap-A-Lot Records, Luther "Luke" Campbell, The 2 Live Crew, Master P, and Organized Noize will be honored through performances in collaboration with some of today's hottest talent. Hosted by Craig Robinson, "2010 VH1 Hip Hop Honors: The Dirty South" will tape from NYC's Hammerstein Ballroom and air on VH1 on Monday, June 7 at 9:00 PM ET/PT. 100 Grand Band, A.K., Asher Roth, Black Don, Bow Wow, Cool Breeze, Diddy, DJ 5000 Watts, DJ Domination, DJ Freestyle Steve, DJ Jelly, DJ Mixx, DJ Kid Capri, DJ Mike Love, D.O.E., Drake, Fabolous, Geto Boys, Keri Hilson, Khujo Goodie, Lil D, Nasty Delicious, Romeo, Sebastian, Silkk the Shocker and Valentino will join previously announced performers Bone Crusher, Bun B, Chamillionaire, Dem Franchize Boyz, DJ Drama, DJ Khaled, Flo Rida, Gucci Mane, Juvenile, Lil Jon, Missy Elliott, Murphy Lee, Mystikal, Nelly, Paul Wall, Pitbull, Rick Ross, Slim Thug, T-Pain, Trick Daddy, Trina and Ying Yang Twins with performances that are sure to make the South proud. -

Kardashian Baby Pool Rules

Kardashian Baby Pool Rules How to enter and participate: Log onto Energy1069.com or enter on Energy 106.9’s Facebook page between May 28 and June 9, 2013 and register to play the Kardashian Baby Pool. The Kardashian Baby Pool is a game where each participant guesses the gender, birthdate, length and weight, time of day, hair and eye color of Kim Kardashian’s expected child. After guessing the exact gender, whoever's guess is closest in each category, without going over, wins the game. In the case of a tie, one winner will be selected at random. You must be at least 18 years old to play. To participate in voting you must become a registered user through uPickem. Only one internet entry per person and one internet entry per email address is permitted. Internet entries will be deemed made by the authorized account holder of the email address submitted at the time of entry. The authorized account holder is the natural person who is assigned to the email address by an internet access provider, online service provider or other organization that is responsible for assigning an email address or the domain associated with the submitted email address. Multiple participants are not permitted to share the same email address. Entries submitted will not be acknowledged or returned. Use of any device to automate entry is prohibited. Proof of submission of an entry shall not be deemed proof of receipt by Station. Station’s computer is the official time keeping device for the Promotion. The Station is not responsible for entries not received due to difficulty accessing the internet, service outage or delays, computer difficulties or other technological problems. -

NOT PLAYED ON BUSRADIO Sexy Can I - Ray J

NOT PLAYEO ON BUSRAOIO Sexy Can I-Ray J Sexy can I, just pardon my manners. Girl how you shake it, got a playa like (ohhhh) It's a kodak moment, let me go and get my camera Alii wanna know is, Sexy can I, sexy can i hit it from the front, then I hit it from the back. know you like it like that. then we take it to the bed, then we take it to the floor then we chill for a second, then we back at it for more Sexy can I, just pardon my manners. Girl how you shake it, got a playa like (ohhhh) It's a kodak moment, let me go and get my camera All I wanna know is SEXY CAN I [Young Berg:] What up Iii mama, it's ya boy Youngin G5 dippin, lui vuinon luggage (ay) Gona love it, ya boy so fly All the ladies go (ohhh) when a nigga go by. Gucci on the feet, Marc Jacob on the thigh She wanna ride or die with cha boy in the chi. That's right, so Ilet her kiss the prince so boyfriend, she ain't missed him since. [Ray J:] Sexy can I, just pardon my manners. Girl how you shake it, got a Playa like (ohhhh) It's a kodak moment, let me go and get my camera All I wanna know is, sexy can I. Sexy can I, keep it on the low. Got a girl at the crib, we can take it to the mo-mo. -

To Search This List, Hit CTRL+F to "Find" Any Song Or Artist Song Artist

To Search this list, hit CTRL+F to "Find" any song or artist Song Artist Length Peaches & Cream 112 3:13 U Already Know 112 3:18 All Mixed Up 311 3:00 Amber 311 3:27 Come Original 311 3:43 Love Song 311 3:29 Work 1,2,3 3:39 Dinosaurs 16bit 5:00 No Lie Featuring Drake 2 Chainz 3:58 2 Live Blues 2 Live Crew 5:15 Bad A.. B...h 2 Live Crew 4:04 Break It on Down 2 Live Crew 4:00 C'mon Babe 2 Live Crew 4:44 Coolin' 2 Live Crew 5:03 D.K. Almighty 2 Live Crew 4:53 Dirty Nursery Rhymes 2 Live Crew 3:08 Fraternity Record 2 Live Crew 4:47 Get Loose Now 2 Live Crew 4:36 Hoochie Mama 2 Live Crew 3:01 If You Believe in Having Sex 2 Live Crew 3:52 Me So Horny 2 Live Crew 4:36 Mega Mixx III 2 Live Crew 5:45 My Seven Bizzos 2 Live Crew 4:19 Put Her in the Buck 2 Live Crew 3:57 Reggae Joint 2 Live Crew 4:14 The F--k Shop 2 Live Crew 3:25 Tootsie Roll 2 Live Crew 4:16 Get Ready For This 2 Unlimited 3:43 Smooth Criminal 2CELLOS (Sulic & Hauser) 4:06 Baby Don't Cry 2Pac 4:22 California Love 2Pac 4:01 Changes 2Pac 4:29 Dear Mama 2Pac 4:40 I Ain't Mad At Cha 2Pac 4:54 Life Goes On 2Pac 5:03 Thug Passion 2Pac 5:08 Troublesome '96 2Pac 4:37 Until The End Of Time 2Pac 4:27 To Search this list, hit CTRL+F to "Find" any song or artist Ghetto Gospel 2Pac Feat. -

T-Pain E-40 Boss Hogg Outlawz Gorilla Zoe Pitbull Unk

OZONE MAGAZINE NEVER MIND WHAT HATERS SAY, IGNORE ‘EM TIL THEY FADE AWAY THEY FADE TIL ‘EM IGNORE SAY, HATERS WHAT NEVER MIND MAGAZINE OZONE YOUR FAVORITE RAPPER’S FAVORITE MAGAZINE T-PAIN E-40 BOSS HOGG OUTLAWZ GORILLA ZOE PITBULL UNK REMEMBERING SHAKIR STEWART OZONE MAG // YOUR FAVORITE RAPPER’S FAVORITE MAGAZINE T-PAIN E-40BOSS HOGG OUTLAWZ GORILLA ZOE PITBULL REMEMBERING UNK SHAKIR & MORE STEWART 28 // OZONE WEST // OZONE MAG OZONE MAG // // OZONE MAG OZONE MAG // // OZONE MAG OZONE MAG // // OZONE MAG OZONE MAG // PUBLISHER/EDITOR-IN-CHIEF // Julia Beverly MUSIC EDITOR // Randy Roper FEATURES EDITOR // Eric N. Perrin ASSOCIATE EDITOR // Maurice G. Garland monthly sections interviews GRAPHIC DESIGNER // David KA 15 10 THINGS I’M HATIN’ ON 34 BLEU DAVINCI ADVERTISING SALES // Che Johnson, Richard Spoon 22 ARE U A G? 52-53 BOSS HOGG OUTLAWZ PROMOTIONS DIRECTOR // Malik Abdul 72 BOARD GAME 68-69 GORILLA ZOE SPECIAL EDITION EDITOR // Jen McKinnon 73 CAFFEINE SUBSTITUTES 54-55 PASTOR TROY LEGAL CONSULTANT // Kyle P. King, P.A. 74 CD REVIEWS 62-63 PITBULL SUBSCRIPTIONS MANAGER // Adero Dawson 26 CHAIN REACTION 70-71 ROB G ADMINISTRATIVE // Kisha Smith 24 CHIN CHECK 60-61 UNK INTERNS // Jee’Van Brown, Kari Bradley, Torrey 44 DJ BOOTH Holmes 28 DOLLAR MENU features CONTRIBUTORS // Anthony Roberts, Bogan, Char- 12 FEEDBACK lamagne the God, Chuck T, Cierra Middlebrooks, 36 OZONE SPORTS Edward Hall, Jacquie Holmes, J Lash, Jason Cordes, 22 HOOD DEEDS 64-66 SHAKIR STEWART Johnny Louis, Keadron Smith, Keith Kennedy, K.G. 51 INDUSTRY 101 Mosley, King Yella, Luis Santana, Luxury Mindz, Marcus DeWayne, Matt Sonzala, Maurice G.