Development of a Vision-Based Sorting Operation Laboratory: a Student Driven Project

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Strategic Alliance: Rockwell Automation and FANUC

STRATEGIC ALLIANCE: ROCKWELL AUTOMATION AND FANUC. The power of collaboration working for you. Streamline operations, maintenance, management, and intelligence with agile manufacturing systems.

FANUC and Rockwell Automation design complementary solutions based on the Rockwell Automation Integrated Architecture™ with a complete hardware and software suite for your powertrain application. The Integrated Architecture production control and information system can help you improve productivity and reduce costs.

PartnerNetwork™ solutions from Rockwell Automation. Today, within a complex system, more sophisticated devices are being used that need to be connected, controlled and have the ability to communicate data over integrated control and information networks. Additionally, these systems need to be integrated across multiple suppliers’ machines in continuously shorter project cycles. To address the market challenges of the increasing complexity of machine systems, new safety and security requirements and the growing importance of information technologies, FANUC and Rockwell Automation offer a pre-engineered integrated automation solution for powertrain applications. This solution integrates FANUC CNCs and robots to Rockwell Automation cell controllers so manufacturers can more easily manage operations across equipment controlled by the two systems. This provides cost efficiencies, reduced set-up, better part quality, safer work environments, usable manufacturing intelligence and overall increased productivity. For more information, please visit:

Cobots Ebook

COBOTS EBOOK: Collaborative Robots Buyer's Guide

INTRODUCTION

A new kind of robot has made its way into industrial settings, challenging our preconceived notions of robotics. These robots’ main feature is the ability to work safely alongside humans, and now it seems human-robot collaboration is the most sought-after characteristic for robots. There’s a lot of talk about them on the web, but what are they really?

Until now, robots have always been big, strong, robust devices that work on specific tasks which were designed for them. They’ve been kept in cages and surrounded by guards for safety purposes. Their bright color was used to warn surrounding workers about the danger they represented. And it took a lot of programming skills just to set up these robots.

Collaborative robots, on the other hand, are designed to work with humans. They’re built with safety features such as integrated sensors, passive compliance, or overcurrent detection. The integrated sensors will feel external forces and, if this force is too high, lead the robot to stop its movement. Passive compliance is produced by mechanical components. If an external force acts on a joint, this joint will submit itself to this force. So, in the case of a collision, the joint will move in the opposite direction or stop completely to avoid causing injury. Most collaborative robots can be easily taught by demonstration, rather than requiring a deep knowledge of programming. Thanks to their ease of implementation and the fact that no additional safety features are required (no fences, switches, etc.), they can be brought on-line much more quickly.

Apprenticeship That Works

Apprenticeships That Work: The RAMTEC Model

Ritch Ramey, RAMTEC Coordinator, Tri-Rivers Career Center, Marion, Ohio. Success Bound Conference, May 9th, 2018.

Tri-Rivers RAMTEC: Building a Robotic & Automation Technician Pipeline (High Education, K-5, 6-8, 9-12, Career)

Robotics and our Manufacturing Crisis

“Over the next decade, nearly three and a half million manufacturing jobs will be needed to be filled and the skills gap is expected to result in 2 million of those jobs going unfilled” Source: The Manufacturing Institute

Current Manufacturing Gap
• “800 New Hires Needed in Our Honda Plant to Replace Retirees”
• “300 Robotic Technicians Needed in Next 3 years”
• “200 Robotic & Automation Technicians needed in next 2 Years”
• “100 Skilled Workers Needed in next 6 Months”
• “50 Skilled Workers in Next 6 months”
• “We need 15 Entry Level Robotic Technicians Immediately”
• “We Will Hire Your Whole Graduating Class as Robotics Techs”
• We will Graduate 21 Engineering Technologies students this year

Our Answer to the Manufacturing Gap: RAMTEC Ohio Growth
• September 2013: RAMTEC Ohio at Tri-Rivers in Marion opened
• June 2014: RAMTEC Ohio $14,995,000 Straight A Grant to create 8 additional RAMTEC sites funded
• November 2015: additional $8,000,000 funding for 14 more RAMTEC Ohio sites
• Provided Training for 96 Instructors
• Hosted Summer Camps for more than 500 Participants

Why is the RAMTEC Program Needed?

Exposure of our next generation to Highly Rewarding STEM & Manufacturing Careers

Prepared for Apprenticeships

"RAMTEC Engineering Technology graduates have been a great addition to our Maintenance Apprenticeship program. They have a solid foundation in electrical, PLC, hydraulics, and robotics, all which are key components of our multi-craft workforce.

INVESTORS GUIDE 2019: To Our Shareholders and Investors

TOKYO ELECTRON DEVICE LIMITED, Securities code: 2760

INVESTORS GUIDE 2019: To Our Shareholders and Investors

Business Locations (As of July 1, 2019)

Business locations: Omiya Sales Office, Matsumoto Sales Office, Sendai Sales Office, Tsukuba Sales Office, Iwaki Sales Office, Nagoya Sales Office, Mito Sales Office, Kyoto Sales Office, Osaka Office, Fukuoka Sales Office, Shinjuku Office, Shinjuku Support Center, Tachikawa Sales Office, Engineering Center, Headquarters (Yokohama), Mishima Sales Office, Hamamatsu Sales Office

Domestic subsidiaries: FAST CORPORATION (Yamato-city, Kanagawa prefecture), TOKYO ELECTRON DEVICE NAGASAKI LTD. (Isahaya-city, Nagasaki prefecture)

Overseas (business/marketing locations and design and development locations): Dalian, Yokohama, Ottawa, Seoul, Silicon Valley, Shanghai, Taipei, Shenzhen, Bangkok, Wuxi, Hong Kong, Singapore, Philippines

Note on forward-looking statements: This Investors Guide was prepared on July 1, 2019. Forward-looking statements, including business strategies and business forecasts, were made by the Company’s management, based on information available at that time, and may be revised due to changes in the business environment. Therefore, please be advised that the Company cannot guarantee the accuracy or the reliability of the statements. For the latest information, please refer to our information releases or our website. Note also that product and service names remain the trademarks of their respective owners.

Corporate Communications Dept. https://www.teldevice.co.jp World Headquarters: Yokohama East Square, 1-4 Kinko-cho, Kanagawa-ku, Yokohama

CERT Education Tools

[ CNC ] [ ROBOTICS ] [ ROBOMACHINE ] [ SERVICE ] [ EDUCATION ]

CERTIFIED EDUCATION PROGRAMS: AUTOMATION & ADVANCED MACHINING

MANUFACTURING IS GROWING

The need for skilled workers with automation training certifications is growing, too. According to The Manufacturing Institute, there are over 600,000 unfilled manufacturing positions in the US, but many applicants lack the skills and training to do the job. In the next ten years, this gap is expected to grow to as many as 2 million unfilled manufacturing jobs as the number of highly skilled manufacturing jobs continues to outpace the pool of trained candidates.

80% of Manufacturers Report Shortage of Skilled Workers

FANUC Certified Education Training

FANUC certified education programs provide industry-relevant training and competency-based skills development across the FANUC CNC and robotics product lines. FANUC collaborates with other industry technology leaders in automation, advanced manufacturing, the connected enterprise, and education to develop a certification program that addresses the needs of employers and aligns with secondary and post-secondary programs of study. FANUC is an authorized provider of Continuing Education Units. All FANUC educational programs meet the national ANSI/IACET standards.

CERTIFIED EDUCATION

Our programs provide manufacturers and educators with a STEM-based curriculum centered on CNC and robot programming and operation. With the majority of industry turning to FANUC robots and CNC systems, graduates who are familiar with FANUC equipment and systems have an advantage over their competitors in the job market.

An Industrial Robotics Course for Manufacturing Engineers

Paper ID #15654

An Industrial Robotics Course for Manufacturing Engineers

Dr. Jeffrey L. Newcomer, Western Washington University

Dr. Jeffrey L. Newcomer is a Professor of Manufacturing Engineering and Chair of the Engineering and Design Department at Western Washington University. He received his Ph.D. in Mechanical Engineering from Rensselaer Polytechnic Institute.

© American Society for Engineering Education, 2016

For many years the automobile industry was the home to more than half of the robots used in U.S. manufacturing. Recently, however, many other industries have been or are planning to introduce robots into their manufacturing processes [1]. In the Pacific Northwest region several companies in aerospace, electronics, apparel, and commercial cookware have either introduced robots or expanded their use in recent years. As such, an introduction to robotics in the context of manufacturing is becoming more important for students pursuing degrees in Manufacturing Engineering. There is, however, always a challenge when teaching robotics to find the correct balance between application and modeling. Many robotics courses taught in Electrical or Mechanical Engineering Departments have a tendency to emphasize modeling over application, but a well-prepared Manufacturing Engineer needs to understand where the challenges in robotics applications lie as well as understanding what is going on ‘under the hood’. A quick survey of the 21 ABET accredited Manufacturing Engineering programs indicates that only five have a manufacturing specific robotics course, and three of those are special topics courses that are not offered consistently [2]. Other programs have robotics integrated into a general automation class, have cross-listed classes with other programs, or have graduate classes on robotics that are available to seniors, so there are not the same opportunities for depth or focus on manufacturing applications.

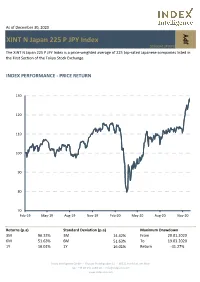

XINT N Japan 225 P JPY Index

As of December 30, 2020

XINT N Japan 225 P JPY Index (JPY, DE000A13PWP8)

The XINT N Japan 225 P JPY Index is a price-weighted average of 225 top-rated Japanese companies listed in the First Section of the Tokyo Stock Exchange.

[Chart: Index performance, price return, Feb-19 to Nov-20]

Returns (p.a.): 3M 96.33%, 6M 51.63%, 1Y 16.01%
Standard deviation (p.a.): 3M 14.42%, 6M 51.63%, 1Y 16.01%
Maximum drawdown: -31.27% (from 20.01.2020 to 19.03.2020)

Index Intelligence GmbH - Grosser Hirschgraben 15 - 60311 Frankfurt am Main, Tel.: +49 69 247 5583 50 - [email protected] www.index-int.com

Top 10 Constituents

| Constituent | FFMV (JPYm) | Weight % | Industry Sector |
| --- | --- | --- | --- |
| Fast Retailing Co Ltd | 5 | 12.13 | Retail |
| SoftBank Group Corp | 2 | 6.34 | Telecommunications |
| Tokyo Electron Ltd | 2 | 5.04 | Technology |
| FANUC Corp | 1 | 3.33 | Industrial Goods & Services |
| M3 Inc | 1 | 3.07 | Health Care |
| Daikin Industries Ltd | 1 | 3.01 | Construction & Materials |
| KDDI Corp | 1 | 2.41 | Telecommunications |
| Shin-Etsu Chemical Co Ltd | 1 | 2.37 | Chemicals |
| Terumo Corp | 1 | 2.26 | Health Care |
| Chugai Pharmaceutical Co Ltd | 1 | 2.17 | Health Care |
| Total | 16 | 42.13 | |

This information has been prepared by Index Intelligence GmbH (“IIG”). All information is provided "as is" and IIG makes no express or implied warranties, and expressly disclaims all warranties of merchantability or fitness for a particular purpose or use with respect to any data included herein.

FTSE Publications

FTSE Russell Publications, 20 May 2020

FTSE Japan: Indicative Index Weight Data as at Closing on 31 March 2020

| Constituent | Index weight (%) | Country | Constituent | Index weight (%) | Country | Constituent | Index weight (%) | Country |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| 77 Bank | 0.03 | JAPAN | Denso Corporation | 0.45 | JAPAN | Isetan Mitsukoshi Holdings | 0.06 | JAPAN |
| ABC-Mart | 0.04 | JAPAN | Dentsu Inc | 0.13 | JAPAN | Isuzu Motors | 0.1 | JAPAN |
| Acom | 0.05 | JAPAN | DIC | 0.06 | JAPAN | Ito En | 0.09 | JAPAN |
| Advantest Corp | 0.24 | JAPAN | Disco | 0.17 | JAPAN | Itochu Corp | 0.87 | JAPAN |
| Aeon | 0.48 | JAPAN | DMG Mori Seiki | 0.03 | JAPAN | Itochu Techno Solutions | 0.08 | JAPAN |
| AEON Financial Service | 0.04 | JAPAN | Dowa Holdings | 0.04 | JAPAN | Itoham Yonekyu Holdings | 0.02 | JAPAN |
| Aeon Mall | 0.05 | JAPAN | East Japan Railway | 0.84 | JAPAN | Iyo Bank | 0.04 | JAPAN |
| AGC | 0.14 | JAPAN | Ebara | 0.06 | JAPAN | Izumi Co Ltd | 0.03 | JAPAN |
| Aica Kogyo | 0.05 | JAPAN | Eisai | 0.6 | JAPAN | J Front Retailing | 0.06 | JAPAN |
| Ain Pharmaciez | 0.05 | JAPAN | Electric Power Development | 0.1 | JAPAN | Japan Airlines | 0.06 | JAPAN |
| Air Water | 0.08 | JAPAN | Ezaki Glico | 0.07 | JAPAN | Japan Airport Terminal | 0.06 | JAPAN |
| Aisin Seiki Co | 0.13 | JAPAN | FamilyMart | 0.13 | JAPAN | Japan Aviation Electronics | 0.02 | JAPAN |
| Ajinomoto Co | 0.28 | JAPAN | Fancl Corp | 0.05 | JAPAN | Japan Exchange Group | 0.29 | JAPAN |
| Alfresa Holdings | 0.1 | JAPAN | Fanuc | 0.8 | JAPAN | Japan Petroleum Exploration | 0.02 | JAPAN |
| Alps Alpine | 0.06 | JAPAN | Fast Retailing | 0.65 | JAPAN | Japan Post Bank | 0.12 | JAPAN |
| Amada | 0.08 | JAPAN | FP Corporation | 0.05 | JAPAN | Japan Post Holdings | 0.32 | JAPAN |
| Amano Corp | 0.05 | JAPAN | Fuji Electric | 0.09 | JAPAN | Japan Post Insurance | 0.07 | JAPAN |
| ANA Holdings | 0.08 | JAPAN | Fuji Media Holdings | 0.01 | JAPAN | Japan Steel | | |

FANUC Maintains Its Reputation for Being the Best

June 2, 2015. Rating: Market Perform. Price target: Consensus ¥26,468; O'Neil ¥26,442. Closing price on 6/2/15: ¥27,700.

Romeo Alvarez, senior equity analyst, [email protected], 310.448.6913, 800.545.8940 | williamoneil.com

Source: FANUC America Corp.

FANUC Maintains Its Reputation for Being the Best

FANUC Corp. ([email protected]; 6954:JP), producer of robotic and industrial automation systems, holds a dominant global market position, due in part to its highly efficient manufacturing base. Along with KUKA AG (IWKX.DE; KU2:GR), ABB Ltd. (ABB.CH; ABBN:VX), and Yaskawa Electric ([email protected]; 6506:JP), FANUC is part of “the Big 4” vying for China’s rapidly expanding industrial robot market, and like some of these competitors, it is launching new, non-automotive applications to fill emerging demand. We believe that FANUC will continue to post stable revenue and profit growth over the next few years, as one of the few pure-plays in the factory automation market. We are initiating coverage of this stock under our Robotics and Automation theme with a Market Perform rating.

FANUC, as of 06/2/2015:
Symbol: [email protected]; 6954:JP
Price: ¥27,700
Market cap (US$ bil): 53.3
Shares outstanding (mil): 240
50-day avg daily volume (US$ mil): 42.4
52-week high/low: ¥28,575/¥16,940
2014 ROE: 16.1
Dividend yield: 2.3%
P/E: 26
1-year return: 63%
Relative Strength rating: 87
Accumulation/Distribution rating: C

Company Profile

Japan-based FANUC develops and manufactures computer numerical control (CNC) equipment, factory automation systems, and industrial robots.

FOCAS Automation Solutions (FAS)– the Easy Choice for CNC Communications and Data Collection

FOCAS Automation Solutions: Simple connectivity, easy data transmission and collection

FANUC America Corporation, www.fanucamerica.com, 888-FANUC-US (888-326-8287)

FOCAS Automation Solutions (FAS): The easy choice for CNC communications and data collection

FOCAS Automation Solutions (FAS), based on the FANUC FOCAS API, are designed to simplify the connectivity of third-party hardware or software to the FANUC CNC, and they allow for easy transmission and collection of data from a FANUC CNC. FAS products are either purely software-based solutions or proprietary software and hardware solutions. All of the FAS solutions below are available in a 30-day trial version from your local FANUC America CNC salesperson or by contacting the FANUC America CNC options team.

FASBacCNC: A user interface that works with FASConnect that allows browsing of all FASConnect PCs and backup data either manually or on a scheduled basis.
FASConnect: Software that provides access and management of data to and from FOCAS enabled CNCs across a network.
FASOPC: An OPC 3.0 compliant server for easy retrieval of data from FOCAS enabled CNCs.
FASScreens: A collection of custom screens to help reduce time and effort to create new or modify existing UIs for the FANUC CNCs.

[Network diagram. Labels: Office PCs and plant floor monitoring PCs, each with 1) FASConnect UI, 2) FASBacCNC, 3) FASOPC Configuration; Office Network; separate PCs (#1 through #x) running real or virtual FASConnect and/or FASOPC; Plant Network.]

FASBacCNC (FOCAS Automation Solution Backup CNC)

FASBacCNC is a powerful and easy to use network based backup solution for FOCAS enabled FANUC CNCs.

Management Direction Update

• Thank you for taking the time out of your busy schedules today to participate in this briefing on our fiscal year 2020 financial results.
• To prevent the spread of COVID-19 infections, we are holding today’s event online. We apologize for any inconvenience this may cause.
• My presentation will offer an overview of the progress of our management direction. To meet the management targets we announced last year for FY2022, I will explain the initiatives we have undertaken in FY2020 and the areas on which we will focus this fiscal year and into the future.

Copyright 2021 FUJITSU LIMITED

• It was just around a year ago that we first defined our Purpose: “to make the world more sustainable by building trust in society through innovation.”
• Today, all of our activities are aligned to achieve this purpose.

• First, I would like to provide a summary of our financial results for FY2020.
• The upper part of the table shows company-wide figures for Fujitsu. Consolidated revenue was 3,589.7 billion yen, and operating profit was 266.3 billion yen.
• In the lower part of the table, which shows the results in our core business of Technology Solutions, consolidated revenue was 3,043.6 billion yen, operating profit was 188.4 billion yen, and our operating profit margin was 6.2%.
• Due to the impact of COVID-19, revenue fell, but because of our shift toward the services business and greater efficiencies, on a company-wide basis our operating profit and profit for the year were the highest in Fujitsu’s history.

PUTTING ROBOTS to WORK Global Growth Opportunity: Investing in the Second Machine Age

AlphaCentric Global Innovations Fund (GNXAX, GNXCX, GNXIX)

PUTTING ROBOTS TO WORK

Global Growth Opportunity: Investing in the Second Machine Age

At AlphaCentric Funds, we believe growth in robotics for workplace automation has reached a tipping point towards wide-scale adoption. In fact, the proliferation of robotics from its roots in the automotive industry has grown exponentially both here in the United States and around the globe due to growth in other industrial and service industry applications. Currently there are 250,000 robots in use in the U.S., the third highest in the world behind Japan and China, according to the Robotic Industries Association.

Wider adoption of robotic applications outside of the automotive industry has translated into increased sales and brighter growth prospects for global robotic manufacturers and automation companies. Accordingly, we believe the increased utilization of robotics and automation technologies presents a thematic investment opportunity and the chance to participate in growth dynamics related to the adoption of robotics in industrial and service automation in “The Second Machine Age.”

KEY TAKEAWAYS
• Global spending on robotics is expected to reach $103.1 billion in 2018, an increase of 21% over 2017.
• In 2019, worldwide robotics spending is forecast to be $103.4 billion. By 2022, IDC expects this spending will reach $210.3 billion with a compound annual growth rate (CAGR) of 20.2% [1].
• Robotics solutions are used in five main sectors, with 22 application communities and growing.
• Robotics and automation companies are set to benefit from newly enacted tariffs.
• Robotics and automation companies offer a thematic investment opportunity and the potential for long-term outperformance.