The Idols of Modernity: the Humanity Of

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

On Science and Pseudoscience

ON SCIENCE AND PSEUDOSCIENCE JASON ROSENHOUSE Reviews of Science or Pseudoscience: Magnetic Healing, Psychic Phenomena and Other Heterodoxies, by Henry Bauer, University of Illinois Press, 2000, 275 pages, ISBN 0-252-02601-2 and The Borderlands of Science: Where Sense Meets Nonsense, by Michael Shermer, Oxford University Press, 2001, 360 pages, ISBN 0-19-514326-4 Is the line between science and pseudoscience set arbitrarily by an often arrogant scientific elite? Henry Bauer, emeritus professor of chemistry at Virginia Polytechnic Institute and State University, believes that it is. While he does not claim that ESP, UFOs, Bigfoots, cold fusion, or any other variety of fringe science is necessarily legitimate, he does claim that many of them might be. He further claims that science does itself a disservice when it allows a stifling orthodoxy to squelch offbeat ideas. Since many of the best ideas in human history began as heresies, we do well to be careful before passing judgment on anything. Bauer writes, “Comparisons between anomalistics and science as it is actually practiced will show that no sharp division can be established,” (7) “anomalistics” being a politically correct term for the study of bizarre claims. He is not impressed by the various checklists served up by philosophers for distinguishing science from pseudoscience. He points out, quite correctly, that not one perfectly distinguishes between the two. But Bauer is creating a false dichotomy. Science and pseudoscience are opposite ends of a continuum, not rigidly defined categories. Subjects like ESP are dismissed neither for their inherent absurdity nor for their inability to conform to an arbitrary set of philosophical criteria.

HUMANISM Religious Practices

HUMANISM Religious Practices . Required Daily Observances . Required Weekly Observances . Required Occasional Observances/Holy Days Religious Items . Personal Religious Items . Congregate Religious Items . Searches Requirements for Membership . Requirements (Includes Rites of Conversion) . Total Membership Medical Prohibitions Dietary Standards Burial Rituals . Death . Autopsies . Mourning Practices Sacred Writings Organizational Structure . Headquarters Location . Contact Office/Person History Theology Religious Practices Required Daily Observance No required daily observances. Required Weekly Observance No required weekly observances, but many Humanists find fulfillment in congregating with other Humanists on a weekly basis (especially those who characterize themselves as Religious Humanists) or other regular basis for social and intellectual engagement, discussions, book talks, lectures, and similar activities. Required Occasional Observances No required occasional observances, but some Humanists (especially those who characterize themselves as Religious Humanists) celebrate life-cycle events with baby naming, coming of age, and marriage ceremonies as well as memorial services. Even though there are no required observances, there are several days throughout the calendar year that many Humanists consider holidays. They include (but are not limited to) the following: February 12. Darwin Day: This marks the birthday of Charles Darwin, whose research and findings in the field of biology, particularly his theory of evolution by natural selection, represent a breakthrough in human knowledge that Humanists celebrate. First Thursday in May. National Day of Reason: This day acknowledges the importance of reason, as opposed to blind faith, as the best method for determining valid conclusions. June 21 - Summer Solstice. This day is also known as World Humanist Day and is a celebration of the longest day of the year.

Ron Numbers Collection-- the Creationists

Register of the Ron Numbers Collection-- The Creationists Collection 178 Center for Adventist Research James White Library Andrews University Berrien Springs, Michigan 49104-1400 1992, revised 2007 Ron Numbers Collection--The Creationists (Collection 178) Scope and Content This collection contains the records used as resource material for the production of Dr. Numbers' book, The Creationists, published in 1992. This book documents the development of the creationist movement in the face of the growing tide of evolution. The bulk of the collection dates from the 20th century and covers most of the prominent, individual creationists and pro-creation groups of the late 19th and 20th century primarily in the United States, and secondarily, those in England, Australia, and Canada. Among the types of records included are photocopies of articles and other publications, theses, interview tapes and transcripts, and official publications of various denominations. One of the more valuable contributions of this collection is the large quantity of correspondence of prominent individuals. These records are all photocopies. A large section contains documentation related to Seventh-day Adventist creationists. Adventists were some of the leading figures in the creationist movement, and foremost among this group is George McCready Price. The Adventist Heritage Center holds a Price collection. The Numbers collection contributes additional correspondence and other documentation related to Price. Arrangement Ron Numbers organized this collection for the purpose of preparing his book manuscript, though the book itself is not organized in this way. Dr. Numbers suggested his original arrangement be retained. While the collection is arranged in its original order, the outline that follows may be of help to some researchers.

The Three Phases of Arendt's Theory of Totalitarianism*

The Three Phases of Arendt's Theory of Totalitarianism* Hannah Arendt's The Origins of Totalitarianism, first published in 1951, is a bewilderingly wide-ranging work, a book about much more than just totalitarianism and its immediate origins. In fact, it is not really about those immediate origins at all. The book's peculiar organization creates a certain ambiguity regarding its intended subject-matter and scope. The first part, "Antisemitism," tells the story of the rise of modern, secular anti-Semitism (as distinct from what the author calls "religious Jew-hatred") up to the turn of the twentieth century, and ends with the Dreyfus affair in France—a "dress rehearsal," in Arendt's words, for things still worse to come (10). The second part, "Imperialism," surveys an assortment of pathologies in the world politics of the late nineteenth and early twentieth centuries, leading to (but not directly involving) the First World War. This part of the book examines the European powers' rapacious expansionist policies in Africa and Asia—in which overseas investment became the pretext for raw, openly racist exploitation—and the concomitant emergence in Central and Eastern Europe of "tribalist" ethnic movements whose (failed) ambition was the replication of those *Earlier versions of this essay were presented at the University of Virginia and at the conference commemorating the fiftieth anniversary of The Origins of Totalitarianism hosted by the Hannah Arendt-Zentrum at Carl Ossietzky University in Oldenburg, Germany. I am grateful to Joshua Dienstag and Antonia Grunenberg, respectively, for arranging these two occasions, and also to the members of the audience at each—especially Lawrie Balfour, Wolfgang Heuer, George Klosko, Allan Megill, Alfons Sollner, and Zoltan Szankay—for their helpful comments and criticisms.

The Culture of Wikipedia

Good Faith Collaboration: The Culture of Wikipedia Joseph Michael Reagle Jr. Foreword by Lawrence Lessig The MIT Press, Cambridge, MA. Web edition, Copyright © 2011 by Joseph Michael Reagle Jr. CC-NC-SA 3.0 Wikipedia's style of collaborative production has been lauded, lambasted, and satirized. Despite unease over its implications for the character (and quality) of knowledge, Wikipedia has brought us closer than ever to a realization of the centuries-old pursuit of a universal encyclopedia. Good Faith Collaboration: The Culture of Wikipedia is a rich ethnographic portrayal of Wikipedia's historical roots, collaborative culture, and much debated legacy. Contents: Foreword; Preface to the Web Edition; Praise for Good Faith Collaboration; Preface; Extended Table of Contents; 1. Nazis and Norms; 2. The Pursuit of the Universal Encyclopedia; 3. Good Faith Collaboration; 4. The Puzzle of Openness. Praise for Good Faith Collaboration: "Reagle offers a compelling case that Wikipedia's most fascinating and unprecedented aspect isn't the encyclopedia itself — rather, it's the collaborative culture that underpins it: brawling, self-reflexive, funny, serious, and full-tilt committed to the project, even if it means setting aside personal differences. Reagle's position as a scholar and a member of the community makes him uniquely situated to describe this culture." —Cory Doctorow, Boing Boing "Reagle provides ample data regarding the everyday practices and cultural norms of the community which collaborates to produce Wikipedia. His rich research and nuanced appreciation of the complexities of cultural digital media research are well presented.

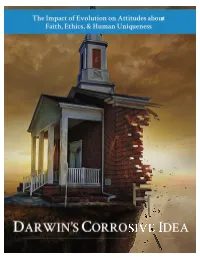

Darwins-Corrosive-Idea.Pdf

This report was prepared and published by Discovery Institute’s Center for Science and Culture, a non-profit, non-partisan educational and research organization. The Center’s mission is to advance the understanding that human beings and nature are the result of intelligent design rather than a blind and undirected process. We seek long-term scientific and cultural change through cutting-edge scientific research and scholarship; education and training of young leaders; communication to the general public; and advocacy of academic freedom and free speech for scientists, teachers, and students. For more information about the Center, visit www.discovery.org/id. FOR FREE RESOURCES ABOUT SCIENCE AND FAITH, VISIT WWW.SCIENCEANDGOD.ORG/RESOURCES. PUBLISHED NOVEMBER, 2016. © 2016 BY DISCOVERY INSTITUTE. DARWIN’S CORROSIVE IDEA The Impact of Evolution on Attitudes about Faith, Ethics, and Human Uniqueness John G. West, PhD* EXECUTIVE SUMMARY In his influential book Darwin’s Dangerous Idea, philosopher Daniel Dennett praised Darwinian evolution for being a “universal acid” that dissolves traditional religious and moral beliefs.1 Evolutionary biologist Richard Dawkins has similarly praised Darwin for making “it possible to be an intellectually fulfilled atheist.”2 Although numerous studies have documented the influence of Darwinian theory and other scientific ideas on the views of [...] have asked about the impact of science on a person’s religious faith typically have not explored the impact of specific scientific ideas such as Darwinian evolution.5 In order to gain insights into the impact of specific scientific ideas on popular beliefs about God and ethics, Discovery Institute conducted a nationwide survey of a representative sample of 3,664 American adults.

“Junk Science”: the Criminal Cases

Case Western Reserve University School of Law Scholarly Commons Faculty Publications 1993 “Junk Science”: The Criminal Cases Paul C. Giannelli Case Western University School of Law, [email protected] Follow this and additional works at: https://scholarlycommons.law.case.edu/faculty_publications Part of the Evidence Commons, and the Litigation Commons Repository Citation Giannelli, Paul C., "“Junk Science”: The Criminal Cases" (1993). Faculty Publications. 393. https://scholarlycommons.law.case.edu/faculty_publications/393 This Article is brought to you for free and open access by Case Western Reserve University School of Law Scholarly Commons. It has been accepted for inclusion in Faculty Publications by an authorized administrator of Case Western Reserve University School of Law Scholarly Commons. 0091-4169/93/8401-0105 THE JOURNAL OF CRIMINAL LAW & CRIMINOLOGY Vol. 84, No. 1 Copyright © 1993 by Northwestern University, School of Law Printed in U.S.A. "JUNK SCIENCE": THE CRIMINAL CASES PAUL C. GIANNELLI* I. INTRODUCTION Currently, the role of expert witnesses in civil trials is under vigorous attack. "Expert testimony is becoming an embarrassment to the law of evidence," notes one commentator.1 Articles like those entitled "Experts up to here"2 and "The Case Against Expert Witnesses"3 appear in Forbes and Fortune. Terms such as "junk science," "litigation medicine," "fringe science," and "frontier science" are in vogue.4 Physicians complain that "[l]egal cases can now be decided on the type of evidence that the scientific community rejected decades ago."5 A. THE FEDERAL RULES OF EVIDENCE The expert testimony provisions of the Federal Rules of Evidence are the focal point of criticism.

Biennial Report 2012–2013

Biennial Report 2012–2013 Institut für Grenzgebiete der Psychologie und Psychohygiene e.V. (IGPP) Freiburg im Breisgau. Institut für Grenzgebiete der Psychologie und Psychohygiene e.V. (IGPP) Wilhelmstr. 3a D-79098 Freiburg i. Br. Telephone: +49 (0)761 20721 10 Fax: +49 (0)761 20721 99 Internet: www.igpp.de Prof. em. Dr. Dieter Vaitl (ed.) Printed by: Druckwerkstatt im Grün Druckerei und Verlagsgesellschaft mbH All rights reserved: Institut für Grenzgebiete der Psychologie und Psychohygiene e.V. Freiburg i. Br., March 2014 Contents: Preface; 1. History; 2. Research; 2.1 Theory and Data Analysis; 2.2 Empirical and Analytical Psychophysics; 2.3 Research Group Clinical and Physiological Psychology; 2.4 Cultural Studies and Social Research; 2.5 Cultural and Historical Studies, Archives and Library; 2.6 Counseling and Information; 2.7 Bender Institute of Neuroimaging

Lexical Semantic Analysis in Natural Language Text

Lexical Semantic Analysis in Natural Language Text Nathan Schneider Language Technologies Institute School of Computer Science Carnegie Mellon University July 28, 2014 Submitted in partial fulfillment of the requirements for the degree of doctor of philosophy in language and information technologies Abstract Computer programs that make inferences about natural language are easily fooled by the often haphazard relationship between words and their meanings. This thesis develops Lexical Semantic Analysis (LxSA), a general-purpose framework for describing word groupings and meanings in context. LxSA marries comprehensive linguistic annotation of corpora with engineering of statistical natural language processing tools. The framework does not require any lexical resource or syntactic parser, so it will be relatively simple to adapt to new languages and domains. The contributions of this thesis are: a formal representation of lexical segments and coarse semantic classes; a well-tested linguistic annotation scheme with detailed guidelines for identifying multiword expressions and categorizing nouns, verbs, and prepositions; an English web corpus annotated with this scheme; and an open source NLP system that automates the analysis by statistical sequence tagging. Finally, we motivate the applicability of lexical semantic information to sentence-level language technologies (such as semantic parsing and machine translation) and to corpus-based linguistic inquiry. I, Nathan Schneider, do solemnly swear that this thesis is my own work, and that everything therein is, to the best of my knowledge, true, accurate, and properly cited, so help me Vinken. To my parents, who gave me language. In memory of Charles “Chuck” Fillmore, one of the great linguists.

Burdick, Clifford Leslie (1894–1992)

Burdick, Clifford Leslie (1894–1992) JAMES L. HAYWARD James L. Hayward, Ph.D. (Washington State University), is a professor emeritus of biology at Andrews University where he taught for 30 years. He is widely published in literature dealing with ornithology, behavioral ecology, and paleontology, and has contributed numerous articles to Adventist publications. His book, The Creation-Evolution Controversy: An Annotated Bibliography (Scarecrow Press, 1998), won a Choice award from the American Library Association. He also edited Creation Reconsidered (Association of Adventist Forums, 2000). Clifford Leslie Burdick, a Seventh-day Adventist consulting geologist, was an outspoken defender of young earth creationism and involved in the search for Noah’s Ark. Many of his claims were sensationalist and later discredited. Early Life and Education Born in 1894, Burdick was a graduate of the Seventh Day Baptist Milton College of Wisconsin. After accepting the Seventh-day Adventist message, Burdick enrolled at Emmanuel Missionary College (EMC, now Andrews University) in hopes of becoming a missionary. There he met the prominent creationist George McCready Price who inspired Burdick’s interest in “Flood geology.”1 [Photo: Clifford Leslie Burdick. From Youth's Instructor, July 11, 1967.] In 1922 Burdick submitted a thesis to EMC entitled “The Sabbath: Its Development in America,” which he later claimed earned him a Master of Arts degree in theology. Records show, however, that he never received a degree at EMC. He also claimed to have received a master’s degree in geology from the University of Wisconsin. Although he did complete several courses in geology there, he failed his oral exams and thus was denied a degree.2 During the 1950s Burdick took courses in geology and paleontology at the University of Arizona with plans to earn a PhD.

Darwin Day in Deep Time: Promoting Evolutionary Science Through Paleontology Sarah L

Sheffield and Bauer Evo Edu Outreach (2017) 10:10 DOI 10.1186/s12052-017-0073-3 RESEARCH ARTICLE Open Access Darwin Day in deep time: promoting evolutionary science through paleontology Sarah L. Sheffield1,2* and Jennifer E. Bauer2 Abstract Charles Darwin’s birthday, February 12th, is an international celebration coined Darwin Day. During the week of his birthday, universities, museums, and science-oriented organizations worldwide host events that celebrate Darwin’s scientific achievements in evolutionary biology. The University of Tennessee, Knoxville (UT) has one of the longest running celebrations in the nation, with 2016 marking the 19th year. For 2016, the theme for our weeklong series of events was paleontology, chosen to celebrate new research in the field and to highlight the specific misconceptions of evolution within the context of geologic time. We provide insight into the workings of one of our largest and most successful Darwin Day celebrations to date, so that other institutions might also be able to host their own rewarding Darwin Day events in the future. Keywords: Charles Darwin, Paleontology, Tennessee, Community, Evolutionary biology, Fossils Introduction February 12th is an annual international celebration of the life and work of Charles Darwin, collectively termed “Darwin Day”. These global events have similar objectives: to educate and excite the public about evolutionary science. Although Darwin’s work is most often discussed in terms of its incredible impact on evolutionary biology [...] event, was given by Dr. Neil Shubin, paleontologist and author of popular science book Your Inner Fish, was attended by over 600 university and community members. Celebrating Darwin’s theory of evolution by natural selection is particularly important in the United States, where nearly one-third of the adult population does not accept the evidence for evolution (Miller et al.

Genetic Ancestry Testing Among White Nationalists Aaron

When Genetics Challenges a Racist’s Identity: Genetic Ancestry Testing among White Nationalists Aaron Panofsky and Joan Donovan, UCLA Abstract This paper considers the emergence of new forms of race-making using a qualitative analysis of online discussions of individuals’ genetic ancestry test (GAT) results on the white nationalist website Stormfront. Seeking genetic confirmation of personal identities, white nationalists often confront information they consider evidence of non-white or non-European ancestry. Despite their essentialist views of race, much less than using the information to police individuals’ membership, posters expend considerable energy to repair identities by rejecting or reinterpreting GAT results. Simultaneously, however, Stormfront posters use the particular relationships made visible by GATs to re-imagine the collective boundaries and constitution of white nationalism. Bricoleurs with genetic knowledge, white nationalists use a “racial realist” interpretive framework that departs from canons of genetic science but cannot be dismissed simply as ignorant. Introduction Genetic ancestry tests (GATs) are marketed as a tool for better self-knowledge. Purporting to reveal aspects of identity and relatedness often unavailable in traditional genealogical records, materials promoting GATs advertise the capacity to reveal one’s genetic ties to ethnic groups, ancient populations and historical migrations, and even famous historical figures. But this opportunity to “know thyself” can come with significant risks. Craig Cobb had gained public notoriety and cult status among white supremacists for his efforts to buy up property in Leith, ND, take over the local government, and establish a white supremacist enclave. In 2013, Cobb was invited on The Trisha Show, a daytime talk show, to debate these efforts.