A Minimax Algorithm Better Than Alpha-Beta?

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Graph Traversals

Graph Traversals CS200 - Graphs 1 Tree traversal reminder Pre order A A B D G H C E F I In order B C G D H B A E C F I Post order D E F G H D B E I F C A Level order G H I A B C D E F G H I Connected Components n The connected component of a node s is the largest set of nodes reachable from s. A generic algorithm for creating connected component(s): R = {s} while ∃edge(u, v) : u ∈ R∧v ∉ R add v to R n Upon termination, R is the connected component containing s. q Breadth First Search (BFS): explore in order of distance from s. q Depth First Search (DFS): explores edges from the most recently discovered node; backtracks when reaching a dead- end. 3 Graph Traversals – Depth First Search n Depth First Search starting at u DFS(u): mark u as visited and add u to R for each edge (u,v) : if v is not marked visited : DFS(v) CS200 - Graphs 4 Depth First Search A B C D E F G H I J K L M N O P CS200 - Graphs 5 Question n What determines the order in which DFS visits nodes? n The order in which a node picks its outgoing edges CS200 - Graphs 6 DepthGraph Traversalfirst search algorithm Depth First Search (DFS) dfs(in v:Vertex) mark v as visited for (each unvisited vertex u adjacent to v) dfs(u) n Need to track visited nodes n Order of visiting nodes is not completely specified q if nodes have priority, then the order may become deterministic for (each unvisited vertex u adjacent to v in priority order) n DFS applies to both directed and undirected graphs n Which graph implementation is suitable? CS200 - Graphs 7 Iterative DFS: explicit Stack dfs(in v:Vertex) s – stack for keeping track of active vertices s.push(v) mark v as visited while (!s.isEmpty()) { if (no unvisited vertices adjacent to the vertex on top of the stack) { s.pop() //backtrack else { select unvisited vertex u adjacent to vertex on top of the stack s.push(u) mark u as visited } } CS200 - Graphs 8 Breadth First Search (BFS) n Is like level order in trees A B C D n Which is a BFS traversal starting E F G H from A? A. -

FIDE Laws of Chess

FIDE Laws of Chess FIDE Laws of Chess cover over-the-board play. The Laws of Chess have two parts: 1. Basic Rules of Play and 2. Competition Rules. The English text is the authentic version of the Laws of Chess (which was adopted at the 84th FIDE Congress at Tallinn (Estonia) coming into force on 1 July 2014. In these Laws the words ‘he’, ‘him’, and ‘his’ shall be considered to include ‘she’ and ‘her’. PREFACE The Laws of Chess cannot cover all possible situations that may arise during a game, nor can they regulate all administrative questions. Where cases are not precisely regulated by an Article of the Laws, it should be possible to reach a correct decision by studying analogous situations which are discussed in the Laws. The Laws assume that arbiters have the necessary competence, sound judgement and absolute objectivity. Too detailed a rule might deprive the arbiter of his freedom of judgement and thus prevent him from finding a solution to a problem dictated by fairness, logic and special factors. FIDE appeals to all chess players and federations to accept this view. A necessary condition for a game to be rated by FIDE is that it shall be played according to the FIDE Laws of Chess. It is recommended that competitive games not rated by FIDE be played according to the FIDE Laws of Chess. Member federations may ask FIDE to give a ruling on matters relating to the Laws of Chess. BASIC RULES OF PLAY Article 1: The nature and objectives of the game of chess 1.1 The game of chess is played between two opponents who move their pieces on a square board called a ‘chessboard’. -

Learning to Play Chess Using Temporal Differences

Machine Learning, 40, 243–263, 2000 c 2000 Kluwer Academic Publishers. Manufactured in The Netherlands. Learning to Play Chess Using Temporal Differences JONATHAN BAXTER [email protected] Department of Systems Engineering, Australian National University 0200, Australia ANDREW TRIDGELL [email protected] LEX WEAVER [email protected] Department of Computer Science, Australian National University 0200, Australia Editor: Sridhar Mahadevan Abstract. In this paper we present TDLEAF(), a variation on the TD() algorithm that enables it to be used in conjunction with game-tree search. We present some experiments in which our chess program “KnightCap” used TDLEAF() to learn its evaluation function while playing on Internet chess servers. The main success we report is that KnightCap improved from a 1650 rating to a 2150 rating in just 308 games and 3 days of play. As a reference, a rating of 1650 corresponds to about level B human play (on a scale from E (1000) to A (1800)), while 2150 is human master level. We discuss some of the reasons for this success, principle among them being the use of on-line, rather than self-play. We also investigate whether TDLEAF() can yield better results in the domain of backgammon, where TD() has previously yielded striking success. Keywords: temporal difference learning, neural network, TDLEAF, chess, backgammon 1. Introduction Temporal Difference learning, first introduced by Samuel (Samuel, 1959) and later extended and formalized by Sutton (Sutton, 1988) in his TD() algorithm, is an elegant technique for approximating the expected long term future cost (or cost-to-go) of a stochastic dy- namical system as a function of the current state. -

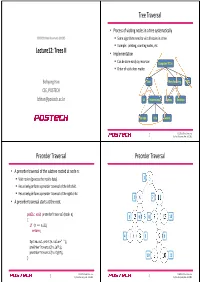

Lecture12: Trees II Tree Traversal Preorder Traversal Preorder Traversal

Tree Traversal • Process of visiting nodes in a tree systematically CSED233: Data Structures (2013F) . Some algorithms need to visit all nodes in a tree. Example: printing, counting nodes, etc. Lecture12: Trees II • Implementation . Can be done easily by recursion Computers”R”Us . Order of visits does matter. Bohyung Han Sales Manufacturing R&D CSE, POSTECH [email protected] US International Laptops Desktops Europe Asia Canada CSED233: Data Structures 2 by Prof. Bohyung Han, Fall 2013 Preorder Traversal Preorder Traversal • A preorder traversal of the subtree rooted at node n: . Visit node n (process the node's data). 1 . Recursively perform a preorder traversal of the left child. Recursively perform a preorder traversal of the right child. 2 7 • A preorder traversal starts at the root. public void preOrderTraversal(Node n) 3 6 8 12 { if (n == null) return; 4 5 9 System.out.print(n.value+" "); preOrderTraversal(n.left); preOrderTraversal(n.right); 10 11 } CSED233: Data Structures CSED233: Data Structures 3 by Prof. Bohyung Han, Fall 2013 4 by Prof. Bohyung Han, Fall 2013 Inorder Traversal Inorder Traversal • A preorder traversal of the subtree rooted at node n: . Recursively perform a preorder traversal of the left child. 6 . Visit node n (process the node's data). Recursively perform a preorder traversal of the right child. 4 11 • An iorder traversal starts at the root. public void inOrderTraversal(Node n) 2 5 7 12 { if (n == null) return; 1 3 9 inOrderTraversal(n.left); System.out.print(n.value+" "); inOrderTraversal(n.right); } 8 10 CSED233: Data Structures CSED233: Data Structures 5 by Prof. -

Hypermodern Game of Chess the Hypermodern Game of Chess

The Hypermodern Game of Chess The Hypermodern Game of Chess by Savielly Tartakower Foreword by Hans Ree 2015 Russell Enterprises, Inc. Milford, CT USA 1 The Hypermodern Game of Chess The Hypermodern Game of Chess by Savielly Tartakower © Copyright 2015 Jared Becker ISBN: 978-1-941270-30-1 All Rights Reserved No part of this book maybe used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Published by: Russell Enterprises, Inc. PO Box 3131 Milford, CT 06460 USA http://www.russell-enterprises.com [email protected] Translated from the German by Jared Becker Editorial Consultant Hannes Langrock Cover design by Janel Norris Printed in the United States of America 2 The Hypermodern Game of Chess Table of Contents Foreword by Hans Ree 5 From the Translator 7 Introduction 8 The Three Phases of A Game 10 Alekhine’s Defense 11 Part I – Open Games Spanish Torture 28 Spanish 35 José Raúl Capablanca 39 The Accumulation of Small Advantages 41 Emanuel Lasker 43 The Canticle of the Combination 52 Spanish with 5...Nxe4 56 Dr. Siegbert Tarrasch and Géza Maróczy as Hypermodernists 65 What constitutes a mistake? 76 Spanish Exchange Variation 80 Steinitz Defense 82 The Doctrine of Weaknesses 90 Spanish Three and Four Knights’ Game 95 A Victory of Methodology 95 Efim Bogoljubow -

Efficient Stackless Hierarchy Traversal on Gpus with Backtracking in Constant Time

High Performance Graphics (2016) Ulf Assarsson and Warren Hunt (Editors) Efficient Stackless Hierarchy Traversal on GPUs with Backtracking in Constant Time Nikolaus Binder† and Alexander Keller‡ NVIDIA Corporation 1 1 1 2 3 2 3 2 3 4 5 6 7 4 5 6 7 4 5 6 7 10 11 10 11 10 11 22 23 22 23 22 23 a) b) c) 44 45 44 45 44 45 Figure 1: Backtracking from the current node with key 22 to the next node with key 3, which has been postponed most recently, by a) iterating along parent and sibling references, b) using references to uncle and grand uncle, c) using a stack, or as we propose, a perfect hash directly mapping the key for the next node to its address without using a stack. The heat maps visualize the reduction in the number of backtracking steps. Abstract The fastest acceleration schemes for ray tracing rely on traversing a bounding volume hierarchy (BVH) for efficient culling and use backtracking, which in the worst case may expose cost proportional to the depth of the hierarchy in either time or state memory. We show that the next node in such a traversal actually can be determined in constant time and state memory. In fact, our newly proposed parallel software implementation requires only a few modifications of existing traversal methods and outperforms the fastest stack-based algorithms on GPUs. In addition, it reduces memory access during traversal, making it a very attractive building block for ray tracing hardware. Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Picture/Image Generation—Ray Trac- ing 1. -

Rules of Chess960

Rules of Chess960 A little known and long-discarded offshoot of Classical Chess is the realm of so-called "Randomized Chess" in its various forms. Chess960 stands for Bobby Fischer's new and improved version of "Randomized Chess". Chess960 uses algebraic notation exclusively At the start of every game of a Chess960 game, both players Pawns are set up exactly as they are at the start of every game of Classical Chess. In Chess960, just before the start of every game, both players pieces on their respective back rows receive an identical random shuffle using the Chess960 Computerized Shuffler, which is programmed to set up the pieces in any combination, with the provisos that one Rook has to be to the left and one Rook has to be to the right of the King, and one Bishop has to be on a light- colored square and one Bishop has to be on a dark-colored square. White and Black have identical positions. From behind their respective Pawns the opponents pieces are facing each other directly, symmetrically. Thus for example, if the shuffler places White's back row pieces in the following position: Ra1, Bb1, Kc1, Nd1, Be1, Nf1, Rg1, Qh1, it will place Black's back row Pieces in the following position, Ra8, Bb8, Kc8, Nd8, Be8, Nf8, Rg8, Qh8, etc. (See diagram) In Chess960 there are 960 possible starting positions, the Classical Chess starting position and 959 other starting positions. Of necessity, In Chess960 the castling rule is somewhat modified and broadened to allow for the possibility of each player castling either on or into his or her left side or on or into his or her right side of the board from all of these 960 starting positions. -

Grivas Opening Laboratory

Efstratios Grivas GRIVAS OPENING LABORATORY VOLUME 1 Chess Evolution Cover designer Piotr Pielach Typesetting i-Press ‹www.i-press.pl› First edition 2019 by Chess Evolution Grivas opening laboratory. Volume 1 Copyright © 2019 Chess Evolution All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. ISBN 978-615-5793-19-6 All sales or enquiries should be directed to Chess Evolution 2040 Budaors, Nyar utca 16, Magyarorszag e-mail: [email protected] website: www.chess-evolution.com Printed in Hungary TABLE OF CONTENTS Key to symbols ...............................................................................................................5 Foreword .........................................................................................................................7 Preface ............................................................................................................................11 PART 1. THE GRUENFELD DEFENCE (D91) Chapter 1. Black’s 5th-move Deviat — Various Lines ........................................... 17 Chapter 2. Black’s 5th-move Deviat — 5...dxc4 ......................................................27 Chapter 3. Black’s 7th-move Deviat — 7...dxc4 ......................................................43 Chapter 4. Black’s 11th-move Deviat ........................................................................73 -

Lost in Translation Or Full Steam Ahead Michael Kaeding

Lost in Translation or Full Steam Ahead Michael Kaeding To cite this version: Michael Kaeding. Lost in Translation or Full Steam Ahead. European Union Politics, SAGE Publi- cations, 2008, 9 (1), pp.115-143. 10.1177/1465116507085959. hal-00571759 HAL Id: hal-00571759 https://hal.archives-ouvertes.fr/hal-00571759 Submitted on 1 Mar 2011 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. European Union Politics Lost in Translation or Full DOI: 10.1177/1465116507085959 Volume 9 (1): 115–143 Steam Ahead Copyright© 2008 SAGE Publications The Transposition of EU Transport Los Angeles, London, New Delhi Directives across Member States and Singapore Michael Kaeding European Institute of Public Administration (EIPA), The Netherlands ABSTRACT This study supplements extant literature on implementation in the European Union (EU). The quantitative analysis, which covers the EU transport acquis, reveals five main findings. First, the EU has a transposition deficit in this area, with almost 70% of all national legal instruments causing problems. Second, transposition delay is multifaceted. The results provide strong support for the assertion that dis- tinguishing between the outcomes of the transposition process (on time, short delay or long delay) is a useful method of investigation. -

A Status Report on Losing Chess

1 A status report on Losing Chess Mark Watkins University of Sydney, School of Mathematics and Statistics [email protected] It’s unfortunate that so much knowledge seems to have gone missing. – Gian-Carlo Pascutto 1. INTRODUCTION The purpose of this writing is to report on the current status of Losing Chess. Since the naming of this class of games has variant schools of terminology, we state for definiteness that captures are compulsory (but the choice of the player on turn when there are multiple captures), that the King has no special characteristics, and that castling is not legal. Promotion to a King is allowed. This is also sometimes called Antichess. In particular, we give information about responses to 1. e3. Some of this dates back more than a decade, and is due to others. However, other parts described herein have a few novel features. Unless otherwise stated, we use the International Rules, so that a stalemate is a win for the player on move.1 1.1 Historical sources There exist a number of piecemeal Internet sites that have various information about Losing Chess. However, many of the pages of interest have not been touched in 5-10 years. Most of them use FICS rules, for which stalemate is a win for the player with fewer pieces (and drawn when the piece counts are equal). See also the ICGA page [H]. 1.1.1 Pages of Fabrice Liardet Fabrice Liardet is one of best (human) Losing Chess players in the world. His French language site [Li] has a wealth of information about the game. -

MA/CSSE 473 Day 15

MA/CSSE 473 Day 15 BFS Topological Sort Combinatorial Object Generation MA/CSSE 473 Day 15 • HW 6 due tomorrow, HW 7 Friday, Exam next Tuesday • HW 8 has been updated for this term. Due Oct 8 • ConvexHull implementation problem due Day 27 (Oct 21); assignment is available now – Individual assignment (I changed my mind, but I am giving you 3.5 weeks to do it) • Schedule page now has my projected dates for all remaining reading assignments and written assignments – Topics for future class days and details of assignments 9-17 still need to be updated for this term • Student Questions • DFS and BFS • Topological Sort • Combinatorial Object Generation - Intro Q1 1 Recap: Pseudocode for DFS Graph may not be connected, so we loop. Backtracking happens when this loop ends (no more unmarked neighbors) Analysis? Q2 Notes on DFS • DFS can be implemented with graphs represented as: – adjacency matrix: Θ(|V| 2) – adjacency list: Θ(| V|+|E|) • Yields two distinct ordering of vertices: – order in which vertices are first encountered (pushed onto stack) – order in which vertices become dead-ends (popped off stack) • Applications: – check connectivity, finding connected components – Is this graph acyclic? – finding articulation points, if any (advanced) – searching the state-space of problem for (optimal) solution (AI) Q3 2 Breadth-first search (BFS) • Visits graph vertices by moving across to all the neighbors of last visited vertex. Vertices closer to the start are visited early • Instead of a stack, BFS uses a queue • Level-order tree traversal is -

Discrete Mathematics and Its Applications Seventh Edition

Discrete Mathematics and Its Applications Seventh Edition Kenneth H. Rosen Monmouth University (and.formerly AT&T Laboratories) � onnect Learn •� Succeed" The McGraw·Hi/1 Companies ��onnect Learn a_,Succeed· DISCRETE MATHEMATICS AND ITS APPLICATIONS, SEVENTH EDITION Published by McGraw-Hill, a business unit of The McGraw-Hill Companies, Inc., 1221 Avenue of the Americas, New York, NY 10020. Copyright© 2012 by The McGraw-Hill Companies, Inc. All rights reserved. Previous editions © 2007, 2003, and 1999. No part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without the prior written consent of The McGraw-Hill Companies, Inc., including, but not limited to, in any network or other electronic storage or transmission, or broadcast for distance learning. Some ancillaries, including electronic and print components, may not be available to customers outside the United States. This book is printed on acid-free paper. 1234567890DOW/DOW 10987654 321 ISBN 978-0-07-338309-5 0-07-338309-0 MHID Vice President & Editor-in- Chief: Marty Lange Editorial Director: Michael Lange Global Publisher: Raghothaman Srinivasan Executive Editor: Bill Stenquist Development Editors: LorraineK. Buczek/Rose Kernan Senior Marketing Manager: Curt Reynolds Project Manager: Robin A. Reed Buyer: Sandy Ludovissy Design Coordinator: Brenda A. Rolwes Cover painting: Jasper Johns, Between the Clock and the Bed, 1981. Oil on Canvas (72 x 126 I /4 inches) Collection of the artist. Photograph by Glenn Stiegelman. Cover Art© Jasper Johns/Licensed by VAGA, New York, NY Cover Designer: Studio Montage, St. Louis, Missouri Lead Photo Research Coordinator: Carrie K.