The Structure of Games

Recommended publications

Game Theory Lecture Notes

Game Theory: Penn State Math 486 Lecture Notes, Version 2.1.1. Christopher Griffin, © 2010-2021. Licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 United States License. With Major Contributions By: James Fan, George Kesidis. Other Contributions By: Arlan Stutler, Sarthak Shah. Contents: List of Figures. Preface: 1. Using These Notes; 2. An Overview of Game Theory. Chapter 1. Probability Theory and Games Against the House: 1. Probability; 2. Random Variables and Expected Values; 3. Conditional Probability; 4. The Monty Hall Problem. Chapter 2. Game Trees and Extensive Form: 1. Graphs and Trees; 2. Game Trees with Complete Information and No Chance; 3. Game Trees with Incomplete Information; 4. Games of Chance; 5. Pay-off Functions and Equilibria. Chapter 3. Normal and Strategic Form Games and Matrices: 1. Normal and Strategic Form; 2. Strategic Form Games; 3. Review of Basic Matrix Properties; 4. Special Matrices and Vectors; 5. Strategy Vectors and Matrix Games. Chapter 4. Saddle Points, Mixed Strategies and the Minimax Theorem: 1. Saddle Points; 2. Zero-Sum Games without Saddle Points; 3. Mixed Strategies; 4. Mixed Strategies in Matrix Games; 5. Dominated Strategies and Nash Equilibria; 6. The Minimax Theorem; 7. Finding Nash Equilibria in Simple Games; 8. A Note on Nash Equilibria in General. Chapter 5. An Introduction to Optimization and the Karush-Kuhn-Tucker Conditions: 1. A General Maximization Formulation; 2. Some Geometry for Optimization; 3. -

Game Theoretic Interaction and Decision: a Quantum Analysis

games Article Game Theoretic Interaction and Decision: A Quantum Analysis Ulrich Faigle 1 and Michel Grabisch 2,* 1 Mathematisches Institut, Universität zu Köln, Weyertal 80, 50931 Köln, Germany; [email protected] 2 Paris School of Economics, University of Paris I, 106-112, Bd. de l’Hôpital, 75013 Paris, France * Correspondence: [email protected]; Tel.: +33-144-07-8744 Received: 12 July 2017; Accepted: 21 October 2017; Published: 6 November 2017 Abstract: An interaction system has a finite set of agents that interact pairwise, depending on the current state of the system. Symmetric decomposition of the matrix of interaction coefficients yields the representation of states by self-adjoint matrices and hence a spectral representation. As a result, cooperation systems, decision systems and quantum systems all become visible as manifestations of special interaction systems. The treatment of the theory is purely mathematical and does not require any special knowledge of physics. It is shown how standard notions in cooperative game theory arise naturally in this context. In particular, states of general interaction systems are seen to arise as linear superpositions of pure quantum states and Fourier transformation to become meaningful. Moreover, quantum games fall into this framework. Finally, a theory of Markov evolution of interaction states is presented that generalizes classical homogeneous Markov chains to the present context. Keywords: cooperative game; decision system; evolution; Fourier transform; interaction system; measurement; quantum game 1. Introduction In an interaction system, economic (or general physical) agents interact pairwise, but do not necessarily cooperate towards a common goal. However, this model arises as a natural generalization of the model of cooperative TU games, for which already Owen [1] introduced the co-value as an assessment of the pairwise interaction of two cooperating players. -

FIDE Laws of Chess

The FIDE Laws of Chess cover over-the-board play. The Laws of Chess have two parts: 1. Basic Rules of Play and 2. Competition Rules. The English text is the authentic version of the Laws of Chess, which was adopted at the 84th FIDE Congress at Tallinn (Estonia), coming into force on 1 July 2014. In these Laws the words ‘he’, ‘him’, and ‘his’ shall be considered to include ‘she’ and ‘her’. PREFACE The Laws of Chess cannot cover all possible situations that may arise during a game, nor can they regulate all administrative questions. Where cases are not precisely regulated by an Article of the Laws, it should be possible to reach a correct decision by studying analogous situations which are discussed in the Laws. The Laws assume that arbiters have the necessary competence, sound judgement and absolute objectivity. Too detailed a rule might deprive the arbiter of his freedom of judgement and thus prevent him from finding a solution to a problem dictated by fairness, logic and special factors. FIDE appeals to all chess players and federations to accept this view. A necessary condition for a game to be rated by FIDE is that it shall be played according to the FIDE Laws of Chess. It is recommended that competitive games not rated by FIDE be played according to the FIDE Laws of Chess. Member federations may ask FIDE to give a ruling on matters relating to the Laws of Chess. BASIC RULES OF PLAY Article 1: The nature and objectives of the game of chess 1.1 The game of chess is played between two opponents who move their pieces on a square board called a ‘chessboard’. -

Stochastic Game Theory Applications for Power Management in Cognitive Networks

STOCHASTIC GAME THEORY APPLICATIONS FOR POWER MANAGEMENT IN COGNITIVE NETWORKS. A thesis submitted to Kent State University in partial fulfillment of the requirements for the degree of Master of Digital Science by Sham Fung, Feb 2014. Thesis written by Sham Fung, M.D.S., Kent State University, 2014. Approved by: Advisor; Director, School of Digital Science. TABLE OF CONTENTS: LIST OF FIGURES; LIST OF TABLES; Acknowledgments; Dedication. 1 BACKGROUND OF COGNITIVE NETWORK: 1.1 Motivation and Requirements; 1.2 Definition of Cognitive Networks; 1.3 Key Elements of Cognitive Networks; 1.3.1 Learning and Reasoning; 1.3.2 Cognitive Network as a Multi-agent System. 2 POWER ALLOCATION IN COGNITIVE NETWORKS: 2.1 Overview; 2.2 Two Communication Paradigms; 2.3 Power Allocation Scheme; 2.3.1 Interference Constraints for Primary Users; 2.3.2 QoS Constraints for Secondary Users; 2.4 Related Works on Power Management in Cognitive Networks. 3 GAME THEORY: PRELIMINARIES AND RELATED WORKS: 3.1 Non-cooperative Game; 3.1.1 Nash Equilibrium; 3.1.2 Other Equilibriums; 3.2 Cooperative Game; 3.2.1 Bargaining Game; 3.2.2 Coalition Game; 3.2.3 Solution Concept; 3.3 Stochastic Game; 3.4 Other Types of Games. 4 GAME THEORETICAL APPROACH ON POWER ALLOCATION: 4.1 Two Fundamental Types of Utility Functions; 4.1.1 QoS-Based Game; 4.1.2 Linear Pricing Game -

Learning to Play Chess Using Temporal Differences

Machine Learning, 40, 243–263, 2000. © 2000 Kluwer Academic Publishers. Manufactured in The Netherlands. Learning to Play Chess Using Temporal Differences. JONATHAN BAXTER [email protected] Department of Systems Engineering, Australian National University 0200, Australia. ANDREW TRIDGELL [email protected] LEX WEAVER [email protected] Department of Computer Science, Australian National University 0200, Australia. Editor: Sridhar Mahadevan. Abstract. In this paper we present TDLEAF(λ), a variation on the TD(λ) algorithm that enables it to be used in conjunction with game-tree search. We present some experiments in which our chess program “KnightCap” used TDLEAF(λ) to learn its evaluation function while playing on Internet chess servers. The main success we report is that KnightCap improved from a 1650 rating to a 2150 rating in just 308 games and 3 days of play. As a reference, a rating of 1650 corresponds to about level B human play (on a scale from E (1000) to A (1800)), while 2150 is human master level. We discuss some of the reasons for this success, principal among them being the use of on-line, rather than self-play. We also investigate whether TDLEAF(λ) can yield better results in the domain of backgammon, where TD(λ) has previously yielded striking success. Keywords: temporal difference learning, neural network, TDLEAF, chess, backgammon. 1. Introduction. Temporal Difference learning, first introduced by Samuel (Samuel, 1959) and later extended and formalized by Sutton (Sutton, 1988) in his TD(λ) algorithm, is an elegant technique for approximating the expected long term future cost (or cost-to-go) of a stochastic dynamical system as a function of the current state. -

Deterministic and Stochastic Prisoner's Dilemma Games: Experiments in Interdependent Security

NBER TECHNICAL WORKING PAPER SERIES DETERMINISTIC AND STOCHASTIC PRISONER'S DILEMMA GAMES: EXPERIMENTS IN INTERDEPENDENT SECURITY Howard Kunreuther Gabriel Silvasi Eric T. Bradlow Dylan Small Technical Working Paper 341 http://www.nber.org/papers/t0341 NATIONAL BUREAU OF ECONOMIC RESEARCH 1050 Massachusetts Avenue Cambridge, MA 02138 August 2007 We appreciate helpful discussions in designing the experiments and comments on earlier drafts of this paper by Colin Camerer, Vince Crawford, Rachel Croson, Robyn Dawes, Aureo DePaula, Geoff Heal, Charles Holt, David Krantz, Jack Ochs, Al Roth and Christian Schade. We also benefited from helpful comments from participants at the Workshop on Interdependent Security at the University of Pennsylvania (May 31-June 1 2006) and at the Workshop on Innovation and Coordination at Humboldt University (December 18-20, 2006). We thank George Abraham and Usmann Hassan for their help in organizing the data from the experiments. Support from NSF Grant CMS-0527598 and the Wharton Risk Management and Decision Processes Center is gratefully acknowledged. The views expressed herein are those of the author(s) and do not necessarily reflect the views of the National Bureau of Economic Research. © 2007 by Howard Kunreuther, Gabriel Silvasi, Eric T. Bradlow, and Dylan Small. All rights reserved. Short sections of text, not to exceed two paragraphs, may be quoted without explicit permission provided that full credit, including © notice, is given to the source. Deterministic and Stochastic Prisoner's Dilemma Games: Experiments in Interdependent Security Howard Kunreuther, Gabriel Silvasi, Eric T. Bradlow, and Dylan Small NBER Technical Working Paper No. 341 August 2007 JEL No. C11,C12,C22,C23,C73,C91 ABSTRACT This paper examines experiments on interdependent security prisoner's dilemma games with repeated play. -

Stochastic Games with Hidden States∗

Stochastic Games with Hidden States∗ Yuichi Yamamoto† First Draft: March 29, 2014. This Version: January 14, 2015. Abstract: This paper studies infinite-horizon stochastic games in which players observe noisy public information about a hidden state each period. We find that if the game is connected, the limit feasible payoff set exists and is invariant to the initial prior about the state. Building on this invariance result, we provide a recursive characterization of the equilibrium payoff set and establish the folk theorem. We also show that connectedness can be replaced with an even weaker condition, called asymptotic connectedness. Asymptotic connectedness is satisfied for generic signal distributions, if the state evolution is irreducible. Journal of Economic Literature Classification Numbers: C72, C73. Keywords: stochastic game, hidden state, connectedness, stochastic self-generation, folk theorem. ∗The author thanks Naoki Aizawa, Drew Fudenberg, Johannes Hörner, Atsushi Iwasaki, Michihiro Kandori, George Mailath, Takeaki Sunada, and Masatoshi Tsumagari for helpful conversations, and seminar participants at various places. †Department of Economics, University of Pennsylvania. Email: [email protected] 1 Introduction. When agents have a long-run relationship, underlying economic conditions may change over time. A leading example is a repeated Bertrand competition with stochastic demand shocks. Rotemberg and Saloner (1986) explore optimal collusive pricing when random demand shocks are i.i.d. each period. Haltiwanger and Harrington (1991), Kandori (1991), and Bagwell and Staiger (1997) further extend the analysis to the case in which demand fluctuations are cyclic or persistent. One of the crucial assumptions of these papers is that demand shocks are publicly observable before firms make their decisions in each period. -

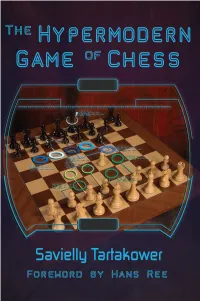

The Hypermodern Game of Chess

The Hypermodern Game of Chess by Savielly Tartakower. Foreword by Hans Ree. 2015, Russell Enterprises, Inc., Milford, CT USA. © Copyright 2015 Jared Becker. ISBN: 978-1-941270-30-1. All Rights Reserved. No part of this book may be used, reproduced, stored in a retrieval system or transmitted in any manner or form whatsoever or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without the express written permission from the publisher except in the case of brief quotations embodied in critical articles or reviews. Published by: Russell Enterprises, Inc., PO Box 3131, Milford, CT 06460 USA. http://www.russell-enterprises.com [email protected] Translated from the German by Jared Becker. Editorial Consultant: Hannes Langrock. Cover design by Janel Norris. Printed in the United States of America. Table of Contents: Foreword by Hans Ree; From the Translator; Introduction; The Three Phases of A Game; Alekhine’s Defense. Part I – Open Games: Spanish Torture; Spanish; José Raúl Capablanca; The Accumulation of Small Advantages; Emanuel Lasker; The Canticle of the Combination; Spanish with 5...Nxe4; Dr. Siegbert Tarrasch and Géza Maróczy as Hypermodernists; What constitutes a mistake?; Spanish Exchange Variation; Steinitz Defense; The Doctrine of Weaknesses; Spanish Three and Four Knights’ Game; A Victory of Methodology; Efim Bogoljubow -

570: Minimax Sample Complexity for Turn-Based Stochastic Game

Minimax Sample Complexity for Turn-based Stochastic Game. Qiwen Cui¹, Lin F. Yang². ¹School of Mathematical Sciences, Peking University. ²Electrical and Computer Engineering Department, University of California, Los Angeles. Abstract: The empirical success of multi-agent reinforcement learning is encouraging, while few theoretical guarantees have been revealed. In this work, we prove that the plug-in solver approach, probably the most natural reinforcement learning algorithm, achieves minimax sample complexity for turn-based stochastic game (TBSG). Specifically, we perform planning in an empirical TBSG by utilizing a ‘simulator’ that allows sampling from arbitrary state-action pair. We show that the empirical Nash equilibrium strategy is an approximate Nash equilibrium strategy in the true TBSG and give both problem-dependent and problem-independent bound. [Introduction, excerpt begins mid-sentence:] guarantees are rather rare due to complex interaction between agents that makes the problem considerably harder than single agent reinforcement learning. This is also known as non-stationarity in MARL, which means when multiple agents alter their strategies based on samples collected from previous strategy, the system becomes non-stationary for each agent and the improvement can not be guaranteed. One fundamental question in MBRL is that how to design efficient algorithms to overcome non-stationarity. Two-players turn-based stochastic game (TBSG) is a two-agents generalization of Markov decision process (MDP), where two agents choose actions in turn and one agent wants to maximize the total reward while the other wants to minimize it. As a zero-sum game, TBSG is known to have Nash equilibrium strategy [Shapley, 1953], which means -

Rules of Chess960

Rules of Chess960. A little known and long-discarded offshoot of Classical Chess is the realm of so-called "Randomized Chess" in its various forms. Chess960 stands for Bobby Fischer's new and improved version of "Randomized Chess". Chess960 uses algebraic notation exclusively. At the start of every Chess960 game, both players' Pawns are set up exactly as they are at the start of every game of Classical Chess. In Chess960, just before the start of every game, both players' pieces on their respective back rows receive an identical random shuffle using the Chess960 Computerized Shuffler, which is programmed to set up the pieces in any combination, with the provisos that one Rook has to be to the left and one Rook has to be to the right of the King, and one Bishop has to be on a light-colored square and one Bishop has to be on a dark-colored square. White and Black have identical positions. From behind their respective Pawns the opponents' pieces are facing each other directly, symmetrically. Thus, for example, if the shuffler places White's back row pieces in the following position: Ra1, Bb1, Kc1, Nd1, Be1, Nf1, Rg1, Qh1, it will place Black's back row pieces in the following position: Ra8, Bb8, Kc8, Nd8, Be8, Nf8, Rg8, Qh8, etc. (See diagram.) In Chess960 there are 960 possible starting positions: the Classical Chess starting position and 959 other starting positions. Of necessity, in Chess960 the castling rule is somewhat modified and broadened to allow for the possibility of each player castling either on or into his or her left side or on or into his or her right side of the board from all of these 960 starting positions. -

Cooperative and Non-Cooperative Game Theory Olivier Chatain

HEC Paris. From the SelectedWorks of Olivier Chatain, 2014. Cooperative and Non-Cooperative Game Theory. Olivier Chatain. Available at: https://works.bepress.com/olivier_chatain/9/ The Palgrave Encyclopedia of Strategic Management, edited by Mie Augier and David J. Teece (http://www.palgrave.com/strategicmanagement/information/). Entry: Cooperative and non-cooperative game theory. Author: Olivier Chatain. Classifications: economics and strategy; competitive advantage; formal models; methods/methodology. Definition: Cooperative game theory focuses on how much players can appropriate given the value each coalition of players can create, while non-cooperative game theory focuses on which moves players should rationally make. Abstract: This article outlines the differences between cooperative and non-cooperative game theory. It introduces some of the main concepts of cooperative game theory as they apply to strategic management research. Keywords: cooperative game theory; coalitional game theory; value-based strategy; core (solution concept); Shapley value; biform games. Cooperative and non-cooperative game theory. Game theory comprises two branches: cooperative game theory (CGT) and non-cooperative game theory (NCGT). CGT models how agents compete and cooperate as coalitions in unstructured interactions to create and capture value. NCGT models the actions of agents, maximizing their utility in a defined procedure, relying on a detailed description of the moves and information available to each agent. CGT abstracts from these details and focuses on how the value creation abilities of each coalition of agents can bear on the agents’ ability to capture value. CGT can thus be called coalitional, while NCGT is procedural. Note that ‘cooperative’ and ‘non-cooperative’ are technical terms and are not an assessment of the degree of cooperation among agents in the model: a cooperative game can as much model extreme competition as a non-cooperative game can model cooperation. -

Grivas Opening Laboratory

Efstratios Grivas. GRIVAS OPENING LABORATORY, VOLUME 1. Chess Evolution. Cover designer: Piotr Pielach. Typesetting: i-Press ‹www.i-press.pl›. First edition 2019 by Chess Evolution. Grivas opening laboratory. Volume 1. Copyright © 2019 Chess Evolution. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system or transmitted in any form or by any means, electronic, electrostatic, magnetic tape, photocopying, recording or otherwise, without prior permission of the publisher. ISBN 978-615-5793-19-6. All sales or enquiries should be directed to Chess Evolution, 2040 Budaors, Nyar utca 16, Magyarorszag. e-mail: [email protected] website: www.chess-evolution.com. Printed in Hungary. TABLE OF CONTENTS: Key to symbols; Foreword; Preface. PART 1. THE GRUENFELD DEFENCE (D91): Chapter 1. Black’s 5th-move Deviat - Various Lines; Chapter 2. Black’s 5th-move Deviat - 5...dxc4; Chapter 3. Black’s 7th-move Deviat - 7...dxc4; Chapter 4. Black’s 11th-move Deviat